A few points I had questions about

- The sample size required to reach a target utility level increases as the privacy constraint becomes stricter.

- Optimization methods for large data sets must also be scalable.

- SGD algorithms satisfy asymptotic guarantees.

Introduction

- Summary of the main contribution:

In this paper we derive differentially private versions of single-point SGD and mini-batch SGD, and evaluate them on real and synthetic data sets.

- Why SGD is so widely used:

Stochastic gradient descent (SGD) algorithms are simple and satisfy the same asymptotic guarantees as more computationally intensive learning methods.

- Consequence of the guarantees being only asymptotic:

To obtain reasonable performance on finite data sets, practitioners must take care in setting parameters such as the learning rate (step size) for the updates.

- How to deal with this:

Grouping updates into “minibatches” alleviates some of this sensitivity and improves the performance of SGD. This can make the updates more robust at a moderate computational cost, but it also introduces the batch size as a free parameter.

Preliminaries

- Optimization objective:

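Solve a regularized convex optimization problem:

$$w^* = \arg\min_{w \in \mathbb{R}^d} \; \frac{\lambda}{2}\|w\|^2 + \frac{1}{n}\sum_{i=1}^{n} \ell(w; x_i, y_i)$$

where $w \in \mathbb{R}^d$ is the normal vector to the hyperplane separator, and $\ell$ is a convex loss function.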

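If $\ell$ is the logistic loss, $\ell(w; x, y) = \log\big(1 + e^{-y w^\top x}\big)$, the problem is logistic regression.

If $\ell$ is the hinge loss, $\ell(w; x, y) = \max\big(0,\, 1 - y w^\top x\big)$, the problem is an SVM.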
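To make the two loss choices concrete, here is a minimal sketch of the regularized objective with either loss plugged in. It assumes plain Python lists as vectors (no NumPy) and labels $y \in \{-1, +1\}$. The helper names (`dot`, `logistic_loss`, `hinge_loss`, `objective`) are hypothetical illustrations, not code from the paper.

```python
import math

def dot(u, v):
    # Inner product of two equal-length lists.
    return sum(a * b for a, b in zip(u, v))

def logistic_loss(w, x, y):
    # log(1 + exp(-y w^T x)), computed as max(m, 0) + log1p(exp(-|m|)) to avoid overflow.
    m = -y * dot(w, x)
    return max(m, 0.0) + math.log1p(math.exp(-abs(m)))

def hinge_loss(w, x, y):
    # max(0, 1 - y w^T x): the SVM loss.
    return max(0.0, 1.0 - y * dot(w, x))

def objective(w, data, lam, loss):
    # (lam / 2) * ||w||^2 + average loss over the (x, y) pairs in data.
    reg = 0.5 * lam * dot(w, w)
    return reg + sum(loss(w, x, y) for x, y in data) / len(data)
```

Swapping `logistic_loss` for `hinge_loss` in `objective(w, data, lam, loss)` switches from logistic regression to an SVM without touching the rest of the code.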

- Optimization algorithm:

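SGD with mini-batch updates:

$$w_{t+1} = w_t - \eta_t \Big( \lambda w_t + \frac{1}{b}\sum_{(x_i, y_i) \in B_t} \nabla \ell(w_t; x_i, y_i) \Big)$$

where $\eta_t$ is the learning rate, and each update is based on a small subset $B_t$ of examples of size $b$.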
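The update above can be sketched as a single function. This assumes the logistic loss as the example $\ell$ and plain Python lists as vectors. `logistic_grad` and `minibatch_step` are hypothetical names. The gradient uses $\nabla_w \log(1 + e^{m}) = \sigma(m)\,(-y x)$ with $m = -y w^\top x$, where $\sigma$ is the logistic sigmoid.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def logistic_grad(w, x, y):
    # Gradient of log(1 + exp(-y w^T x)) w.r.t. w: -y * sigmoid(m) * x with m = -y w^T x.
    m = -y * dot(w, x)
    s = 1.0 / (1.0 + math.exp(-m)) if m >= 0 else math.exp(m) / (1.0 + math.exp(m))
    return [-y * s * xi for xi in x]

def minibatch_step(w, batch, lam, eta):
    # One step: w <- w - eta * (lam * w + (1/b) * sum of per-example gradients in the batch).
    b = len(batch)
    g = [lam * wi for wi in w]
    for x, y in batch:
        for j, gj in enumerate(logistic_grad(w, x, y)):
            g[j] += gj / b
    return [wi - eta * gi for wi, gi in zip(w, g)]
```

With `b = 1` this reduces to single-point SGD. Larger `b` averages more gradients per step, which is the smoothing effect the Introduction attributes to minibatches.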

SGD with Differential Privacy

- Mini-batch SGD with differential privacy:

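A differentially private version of the mini-batch update:

$$w_{t+1} = w_t - \eta_t \Big( \lambda w_t + \frac{1}{b}\sum_{(x_i, y_i) \in B_t} \nabla \ell(w_t; x_i, y_i) + \frac{1}{b} Z_t \Big)$$

where $Z_t$ is a random noise vector in $\mathbb{R}^d$ drawn independently from the density:

$$\rho(z) \propto e^{-(\alpha/2)\|z\|}$$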
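Sampling from $\rho(z) \propto e^{-(\alpha/2)\|z\|}$ is not a per-coordinate Laplace draw. One standard way is to split it into a direction and a radius. The direction is uniform on the unit sphere, obtained here by normalizing a Gaussian vector. In polar coordinates the radius has density $\propto r^{d-1} e^{-(\alpha/2) r}$, which is a Gamma distribution with shape $d$ and scale $2/\alpha$. The sketch below uses that decomposition with Python's `random.gauss` and `random.gammavariate`. `sample_noise`, `private_minibatch_step` and the pluggable `grad(w, x, y)` argument are hypothetical names.

```python
import math
import random

def sample_noise(d, alpha, rng=random):
    # Direction: a Gaussian vector normalized onto the unit sphere (uniform direction).
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm_g = math.sqrt(sum(v * v for v in g))
    # Radius: ||Z|| ~ Gamma(shape=d, scale=2/alpha), matching rho(z) ∝ exp(-(alpha/2)||z||).
    r = rng.gammavariate(d, 2.0 / alpha)
    return [r * v / norm_g for v in g]

def private_minibatch_step(w, batch, lam, eta, alpha, grad, rng=random):
    # w <- w - eta * (lam * w + (1/b) * sum of gradients + (1/b) * Z_t)
    b = len(batch)
    z = sample_noise(len(w), alpha, rng)
    g = [lam * wi + zi / b for wi, zi in zip(w, z)]
    for x, y in batch:
        for j, gj in enumerate(grad(w, x, y)):
            g[j] += gj / b
    return [wi - eta * gi for wi, gi in zip(w, g)]
```

A side effect of this decomposition is that $\mathbb{E}\|Z_t\| = 2d/\alpha$. The noise grows with the dimension and with stricter privacy (smaller $\alpha$), and the $1/b$ factor in the update shrinks it.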

- With the mini-batch update above, the updates are $\alpha$-differentially private under the following conditions:

If the initialization point $w_0$ is chosen independently of the sensitive data, the batches $B_t$ are disjoint, and $\|\nabla \ell(w; x_i, y_i)\| \le 1$ for all $w$ and all $(x_i, y_i)$, then SGD with mini-batch updates is $\alpha$-differentially private.
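The following is a minimal one-pass sketch that tries to respect the three conditions of the theorem. It rests on several assumptions of my own, none taken from the paper:

- The loss is the logistic loss.
- Features are rescaled into the unit ball. For the logistic loss, $\|\nabla \ell(w; x, y)\| = \sigma(-y w^\top x)\,\|x\| \le \|x\|$, so $\|x\| \le 1$ gives the required gradient bound.
- $w_0 = 0$ serves as a data-independent starting point.
- A random permutation is cut into disjoint batches of size `b`, and any trailing partial batch is dropped.
- The step size follows the schedule $\eta_t = \eta_0/\sqrt{t}$.

`dp_minibatch_sgd`, `scale_to_unit_ball` and `eta0` are hypothetical names.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def scale_to_unit_ball(x):
    # Assumed preprocessing: ||x|| <= 1 bounds the logistic-loss gradient norm by 1.
    n = math.sqrt(dot(x, x))
    return x if n <= 1.0 else [xi / n for xi in x]

def logistic_grad(w, x, y):
    m = -y * dot(w, x)
    s = 1.0 / (1.0 + math.exp(-m)) if m >= 0 else math.exp(m) / (1.0 + math.exp(m))
    return [-y * s * xi for xi in x]

def sample_noise(d, alpha, rng):
    # Uniform direction times a Gamma(d, 2/alpha) radius: density ∝ exp(-(alpha/2)||z||).
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    ng = math.sqrt(dot(g, g))
    r = rng.gammavariate(d, 2.0 / alpha)
    return [r * v / ng for v in g]

def dp_minibatch_sgd(data, lam, alpha, b, eta0=1.0, seed=0):
    rng = random.Random(seed)
    data = [(scale_to_unit_ball(x), y) for x, y in data]
    d = len(data[0][0])
    w = [0.0] * d  # w_0 does not depend on the data
    order = list(range(len(data)))
    rng.shuffle(order)  # data-independent permutation
    # Disjoint batches: each example is used in at most one update.
    batches = [order[i:i + b] for i in range(0, len(order) - b + 1, b)]
    for t, idx in enumerate(batches, start=1):
        eta = eta0 / math.sqrt(t)  # assumed step-size schedule
        z = sample_noise(d, alpha, rng)
        g = [lam * wi + zi / b for wi, zi in zip(w, z)]
        for i in idx:
            x, y = data[i]
            for j, gj in enumerate(logistic_grad(w, x, y)):
                g[j] += gj / b
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w
```

Because batches are disjoint, one pass over $n$ examples gives at most $\lfloor n/b \rfloor$ updates. Running several passes would reuse examples and fall outside the theorem as stated.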

Experiments

- Observations:

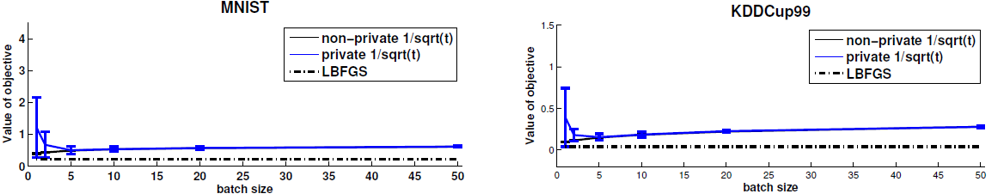

With a batch size of 1, DP-SGD has a larger variance than ordinary SGD. With a larger batch size, the variance drops considerably.

- Lesson drawn from this:

In terms of objective value, guaranteeing differential privacy can come for “free” using SGD with a moderate batch size.

- The effect of batch size is non-monotonic: as the batch size grows, the error first decreases and then increases.

Increasing the batch size improved the performance of private SGD, but only up to a limit: much larger batch sizes actually degrade performance.
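The following is a rough back-of-the-envelope reading of this trade-off. It is my own, not an explanation given in these notes or claimed for the paper. Under $\rho(z) \propto e^{-(\alpha/2)\|z\|}$, the radius $\|Z_t\|$ is Gamma-distributed with shape $d$ and scale $2/\alpha$. Because the privacy theorem requires disjoint batches, a single pass over $n$ examples allows only $T$ updates:

$$\mathbb{E}\Big\|\frac{1}{b} Z_t\Big\| = \frac{2d}{\alpha\, b}, \qquad T = \Big\lfloor \frac{n}{b} \Big\rfloor$$

A larger $b$ shrinks the per-step noise but also leaves fewer steps. This is consistent with the improve-then-degrade pattern, though it does not prove it.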

A few more lessons

- The data dimension and the privacy parameter affect how much data is needed:

Differentially private learning algorithms often have a sample complexity that scales linearly with the data dimension $d$ and inversely with the privacy risk $\alpha$. Thus a moderate reduction in $\alpha$ or increase in $d$ may require more data.
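As a worked illustration of that scaling, write the required sample size as $n \approx C\,d/\alpha$. Here $C$ is a hypothetical constant that absorbs the target accuracy and other problem-dependent factors and is not given in the notes. Halving $\alpha$ or doubling $d$ then doubles the data needed:

$$\frac{n(d, \alpha/2)}{n(d, \alpha)} = \frac{C\,d/(\alpha/2)}{C\,d/\alpha} = 2, \qquad \frac{n(2d, \alpha)}{n(d, \alpha)} = \frac{C\,(2d)/\alpha}{C\,d/\alpha} = 2$$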

Source: CSDN

Author: 止损°

Link: https://blog.csdn.net/weixin_44549245/article/details/104109448