Building a powerful user-space storage tool: the SPDK-based storage engines Blobstore & BlobFS

https://community.mellanox.com/s/article/howto-configure-nvme-over-fabrics

SPDK thin-provisioned logical volumes, using construct_lvol_bdev: https://www.sdnlab.com/21098.html

SPDK block device layer (bdev): https://www.cnblogs.com/whl320124/p/10064878.html https://spdk.io/doc/bdev.html#bdev_ug_introduction

Reposted from: the nvmf source code in SPDK https://mp.weixin.qq.com/s/ohPaxAwmhGtuQQWz--J6WA

https://spdk.io/doc/nvmf_tgt_pg.html

The NVMe-oF specification: http://nvmexpress.org/resources/specifications/

Overall summary of the topic, plus fairly detailed configuration for the different bdev types: https://www.jianshu.com/p/b11948e55d80

https://www.gitmemory.com/issue/spdk/spdk/627/478899588

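Introduction to multipath support in the SPDK NVMe-oF target: http://syswift.com/508.html

nvmf source code walkthrough, part 1

SPDK's nvmf library lives in lib/nvmf; app/nvmf_tgt.c uses the API that this library exposes.

Basic data types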

The library exposes a number of primitives - basic objects that the user creates and interacts with. They are:

struct spdk_nvmf_tgt:

A collection of subsystems (and their namespaces), transports, and the associated network connections.

An NVMe-oF target. This concept, surprisingly, does not appear in the NVMe-oF specification. SPDK defines this to mean the collection of subsystems with the associated namespaces, plus the set of transports and their associated network connections. This will be referred to throughout this guide as a target.

struct spdk_nvmf_subsystem:

An NVMe-oF subsystem, as defined by the NVMe-oF specification. Subsystems contain namespaces and controllers and perform access control. This will be referred to throughout this guide as a subsystem.

struct spdk_nvmf_ns:

An NVMe-oF namespace, as defined by the NVMe-oF specification. Namespaces are bdevs. See Block Device User Guide for an explanation of the SPDK bdev layer. This will be referred to throughout this guide as a namespace.

struct spdk_nvmf_qpair:

An NVMe-oF queue pair, as defined by the NVMe-oF specification. These map 1:1 to network connections. This will be referred to throughout this guide as a qpair.

struct spdk_nvmf_transport:

An abstraction for a network fabric, as defined by the NVMe-oF specification. The specification is designed to allow for many different network fabrics, so the code mirrors that and implements a plugin system. Currently, only the RDMA transport is available. This will be referred to throughout this guide as a transport.

struct spdk_nvmf_poll_group:

An abstraction for a collection of network connections that can be polled as a unit. This is an SPDK-defined concept that does not appear in the NVMe-oF specification. Often, network transports have facilities to check for incoming data on groups of connections more efficiently than checking each one individually (e.g. epoll), so poll groups provide a generic abstraction for that. This will be referred to throughout this guide as a poll group.

struct spdk_nvmf_listener:

A network address at which the target will accept new connections.

struct spdk_nvmf_host:

An NVMe-oF NQN representing a host (initiator) system. This is used for access control.

Basic API

The Basics

A user of the NVMe-oF target library begins by creating a target using spdk_nvmf_tgt_create(), setting up a set of addresses on which to accept connections by calling spdk_nvmf_tgt_listen(), then creating a subsystem using spdk_nvmf_subsystem_create().

Subsystems begin in an inactive state and must be activated by calling spdk_nvmf_subsystem_start(). Subsystems may be modified at run time, but only when in the paused or inactive state. A running subsystem may be paused by calling spdk_nvmf_subsystem_pause() and resumed by calling spdk_nvmf_subsystem_resume().

Namespaces may be added to the subsystem by calling spdk_nvmf_subsystem_add_ns() when the subsystem is inactive or paused. Namespaces are bdevs. See Block Device User Guide for more information about the SPDK bdev layer. A bdev may be obtained by calling spdk_bdev_get_by_name().
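The lifecycle rules above (start from inactive, modify only while inactive or paused) can be sketched as a small state machine. This is a toy Python model written for illustration, not SPDK code: `ToySubsystem`, `State`, and the returned namespace number are all hypothetical names, and the real library performs these transitions asynchronously.

```python
from enum import Enum


class State(Enum):
    INACTIVE = "inactive"
    ACTIVE = "active"
    PAUSED = "paused"


class ToySubsystem:
    """Toy model of the lifecycle described above; not the SPDK API."""

    def __init__(self, nqn):
        self.nqn = nqn
        self.state = State.INACTIVE
        self.namespaces = []  # bdev names; index + 1 acts as a made-up NSID

    def _move(self, expected, new):
        # Reject any transition that does not start from the expected state.
        if self.state is not expected:
            raise RuntimeError(f"cannot go {self.state.value} -> {new.value}")
        self.state = new

    def start(self):
        self._move(State.INACTIVE, State.ACTIVE)

    def pause(self):
        self._move(State.ACTIVE, State.PAUSED)

    def resume(self):
        self._move(State.PAUSED, State.ACTIVE)

    def add_ns(self, bdev_name):
        # Modification is only allowed while inactive or paused.
        if self.state is State.ACTIVE:
            raise RuntimeError("pause the subsystem before modifying it")
        self.namespaces.append(bdev_name)
        return len(self.namespaces)
```

In this model, adding a namespace to a running subsystem raises an error until `pause()` has been called, which mirrors the ordering the guide requires.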

Once a subsystem exists and the target is listening on an address, new connections may be accepted by polling spdk_nvmf_tgt_accept().

All I/O to a subsystem is driven by a poll group, which polls for incoming network I/O. Poll groups may be created by calling spdk_nvmf_poll_group_create(). They automatically request to begin polling upon creation on the thread from which they were created. Most importantly, a poll group may only be accessed from the thread on which it was created.

When spdk_nvmf_tgt_accept() detects a new connection, it will construct a new struct spdk_nvmf_qpair object and call the user provided new_qpair_fn callback for each new qpair. In response to this callback, the user must assign the qpair to a poll group by calling spdk_nvmf_poll_group_add(). Remember, a poll group may only be accessed from the thread on which it was created, so making a call to spdk_nvmf_poll_group_add() may require passing a message to the appropriate thread.
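The threading rule in the last paragraph is easy to get wrong, so here is a minimal sketch of it. It is a single-process Python simulation under stated assumptions: `SimThread`, `ToyPollGroup`, and the `new_qpair_fn` body are hypothetical stand-ins, and an in-memory inbox replaces SPDK's real message passing.

```python
from collections import deque


class SimThread:
    """Stand-in for an SPDK thread: runs messages from its own inbox."""

    current = None  # which simulated thread is executing right now

    def __init__(self, name):
        self.name = name
        self.inbox = deque()

    def send_msg(self, fn, *args):
        # Safe to call from anywhere: the work runs later on this thread.
        self.inbox.append((fn, args))

    def poll(self):
        SimThread.current = self
        while self.inbox:
            fn, args = self.inbox.popleft()
            fn(*args)
        SimThread.current = None


class ToyPollGroup:
    def __init__(self, thread):
        self.thread = thread  # the thread that created the group
        self.qpairs = []

    def add(self, qpair):
        # A poll group may only be touched from its owning thread.
        if SimThread.current is not self.thread:
            raise RuntimeError("poll group touched from the wrong thread")
        self.qpairs.append(qpair)


def new_qpair_fn(qpair, group):
    # Called by the acceptor; forwards the qpair to the group's thread.
    group.thread.send_msg(group.add, qpair)
```

The qpair only appears in the group after the owning thread has polled its inbox; calling `add()` directly from the acceptor context fails in this model.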

Access Control

Access control is performed at the subsystem level by adding allowed listen addresses and hosts to a subsystem (see spdk_nvmf_subsystem_add_listener() and spdk_nvmf_subsystem_add_host()). By default, a subsystem will not accept connections from any host or over any established listen address. Listeners and hosts may only be added to inactive or paused subsystems.
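The default-deny behaviour can be illustrated with a short sketch. `ToyAccessList` and its method names are hypothetical, the address and NQN values in the usage are made up, and the sketch assumes a connection is accepted only when both its host NQN and its listen address have been added.

```python
class ToyAccessList:
    """Toy model of per-subsystem access control; not the SPDK API."""

    def __init__(self):
        self.listeners = set()  # (trtype, traddr, trsvcid) tuples
        self.hosts = set()      # allowed host NQNs

    def add_listener(self, trtype, traddr, trsvcid):
        self.listeners.add((trtype, traddr, trsvcid))

    def add_host(self, hostnqn):
        self.hosts.add(hostnqn)

    def allows(self, hostnqn, listener):
        # Empty sets mean nothing is allowed, matching the default above.
        return hostnqn in self.hosts and listener in self.listeners
```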

Discovery Subsystems

A discovery subsystem, as defined by the NVMe-oF specification, is automatically created for each NVMe-oF target constructed. Connections to the discovery subsystem are handled in the same way as any other subsystem - new qpairs are created in response to spdk_nvmf_tgt_accept() and they must be assigned to a poll group.

Transports

The NVMe-oF specification defines multiple network transports (the "Fabrics" in NVMe over Fabrics) and has an extensible system for adding new fabrics in the future. The SPDK NVMe-oF target library implements a plugin system for network transports to mirror the specification. The API a new transport must implement is located in lib/nvmf/transport.h. As of this writing, only an RDMA transport has been implemented.

The SPDK NVMe-oF target is designed to be able to process I/O from multiple fabrics simultaneously.

Choosing a Threading Model

The SPDK NVMe-oF target library does not strictly dictate threading model, but poll groups do all of their polling and I/O processing on the thread they are created on. Given that, it almost always makes sense to create one poll group per thread used in the application. New qpairs created in response to spdk_nvmf_tgt_accept() can be handed out round-robin to the poll groups. This is how the SPDK NVMe-oF target application currently functions.

More advanced algorithms for distributing qpairs to poll groups are possible. For instance, a NUMA-aware algorithm would be an improvement over basic round-robin, where NUMA-aware means assigning qpairs to poll groups running on CPU cores that are on the same NUMA node as the network adapter and storage device. Load-aware algorithms also may have benefits.
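Both distribution strategies mentioned here fit in a few lines. The sketch below is illustrative only: `ToyGroup`, `make_round_robin`, and `assign_numa_aware` are hypothetical names, and the NUMA-aware variant assumes the NIC's NUMA node is already known and simply picks the least-loaded group on that node.

```python
from itertools import cycle


class ToyGroup:
    def __init__(self, name, numa_node):
        self.name = name
        self.numa_node = numa_node
        self.qpairs = []


def make_round_robin(groups):
    """Return an assign(qpair) function that walks the groups in order."""
    ring = cycle(groups)

    def assign(qpair):
        group = next(ring)
        group.qpairs.append(qpair)
        return group

    return assign


def assign_numa_aware(groups, qpair, nic_numa_node):
    """Prefer groups on the NIC's NUMA node; fall back to all groups."""
    local = [g for g in groups if g.numa_node == nic_numa_node] or groups
    group = min(local, key=lambda g: len(g.qpairs))  # least loaded first
    group.qpairs.append(qpair)
    return group
```

Round-robin needs no knowledge of the hardware, which is presumably why it serves as the baseline; the NUMA-aware version trades that simplicity for locality.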

Scaling Across CPU Cores

Incoming I/O requests are picked up by the poll group polling their assigned qpair. For regular NVMe commands such as READ and WRITE, the I/O request is processed on the initial thread from start to the point where it is submitted to the backing storage device, without interruption. Completions are discovered by polling the backing storage device and also processed to completion on the polling thread. Regular NVMe commands (READ, WRITE, etc.) do not require any cross-thread coordination, and therefore take no locks.

NVMe ADMIN commands, which are used for managing the NVMe device itself, may modify global state in the subsystem. For instance, an NVMe ADMIN command may perform namespace management, such as shrinking a namespace. For these commands, the subsystem will temporarily enter a paused state by sending a message to each thread in the system. All new incoming I/O on any thread targeting the subsystem will be queued during this time. Once the subsystem is fully paused, the state change will occur, and messages will be sent to each thread to release queued I/O and resume. Management commands are rare, so this style of coordination is preferable to forcing all commands to take locks in the I/O path.
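The pause, change, resume sequence can be sketched as follows. This is a sequential Python model with hypothetical names (`ToyThreadState`, `change_subsystem_state`); direct method calls stand in for the per-thread messages the text describes.

```python
class ToyThreadState:
    """Per-thread view of one subsystem."""

    def __init__(self):
        self.paused = False
        self.queued = []     # I/O held back while paused
        self.processed = []  # I/O that went down the normal path

    def submit(self, io):
        (self.queued if self.paused else self.processed).append(io)

    def pause(self):
        self.paused = True

    def resume(self):
        self.paused = False
        pending, self.queued = self.queued, []
        self.processed.extend(pending)  # release queued I/O in order


def change_subsystem_state(thread_states, apply_change):
    for st in thread_states:  # stands in for "send a message to each thread"
        st.pause()
    apply_change()            # runs only once every thread is paused
    for st in thread_states:
        st.resume()
```

The I/O path itself (`submit`) only checks a flag and takes no lock, which is the trade-off the paragraph argues for.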

Zero Copy Support

For the RDMA transport, data is transferred from the RDMA NIC to host memory and then host memory to the SSD (or vice versa), without any intermediate copies. Data is never moved from one location in host memory to another. Other transports in the future may require data copies.

RDMA

The SPDK NVMe-oF RDMA transport is implemented on top of the libibverbs and rdmacm libraries, which are packaged and available on most Linux distributions. It does not use a user-space RDMA driver stack through DPDK.

In order to scale to large numbers of connections, the SPDK NVMe-oF RDMA transport allocates a single RDMA completion queue per poll group. All new qpairs assigned to the poll group are given their own RDMA send and receive queues, but share this common completion queue. This allows the poll group to poll a single queue for incoming messages instead of iterating through each one.

Each RDMA request is handled by a state machine that walks the request through a number of states. This keeps the code organized and makes all of the corner cases much more obvious.

RDMA SEND, READ, and WRITE operations are ordered with respect to one another, but RDMA RECVs are not necessarily ordered with SEND acknowledgements. For instance, it is possible to detect an incoming RDMA RECV message containing a new NVMe-oF capsule prior to detecting the acknowledgement of a previous SEND containing an NVMe completion. This is problematic at full queue depth because there may not yet be a free request structure. To handle this, the RDMA request structure is broken into two parts - an rdma_recv and an rdma_request. New RDMA RECVs will always grab a free rdma_recv, but may need to wait in a queue for a SEND acknowledgement before they can acquire a full rdma_request object.
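A minimal sketch of why the split helps, assuming a fixed pool of full request objects and an unbounded wait queue for received capsules. `ToyRdmaQpair` and its fields are hypothetical and do not mirror the real structures in the RDMA transport.

```python
from collections import deque


class ToyRdmaQpair:
    def __init__(self, depth):
        self.free_requests = depth  # full rdma_request objects available
        self.waiting = deque()      # received capsules not yet promoted
        self.active = []            # capsules that own an rdma_request

    def on_recv(self, capsule):
        # The receive side always has somewhere to put the capsule.
        self.waiting.append(capsule)
        self._promote()

    def on_send_ack(self, capsule):
        # The SEND carrying this capsule's completion was acknowledged.
        self.active.remove(capsule)
        self.free_requests += 1
        self._promote()

    def _promote(self):
        while self.waiting and self.free_requests > 0:
            self.free_requests -= 1
            self.active.append(self.waiting.popleft())
```

With a depth of 2, a third capsule that arrives before any acknowledgement simply waits, then becomes active as soon as one SEND is acknowledged.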

Further, RDMA NICs expose different queue depths for READ/WRITE operations than they do for SEND/RECV operations. The RDMA transport reports available queue depth based on SEND/RECV operation limits and will queue in software as necessary to accommodate (usually lower) limits on READ/WRITE operations.

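The block device (bdev) layer

Introduction

A block device is a storage device that supports reading and writing data in fixed-size blocks. These blocks are usually 512 or 4096 bytes. The device may be a logical construct in software or may correspond to a physical device such as an NVMe SSD.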

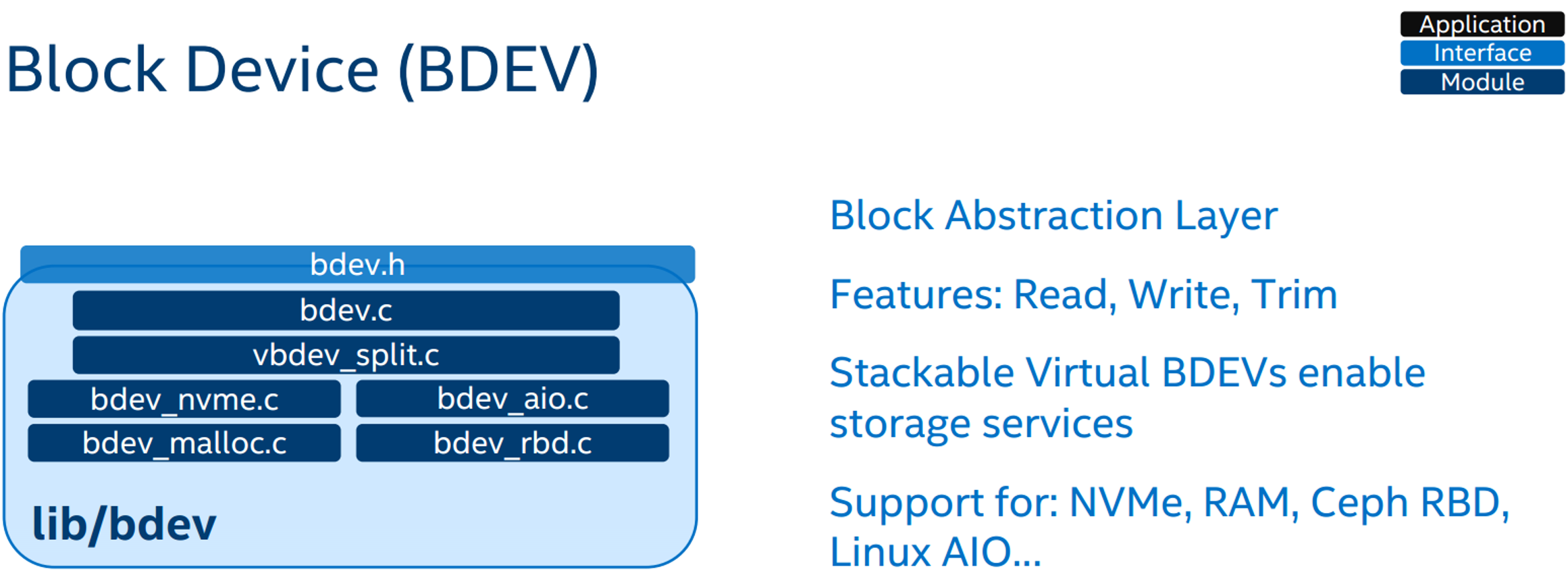

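The block device layer consists of a single generic library in lib/bdev, plus a number of optional modules (built as separate libraries) that implement the various types of block devices. The public header file for the generic library is bdev.h, which is the entire API needed to interact with any type of block device.

The following describes how to interact with bdevs through that API. For a guide to implementing a bdev module, see Writing a Custom Block Device Module.

Beyond providing a common abstraction for all block devices, the bdev layer also provides a number of useful features:

- Automatic queueing of I/O requests in response to queue-full or out-of-memory conditions

- Hot remove support, even while I/O traffic is occurring

- I/O statistics such as bandwidth and latency

- Device reset support and I/O timeout tracking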

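Basic primitives

Users of the bdev API interact with a number of basic objects.

struct spdk_bdev, which this guide refers to as a bdev, represents a generic block device. struct spdk_bdev_desc, referred to below as a descriptor, represents a handle to a given block device. Descriptors are used to establish and track permissions to use the underlying block device, much like a file descriptor on UNIX systems. Requests to the block device are asynchronous and are represented by spdk_bdev_io objects. Requests must be submitted on an associated I/O channel. The motivation and design of I/O channels are described in Message Passing and Concurrency.

Bdevs can be layered, such that some bdevs service I/O by routing requests to other bdevs. This can be used to implement caching, RAID, logical volume management, and more. Bdevs that route I/O to other bdevs are often called virtual bdevs, or vbdevs for short.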

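Initializing the library

The bdev layer depends on the generic message-passing infrastructure abstracted by the header file include/spdk/thread.h. See Message Passing and Concurrency for a full description. Most importantly, the bdev library may only be called from threads that have been allocated with SPDK by calling spdk_allocate_thread().

From an allocated thread, the bdev library can be initialized by calling spdk_bdev_initialize(), which is an asynchronous operation. No other bdev library functions may be called until the completion callback has been invoked. Similarly, to tear down the bdev library, call spdk_bdev_finish().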

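Discovering block devices

Every block device has a simple string name. At any time, a pointer to the device object can be obtained by calling spdk_bdev_get_by_name(), or the entire set of bdevs can be iterated using spdk_bdev_first() and spdk_bdev_next() and their variants.

Some block devices may also be given aliases, which are also string names. Aliases behave like symbolic links: they can be used interchangeably with the real name to look up the block device.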
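The name and alias lookup can be pictured as two dictionaries, with aliases resolving to real names before the lookup. This is a toy Python model for illustration; `ToyBdevRegistry` and its methods are hypothetical and only loosely echo spdk_bdev_get_by_name() and the first/next iterators.

```python
class ToyBdevRegistry:
    def __init__(self):
        self._bdevs = {}    # real name -> bdev object (a dict here)
        self._aliases = {}  # alias -> real name

    def register(self, name):
        self._bdevs[name] = {"name": name}
        return self._bdevs[name]

    def add_alias(self, name, alias):
        if name not in self._bdevs:
            raise KeyError(name)
        self._aliases[alias] = name

    def get_by_name(self, name):
        # Resolve an alias first, like following a symbolic link.
        return self._bdevs.get(self._aliases.get(name, name))

    def __iter__(self):
        # Iterates real devices only; aliases do not add entries.
        return iter(self._bdevs.values())
```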

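Preparing to use a block device

To send I/O requests to a block device, it must first be opened by calling spdk_bdev_open(). This returns a descriptor. Multiple users may have a bdev open at the same time, and coordination of reads and writes between users must be handled by some higher-level mechanism outside of the bdev layer. Opening a bdev with write permission may fail if a virtual bdev module has claimed the bdev. Virtual bdev modules implement logic such as RAID or logical volume management and forward their I/O to lower-level bdevs, so they mark these lower-level bdevs as claimed to prevent outside users from issuing writes.

When a block device is opened, an optional callback and context can be provided; the callback is invoked if the underlying storage servicing the block device is removed. For example, when an NVMe SSD is hot-unplugged, the remove callback is called on each open descriptor of the bdev backed by that physical NVMe SSD. The callback can be thought of as a request to close the open descriptor so that other memory may be freed. A bdev cannot be torn down while open descriptors exist, so providing a callback is strongly recommended.

When a user is done with a descriptor, they may release it by calling spdk_bdev_close().

Descriptors may be passed to and used from multiple threads simultaneously. However, for each thread a separate I/O channel must be obtained by calling spdk_bdev_get_io_channel(). This allocates the necessary per-thread resources to submit I/O requests to the bdev without taking locks. To release a channel, call spdk_put_io_channel(). A descriptor cannot be closed until all of its associated channels have been destroyed.

SPDK's I/O path is lock-free. When multiple threads operate on the same SPDK user-space block device (bdev), SPDK provides the concept of an I/O channel, that is, a mapping between a thread and a device. Different threads operating on the same device should each hold their own I/O channel; because every I/O channel uses its own independent resources on the I/O path, resource contention is avoided and locks become unnecessary. For details, see "Efficient communication between SPDK processes".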
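The thread-to-channel mapping can be sketched with ordinary Python threads. This is a toy model, not SPDK code: `ToyDescriptor` and the channel dictionaries are hypothetical, and the sketch only shows that each thread gets its own channel object and that a descriptor cannot be closed while channels remain.

```python
import threading


class ToyDescriptor:
    def __init__(self, bdev_name):
        self.bdev_name = bdev_name
        self._channels = {}  # thread id -> that thread's channel

    def get_io_channel(self):
        tid = threading.get_ident()
        # One channel per calling thread, reference counted on reuse.
        ch = self._channels.setdefault(
            tid, {"thread": tid, "refs": 0, "pending": []}
        )
        ch["refs"] += 1
        return ch

    def put_io_channel(self, ch):
        ch["refs"] -= 1
        if ch["refs"] == 0:
            del self._channels[ch["thread"]]

    def close(self):
        if self._channels:
            raise RuntimeError("release all I/O channels before closing")
        self.bdev_name = None
```

Because every thread queues work in its own `pending` list, two threads submitting to the same device never touch the same per-channel state, which is the property that removes the need for locks on the I/O path.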

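Sending I/O

Once a descriptor and a channel have been obtained, I/O can be sent by calling the various I/O submission functions such as spdk_bdev_read(). Each of these calls takes a callback as an argument, which is called some time later with a handle to an spdk_bdev_io object. In response to that completion, the user must call spdk_bdev_free_io() to release the resources. Within this callback, the user may also use the functions spdk_bdev_io_get_nvme_status() and spdk_bdev_io_get_scsi_status() to obtain error information in the format of their choosing.

I/O submission is performed by calling functions such as spdk_bdev_read() or spdk_bdev_write(). These functions take as an argument either a pointer to a region of memory or a scatter-gather list describing the memory that will be transferred to the block device. This memory must be allocated through spdk_dma_malloc() or its variants. For a full explanation of why the memory must come from a special allocation pool, see Memory Management for User Space Drivers. Where possible, data in memory is transferred directly to the block device using direct memory access, which means it is not copied.

All I/O submission functions are asynchronous and non-blocking. They will not block or stall the thread for any reason. However, an I/O submission function may fail in one of two ways. First, it may fail immediately and return an error code. In that case, the provided callback will not be called. Second, it may fail asynchronously. In that case, the associated spdk_bdev_io is passed to the callback, and it reports the error information.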
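The two failure modes are worth seeing side by side. The sketch below is a toy Python model under stated assumptions: `ToyBdev`, its `bad_blocks` set, and the choice of error codes are hypothetical, and `poll()` stands in for the completion polling that would later invoke the callback.

```python
import errno
from collections import deque


class ToyBdev:
    def __init__(self, num_blocks, bad_blocks=(), max_outstanding=2):
        self.num_blocks = num_blocks
        self.bad_blocks = set(bad_blocks)  # blocks that fail at the media
        self.max_outstanding = max_outstanding
        self._pending = deque()

    def read(self, offset_blocks, num_blocks, cb):
        # Failure mode 1: immediate error code, the callback is never called.
        if offset_blocks + num_blocks > self.num_blocks:
            return -errno.EINVAL
        if len(self._pending) >= self.max_outstanding:
            return -errno.ENOMEM
        self._pending.append((offset_blocks, num_blocks, cb))
        return 0  # accepted; the outcome arrives later via cb

    def poll(self):
        # Failure mode 2: accepted request completes with an error status.
        while self._pending:
            off, n, cb = self._pending.popleft()
            success = self.bad_blocks.isdisjoint(range(off, off + n))
            cb(success)
```

A caller therefore has to check the return value and the status passed to the callback; handling only one of them misses a class of errors.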

Some I/O request types are optional and may not be supported by a given bdev. To query a bdev for the I/O request types it supports, call spdk_bdev_io_type_supported().

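Resetting a block device

To handle unexpected failure conditions, the bdev library provides a mechanism to perform a device reset by calling spdk_bdev_reset(). This passes a message to every other thread on which an I/O channel exists for the bdev, pauses that channel, then forwards the reset request to the underlying bdev module and waits for completion. Upon completion, the I/O channels resume and the reset completes. The behavior inside the bdev module is module-specific. For example, an NVMe device will delete all of its queue pairs, perform an NVMe reset, then recreate the queue pairs and continue. Most importantly, regardless of device type, all outstanding I/O to the block device will be completed before the reset completes.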

From RPC to bdev: the call flow

./scripts/rpc.py construct_malloc_bdev -b Malloc0 64 512 :

--------[rpc.py] p = subparsers.add_parser('construct_malloc_bdev', ...)

---------[rpc.py] def construct_malloc_bdev(args), which calls rpc.bdev.construct_malloc_bdev

---------------[scripts/rpc/bdev.py] def construct_malloc_bdev(client, num_blocks, block_size, name=None, uuid=None), which calls client.call('construct_malloc_bdev', params)

---------------------[lib/bdev/malloc/bdev_malloc_rpc.c] SPDK_RPC_REGISTER("construct_malloc_bdev", spdk_rpc_construct_malloc_bdev, ...)

spdk_rpc_construct_malloc_bdev()

----------------------------[lib/bdev/malloc/bdev_malloc.c] create_malloc_disk()
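The trace above amounts to a JSON-RPC round trip: the script builds a request, and the target looks up the method name in a table of registered handlers. The sketch below models that shape in Python only. It assumes that the positional 64 is a size in MiB that the client converts to a block count; `RPC_METHODS`, `rpc_register`, `build_request`, and `dispatch` are hypothetical helpers, and the handler body is a placeholder for the C path ending in create_malloc_disk().

```python
import json

RPC_METHODS = {}  # hypothetical stand-in for the table SPDK_RPC_REGISTER fills


def rpc_register(name):
    def wrap(fn):
        RPC_METHODS[name] = fn
        return fn
    return wrap


@rpc_register("construct_malloc_bdev")
def construct_malloc_bdev(params):
    # Placeholder for spdk_rpc_construct_malloc_bdev() -> create_malloc_disk().
    return params.get("name", "Malloc0")


def build_request(total_size_mb, block_size, name=None, request_id=1):
    # Assumed conversion from a size in MiB to a number of blocks.
    num_blocks = (total_size_mb * 1024 * 1024) // block_size
    params = {"num_blocks": num_blocks, "block_size": block_size}
    if name is not None:
        params["name"] = name
    return {"jsonrpc": "2.0", "method": "construct_malloc_bdev",
            "id": request_id, "params": params}


def dispatch(wire_text):
    # Target side: parse, look up the handler by method name, reply.
    req = json.loads(wire_text)
    result = RPC_METHODS[req["method"]](req["params"])
    return {"jsonrpc": "2.0", "id": req["id"], "result": result}
```

Under that assumption, `build_request(64, 512, name="Malloc0")` carries 131072 blocks of 512 bytes, and `dispatch(json.dumps(request))` returns the bdev name in the `result` field.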

Source: oschina

Link: https://my.oschina.net/u/4306156/blog/3598117