This week I learned some basic Python syntax and functions, and how to scrape data from different types of websites.

I scraped the epidemic data from DXY (丁香园), as well as some information from Lagou (拉勾网) and Maoyan (猫眼).

I learned two approaches: XPath and regular expressions.

XPath

import requests

from lxml import etree

import xlwt

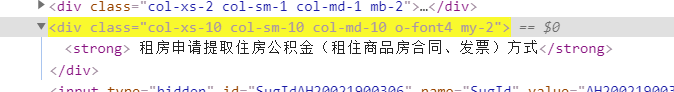

url='http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=AH20021900306'

headers = {

"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36"

}

response=requests.post(url,headers=headers)

res_html=etree.HTML(response.text)//将爬取出的数据解析为要xpath的形式

dd_list=res_html.xpath('//*[@id="f_baner"]/div[1]/div[1]/div[2]/strong/text()')[0].strip()

print(dd_list)

`text()` extracts the text of the selected element, and `.strip()` removes the whitespace around it.
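The post runs the query with lxml's `etree.HTML()` and `.xpath()`. To show how the positional path walks the tree without network access or lxml, here is a minimal sketch using the standard library's `xml.etree.ElementTree`, which supports only a subset of XPath (no `text()`, and the input must be well-formed XML). The `SAMPLE` markup and the `extract_title` helper are hypothetical, built only to match the path used above.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup shaped to match the path used in the post
SAMPLE = """
<html><body>
  <div id="f_baner">
    <div>
      <div>
        <div><span>ignored</span></div>
        <div><strong>
          Sample consultation title
        </strong></div>
      </div>
    </div>
  </div>
</body></html>
"""


def extract_title(markup):
    """Return the stripped text at f_baner/div[1]/div[1]/div[2]/strong, or None."""
    root = ET.fromstring(markup.strip())
    # ElementTree understands .//, [@attr='value'] and [n] (1-based, counted among
    # same-tag siblings), but not text(), so the text is read from .text instead
    node = root.find(".//*[@id='f_baner']/div[1]/div[1]/div[2]/strong")
    if node is None or node.text is None:
        return None
    return node.text.strip()  # drop the newlines and indentation around the text


print(extract_title(SAMPLE))  # prints: Sample consultation title
```

Each `[n]` step counts only siblings with the same tag, which is why `div[2]` skips the first `<div>` and lands on the one holding `<strong>`.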


If the data you want is already in the page's HTML source, you can extract it with regular expressions.
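The Maoyan script in this post relies on lazy `(.*?)` capture groups plus the `re.S` flag. A minimal offline sketch of how that pattern behaves, assuming a hypothetical, simplified fragment shaped like two board entries (the live page's markup may differ); `parse` is a hypothetical helper, and the greedy `(.*)` line is there only for contrast.

```python
import re

# Hypothetical fragment shaped like two entries on the Maoyan board page
SAMPLE = '''
<dd>
  <p class="name"><a href="/films/1" title="Movie A" data-act="click">Movie A</a></p>
  <p class="star">...</p>
  <p class="releasetime">Release: 1993-01-01</p>
</dd>
<dd>
  <p class="name"><a href="/films/2" title="Movie B" data-act="click">Movie B</a></p>
  <p class="releasetime">Release: 1994-09-10</p>
</dd>
'''

# Same pattern as the script: each (.*?) matches as little as possible, and re.S
# lets '.' also match the newlines between the title and the release-time line
PATTERN = re.compile(
    r'class="name"><a href=".*?" title="(.*?)".*?">.*?</a></p>.*?<p class="releasetime">(.*?)</p>',
    re.S,
)


def parse(page):
    """Turn each (title, release time) match into a dict."""
    return [{'name': name, 'time': release} for name, release in PATTERN.findall(page)]


# A greedy (.*) grabs as much as it can, so it runs past the title's closing quote
greedy = re.findall(r'title="(.*)"', SAMPLE)

print(parse(SAMPLE))
print(greedy)
```

With the lazy groups each match stops at the nearest closing quote or `</p>`, giving one clean dict per movie; the greedy version returns `Movie A" data-act="click` instead of just the title.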

```python
import re

import requests
import xlwt

headers = {
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36"
}

# (.*?) marks the parts to capture: the movie title and the release time
PATTERN = re.compile(
    r'class="name"><a href=".*?" title="(.*?)".*?">.*?</a></p>.*?<p class="releasetime">(.*?)</p>',
    re.S,
)


def get_page(url):
    """Return the page HTML, or None if the request fails."""
    try:
        response = requests.get(url, headers=headers, timeout=10)
        response.encoding = 'utf-8'
        if response.status_code == 200:
            return response.text
        print('Failed to fetch page:', response.status_code)
    except requests.RequestException as e:
        print(e)
    return None


def get_info(page):
    """Yield one {'name': ..., 'time': ...} dict per movie on the page."""
    for name, release_time in PATTERN.findall(page):
        data = {'name': name, 'time': release_time}
        print(data)
        yield data


# offset steps by 10 per page: 0, 10, ..., 90
urls = ['https://maoyan.com/board/4?offset={}'.format(i * 10) for i in range(10)]

DATA = []
for url in urls:
    print(url)
    page = get_page(url)
    if page is None:  # skip pages that failed to download
        continue
    DATA.extend(get_info(page))  # collect all rows in DATA

f = xlwt.Workbook(encoding='utf-8')
sheet01 = f.add_sheet('sheet1', cell_overwrite_ok=True)
sheet01.write(0, 0, 'name')  # header: row 1, column 1
sheet01.write(0, 1, 'time')  # header: row 1, column 2

# Write the data rows below the header
for i, row in enumerate(DATA, start=1):
    sheet01.write(i, 0, row['name'])
    sheet01.write(i, 1, row['time'])

f.save('F:\\作业.xls')
```
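xlwt is a separate install and writes only the legacy `.xls` format. As an alternative that is not what the post uses, the same header-plus-rows layout can be written with the standard library's `csv` module. A minimal sketch, assuming hypothetical rows shaped like `DATA`; `write_rows` is a hypothetical helper, and it writes to an in-memory buffer so it runs anywhere.

```python
import csv
import io

# Hypothetical rows shaped like the DATA list built by the script
rows = [
    {'name': 'Movie A', 'time': 'Release: 1993-01-01'},
    {'name': 'Movie B', 'time': 'Release: 1994-09-10'},
]


def write_rows(items, fieldnames, stream):
    """Write a header line, then one line per dict, like the xlwt sheet layout."""
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()     # corresponds to the header row written at row 0
    writer.writerows(items)  # corresponds to the loop that writes rows 1..n


buffer = io.StringIO()
write_rows(rows, ['name', 'time'], buffer)
print(buffer.getvalue())
```

For a real file, open it with `newline=''` as the `csv` documentation recommends, and consider `encoding='utf-8-sig'` so Excel is more likely to detect UTF-8 for Chinese text, e.g. `open('movies.csv', 'w', newline='', encoding='utf-8-sig')` (the file name is hypothetical).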

Some data is not in the HTML source at all: the page loads it via Ajax, and it arrives as JSON.
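Because the Ajax endpoint returns JSON, `response.json()` turns the body into nested dicts and lists, and extraction becomes plain key lookups. A minimal offline sketch, assuming a heavily trimmed, hypothetical body with the same `content` → `positionResult` → `result` nesting that the Lagou script reads; `extract_positions` is a hypothetical helper, not part of the original script.

```python
import json

# Hypothetical, heavily trimmed body with the same nesting as the Lagou response
SAMPLE_BODY = '''
{"content": {"positionResult": {"result": [
    {"positionName": "Crawler Engineer", "city": "Beijing", "salary": "15k-25k"},
    {"positionName": "Data Engineer", "city": "Shanghai", "salary": "20k-30k"}
]}}}
'''


def extract_positions(payload, fields=('positionName', 'city', 'salary')):
    """Walk content -> positionResult -> result and keep only the chosen fields."""
    content = payload.get('content') or {}  # may be absent, e.g. if the site rejects the request
    position_result = content.get('positionResult') or {}
    results = position_result.get('result') or []
    return [{field: item.get(field) for field in fields} for item in results]


payload = json.loads(SAMPLE_BODY)  # response.json() does this for a live response
for row in extract_positions(payload):
    print(row)
```

Using `.get()` with empty defaults means an unexpected response yields an empty list instead of a `KeyError` halfway through the crawl.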

```python
import time

import requests

# Listing page: requested first so the session receives the cookies the Ajax endpoint expects
list_url = 'https://www.lagou.com/jobs/list_%E7%88%AC%E8%99%AB/p-city_0?&cl=false&fromSearch=true&labelWords=&suginput='
# Ajax endpoint that returns the job data as JSON
url = 'https://www.lagou.com/jobs/positionAjax.json?needAddtionalResult=false'

headers = {
    'Host': 'www.lagou.com',
    'Origin': 'https://www.lagou.com',
    'Referer': 'https://www.lagou.com/jobs/list_%E7%88%AC%E8%99%AB/p-city_0?&cl=false&fromSearch=true&labelWords=&suginput=',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.79 Safari/537.36',
    'X-Requested-With': 'XMLHttpRequest'
}

# Fields copied from each job posting, in the column order used for export
FIELDS = ['companyFullName', 'city', 'district', 'education', 'financeStage',
          'positionName', 'salary', 'workYear', 'companySize', 'industryField']

# To find the form data: in DevTools open Network > XHR, select the request that
# returns the data, and read the form data at the bottom of its Headers tab.
# The URL is the same for every page; only the form data changes, and it
# controls the search keyword and the page number.
def get_page(url, i):
    data = {
        'first': 'false',
        'kd': '爬虫',  # search keyword; if you change it, update the Referer in headers too
        'pn': i  # page number
    }
    try:
        s = requests.Session()
        s.get(list_url, headers=headers, timeout=3)  # visit the listing page to obtain cookies
        # The session stores those cookies and sends them with the POST automatically
        response = s.post(url, data=data, headers=headers, timeout=3)
        time.sleep(5)
        response.raise_for_status()
        response.encoding = response.apparent_encoding
        return response.json()
    except (requests.RequestException, ValueError) as e:  # network/HTTP error or non-JSON body
        print('Error:', e)
        return None


def get_info(result):
    """Yield one dict per job posting in the JSON response."""
    content = result.get('content') or {}
    positions = (content.get('positionResult') or {}).get('result') or []
    for item in positions:
        yield {field: item.get(field) for field in FIELDS}


DATA = []
for i in range(1, 3):
    result = get_page(url, i)
    print('Request {}: {}'.format(i, url))
    time.sleep(2)
    # If parsing fails, the cause is usually this request, so skip failed pages
    if result is None:
        continue
    DATA.extend(get_info(result))

# Disabled in the original: dump the raw list to a text file
# with open('./拉勾网.text', 'w', encoding='utf-8') as fp:
#     fp.write(str(DATA))

# Disabled in the original: write the rows to an Excel file
# import xlwt
# f = xlwt.Workbook(encoding='utf-8')
# sheet01 = f.add_sheet('sheet1', cell_overwrite_ok=True)
# for col, field in enumerate(FIELDS):
#     sheet01.write(0, col, field)  # header row
# for row, item in enumerate(DATA, start=1):
#     for col, field in enumerate(FIELDS):
#         sheet01.write(row, col, item[field])
# f.save('F:\\爬虫.xls')
```

Source: https://www.cnblogs.com/zlj843767688/p/12345261.html