This graph trains a simple identity encoder for a signal, and its output shows that the optimizer is indeed updating the weights:

    import tensorflow as tf
    import numpy as np

    initia = tf.random_normal_initializer(0, 1e-3)

    DEPTH_1 = 16
    OUT_DEPTH = 1

    I = tf.placeholder(tf.float32, shape=[None,1], name='I') # input

    W = tf.get_variable('W', shape=[1,DEPTH_1], initializer=initia, dtype=tf.float32, trainable=True) # weights
    b = tf.get_variable('b', shape=[DEPTH_1], initializer=initia, dtype=tf.float32, trainable=True) # biases
    O = tf.nn.relu(tf.matmul(I, W) + b, name='O') # activation / output

    #W1 = tf.get_variable('W1', shape=[DEPTH_1,DEPTH_1], initializer=initia, dtype=tf.float32) # weights
    #b1 = tf.get_variable('b1', shape=[DEPTH_1], initializer=initia, dtype=tf.float32) # biases
    #O1 = tf.nn.relu(tf.matmul(O, W1) + b1, name='O1')

    W2 = tf.get_variable('W2', shape=[DEPTH_1,OUT_DEPTH], initializer=initia, dtype=tf.float32) # weights
    b2 = tf.get_variable('b2', shape=[OUT_DEPTH], initializer=initia, dtype=tf.float32) # biases
    O2 = tf.matmul(O, W2) + b2

    O2_0 = tf.gather_nd(O2, [[0,0]])

    estimate0 = 2.0*O2_0

    eval_inp = tf.gather_nd(I,[[0,0]])

    k = 1e-5
    L = 5.0

    distance = tf.reduce_sum( tf.square( eval_inp - estimate0 ) )

    opt = tf.train.GradientDescentOptimizer(1e-3)

    grads_and_vars = opt.compute_gradients(distance, [W, b, #W1, b1,
                                                      W2, b2])
    clipped_grads_and_vars = [(tf.clip_by_value(g, -4.5, 4.5), v) for g, v in grads_and_vars]

    train_op = opt.apply_gradients(clipped_grads_and_vars)

    saver = tf.train.Saver()

    init_op = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init_op)
        # training: one random scalar in [-1, 1) per step
        for i in range(10000):
            print(sess.run([train_op, I, W, distance], feed_dict={ I: 2.0*np.random.rand(1,1) - 1.0}))
        # evaluation: compare the input with the network's estimate
        for i in range(10):
            print(sess.run([eval_inp, W, estimate0], feed_dict={ I: 2.0*np.random.rand(1,1) - 1.0}))

However, when I uncomment the intermediate hidden layer and train the resulting network, I see that the weights are not evolving anymore:

    import tensorflow as tf
    import numpy as np

    initia = tf.random_normal_initializer(0, 1e-3)

    DEPTH_1 = 16
    OUT_DEPTH = 1

    I = tf.placeholder(tf.float32, shape=[None,1], name='I') # input

    W = tf.get_variable('W', shape=[1,DEPTH_1], initializer=initia, dtype=tf.float32, trainable=True) # weights
    b = tf.get_variable('b', shape=[DEPTH_1], initializer=initia, dtype=tf.float32, trainable=True) # biases
    O = tf.nn.relu(tf.matmul(I, W) + b, name='O') # activation / output

    W1 = tf.get_variable('W1', shape=[DEPTH_1,DEPTH_1], initializer=initia, dtype=tf.float32) # weights
    b1 = tf.get_variable('b1', shape=[DEPTH_1], initializer=initia, dtype=tf.float32) # biases
    O1 = tf.nn.relu(tf.matmul(O, W1) + b1, name='O1')

    W2 = tf.get_variable('W2', shape=[DEPTH_1,OUT_DEPTH], initializer=initia, dtype=tf.float32) # weights
    b2 = tf.get_variable('b2', shape=[OUT_DEPTH], initializer=initia, dtype=tf.float32) # biases
    O2 = tf.matmul(O1, W2) + b2

    O2_0 = tf.gather_nd(O2, [[0,0]])

    estimate0 = 2.0*O2_0

    eval_inp = tf.gather_nd(I,[[0,0]])

    distance = tf.reduce_sum( tf.square( eval_inp - estimate0 ) )

    opt = tf.train.GradientDescentOptimizer(1e-3)

    grads_and_vars = opt.compute_gradients(distance, [W, b, W1, b1,
                                                      W2, b2])
    clipped_grads_and_vars = [(tf.clip_by_value(g, -4.5, 4.5), v) for g, v in grads_and_vars]

    train_op = opt.apply_gradients(clipped_grads_and_vars)

    saver = tf.train.Saver()

    init_op = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init_op)
        # training: one random scalar in [-1, 1) per step
        for i in range(10000):
            print(sess.run([train_op, I, W, distance], feed_dict={ I: 2.0*np.random.rand(1,1) - 1.0}))
        # evaluation: compare the input with the network's estimate
        for i in range(10):
            print(sess.run([eval_inp, W, estimate0], feed_dict={ I: 2.0*np.random.rand(1,1) - 1.0}))

The evaluation of estimate0 quickly converges to a fixed value that is independent of the input signal. I have no idea why this is happening.

Question:

Any idea what might be wrong with the second example?

TL;DR: the deeper the neural network becomes, the more you should pay attention to the gradient flow (see this discussion of "vanishing gradients"). One factor that matters in particular is variable initialization.

Problem analysis

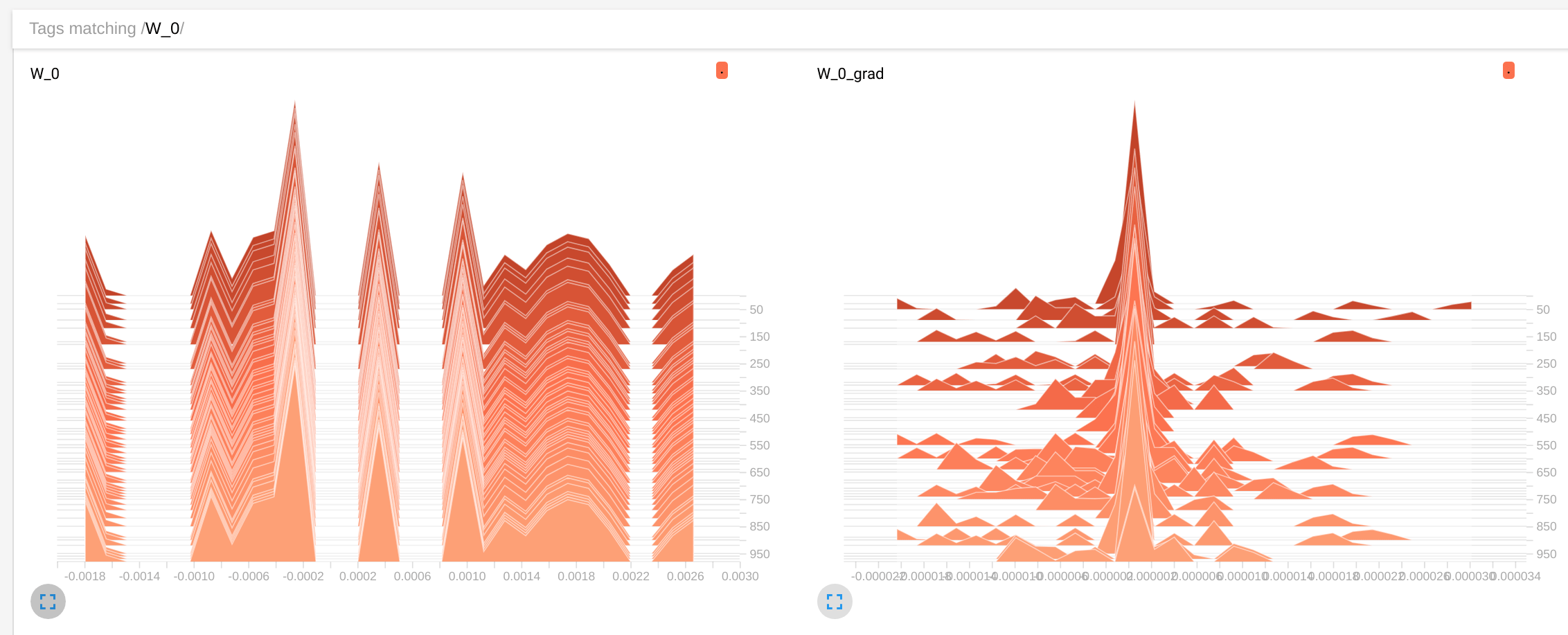

I've added TensorBoard summaries for the variables and gradients to both of your scripts and got the following:

2-layer network

3-layer network

The charts show the distribution of the W:0 variable (the first layer) and how it changes from epoch 0 to epoch 1000. Indeed, the rate of change is much higher in the 2-layer network. But I'd like to draw attention to the gradient distribution, which is much closer to 0 in the 3-layer network (the first variance is around 0.005, the second is around 0.000002, i.e. more than 1000 times smaller). This is the vanishing gradient problem.

Here's the helper code if you're interested:

    for g, v in grads_and_vars:
        tf.summary.histogram(v.name, v)
        tf.summary.histogram(v.name + '_grad', g)

    merged = tf.summary.merge_all()
    writer = tf.summary.FileWriter('train_log_layer2', tf.get_default_graph())

    ...

    _, summary = sess.run([train_op, merged], feed_dict={I: 2*np.random.rand(1, 1)-1})
    if i % 10 == 0:
        writer.add_summary(summary, global_step=i)
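The gap between the two gradient distributions can also be reproduced without TensorFlow. The following is only a rough sketch, not the scripts above: it is a hand-written NumPy backprop through a ReLU network with the same layer shapes (1 -> 16 -> ... -> 1), the same N(0, 1e-3) initialization and the same loss `(x - 2*out)^2`, with gradient clipping left out. The helper name `first_layer_grad_norm`, the fixed input `x=0.5` and the number of trials are assumptions made for illustration.

```python
import numpy as np


def first_layer_grad_norm(n_hidden, init_std, rng, width=16, x=0.5):
    """Return the norm of dL/dW for the first layer of a small ReLU network.

    The network maps 1 -> width -> ... -> width -> 1 with n_hidden hidden
    layers, and the loss is (x - 2*out)^2, as in the scripts above.
    """
    sizes = [1] + [width] * n_hidden + [1]
    weights = [rng.normal(0.0, init_std, size=(m, n))
               for m, n in zip(sizes[:-1], sizes[1:])]
    biases = [rng.normal(0.0, init_std, size=n) for n in sizes[1:]]

    # Forward pass: ReLU on every layer except the last one.
    acts = [np.array([[x]])]
    pre_acts = []
    last = len(weights) - 1
    for i, (w, b) in enumerate(zip(weights, biases)):
        z = acts[-1] @ w + b
        pre_acts.append(z)
        acts.append(z if i == last else np.maximum(z, 0.0))

    # dL/d(out) for L = (x - 2*out)^2.
    grad = -4.0 * (x - 2.0 * acts[-1])

    # Backward pass down to the first layer.
    grad_w = None
    for i in reversed(range(len(weights))):
        if i != last:
            grad = grad * (pre_acts[i] > 0)  # ReLU derivative
        grad_w = acts[i].T @ grad            # gradient w.r.t. weights[i]
        grad = grad @ weights[i].T           # gradient w.r.t. the layer input
    return np.linalg.norm(grad_w)


rng = np.random.RandomState(0)
for n_hidden in (1, 2):  # 1 hidden layer = "2-layer", 2 hidden layers = "3-layer"
    norms = [first_layer_grad_norm(n_hidden, 1e-3, rng) for _ in range(200)]
    print("hidden layers: %d, mean |dL/dW| = %.3g" % (n_hidden, np.mean(norms)))
```

With weights this small, every extra hidden layer multiplies the backpropagated signal by another factor on the order of the weight scale, which is consistent with the much narrower gradient histogram of the 3-layer network.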

Solution

All deep networks suffer from this to some extent and there is no universal solution that will auto-magically fix any network. But there are some techniques that can push it in the right direction. Initialization is one of them.

I replaced your normal initialization with:

    W_init = tf.contrib.layers.xavier_initializer()
    b_init = tf.constant_initializer(0.1)

There are lots of tutorials on Xavier init; you can take a look at this one, for example. Note that I set the bias init to be slightly positive to make sure that the ReLU outputs are positive for most of the neurons, at least in the beginning.

This changed the picture immediately:

The weights are still not moving quite as fast as before, but they are moving (note the scale of the W:0 values), and the gradient distribution became much less peaked at 0, which is much better.
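To get a feeling for why the scale of the W:0 values changes so much, here is a minimal sketch of what Xavier (Glorot) uniform initialization computes. It assumes the uniform variant, which I believe is the default of `tf.contrib.layers.xavier_initializer`, where weights are drawn from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)). The function names are made up for illustration and are not part of TensorFlow.

```python
import math

import numpy as np


def xavier_uniform_limit(fan_in, fan_out):
    """Bound of the uniform range used by Xavier/Glorot uniform init."""
    return math.sqrt(6.0 / (fan_in + fan_out))


def xavier_uniform(shape, rng):
    """Sample a [fan_in, fan_out] weight matrix from U(-limit, limit)."""
    fan_in, fan_out = shape
    limit = xavier_uniform_limit(fan_in, fan_out)
    return rng.uniform(-limit, limit, size=shape)


rng = np.random.RandomState(0)
for shape in [(1, 16), (16, 16), (16, 1)]:  # layer shapes of the 3-layer script
    limit = xavier_uniform_limit(*shape)
    w = xavier_uniform(shape, rng)
    # The standard deviation of U(-limit, limit) is limit / sqrt(3).
    print(shape, "limit = %.3f" % limit,
          "std = %.3f" % (limit / math.sqrt(3.0)),
          "max |w| = %.3f" % np.abs(w).max())
```

For these layer sizes the resulting standard deviations are a few tenths, i.e. orders of magnitude larger than the 1e-3 used by the original `random_normal_initializer`.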

Of course, this isn't the end. To improve it further, you should implement the full autoencoder, because currently the loss is affected only by the reconstruction of the [0,0] element, so most outputs aren't used in optimization. You can also experiment with different optimizers (Adam would be my choice) and learning rates.
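The point about the [0,0] element can be illustrated with a small NumPy sketch. It assumes a batch with more than one row is fed (the scripts above feed a single row) and uses a zero array as a stand-in for the network output `O2`. The function names are hypothetical, and the gradient is estimated by finite differences rather than by TensorFlow.

```python
import numpy as np


def loss_first_element(inputs, outputs):
    """Loss as in the scripts above: only element [0, 0] is compared."""
    return (inputs[0, 0] - 2.0 * outputs[0, 0]) ** 2


def loss_full_batch(inputs, outputs):
    """Hypothetical alternative: mean squared error over every row."""
    return np.mean((inputs - 2.0 * outputs) ** 2)


def grad_wrt_outputs(loss_fn, inputs, outputs, eps=1e-6):
    """Central finite-difference gradient of loss_fn w.r.t. outputs."""
    grad = np.zeros_like(outputs)
    for idx in np.ndindex(*outputs.shape):
        up, down = outputs.copy(), outputs.copy()
        up[idx] += eps
        down[idx] -= eps
        grad[idx] = (loss_fn(inputs, up) - loss_fn(inputs, down)) / (2.0 * eps)
    return grad


inputs = np.array([[0.5], [-0.25], [0.75], [-1.0]])
outputs = np.zeros((4, 1))  # stand-in for the network output O2

# Only the first row receives a gradient with the [0, 0] loss.
print(grad_wrt_outputs(loss_first_element, inputs, outputs).ravel())
# Every row receives a gradient with the full-batch loss.
print(grad_wrt_outputs(loss_full_batch, inputs, outputs).ravel())
```

In this sketch the rows other than the first get exactly zero gradient under the [0,0] loss, so feeding larger batches would not help until the loss covers all rows.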

Source: https://stackoverflow.com/questions/48816873/intermediate-layer-makes-tensorflow-optimizer-to-stop-working