The Pitfalls I Hit While Installing Scrapy

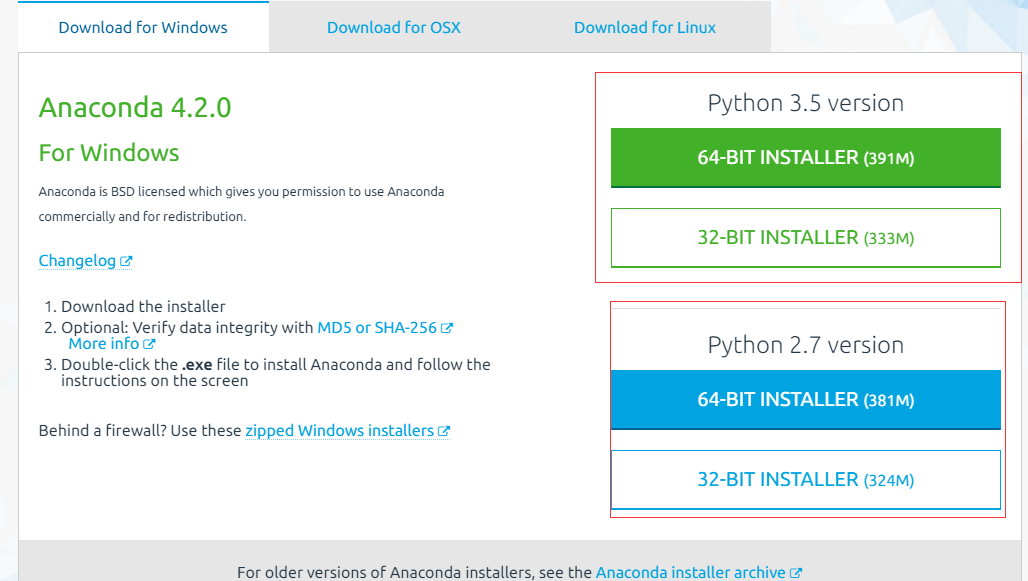

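When I started learning web scraping I read quite a few tutorials online and at first decided to develop on Linux, which for me meant running a virtual machine. The VM was sluggish and ate a lot of system resources, so I decided to try installing the Scrapy crawler directly on Windows 7 instead. The installation was one pit after another, and it wore me out. After asking around, I finally found a tool that makes installing Scrapy painless, a real power tool (Anaconda). Here is the address: https://www.continuum.io/downloads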

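Installing Python 2.7

System version: Windows 7, 64-bit

I chose the 2.7 build because 2.7 is the more mature line. Click download and step through the installer. One screen asks whether to overwrite the Python version already installed locally; choose yes. It is best to use the Python that ships with the installer, otherwise unpredictable errors can show up. Alternatively, uninstall the existing local Python first and delete its directory by hand. That is what I did: uninstall the local version, delete the directory, then click Next all the way through, which is less hassle. By default the installer sets up the latest Python release of that line.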

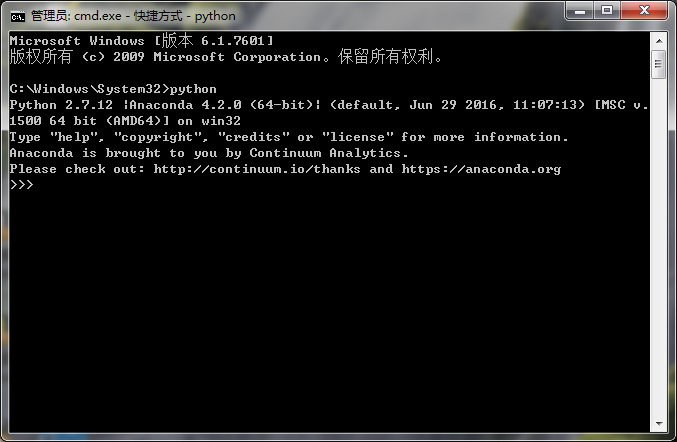

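Once the installation finishes, check the Python version. Open cmd as administrator:

Command: python

The output shows that the latest version is in place, which is reassuring.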
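The same check can be made from inside the interpreter, which also shows which python.exe was actually picked up from PATH. That helps when an older Python had been installed before. The following is only a minimal sketch; the function name `interpreter_summary` is made up for illustration.

```python
import sys


def interpreter_summary(version_info=None, executable=None):
    """Describe an interpreter as a one-line string.

    With no arguments, describes the interpreter running this script.
    """
    if version_info is None:
        version_info = sys.version_info
    if executable is None:
        executable = sys.executable
    # Keep only major.minor.micro, e.g. (2, 7, 12, 'final', 0) -> "2.7.12".
    version = ".".join(str(part) for part in version_info[:3])
    return "Python %s at %s" % (version, executable)


print(interpreter_summary())
```

On the setup described here, the printed path would be expected to sit under the Anaconda install directory rather than under an older standalone Python.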

Installing Scrapy

Command: conda install scrapy

C:\Windows\System32>

C:\Windows\System32>conda install scrapy

Fetching package metadata .........

Solving package specifications: ..........

Package plan for installation in environment C:\Program Files\Anaconda2:

The following packages will be downloaded:

package | build

---------------------------|-----------------

twisted-16.6.0 | py27_0 4.4 MB

service_identity-16.0.0 | py27_0 13 KB

scrapy-1.1.1 | py27_0 378 KB

------------------------------------------------------------

Total: 4.8 MB

The following NEW packages will be INSTALLED:

attrs: 15.2.0-py27_0

conda-env: 2.6.0-0

constantly: 15.1.0-py27_0

cssselect: 1.0.0-py27_0

incremental: 16.10.1-py27_0

parsel: 1.0.3-py27_0

pyasn1-modules: 0.0.8-py27_0

pydispatcher: 2.0.5-py27_0

queuelib: 1.4.2-py27_0

scrapy: 1.1.1-py27_0

service_identity: 16.0.0-py27_0

twisted: 16.6.0-py27_0

w3lib: 1.16.0-py27_0

zope: 1.0-py27_0

zope.interface: 4.3.2-py27_0

The following packages will be UPDATED:

conda: 4.2.9-py27_0 --> 4.2.13-py27_0

Proceed ([y]/n)? y

Fetching packages ...

An unexpected error has occurred. | ETA: 0:11:48 4.17 kB/s

Please consider posting the following information to the

conda GitHub issue tracker at:

https://github.com/conda/conda/issues

Current conda install:

platform : win-64

conda version : 4.2.9

conda is private : False

conda-env version : 4.2.9

conda-build version : 2.0.2

python version : 2.7.12.final.0

requests version : 2.11.1

root environment : C:\Program Files\Anaconda2 (writable)

default environment : C:\Program Files\Anaconda2

envs directories : C:\Program Files\Anaconda2\envs

package cache : C:\Program Files\Anaconda2\pkgs

channel URLs : https://repo.continuum.io/pkgs/free/win-64/

https://repo.continuum.io/pkgs/free/noarch/

https://repo.continuum.io/pkgs/pro/win-64/

https://repo.continuum.io/pkgs/pro/noarch/

https://repo.continuum.io/pkgs/msys2/win-64/

https://repo.continuum.io/pkgs/msys2/noarch/

config file : None

offline mode : False

`$ C:\Program Files\Anaconda2\Scripts\conda-script.py install scrapy`

Traceback (most recent call last):

File "C:\Program Files\Anaconda2\lib\site-packages\conda\exceptions.py", line 473, in conda_exception_handler

return_value = func(*args, **kwargs)

File "C:\Program Files\Anaconda2\lib\site-packages\conda\cli\main.py", line 144, in _main

exit_code = args.func(args, p)

File "C:\Program Files\Anaconda2\lib\site-packages\conda\cli\main_install.py", line 80, in execute

install(args, parser, 'install')

File "C:\Program Files\Anaconda2\lib\site-packages\conda\cli\install.py", line 420, in install

raise CondaRuntimeError('RuntimeError: %s' % e)

CondaRuntimeError: Runtime error: RuntimeError: Runtime error: Could not open u'C:\\Program Files\\Anaconda2\\pkgs\\twisted-16.6.0-py27_0.tar.bz2.part' for writing (HTTPSConnectionPool(host='repo.continuum.io', port=443): Read timed out.).

Manually installing the Twisted library

The error shows that the download timed out while fetching the Twisted library, so the fix is to install that library on its own.
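The package that timed out can be read off the error message itself: conda was writing a `.part` file named after the package when the connection dropped. Here is a minimal sketch of pulling that name out with a regular expression. The function name `failed_package` is hypothetical, and the sketch assumes the `name-version-build.tar.bz2` file naming seen in the log above.

```python
import re

# Captures the basename of a partially downloaded package file,
# i.e. everything between the last path separator and ".tar.bz2.part".
_PART_FILE = re.compile(r"([^\\/']+)\.tar\.bz2\.part")


def failed_package(error_text):
    """Return (name, version, build) of the interrupted download, or None."""
    match = _PART_FILE.search(error_text)
    if match is None:
        return None
    # Split from the right so package names containing "-" stay intact.
    parts = match.group(1).rsplit("-", 2)
    if len(parts) != 3:
        return None
    return tuple(parts)
```

Fed the CondaRuntimeError line from the traceback, this returns the name twisted together with its version and build string, which is the package to install separately.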

Command: conda install twisted

C:\Windows\System32>conda install twisted

Fetching package metadata .........

Solving package specifications: ..........

Package plan for installation in environment C:\Program Files\Anaconda2:

The following packages will be downloaded:

package | build

---------------------------|-----------------

twisted-16.6.0 | py27_0 4.4 MB

The following NEW packages will be INSTALLED:

conda-env: 2.6.0-0

constantly: 15.1.0-py27_0

incremental: 16.10.1-py27_0

twisted: 16.6.0-py27_0

zope: 1.0-py27_0

zope.interface: 4.3.2-py27_0

The following packages will be UPDATED:

conda: 4.2.9-py27_0 --> 4.2.13-py27_0

Proceed ([y]/n)? y

Fetching packages ...

twisted-16.6.0 100% |###############################| Time: 0:01:09 66.89 kB/s

Extracting packages ...

[ COMPLETE ]|##################################################| 100%

Unlinking packages ...

[ COMPLETE ]|##################################################| 100%

Linking packages ...

[ COMPLETE ]|##################################################| 100%

The install reports success with no errors. Next, install Scrapy itself.

Command: conda install scrapy

C:\Windows\System32>conda install scrapy

Fetching package metadata .........

Solving package specifications: ..........

Package plan for installation in environment C:\Program Files\Anaconda2:

The following packages will be downloaded:

package | build

---------------------------|-----------------

service_identity-16.0.0 | py27_0 13 KB

scrapy-1.1.1 | py27_0 378 KB

------------------------------------------------------------

Total: 391 KB

The following NEW packages will be INSTALLED:

attrs: 15.2.0-py27_0

cssselect: 1.0.0-py27_0

parsel: 1.0.3-py27_0

pyasn1-modules: 0.0.8-py27_0

pydispatcher: 2.0.5-py27_0

queuelib: 1.4.2-py27_0

scrapy: 1.1.1-py27_0

service_identity: 16.0.0-py27_0

w3lib: 1.16.0-py27_0

Proceed ([y]/n)? y

Fetching packages ...

service_identi 100% |###############################| Time: 0:00:00 68.39 kB/s

scrapy-1.1.1-p 100% |###############################| Time: 0:00:05 65.50 kB/s

Extracting packages ...

[ COMPLETE ]|##################################################| 100%

Linking packages ...

[ COMPLETE ]|##################################################| 100%

Twisted was already installed in the previous step, so nothing timed out this time. The install reports success with no errors.
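The workaround used here (install the large dependency on its own, then run the Scrapy install again) can also be scripted so that a slow connection gets a few retries. This is only a sketch under stated assumptions: `run_with_retries` and `install_in_order` are made-up helper names, conda is assumed to be on PATH, and `-y` is conda's flag for answering the "Proceed ([y]/n)?" prompt automatically.

```python
import subprocess
import time


def run_with_retries(command, attempts=3, delay_seconds=5):
    """Run `command` until it exits with code 0 or the attempts run out.

    Returns the exit code of the last run.
    """
    exit_code = 1
    for attempt in range(1, attempts + 1):
        print("attempt %d of %d: %s" % (attempt, attempts, " ".join(command)))
        exit_code = subprocess.call(command)
        if exit_code == 0:
            break
        if attempt < attempts:
            time.sleep(delay_seconds)  # give the network a moment to recover
    return exit_code


def install_in_order(packages, runner=run_with_retries):
    """Install packages one at a time; return the first that fails, else None."""
    for package in packages:
        # Assumption: "conda" resolves to the Anaconda launcher on PATH.
        command = ["conda", "install", "-y", package]
        if runner(command) != 0:
            return package
    return None


# Example call, mirroring the order used in this post:
# install_in_order(["twisted", "scrapy"])
```

Installing Twisted first keeps the 4.4 MB download separate, so a timeout only costs a retry of that one package.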

Testing whether Scrapy installed successfully

Now check that the installation actually works.

Test commands: scrapy

scrapy startproject hello

C:\Windows\System32>scrapy

Scrapy 1.1.1 - no active project

Usage:

scrapy <command> [options] [args]

Available commands:

bench Run quick benchmark test

commands

fetch Fetch a URL using the Scrapy downloader

genspider Generate new spider using pre-defined templates

runspider Run a self-contained spider (without creating a project)

settings Get settings values

shell Interactive scraping console

startproject Create new project

version Print Scrapy version

view Open URL in browser, as seen by Scrapy

[ more ] More commands available when run from project directory

Use "scrapy <command> -h" to see more info about a command

C:\Windows\System32>d:

D:\>dir

Volume in drive D has no label.

Volume Serial Number is 0002-9E3C

Directory of D:\

2016/12/03 12:20 399,546,128 Anaconda2-4.2.0-Windows-x86_64.exe

2016/12/03 09:43 <DIR> Program Files (x86)

2016/12/03 16:57 <DIR> python-project

2016/12/03 09:43 <DIR> 新建文件夹

2016/12/03 12:19 <DIR> 迅雷下载

1 File(s) 399,546,128 bytes

4 Dir(s) 38,932,201,472 bytes free

D:\>cd python-project

D:\python-project>scrapy startproject hello

New Scrapy project 'hello', using template directory 'C:\\Program Files\\Anaconda2\\lib\\site-packages\\scrapy\\templates\\project', created in:

D:\python-project\hello

You can start your first spider with:

cd hello

scrapy genspider example example.com

D:\python-project>tree /f

Folder PATH listing

Volume serial number is 0002-9E3C

D:.
└─hello
    │  scrapy.cfg
    │
    └─hello
        │  items.py
        │  pipelines.py
        │  settings.py
        │  __init__.py
        │
        └─spiders
                __init__.py

D:\python-project>

As the output shows, the scrapy command can now create a crawler project. What remains is the fun part: happily hammering out spiders.
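The layout printed by `tree /f` can also be checked from a script, which is handy when repeating the install on another machine. A minimal sketch follows: the helper name `missing_project_files` is hypothetical, and the file list simply mirrors what Scrapy 1.1.1 generated in this post, so other Scrapy versions may create a different set.

```python
import os

# Files created by `scrapy startproject <name>` in the listing from this post.
# "{name}" stands for the inner package directory, which shares the project name.
EXPECTED_FILES = [
    "scrapy.cfg",
    os.path.join("{name}", "items.py"),
    os.path.join("{name}", "pipelines.py"),
    os.path.join("{name}", "settings.py"),
    os.path.join("{name}", "__init__.py"),
    os.path.join("{name}", "spiders", "__init__.py"),
]


def missing_project_files(project_dir, name=None):
    """Return the expected files that are absent from `project_dir`."""
    if name is None:
        # Default to the directory's own name, e.g. "hello".
        name = os.path.basename(os.path.normpath(project_dir))
    missing = []
    for template in EXPECTED_FILES:
        relative_path = template.format(name=name)
        if not os.path.isfile(os.path.join(project_dir, relative_path)):
            missing.append(relative_path)
    return missing


# Example call for the project created in this post:
# print(missing_project_files(r"D:\python-project\hello"))
```

An empty list means every file from the listing is present.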

Source: oschina

Link: https://my.oschina.net/u/39617/blog/796932