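Project Overview

This project uses PaddlePaddle to train and run inference with the MobileNet-V1 image classification network. MobileNet-V1 addresses the high computational cost of conventional convolution blocks: it is a lightweight, more efficient neural network designed to be deployable on mobile devices. A GPU is recommended for running it. For the dynamic-graph version, see: 用PaddlePaddle实现图像分类-MobileNet(动态图版) (Image Classification with PaddlePaddle: MobileNet, dynamic-graph edition)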

Installation

## CPU version

pip install -f https://paddlepaddle.org.cn/pip/oschina/cpu paddlepaddle

## GPU version

pip install -f https://paddlepaddle.org.cn/pip/oschina/gpu paddlepaddle-gpu

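Model Architecture

The core idea of MobileNet is to factorize a standard convolution into a depthwise convolution followed by a $1\times 1$ (pointwise) convolution; together the two form a depthwise separable convolution. In the depthwise step, each channel of the input feature map has its own kernel, so each output channel depends only on the corresponding input channel. For example, take an input feature map of size $K\times M\times N$, where $K$ is the number of channels and $M$, $N$ are the width and height. Suppose a standard convolution needs a kernel of size $C\times K\times 3\times 3$ to produce a new feature map of size $C\times M'\times N'$. A depthwise separable convolution instead first applies $K$ kernels of size $3\times 3$, one to each of the $K$ input channels, producing $K$ feature maps (the DepthWise Conv part). It then applies a $C\times K\times 1\times 1$ convolution to produce the $C\times M'\times N'$ output (the PointWise Conv part). This factorization substantially reduces model size and computation while keeping accuracy comparable to that of standard convolution. The structure of a depthwise separable convolution is shown in the figure below.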
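To make the two steps concrete, here is a minimal pure-Python sketch of a depthwise separable convolution on nested lists. It is an illustration under simplifying assumptions (no padding, stride 1, no BatchNorm or ReLU), not Paddle's implementation. The names `depthwise_conv`, `pointwise_conv` and `depthwise_separable_conv` are hypothetical helpers introduced only for this example. As in the text, the input has K channels; the depthwise step uses one 3×3 kernel per channel, and the pointwise step uses a C×K matrix of 1×1 weights.

```python
def depthwise_conv(x, dw_kernels):
    """DepthWise Conv: convolve each input channel with its own kernel.

    x: K channels, each an H x W list of lists.
    dw_kernels: K kernels, each k x k. No padding, stride 1.
    """
    k = len(dw_kernels[0])
    out = []
    for channel, kernel in zip(x, dw_kernels):
        h, w = len(channel), len(channel[0])
        out.append([
            [sum(channel[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)
        ])
    return out


def pointwise_conv(x, pw_weights):
    """PointWise Conv: a 1x1 convolution that mixes channels.

    pw_weights: C rows of K weights; output channel c = sum_k pw_weights[c][k] * x[k].
    """
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(row[k] * x[k][i][j] for k in range(len(x))) for j in range(w)]
         for i in range(h)]
        for row in pw_weights
    ]


def depthwise_separable_conv(x, dw_kernels, pw_weights):
    """Depthwise step followed by the pointwise step."""
    return pointwise_conv(depthwise_conv(x, dw_kernels), pw_weights)


if __name__ == "__main__":
    # K = 2 input channels of size 4 x 4, C = 3 output channels
    x = [[[1.0] * 4 for _ in range(4)], [[2.0] * 4 for _ in range(4)]]
    dw = [[[1.0] * 3 for _ in range(3)] for _ in range(2)]  # all-ones 3x3 kernels
    pw = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    y = depthwise_separable_conv(x, dw, pw)
    print(len(y), len(y[0]), len(y[0][0]))  # 3 2 2
    print(y[0][0][0], y[1][0][0], y[2][0][0])  # 9.0 18.0 27.0
```

In this toy case a standard 3×3 convolution would need C·K·3·3 = 54 weights. The separable version needs only K·3·3 + C·K = 24.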

Suppose the input feature map has size $C_{in}\times H_{in}\times W_{in}$, the kernel size is $K\times K$, and the output feature map has size $C_{out}\times H_{out}\times W_{out}$. The computational cost (number of multiply-adds) of a standard convolution is:

$$K\times K\times C_{in}\times C_{out}\times H_{out}\times W_{out}$$

For the factorized depthwise separable convolution, the cost is the sum of the DW part and the PW part:

$$K\times K\times C_{in}\times H_{out}\times W_{out} + C_{in}\times C_{out}\times H_{out}\times W_{out}$$

Therefore, relative to a standard convolution, the computation of a depthwise separable convolution is reduced to the fraction:

$$\frac{K\times K\times C_{in}\times H_{out}\times W_{out} + C_{in}\times C_{out}\times H_{out}\times W_{out}}{K\times K\times C_{in}\times C_{out}\times H_{out}\times W_{out}} = \frac{1}{C_{out}} + \frac{1}{K^2}$$

For a $3\times 3$ kernel, $1/K^2 = 1/9$, and $1/C_{out}$ is small for typical channel counts. The computation therefore drops by roughly 7 to 9 times.
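The formulas can be checked numerically. The sketch below is plain Python, and its function names are hypothetical. It counts multiply-adds for both layer types and compares the ratio with $1/C_{out} + 1/K^2$. The example layer is a 3×3 convolution with 512 input and output channels on a 14×14 map, the shape of the repeated conv5 blocks in the training script later in this article.

```python
def standard_conv_cost(k, c_in, c_out, h_out, w_out):
    """Multiply-adds of a standard K x K convolution."""
    return k * k * c_in * c_out * h_out * w_out


def separable_conv_cost(k, c_in, c_out, h_out, w_out):
    """Multiply-adds of a depthwise (DW) plus pointwise (PW) convolution."""
    dw = k * k * c_in * h_out * w_out
    pw = c_in * c_out * h_out * w_out
    return dw + pw


def cost_ratio(k, c_in, c_out, h_out, w_out):
    """Separable cost as a fraction of the standard cost."""
    return (separable_conv_cost(k, c_in, c_out, h_out, w_out)
            / standard_conv_cost(k, c_in, c_out, h_out, w_out))


if __name__ == "__main__":
    r = cost_ratio(3, 512, 512, 14, 14)
    print(r, 1 / 512 + 1 / 9)  # equal up to floating-point rounding
    print("{:.2f}x fewer multiply-adds".format(1 / r))  # 8.84x fewer multiply-adds
    # The saving rises from about 7x toward 9x as C_out grows
    for c_out in (32, 64, 256, 1024):
        print(c_out, round(1 / cost_ratio(3, c_out, c_out, 14, 14), 2))
```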

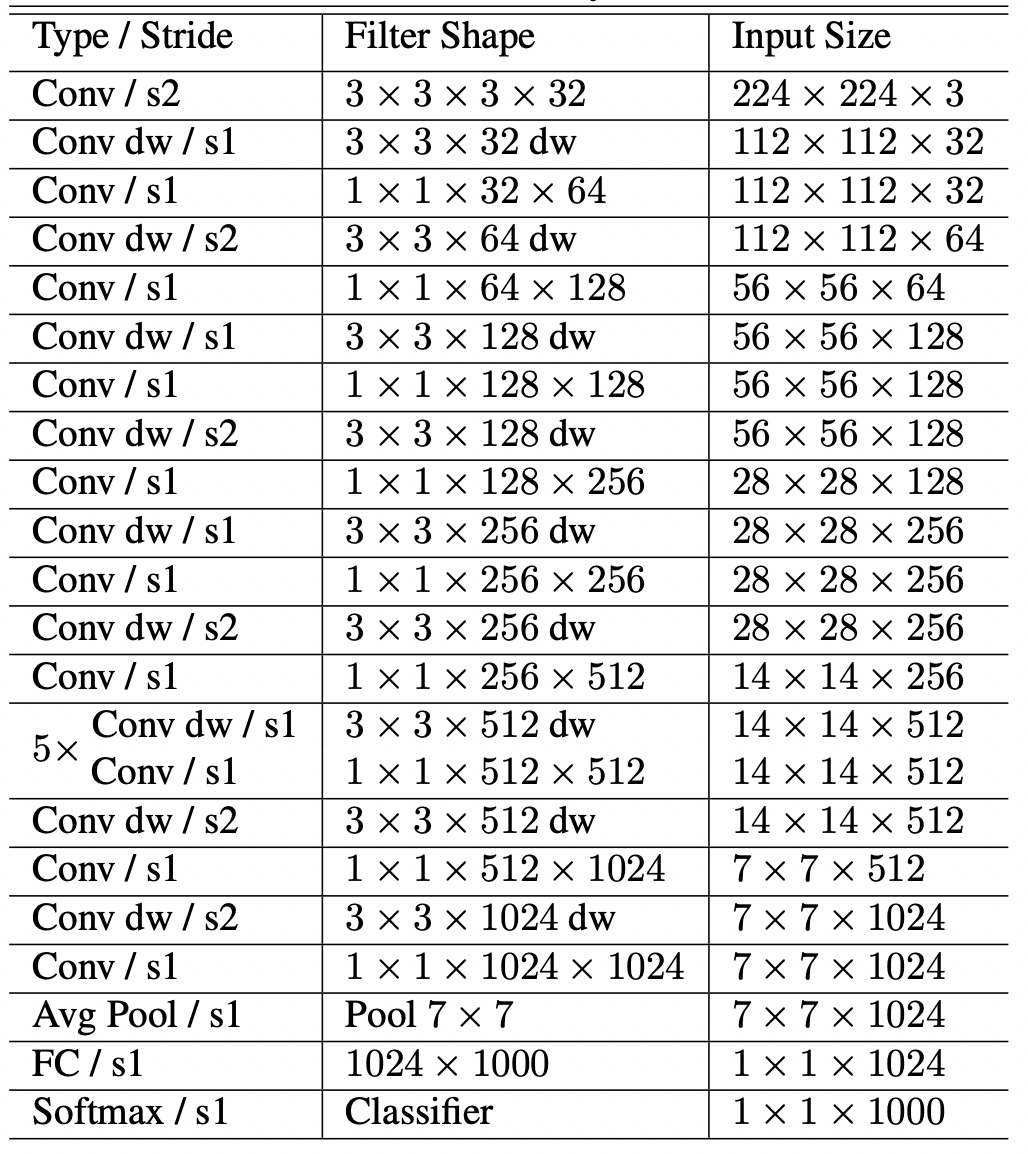

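The MobileNet-V1 architecture is simple and intuitive: like VGG, it is a straight stack of layers with no branches. The detailed structure is shown in the table below: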
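The layer sequence can also be read off `MobileNet.net()` in the training script later in this article (with scale = 1.0). The sketch below replays that sequence to trace the feature-map size and count the convolution weights. It excludes BatchNorm parameters and the final fc layer. `BLOCKS`, `conv_out` and `trace_mobilenet_v1` are names introduced only for this illustration.

```python
# (num_filters1, num_filters2, stride) of each depthwise_separable block,
# in the order used by MobileNet.net() with scale=1.0
BLOCKS = (
    [(32, 64, 1), (64, 128, 2),        # conv2_1, conv2_2
     (128, 128, 1), (128, 256, 2),     # conv3_1, conv3_2
     (256, 256, 1), (256, 512, 2)]     # conv4_1, conv4_2
    + [(512, 512, 1)] * 5              # conv5_1 .. conv5_5
    + [(512, 1024, 2), (1024, 1024, 1)]  # conv5_6, conv6
)


def conv_out(size, stride, kernel=3, padding=1):
    """Output height/width of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_mobilenet_v1(input_size=224, in_channels=3):
    """Return the (C, H, W) shape after conv1 and after each block, plus the conv weight count."""
    channels = 32
    size = conv_out(input_size, stride=2)  # conv1: 3x3, stride 2, padding 1
    weights = 3 * 3 * in_channels * channels
    shapes = [(channels, size, size)]
    for c1, c2, stride in BLOCKS:
        assert c1 == channels, "each block must consume the previous block's channels"
        size = conv_out(size, stride)  # the depthwise 3x3 conv carries the stride
        weights += 3 * 3 * c1 + c1 * c2  # depthwise kernels + 1x1 pointwise kernels
        channels = c2
        shapes.append((channels, size, size))
    return shapes, weights


if __name__ == "__main__":
    shapes, n_weights = trace_mobilenet_v1()
    for shape in shapes:
        print(shape)
    print("conv weights:", n_weights)  # conv weights: 3185088
```

For a 224×224 input the trace ends at 1024×7×7 with 3,185,088 convolution weights. Adding a 1000-way fc layer (1024×1000 weights plus 1000 biases) gives about 4.2M parameters, consistent with the figure reported in the MobileNets paper. All downsampling comes from the stride of conv1 or of a depthwise 3×3 conv; the 1×1 pointwise convs only change the channel count.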

References

Original paper: MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

Reference blog post: 深度学习之MobileNetV1 (Deep Learning: MobileNetV1)

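Dataset

We use the public flowers dataset. The archive contains five folders, one per flower species: daisy, dandelion, roses, sunflowers, and tulips. Each species has between 690 and 890 images.

```
# Unzip the flower dataset
!cd data/data2815 && unzip -qo flower_photos.zip
# Unzip the pretrained parameters
!cd data/data6591 && unzip -qo MobileNetV1_pretrained.zip
```

Preprocess the data into the required format.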

```python
# Preprocess the data into the standard format, and split it into two parts
# (train / eval) so that we can both train and estimate accuracy
import codecs
import os
import random
import shutil
from PIL import Image

train_ratio = 4.0 / 5

all_file_dir = 'data/data2815'

# Class folders only: skip the generated *Set folders and hidden entries
class_list = [c for c in os.listdir(all_file_dir)
              if os.path.isdir(os.path.join(all_file_dir, c))
              and not c.endswith('Set') and not c.startswith('.')]
class_list.sort()
print(class_list)

train_image_dir = os.path.join(all_file_dir, "trainImageSet")
if not os.path.exists(train_image_dir):
    os.makedirs(train_image_dir)

eval_image_dir = os.path.join(all_file_dir, "evalImageSet")
if not os.path.exists(eval_image_dir):
    os.makedirs(eval_image_dir)

train_file = codecs.open(os.path.join(all_file_dir, "train.txt"), 'w')
eval_file = codecs.open(os.path.join(all_file_dir, "eval.txt"), 'w')

with codecs.open(os.path.join(all_file_dir, "label_list.txt"), "w") as label_list:
    label_id = 0
    for class_dir in class_list:
        label_list.write("{0}\t{1}\n".format(label_id, class_dir))
        image_path_pre = os.path.join(all_file_dir, class_dir)
        for file in os.listdir(image_path_pre):
            src = os.path.join(image_path_pre, file)
            try:
                Image.open(src)  # opened only to skip files PIL cannot read
                if random.uniform(0, 1) <= train_ratio:
                    shutil.copyfile(src, os.path.join(train_image_dir, file))
                    train_file.write("{0}\t{1}\n".format(os.path.join(train_image_dir, file), label_id))
                else:
                    shutil.copyfile(src, os.path.join(eval_image_dir, file))
                    eval_file.write("{0}\t{1}\n".format(os.path.join(eval_image_dir, file), label_id))
            except Exception:
                pass  # some files cannot be opened, so a little cleaning is needed here
        label_id += 1

train_file.close()
eval_file.close()
```

```
['daisy', 'dandelion', 'roses', 'sunflowers', 'tulips']
```
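The split above flips an independent coin for every image. The train/eval ratio is therefore only approximately 4:1 and changes between runs. The run shown in this article ended up with 2933 training and 737 evaluation images, about 80/20. If a reproducible split is wanted, one option is to seed the random generator. The helpers below are a hypothetical sketch of the same per-file rule using a seeded `random.Random`. They work on in-memory `(path, label)` pairs rather than on the file system, and format lines as `path<TAB>label` like train.txt and eval.txt.

```python
import random


def split_samples(samples, train_ratio=4.0 / 5, seed=None):
    """Assign each (path, label) pair to train or eval with one draw per sample,
    using the same rule as the script: uniform(0, 1) <= train_ratio -> train."""
    rng = random.Random(seed)  # a seeded generator makes the split reproducible
    train, evaluation = [], []
    for sample in samples:
        if rng.uniform(0, 1) <= train_ratio:
            train.append(sample)
        else:
            evaluation.append(sample)
    return train, evaluation


def to_list_lines(samples):
    """Format pairs as the 'path<TAB>label' lines used by train.txt / eval.txt."""
    return ["{0}\t{1}\n".format(path, label) for path, label in samples]


if __name__ == "__main__":
    # Hypothetical file names; 3670 = 2933 + 737 images in the run above
    samples = [("img_{0}.jpg".format(i), i % 5) for i in range(3670)]
    train, evaluation = split_samples(samples, seed=2020)
    print(len(train), len(evaluation))
    print(to_list_lines(train[:1]))
```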

Main Training Script

```python
# -*- coding: UTF-8 -*-
"""
Train a common vision backbone network for a classification task.
The training images and the class file label_list.txt must be in the same folder.
The program first reads label_list.txt and train.txt to get the number of classes and images.
"""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function
import os
import sys
import numpy as np
import time
import math
import paddle
import paddle.fluid as fluid
import codecs
import logging

from paddle.fluid.initializer import MSRA
from paddle.fluid.initializer import Uniform
from paddle.fluid.param_attr import ParamAttr
from PIL import Image
from PIL import ImageEnhance

train_parameters = {
    "input_size": [3, 224, 224],
    "class_dim": -1,  # number of classes, filled in when the custom reader is initialized
    "image_count": -1,  # number of training images, filled in when the custom reader is initialized
    "label_dict": {},
    "data_dir": "data/data2815",  # where the training data is stored
    "train_file_list": "train.txt",
    "label_file": "label_list.txt",
    "save_freeze_dir": "./freeze-model",
    "save_persistable_dir": "./persistable-params",
    "continue_train": False,  # resume from the last saved parameters; takes priority over the pretrained model
    "pretrained": True,  # whether to start from the pretrained model
    "pretrained_dir": "data/data6591/MobileNetV1_pretrained",
    "mode": "train",
    "num_epochs": 5,
    "train_batch_size": 128,
    "mean_rgb": [127.5, 127.5, 127.5],  # per-channel mean; normally computed from the training data, here just the midpoint
    "use_gpu": True,
    "image_enhance_strategy": {  # data augmentation settings
        "need_distort": True,  # random color distortion
        "need_rotate": True,  # random rotation
        "need_crop": True,  # random crop
        "need_flip": True,  # random horizontal flip
        "hue_prob": 0.5,
        "hue_delta": 18,
        "contrast_prob": 0.5,
        "contrast_delta": 0.5,
        "saturation_prob": 0.5,
        "saturation_delta": 0.5,
        "brightness_prob": 0.5,
        "brightness_delta": 0.125
    },
    "early_stop": {
        "sample_frequency": 50,
        "successive_limit": 3,
        "good_acc1": 0.92
    },
    "rsm_strategy": {
        "learning_rate": 0.0008,
        "lr_epochs": [20, 40, 60, 80, 100],
        "lr_decay": [1, 0.5, 0.25, 0.1, 0.01, 0.002]
    }
}


class MobileNet():
    def __init__(self):
        pass

    def name(self):
        """
        Return the network name
        """
        return 'mobile-net'

    def net(self, input, class_dim=1000, scale=1.0):
        # conv1: 112x112
        input = self.conv_bn_layer(
            input, filter_size=3, channels=3, num_filters=int(32 * scale),
            stride=2, padding=1, name="conv1")

        # 56x56
        input = self.depthwise_separable(
            input, num_filters1=32, num_filters2=64, num_groups=32,
            stride=1, scale=scale, name="conv2_1")
        input = self.depthwise_separable(
            input, num_filters1=64, num_filters2=128, num_groups=64,
            stride=2, scale=scale, name="conv2_2")

        # 28x28
        input = self.depthwise_separable(
            input, num_filters1=128, num_filters2=128, num_groups=128,
            stride=1, scale=scale, name="conv3_1")
        input = self.depthwise_separable(
            input, num_filters1=128, num_filters2=256, num_groups=128,
            stride=2, scale=scale, name="conv3_2")

        # 14x14
        input = self.depthwise_separable(
            input, num_filters1=256, num_filters2=256, num_groups=256,
            stride=1, scale=scale, name="conv4_1")
        input = self.depthwise_separable(
            input, num_filters1=256, num_filters2=512, num_groups=256,
            stride=2, scale=scale, name="conv4_2")

        # 14x14
        for i in range(5):
            input = self.depthwise_separable(
                input, num_filters1=512, num_filters2=512, num_groups=512,
                stride=1, scale=scale, name="conv5" + "_" + str(i + 1))

        # 7x7
        input = self.depthwise_separable(
            input, num_filters1=512, num_filters2=1024, num_groups=512,
            stride=2, scale=scale, name="conv5_6")
        input = self.depthwise_separable(
            input, num_filters1=1024, num_filters2=1024, num_groups=1024,
            stride=1, scale=scale, name="conv6")

        input = fluid.layers.pool2d(
            input=input, pool_size=0, pool_stride=1, pool_type='avg', global_pooling=True)

        output = fluid.layers.fc(input=input, size=class_dim, act='softmax',
                                 param_attr=ParamAttr(initializer=MSRA(), name="fc7_weights"),
                                 bias_attr=ParamAttr(name="fc7_offset"))
        return output

    def conv_bn_layer(self, input, filter_size, num_filters, stride, padding,
                      channels=None, num_groups=1, act='relu', use_cudnn=True, name=None):
        conv = fluid.layers.conv2d(
            input=input,
            num_filters=num_filters,
            filter_size=filter_size,
            stride=stride,
            padding=padding,
            groups=num_groups,
            act=None,
            use_cudnn=use_cudnn,
            param_attr=ParamAttr(initializer=MSRA(), name=name + "_weights"),
            bias_attr=False)
        bn_name = name + "_bn"
        return fluid.layers.batch_norm(
            input=conv,
            act=act,
            param_attr=ParamAttr(name=bn_name + "_scale"),
            bias_attr=ParamAttr(name=bn_name + "_offset"),
            moving_mean_name=bn_name + '_mean',
            moving_variance_name=bn_name + '_variance')

    def depthwise_separable(self, input, num_filters1, num_filters2, num_groups, stride, scale, name=None):
        # DepthWise Conv: groups == channels, so every channel gets its own 3x3 kernel
        depthwise_conv = self.conv_bn_layer(
            input=input, filter_size=3, num_filters=int(num_filters1 * scale),
            stride=stride, padding=1, num_groups=int(num_groups * scale),
            use_cudnn=False, name=name + "_dw")
        # PointWise Conv: 1x1 convolution that mixes channels
        pointwise_conv = self.conv_bn_layer(
            input=depthwise_conv, filter_size=1, num_filters=int(num_filters2 * scale),
            stride=1, padding=0, name=name + "_sep")
        return pointwise_conv


def init_log_config():
    """
    Initialize logging
    """
    global logger
    logger = logging.getLogger()
    logger.setLevel(logging.INFO)
    log_path = os.path.join(os.getcwd(), 'logs')
    if not os.path.exists(log_path):
        os.makedirs(log_path)
    log_name = os.path.join(log_path, 'train.log')
    sh = logging.StreamHandler()
    fh = logging.FileHandler(log_name, mode='w')
    fh.setLevel(logging.DEBUG)
    formatter = logging.Formatter("%(asctime)s - %(filename)s[line:%(lineno)d] - %(levelname)s: %(message)s")
    fh.setFormatter(formatter)
    sh.setFormatter(formatter)
    logger.addHandler(sh)
    logger.addHandler(fh)


def init_train_parameters():
    """
    Initialize training parameters, mainly the number of images and classes
    """
    train_file_list = os.path.join(train_parameters['data_dir'], train_parameters['train_file_list'])
    label_list = os.path.join(train_parameters['data_dir'], train_parameters['label_file'])
    index = 0
    with codecs.open(label_list, encoding='utf-8') as flist:
        lines = [line.strip() for line in flist]
        for line in lines:
            parts = line.strip().split()
            train_parameters['label_dict'][parts[1]] = int(parts[0])
            index += 1
        train_parameters['class_dim'] = index
    with codecs.open(train_file_list, encoding='utf-8') as flist:
        lines = [line.strip() for line in flist]
        train_parameters['image_count'] = len(lines)


def resize_img(img, target_size):
    """
    Resize the image to target_size ([C, H, W]), ignoring its aspect ratio
    """
    img = img.resize((target_size[2], target_size[1]), Image.BILINEAR)  # PIL expects (width, height)
    return img


def random_crop(img, scale=[0.08, 1.0], ratio=[3. / 4., 4. / 3.]):
    """
    Crop a region with random relative area (scale) and aspect ratio (ratio), then resize it to input_size
    """
    aspect_ratio = math.sqrt(np.random.uniform(*ratio))
    w = 1. * aspect_ratio
    h = 1. / aspect_ratio

    bound = min((float(img.size[0]) / img.size[1]) / (w**2),
                (float(img.size[1]) / img.size[0]) / (h**2))
    scale_max = min(scale[1], bound)
    scale_min = min(scale[0], bound)

    target_area = img.size[0] * img.size[1] * np.random.uniform(scale_min, scale_max)
    target_size = math.sqrt(target_area)
    w = int(target_size * w)
    h = int(target_size * h)

    i = np.random.randint(0, img.size[0] - w + 1)
    j = np.random.randint(0, img.size[1] - h + 1)

    img = img.crop((i, j, i + w, j + h))
    img = img.resize((train_parameters['input_size'][2], train_parameters['input_size'][1]), Image.BILINEAR)
    return img


def rotate_image(img):
    """
    Augmentation: rotate by a random angle in [-14, 14] degrees
    """
    angle = np.random.randint(-14, 15)
    img = img.rotate(angle)
    return img


def random_brightness(img):
    """
    Augmentation: random brightness adjustment
    """
    prob = np.random.uniform(0, 1)
    if prob < train_parameters['image_enhance_strategy']['brightness_prob']:
        brightness_delta = train_parameters['image_enhance_strategy']['brightness_delta']
        delta = np.random.uniform(-brightness_delta, brightness_delta) + 1
        img = ImageEnhance.Brightness(img).enhance(delta)
    return img


def random_contrast(img):
    """
    Augmentation: random contrast adjustment
    """
    prob = np.random.uniform(0, 1)
    if prob < train_parameters['image_enhance_strategy']['contrast_prob']:
        contrast_delta = train_parameters['image_enhance_strategy']['contrast_delta']
        delta = np.random.uniform(-contrast_delta, contrast_delta) + 1
        img = ImageEnhance.Contrast(img).enhance(delta)
    return img


def random_saturation(img):
    """
    Augmentation: random saturation adjustment
    """
    prob = np.random.uniform(0, 1)
    if prob < train_parameters['image_enhance_strategy']['saturation_prob']:
        saturation_delta = train_parameters['image_enhance_strategy']['saturation_delta']
        delta = np.random.uniform(-saturation_delta, saturation_delta) + 1
        img = ImageEnhance.Color(img).enhance(delta)
    return img


def random_hue(img):
    """
    Augmentation: random hue shift
    """
    prob = np.random.uniform(0, 1)
    if prob < train_parameters['image_enhance_strategy']['hue_prob']:
        hue_delta = train_parameters['image_enhance_strategy']['hue_delta']
        delta = np.random.uniform(-hue_delta, hue_delta)
        img_hsv = np.array(img.convert('HSV'))
        # PIL stores hue as uint8 in 0..255; shift it with wrap-around instead of overflowing
        img_hsv[:, :, 0] = (img_hsv[:, :, 0].astype('int32') + int(delta)) % 256
        img = Image.fromarray(img_hsv, mode='HSV').convert('RGB')
    return img


def distort_color(img):
    """
    Random color augmentation, applied in one of two orders (or not at all)
    """
    prob = np.random.uniform(0, 1)
    # Apply different distort order
    if prob < 0.35:
        img = random_brightness(img)
        img = random_contrast(img)
        img = random_saturation(img)
        img = random_hue(img)
    elif prob < 0.7:
        img = random_brightness(img)
        img = random_saturation(img)
        img = random_hue(img)
        img = random_contrast(img)
    return img


def custom_image_reader(file_list, data_dir, mode):
    """
    Custom image reader
    :param file_list: list file, "path<TAB>label" per line (train/val) or one path per line (test)
    :param data_dir: base directory for the paths in test mode
    :param mode: 'train', 'val' or 'test'
    :return: a reader function that yields samples
    """
    with codecs.open(file_list) as flist:
        lines = [line.strip() for line in flist]

    def reader():
        np.random.shuffle(lines)
        for line in lines:
            if mode == 'train' or mode == 'val':
                img_path, label = line.split()
                try:
                    img = Image.open(img_path)
                    if img.mode != 'RGB':
                        img = img.convert('RGB')
                    strategy = train_parameters['image_enhance_strategy']
                    if strategy['need_distort']:
                        img = distort_color(img)
                    if strategy['need_rotate']:
                        img = rotate_image(img)
                    if strategy['need_crop']:
                        img = random_crop(img)  # default scale/ratio; the crop is resized to input_size
                    else:
                        img = resize_img(img, train_parameters['input_size'])
                    if strategy['need_flip']:
                        mirror = int(np.random.uniform(0, 2))
                        if mirror == 1:
                            img = img.transpose(Image.FLIP_LEFT_RIGHT)
                    # HWC ---> CHW && normalize
                    img = np.array(img).astype('float32')
                    img -= train_parameters['mean_rgb']
                    img = img.transpose((2, 0, 1))  # HWC to CHW
                    img *= 0.007843  # scale pixel values to roughly [-1, 1]
                    yield img, int(label)
                except Exception:
                    pass  # skip images that fail to load or process
            elif mode == 'test':
                img_path = os.path.join(data_dir, line)
                img = Image.open(img_path)
                if img.mode != 'RGB':
                    img = img.convert('RGB')
                img = resize_img(img, train_parameters['input_size'])
                # HWC ---> CHW && normalize
                img = np.array(img).astype('float32')
                img -= train_parameters['mean_rgb']
                img = img.transpose((2, 0, 1))  # HWC to CHW
                img *= 0.007843  # scale pixel values to roughly [-1, 1]
                yield img

    return reader


def optimizer_rms_setting():
    """
    A step-wise learning rate schedule; suited to relatively large training sets
    """
    batch_size = train_parameters["train_batch_size"]
    iters = train_parameters["image_count"] // batch_size
    learning_strategy = train_parameters['rsm_strategy']
    lr = learning_strategy['learning_rate']

    boundaries = [i * iters for i in learning_strategy["lr_epochs"]]
    values = [i * lr for i in learning_strategy["lr_decay"]]

    optimizer = fluid.optimizer.RMSProp(
        learning_rate=fluid.layers.piecewise_decay(boundaries, values))

    return optimizer


def load_params(exe, program):
    if train_parameters['continue_train'] and os.path.exists(train_parameters['save_persistable_dir']):
        logger.info('load params from retrain model')
        # fluid.io.load_persistables(executor=exe,
        #                            dirname=train_parameters['save_persistable_dir'],
        #                            main_program=program)
        fluid.load(program, os.path.join(train_parameters['save_persistable_dir'], "checkpoint"), exe)
    elif train_parameters['pretrained'] and os.path.exists(train_parameters['pretrained_dir']):
        logger.info('load params from pretrained model')

        def if_exist(var):
            return os.path.exists(os.path.join(train_parameters['pretrained_dir'], var.name))

        fluid.io.load_vars(exe, train_parameters['pretrained_dir'], main_program=program,
                           predicate=if_exist)
        # fluid.load(program, train_parameters['pretrained_dir'], exe)


def print_func(var):
    logger.info("in py func type: {0}".format(type(var)))
    print(np.array(var))
    sys.stdout.flush()


def focal_loss(pred, label, gama):
    """
    Focal loss -(1 - p_t)^gama * log(p_t); pred holds softmax probabilities
    """
    one_hot = fluid.layers.one_hot(label, train_parameters['class_dim'])
    cross_entropy = one_hot * fluid.layers.log(pred)
    cross_entropy = fluid.layers.reduce_sum(cross_entropy, dim=-1)  # log(p_t)
    weight = -1.0 * one_hot * fluid.layers.pow((1.0 - pred), gama)
    weight = fluid.layers.reduce_sum(weight, dim=-1)  # -(1 - p_t)^gama
    return weight * cross_entropy


def train():
    train_prog = fluid.Program()
    train_startup = fluid.Program()
    logger.info("create prog success")
    logger.info("train config: %s", str(train_parameters))
    logger.info("build input custom reader and data feeder")
    file_list = os.path.join(train_parameters['data_dir'], "train.txt")
    mode = train_parameters['mode']
    batch_reader = paddle.batch(custom_image_reader(file_list, train_parameters['data_dir'], mode),
                                batch_size=train_parameters['train_batch_size'])
    batch_reader = paddle.reader.shuffle(batch_reader, train_parameters['train_batch_size'])
    place = fluid.CUDAPlace(0) if train_parameters['use_gpu'] else fluid.CPUPlace()
    # Placeholders for the input data
    img = fluid.data(name='img', shape=[-1] + train_parameters['input_size'], dtype='float32')
    label = fluid.data(name='label', shape=[-1] + [1], dtype='int64')
    feeder = fluid.DataFeeder(feed_list=[img, label], place=place)

    if not os.path.exists(train_parameters['save_persistable_dir']):
        os.mkdir(train_parameters['save_persistable_dir'])

    # Select the network
    logger.info("build newwork")
    model = MobileNet()
    out = model.net(input=img, class_dim=train_parameters['class_dim'])
    cost = fluid.layers.cross_entropy(out, label)
    # cost = focal_loss(out, label, 2.0)
    avg_cost = fluid.layers.mean(x=cost)
    acc_top1 = fluid.layers.accuracy(input=out, label=label, k=1)
    # Select the optimizer
    optimizer = optimizer_rms_setting()
    optimizer.minimize(avg_cost)
    exe = fluid.Executor(place)

    main_program = fluid.default_main_program()
    exe.run(fluid.default_startup_program())
    train_fetch_list = [avg_cost.name, acc_top1.name, out.name]

    load_params(exe, main_program)

    # Main training loop
    stop_strategy = train_parameters['early_stop']
    successive_limit = stop_strategy['successive_limit']
    sample_freq = stop_strategy['sample_frequency']
    good_acc1 = stop_strategy['good_acc1']
    successive_count = 0
    stop_train = False
    total_batch_count = 0
    for pass_id in range(train_parameters["num_epochs"]):
        logger.info("current pass: %d, start read image", pass_id)
        batch_id = 0
        for step_id, data in enumerate(batch_reader()):
            t1 = time.time()
            # logger.info("data size:{0}".format(len(data)))
            loss, acc1, pred_ot = exe.run(main_program,
                                          feed=feeder.feed(data),
                                          fetch_list=train_fetch_list)
            t2 = time.time()
            batch_id += 1
            total_batch_count += 1
            period = t2 - t1
            loss = np.mean(np.array(loss))
            acc1 = np.mean(np.array(acc1))
            if batch_id % 10 == 0:
                logger.info("Pass {0}, trainbatch {1}, loss {2}, acc1 {3}, time {4}".format(
                    pass_id, batch_id, loss, acc1, "%2.2f sec" % period))
            # Simple early stopping: stop once the batch accuracy reaches the
            # threshold on enough consecutive batches
            if acc1 >= good_acc1:
                successive_count += 1
                logger.info("current acc1 {0} meets good {1}, successive count {2}".format(
                    acc1, good_acc1, successive_count))
                fluid.io.save_inference_model(dirname=train_parameters['save_freeze_dir'],
                                              feeded_var_names=['img'],
                                              target_vars=[out],
                                              main_program=main_program,
                                              executor=exe)
                if successive_count >= successive_limit:
                    logger.info("end training")
                    stop_train = True
                    break
            else:
                successive_count = 0

            # Periodic checkpoint to limit the loss from an unexpected stop
            if total_batch_count % sample_freq == 0:
                logger.info("temp save {0} batch train result, current acc1 {1}".format(total_batch_count, acc1))
                fluid.save(main_program, os.path.join(train_parameters['save_persistable_dir'], "checkpoint"))
        if stop_train:
            break
    logger.info("training till last epcho, end training")
    fluid.save(main_program, os.path.join(train_parameters['save_persistable_dir'], "checkpoint"))
    fluid.io.save_inference_model(dirname=train_parameters['save_freeze_dir'],
                                  feeded_var_names=['img'],
                                  target_vars=[out],
                                  main_program=main_program,
                                  executor=exe)


if __name__ == '__main__':
    init_log_config()
    init_train_parameters()
    train()
```

```
2020-02-12 15:03:28,867-INFO: create prog success
2020-02-12 15:03:28,870-INFO: train config: {'input_size': [3, 224, 224], 'class_dim': 5, 'image_count': 2933, 'label_dict': {'daisy': 0, 'dandelion': 1, 'roses': 2, 'sunflowers': 3, 'tulips': 4}, 'data_dir': 'data/data2815', 'train_file_list': 'train.txt', 'label_file': 'label_list.txt', 'save_freeze_dir': './freeze-model', 'save_persistable_dir': './persistable-params', 'continue_train': False, 'pretrained': True, 'pretrained_dir': 'data/data6591/MobileNetV1_pretrained', 'mode': 'train', 'num_epochs': 5, 'train_batch_size': 128, 'mean_rgb': [127.5, 127.5, 127.5], 'use_gpu': True, 'image_enhance_strategy': {'need_distort': True, 'need_rotate': True, 'need_crop': True, 'need_flip': True, 'hue_prob': 0.5, 'hue_delta': 18, 'contrast_prob': 0.5, 'contrast_delta': 0.5, 'saturation_prob': 0.5, 'saturation_delta': 0.5, 'brightness_prob': 0.5, 'brightness_delta': 0.125}, 'early_stop': {'sample_frequency': 50, 'successive_limit': 3, 'good_acc1': 0.92}, 'rsm_strategy': {'learning_rate': 0.0008, 'lr_epochs': [20, 40, 60, 80, 100], 'lr_decay': [1, 0.5, 0.25, 0.1, 0.01, 0.002]}}
2020-02-12 15:03:28,871-INFO: build input custom reader and data feeder
2020-02-12 15:03:28,874-INFO: build newwork
2020-02-12 15:03:31,991-INFO: load params from pretrained model
2020-02-12 15:03:32,094-INFO: current pass: 0, start read image
2020-02-12 15:04:02,157-INFO: Pass 0, trainbatch 10, loss 0.4433901906013489, acc1 0.8671875, time 0.25 sec
2020-02-12 15:04:04,414-INFO: current acc1 0.921875 meets good 0.92, successive count 1
2020-02-12 15:04:05,051-INFO: Pass 0, trainbatch 20, loss 0.28840866684913635, acc1 0.8828125, time 0.37 sec
2020-02-12 15:04:05,302-INFO: current acc1 0.921875 meets good 0.92, successive count 1
2020-02-12 15:04:06,063-INFO: current acc1 0.9296875 meets good 0.92, successive count 1
2020-02-12 15:04:06,302-INFO: current pass: 1, start read image
2020-02-12 15:04:33,310-INFO: current acc1 0.9375 meets good 0.92, successive count 2
2020-02-12 15:04:33,821-INFO: current acc1 0.9296875 meets good 0.92, successive count 3
2020-02-12 15:04:34,045-INFO: end training
2020-02-12 15:04:34,056-INFO: training till last epcho, end training
```
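`optimizer_rms_setting` converts `lr_epochs` into step boundaries by multiplying them by `image_count // train_batch_size`, then passes the boundaries to `fluid.layers.piecewise_decay`. The sketch below works through those numbers in plain Python. It assumes piecewise_decay's documented rule: `values[i]` applies while the global step is below `boundaries[i]`, and the last value applies after that. The helper names are hypothetical.

With 2933 training images (from the log above) and a batch size of 128, one "epoch" is 22 iterations, so the boundaries fall at 440, 880, 1320, 1760 and 2200 steps. With `num_epochs` set to 5, training runs at most about 5 × 23 = 115 batches, so the learning rate stays at 0.0008 for the whole run. The decay only matters for much longer training.

```python
def piecewise_lr(step, boundaries, values):
    """Learning rate at a global step: values[i] while step < boundaries[i],
    values[-1] once the last boundary has been passed."""
    assert len(values) == len(boundaries) + 1
    for boundary, value in zip(boundaries, values):
        if step < boundary:
            return value
    return values[-1]


def rms_schedule(image_count, batch_size, learning_rate, lr_epochs, lr_decay):
    """Mirror the boundary/value arithmetic of optimizer_rms_setting()."""
    iters = image_count // batch_size
    boundaries = [e * iters for e in lr_epochs]
    values = [d * learning_rate for d in lr_decay]
    return boundaries, values


if __name__ == "__main__":
    boundaries, values = rms_schedule(2933, 128, 0.0008,
                                      [20, 40, 60, 80, 100],
                                      [1, 0.5, 0.25, 0.1, 0.01, 0.002])
    print(boundaries)  # [440, 880, 1320, 1760, 2200]
    last_step = 5 * 23 - 1  # 5 epochs of ceil(2933 / 128) = 23 batches, counted from 0
    print(piecewise_lr(last_step, boundaries, values))  # 0.0008
```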

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function
import os
import time
import codecs
import numpy as np
import paddle.fluid as fluid
from PIL import Image

target_size = [3, 224, 224]
mean_rgb = [127.5, 127.5, 127.5]
data_dir = "data/data2815"
eval_file = "eval.txt"
use_gpu = True
place = fluid.CUDAPlace(0) if use_gpu else fluid.CPUPlace()
exe = fluid.Executor(place)
save_freeze_dir = "./freeze-model"
[inference_program, feed_target_names, fetch_targets] = fluid.io.load_inference_model(
    dirname=save_freeze_dir, executor=exe)
# print(fetch_targets)


def crop_image(img, target_size):
    # Center crop to target_size ([C, H, W]); the photo is not resized first
    width, height = img.size
    w_start = (width - target_size[2]) / 2
    h_start = (height - target_size[1]) / 2
    w_end = w_start + target_size[2]
    h_end = h_start + target_size[1]
    img = img.crop((w_start, h_start, w_end, h_end))
    return img


def resize_img(img, target_size):
    ret = img.resize((target_size[2], target_size[1]), Image.BILINEAR)  # PIL expects (width, height)
    return ret


def read_image(img_path):
    img = Image.open(img_path)
    if img.mode != 'RGB':
        img = img.convert('RGB')
    img = crop_image(img, target_size)
    img = np.array(img).astype('float32')
    img -= mean_rgb
    img = img.transpose((2, 0, 1))  # HWC to CHW
    img *= 0.007843
    img = img[np.newaxis, :]  # add the batch dimension
    return img


def infer(image_path):
    tensor_img = read_image(image_path)
    label = exe.run(inference_program, feed={feed_target_names[0]: tensor_img}, fetch_list=fetch_targets)
    return np.argmax(label)  # index of the most probable class


def eval_all():
    eval_file_path = os.path.join(data_dir, eval_file)
    total_count = 0
    right_count = 0
    with codecs.open(eval_file_path, encoding='utf-8') as flist:
        lines = [line.strip() for line in flist]
        t1 = time.time()
        for line in lines:
            total_count += 1
            parts = line.strip().split()
            result = infer(parts[0])
            # print("infer result:{0} answer:{1}".format(result, parts[1]))
            if str(result) == parts[1]:
                right_count += 1
        period = time.time() - t1
        print("total eval count:{0} cost time:{1} predict accuracy:{2}".format(
            total_count, "%2.2f sec" % period, right_count / total_count))


if __name__ == '__main__':
    eval_all()
```

```
total eval count:737 cost time:13.05 sec predict accuracy:0.9199457259158752
```
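Both scripts normalize pixels the same way. They subtract 127.5 and multiply by 0.007843, which is approximately 1/127.5, so values in [0, 255] map to roughly [-1, 1] before the HWC to CHW transpose. At evaluation time, `crop_image` takes a centered 224×224 window from the raw photo without resizing it first. The sketch below mirrors that arithmetic in plain Python. `normalize_pixel`, `hwc_to_chw` and `center_crop_box` are hypothetical names for illustration, not functions from the scripts.

```python
MEAN = 127.5
SCALE = 0.007843  # constant used in the scripts, close to 1 / 127.5 = 0.0078431...


def normalize_pixel(value):
    """Same arithmetic as `img -= mean_rgb; img *= 0.007843` for a single value."""
    return (value - MEAN) * SCALE


def hwc_to_chw(img):
    """Nested-list equivalent of ndarray.transpose((2, 0, 1))."""
    h, w, c = len(img), len(img[0]), len(img[0][0])
    return [[[img[i][j][k] for j in range(w)] for i in range(h)] for k in range(c)]


def center_crop_box(width, height, target_size):
    """(left, upper, right, lower) box computed like crop_image; target_size is [C, H, W]."""
    w_start = (width - target_size[2]) / 2
    h_start = (height - target_size[1]) / 2
    return (w_start, h_start, w_start + target_size[2], h_start + target_size[1])


if __name__ == "__main__":
    print(round(normalize_pixel(0), 4), round(normalize_pixel(255), 4))  # -1.0 1.0
    pixel_rows = [[[0, 128, 255], [255, 128, 0]]]  # a 1 x 2 RGB image
    print(hwc_to_chw(pixel_rows))  # [[[0, 255]], [[128, 128]], [[255, 0]]]
    print(center_crop_box(500, 333, [3, 224, 224]))  # (138.0, 54.5, 362.0, 278.5)
```

An odd size difference, as in the 333-pixel height above, gives half-pixel box coordinates.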

Try this project hands-on in AI Studio with one click: https://aistudio.baidu.com/aistudio/projectdetail/169427


Source: oschina

Link: https://my.oschina.net/u/4067628/blog/3235637