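1. What is YARN

YARN (Yet Another Resource Negotiator) is a new resource manager for Hadoop. It is a general-purpose resource management system that provides unified resource management and scheduling for the applications that run on top of it.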

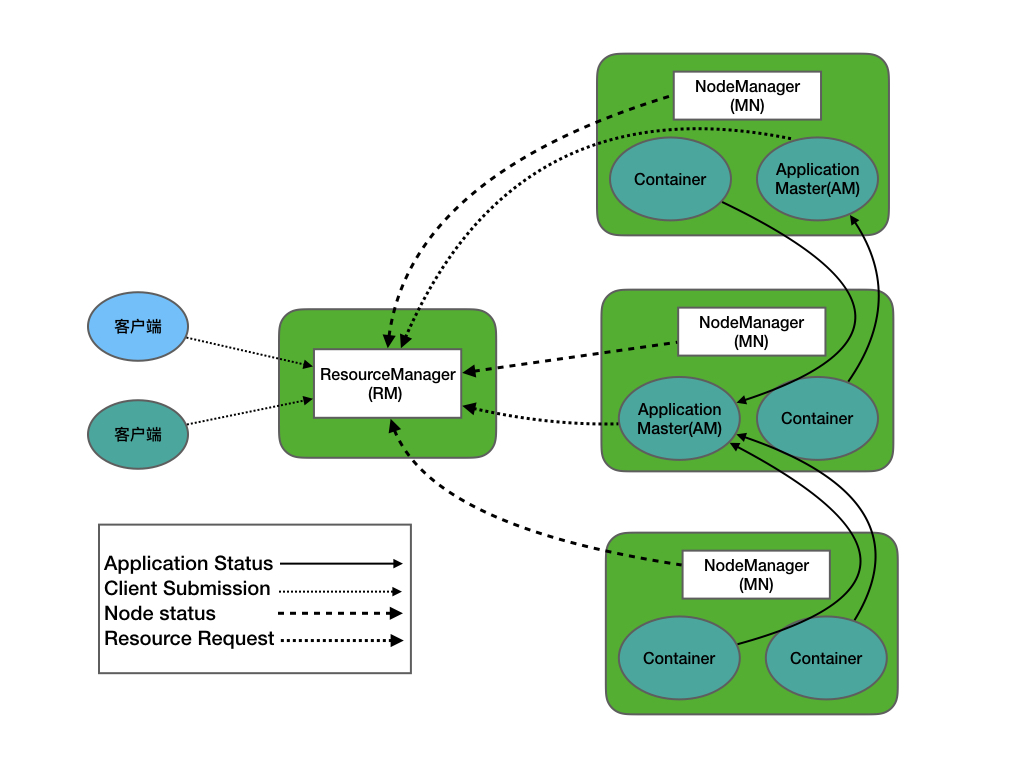

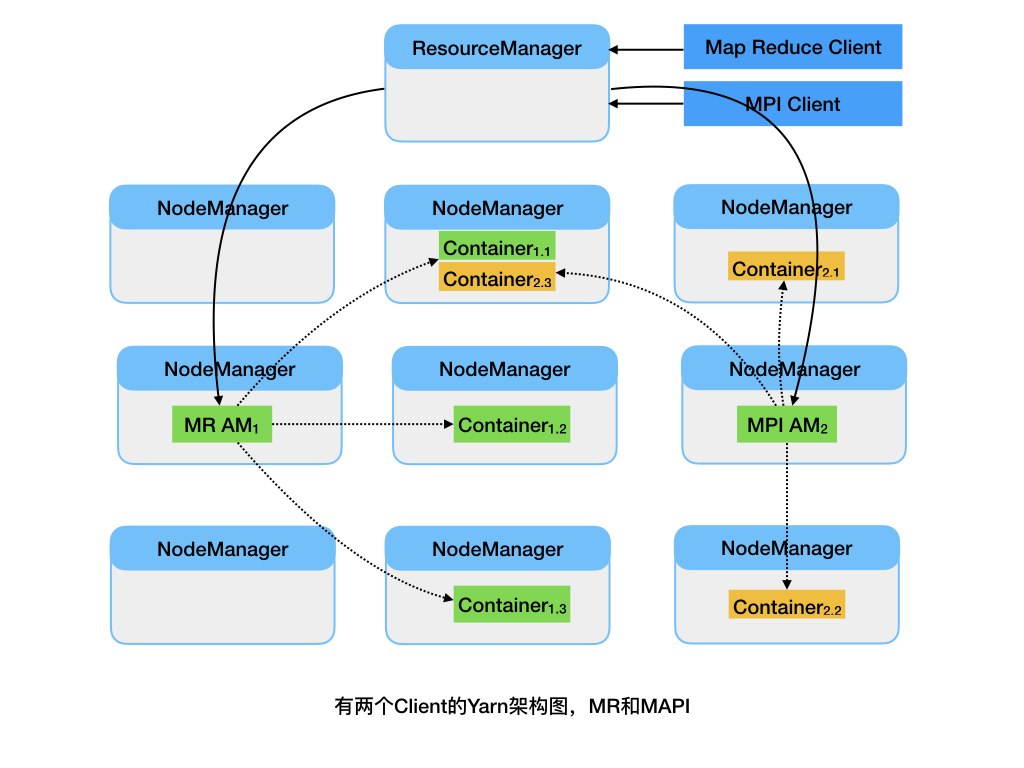

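2. YARN architecture

- ResourceManager (RM): a pure scheduler, dedicated to allocating and managing the resources available in the cluster.

- Container: the abstraction for the resources allocated to a specific application, including memory, CPU, and disk.

- NodeManager (NM): manages the local resources of a node, which includes launching application Containers, monitoring their resource usage, and reporting it to the RM.

- ApplicationMaster (AM): an instance of a framework-specific library. It negotiates resources with the RM and works with the NMs to run and monitor Containers and their resource consumption. The AM itself also runs as a Container.

- Client: an instance in the cluster that can submit applications to the RM and that specifies the type of AM needed to run the application.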
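To make the division of roles concrete, here is a minimal toy model in plain Java. It is not the Hadoop API: `ToyYarn`, `Node`, `ResourceManager`, and the first-fit placement are all assumptions made for illustration. It only shows the idea that nodes report capacity, the RM hands out Containers against that capacity, and the AM occupies a Container like any other task.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Toy model of the YARN roles; illustrative only, not the Hadoop API. */
class ToyYarn {

    /** A Container: a slice of memory and vcores on one node. */
    static final class Container {
        final String node;
        final int memoryMb;
        final int vCores;

        Container(String node, int memoryMb, int vCores) {
            this.node = node;
            this.memoryMb = memoryMb;
            this.vCores = vCores;
        }

        @Override
        public String toString() {
            return "Container[" + node + ", " + memoryMb + " MB, " + vCores + " vcores]";
        }
    }

    /** NodeManager stand-in: tracks the free resources of one node. */
    static final class Node {
        final String name;
        int freeMemoryMb;
        int freeVCores;

        Node(String name, int memoryMb, int vCores) {
            this.name = name;
            this.freeMemoryMb = memoryMb;
            this.freeVCores = vCores;
        }
    }

    /** ResourceManager stand-in: hands out Containers from registered nodes. */
    static final class ResourceManager {
        private final Map<String, Node> nodes = new LinkedHashMap<>();

        void register(Node node) {
            nodes.put(node.name, node);
        }

        /** First-fit placement (an assumption); returns null if no node has room. */
        Container allocate(int memoryMb, int vCores) {
            for (Node n : nodes.values()) {
                if (n.freeMemoryMb >= memoryMb && n.freeVCores >= vCores) {
                    n.freeMemoryMb -= memoryMb;
                    n.freeVCores -= vCores;
                    return new Container(n.name, memoryMb, vCores);
                }
            }
            return null;
        }
    }

    public static void main(String[] args) {
        ResourceManager rm = new ResourceManager();
        rm.register(new Node("node-1", 4096, 4));
        rm.register(new Node("node-2", 8192, 8));

        Container am = rm.allocate(1024, 1);     // the AM runs in a Container too
        Container worker = rm.allocate(4096, 2); // no longer fits on node-1
        System.out.println(am);
        System.out.println(worker);
    }
}
```

The real RM uses pluggable schedulers rather than first-fit, so treat the placement logic here purely as a stand-in.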

3. How to write a YARN application


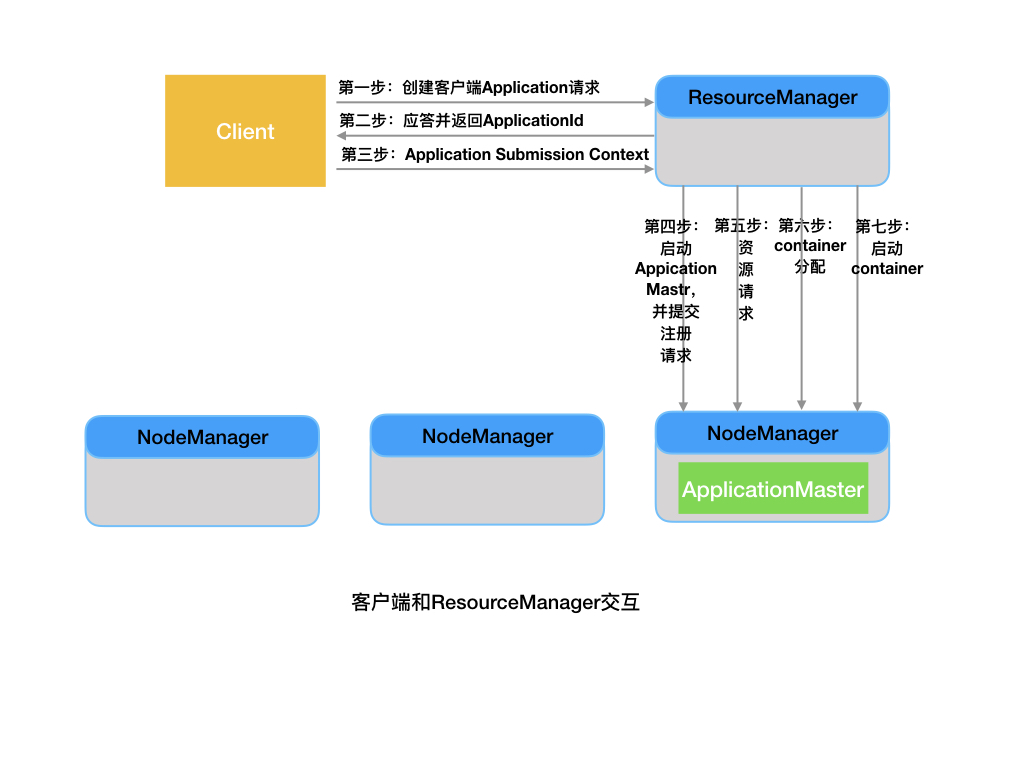

Client

- Initialize and start a YarnClient

      Configuration yarnConfig = new YarnConfiguration(getConf());
      YarnClient client = YarnClient.createYarnClient();
      client.init(yarnConfig);
      client.start();

- Create an application

      YarnClientApplication app = client.createApplication();
      GetNewApplicationResponse appResponse = app.getNewApplicationResponse();

- Set up the application submission context

      // 1. Basic information for the application submission context
      ApplicationSubmissionContext appContext = app.getApplicationSubmissionContext();
      appContext.setApplicationId(appResponse.getApplicationId());
      appContext.setApplicationName(config.getProperty("app.name"));
      appContext.setApplicationType(config.getProperty("app.type"));
      appContext.setApplicationTags(
          new LinkedHashSet<>(Arrays.asList(config.getProperty("app.tags").split(","))));
      // queue: the default queue is "default"
      appContext.setQueue(config.getProperty("app.queue"));
      appContext.setPriority(
          Priority.newInstance(Integer.parseInt(config.getProperty("app.priority"))));
      appContext.setResource(Resource.newInstance(
          Integer.parseInt(config.getProperty("am.memory")),
          Integer.parseInt(config.getProperty("am.vCores"))));

      // 2. Launch context for the AM container
      ContainerLaunchContext amContainer = Records.newRecord(ContainerLaunchContext.class);

      // 3. Local resources for the AM
      Map<String, LocalResource> amLocalResources = new LinkedHashMap<>();
      LocalResource drillArchive = Records.newRecord(LocalResource.class);
      drillArchive.setResource(ConverterUtils.getYarnUrlFromPath(drillArchiveFileStatus.getPath()));
      drillArchive.setSize(drillArchiveFileStatus.getLen());
      drillArchive.setTimestamp(drillArchiveFileStatus.getModificationTime());
      drillArchive.setType(LocalResourceType.ARCHIVE);
      drillArchive.setVisibility(LocalResourceVisibility.PUBLIC);
      amLocalResources.put(config.getProperty("drill.archive.name"), drillArchive);
      amContainer.setLocalResources(amLocalResources);

      // 4. Environment for the AM
      Map<String, String> amEnvironment = new LinkedHashMap<>();
      // add the Hadoop classpath
      for (String classpath : yarnConfig.getStrings(
          YarnConfiguration.YARN_APPLICATION_CLASSPATH,
          YarnConfiguration.DEFAULT_YARN_APPLICATION_CLASSPATH)) {
        Apps.addToEnvironment(amEnvironment, Environment.CLASSPATH.name(),
            classpath.trim(), ApplicationConstants.CLASS_PATH_SEPARATOR);
      }
      Apps.addToEnvironment(amEnvironment, Environment.CLASSPATH.name(),
          Environment.PWD.$() + File.separator + "*", ApplicationConstants.CLASS_PATH_SEPARATOR);
      // pass the client configuration to the AM as a Base64-encoded environment variable
      StringWriter sw = new StringWriter();
      config.store(sw, "");
      String configBase64Binary =
          DatatypeConverter.printBase64Binary(sw.toString().getBytes("UTF-8"));
      Apps.addToEnvironment(amEnvironment, "DRILL_ON_YARN_CONFIG", configBase64Binary,
          ApplicationConstants.CLASS_PATH_SEPARATOR);
      amContainer.setEnvironment(amEnvironment);

      // 5. Command that launches the AM
      List<String> commands = new ArrayList<>();
      commands.add(Environment.SHELL.$$());
      commands.add(config.getProperty("drill.archive.name") + "/bin/drill-am.sh");
      commands.add("1>" + ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/" + ApplicationConstants.STDOUT);
      commands.add("2>" + ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/" + ApplicationConstants.STDERR);
      StringBuilder amCommand = new StringBuilder();
      for (String str : commands) {
        amCommand.append(str).append(" ");
      }
      // drop the trailing space
      amCommand.setLength(amCommand.length() - " ".length());
      amContainer.setCommands(Collections.singletonList(amCommand.toString()));

      // 6. Security tokens
      if (UserGroupInformation.isSecurityEnabled()) {
        Credentials credentials = new Credentials();
        String tokenRenewer = yarnConfig.get(YarnConfiguration.RM_PRINCIPAL);
        final Token<?>[] tokens = fileSystem.addDelegationTokens(tokenRenewer, credentials);
        DataOutputBuffer dob = new DataOutputBuffer();
        credentials.writeTokenStorageToStream(dob);
        ByteBuffer fsTokens = ByteBuffer.wrap(dob.getData(), 0, dob.getLength());
        amContainer.setTokens(fsTokens);
      }

      appContext.setAMContainerSpec(amContainer);

- Submit the application

      client.submitApplication(appContext);
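The client code above hands its `Properties` configuration to the AM through the `DRILL_ON_YARN_CONFIG` environment variable, Base64-encoded. The sketch below shows that round trip in isolation, using only the JDK. The class name `ConfigEnvCodec` and the sample property values are hypothetical, and `java.util.Base64` is swapped in for the `DatatypeConverter` call used in the original snippet because `javax.xml.bind` is no longer bundled with recent JDKs. How the AM actually decodes the variable is not shown in the source, so the `decode` side is an assumption.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.Properties;

/** Hypothetical helper: Properties <-> Base64 string suitable for an environment variable. */
class ConfigEnvCodec {

    /** Client side: serialize the properties and Base64-encode the result. */
    static String encode(Properties config) {
        try {
            StringWriter sw = new StringWriter();
            config.store(sw, "");
            return Base64.getEncoder()
                    .encodeToString(sw.toString().getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** AM side (assumed): decode the environment value back into Properties. */
    static Properties decode(String encoded) {
        try {
            String text = new String(Base64.getDecoder().decode(encoded), StandardCharsets.UTF_8);
            Properties config = new Properties();
            config.load(new StringReader(text));
            return config;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Properties config = new Properties();
        config.setProperty("app.name", "drill-on-yarn"); // sample value, not from the source
        config.setProperty("app.queue", "default");

        String envValue = encode(config);       // what the client puts into the environment
        Properties restored = decode(envValue); // what the AM could read back
        System.out.println(restored.getProperty("app.name"));
    }
}
```

Encoding the whole file as one Base64 string avoids quoting problems with newlines and `=` characters in environment values, at the cost of a value that is not human-readable in the container's launch script.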

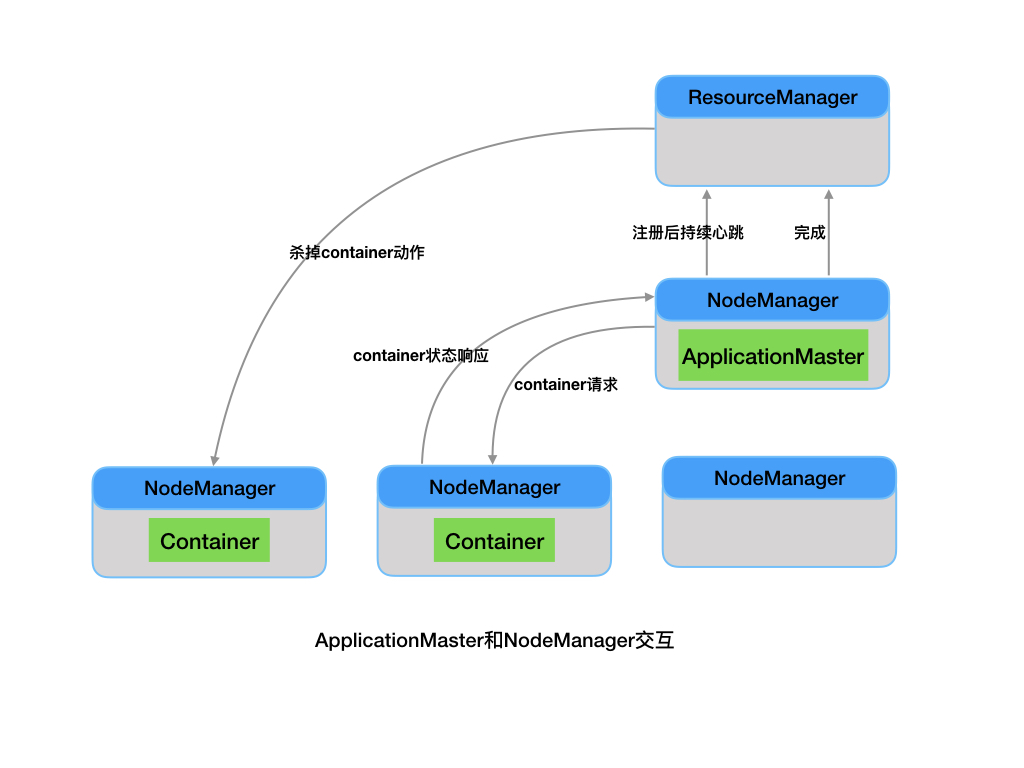

ApplicationMaster(AM)

- Initialize AMRMClientAsync

      YarnConfiguration yarnConfig = new YarnConfiguration();
      // 5000 is the heartbeat interval to the RM, in milliseconds
      AMRMClientAsync<ContainerRequest> amrmClientAsync =
          AMRMClientAsync.createAMRMClientAsync(5000, new AMRMCallbackHandler());
      amrmClientAsync.init(yarnConfig);
      amrmClientAsync.start();

- Initialize NMClientAsync

      YarnConfiguration yarnConfig = new YarnConfiguration();
      NMClientAsync nmClientAsync = NMClientAsync.createNMClientAsync(new NMCallbackHandler());
      nmClientAsync.init(yarnConfig);
      nmClientAsync.start();

- Register the ApplicationMaster (AM)

      // getLocalHost() returns an InetAddress, so take its host name
      String thisHostName = InetAddress.getLocalHost().getHostName();
      amrmClientAsync.registerApplicationMaster(thisHostName, 0, "");

- Add ContainerRequests

      // request one container on each reported node, with locality relaxation disabled
      for (NodeReport containerReport : containerReports) {
        ContainerRequest containerRequest = new ContainerRequest(
            capability, new String[] {containerReport.getNodeId().getHost()}, null, priority, false);
        amrmClientAsync.addContainerRequest(containerRequest);
      }

- Launch containers

      private static class AMRMCallbackHandler implements AMRMClientAsync.CallbackHandler {
        @Override
        public void onContainersAllocated(List<Container> containers) {
          for (Container container : containers) {
            ContainerLaunchContext containerContext = Records.newRecord(ContainerLaunchContext.class);

            // environment
            Map<String, String> containerEnvironment = new LinkedHashMap<>();
            // add the Hadoop classpath
            for (String classpath : yarnConfig.getStrings(
                YarnConfiguration.YARN_APPLICATION_CLASSPATH,
                YarnConfiguration.DEFAULT_YARN_APPLICATION_CLASSPATH)) {
              Apps.addToEnvironment(containerEnvironment, Environment.CLASSPATH.name(),
                  classpath.trim(), ApplicationConstants.CLASS_PATH_SEPARATOR);
            }
            Apps.addToEnvironment(containerEnvironment, Environment.CLASSPATH.name(),
                Environment.PWD.$() + File.separator + "*", ApplicationConstants.CLASS_PATH_SEPARATOR);
            containerContext.setEnvironment(containerEnvironment);

            // local resources
            Map<String, LocalResource> containerResources = new LinkedHashMap<>();
            LocalResource drillArchive = Records.newRecord(LocalResource.class);
            String drillArchivePath =
                appConfig.getProperty("fs.upload.dir") + appConfig.getProperty("drill.archive.name");
            Path path = new Path(drillArchivePath);
            FileStatus fileStatus = FileSystem.get(yarnConfig).getFileStatus(path);
            drillArchive.setResource(ConverterUtils.getYarnUrlFromPath(fileStatus.getPath()));
            drillArchive.setSize(fileStatus.getLen());
            drillArchive.setTimestamp(fileStatus.getModificationTime());
            drillArchive.setType(LocalResourceType.ARCHIVE);
            drillArchive.setVisibility(LocalResourceVisibility.PUBLIC);
            containerResources.put(appConfig.getProperty("drill.archive.name"), drillArchive);
            containerContext.setLocalResources(containerResources);

            // launch command
            List<String> commands = new ArrayList<>();
            commands.add(Environment.SHELL.$$());
            commands.add(appConfig.getProperty("drill.archive.name") + "/bin/drillbit.sh run");
            commands.add("1>" + ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/" + ApplicationConstants.STDOUT);
            commands.add("2>" + ApplicationConstants.LOG_DIR_EXPANSION_VAR + "/" + ApplicationConstants.STDERR);
            StringBuilder containerCommand = new StringBuilder();
            for (String str : commands) {
              containerCommand.append(str).append(" ");
            }
            // drop the trailing space
            containerCommand.setLength(containerCommand.length() - " ".length());
            containerContext.setCommands(Collections.singletonList(containerCommand.toString()));

            nmClientAsync.startContainerAsync(container, containerContext);
          }
        }

        // The other CallbackHandler methods (onContainersCompleted, onShutdownRequest,
        // onNodesUpdated, getProgress, onError) are omitted here. Because the class is
        // static, yarnConfig, appConfig and nmClientAsync must be static fields of the
        // enclosing class or be passed in.
      }

- Unregister the ApplicationMaster (AM)

      amrmClientAsync.unregisterApplicationMaster(appStatus, appMessage, null);
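The AM steps above do not show how the AM decides when to unregister or which final status to report. One possible approach, sketched below in plain Java, is to count container events from the callbacks and unregister once every requested container has completed. `ContainerTracker` and its methods are hypothetical and not part of the YARN API. The status strings mirror the `SUCCEEDED` and `FAILED` values of YARN's `FinalApplicationStatus` enum. A long-running service such as the Drill example may never reach this point on its own, so treat this as a pattern for batch-style applications.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical bookkeeping helper for an ApplicationMaster; not part of the YARN API. */
class ContainerTracker {
    private final int requested;
    private final AtomicInteger allocated = new AtomicInteger();
    private final AtomicInteger completed = new AtomicInteger();
    private final AtomicInteger failed = new AtomicInteger();

    ContainerTracker(int requested) {
        this.requested = requested;
    }

    /** Would be called from onContainersAllocated with the size of the list. */
    void onAllocated(int count) {
        allocated.addAndGet(count);
    }

    /** Would be called once per ContainerStatus in onContainersCompleted. */
    void onCompleted(int exitStatus) {
        completed.incrementAndGet();
        if (exitStatus != 0) {
            failed.incrementAndGet();
        }
    }

    /** Number of requested containers the RM has not granted yet. */
    int outstanding() {
        return requested - allocated.get();
    }

    /** True once every requested container has finished, successfully or not. */
    boolean isDone() {
        return completed.get() >= requested;
    }

    /** Name of the final status to pass when unregistering. */
    String finalStatus() {
        return failed.get() == 0 ? "SUCCEEDED" : "FAILED";
    }

    public static void main(String[] args) {
        ContainerTracker tracker = new ContainerTracker(2);
        tracker.onAllocated(2);
        tracker.onCompleted(0); // first container exits cleanly
        tracker.onCompleted(1); // second container exits with an error
        if (tracker.isDone()) {
            System.out.println("unregister with status " + tracker.finalStatus());
        }
    }
}
```

Atomic counters are used because the async client invokes the callbacks on its own handler thread while the AM's main thread polls `isDone()`.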

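5. Why YARN exists

- Scalability: practice has shown that scaling the MRv1 JobTracker to a cluster of 4,000 nodes is extremely difficult, because the JobTracker carries too many responsibilities, including resource scheduling and task tracking and monitoring. As the cluster grows, the JobTracker becomes increasingly overloaded. In YARN, the JobTracker is split into the RM and the AM, so responsibilities are clearer, each component carries fewer of them, and each is more lightweight.

- Support for diverse programming models: in MRv1, the design of the JobTracker and TaskTracker is tightly coupled to the MR framework, so MRv1 supports only the MapReduce computing framework and not parallel, streaming, or in-memory computing. In YARN, the specific framework is handled by the AM and controlled by the application itself, while YARN provides unified resource scheduling.

- Easier framework upgrades: in MRv1, upgrading the MR framework requires restarting the entire Hadoop cluster, which is very risky. In YARN, the specific framework is handled by the AM and controlled by the application itself, so only the user's application libraries need to be upgraded.

- Cluster resource utilization: MRv1 introduced the "slot" concept to represent the compute resources on each node. The resources of each node (CPU, memory, disk, and so on) are divided into equal shares, each represented by one slot, and a task may occupy multiple slots according to its actual needs. Slots are further divided into Map slots and Reduce slots, and the two kinds cannot be shared. When a job starts running, Map slots are scarce while Reduce slots sit idle; once all Map tasks have finished, Reduce slots are scarce while Map slots sit idle. This clearly lowers resource utilization. In YARN, resources are represented as Containers, which cover memory, CPU, and so on. Compared with MRv1, the resource abstraction is finer-grained, and the RM's Scheduler (FIFO, Capacity, Fair) keeps resource allocation elastic.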
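The utilization argument in the last point can be checked with a little arithmetic. The sketch below is a simplified model, not a measurement: it assumes every task needs exactly one resource unit, and the class and method names are hypothetical. It compares a node whose capacity is statically split into Map and Reduce slots with a node whose capacity is handed out as untyped Containers.

```java
/** Simplified model of MRv1 typed slots versus YARN Containers; illustrative only. */
class SlotVsContainer {

    /** MRv1: Map tasks can only use Map slots and Reduce tasks only Reduce slots. */
    static double slotUtilization(int mapSlots, int reduceSlots,
                                  int runnableMapTasks, int runnableReduceTasks) {
        int used = Math.min(mapSlots, runnableMapTasks)
                 + Math.min(reduceSlots, runnableReduceTasks);
        return (double) used / (mapSlots + reduceSlots);
    }

    /** YARN: any runnable task can take any free unit of capacity. */
    static double containerUtilization(int totalUnits, int runnableTasks) {
        return (double) Math.min(totalUnits, runnableTasks) / totalUnits;
    }

    public static void main(String[] args) {
        // Start of a job: 10 Map tasks are waiting and no Reduce task can run yet.
        double mrv1 = slotUtilization(4, 4, 10, 0);
        double yarn = containerUtilization(8, 10);
        System.out.println("MRv1 slots: " + mrv1);
        System.out.println("YARN containers: " + yarn);
    }
}
```

With 4 Map slots and 4 Reduce slots, the Map phase can use only half of the node in this model, while the same 8 units offered as Containers can all be used.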


Source: oschina

Link: https://my.oschina.net/u/4285215/blog/4030356