I am trying to download the PDF files from this website. I am building a double loop so I can step through the years (Season) and get the main PDFs for each year.

This is the line that is not working. It is supposed to loop over all the years (Season), but I cannot get it to work:

for year in wait.until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#season a aria-valuetext"))):
    year.click()
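A likely cause, offered as a guess because the page markup isn't shown here: in CSS, `aria-valuetext` separated by a space is read as a descendant *element* named `<aria-valuetext>`. No such element exists, so the wait times out. An attribute is matched with square brackets, e.g. `#season a[aria-valuetext]`. Even with that fix, a slider normally has a single handle, so the loop would run once rather than once per year. The sketch below assumes the handle is an `<a>` inside `#season` whose `aria-valuetext` holds the selected year; `current_season` is a hypothetical helper name.

```python
# Selenium's By.CSS_SELECTOR constant is the plain string "css selector";
# it is spelled out here so the sketch runs without Selenium installed.
CSS_SELECTOR = "css selector"

# Broken: the space turns "aria-valuetext" into a descendant element selector.
BROKEN_SELECTOR = "#season a aria-valuetext"
# Fixed (assumed markup): an <a> inside #season that HAS an aria-valuetext attribute.
SEASON_HANDLE = "#season a[aria-valuetext]"


def current_season(driver):
    """Return the year currently shown on the season slider (hypothetical helper).

    `driver` is anything with Selenium's find_element(by, value) method.
    """
    handle = driver.find_element(CSS_SELECTOR, SEASON_HANDLE)
    return handle.get_attribute("aria-valuetext")
```

With the real `driver` from the question, `current_season(driver)` would return the year string shown on the slider, if the assumed markup holds.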

This is the full code:

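import os

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

os.chdir("C:..")

driver = webdriver.Chrome("chromedriver.exe")
wait = WebDriverWait(driver, 10)
driver.get("http://www.motogp.com/en/Results+Statistics/")

links = []

# Outer loop: one pass per season (this selector is the line that fails)
for year in wait.until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#season a aria-valuetext"))):
    year.click()
    # Inner loop: pick each event in the drop-down and grab its PDF link
    for item in wait.until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#event option"))):
        item.click()
        elem = wait.until(EC.presence_of_element_located((By.CLASS_NAME, "padleft5")))
        print(elem.get_attribute("href"))
        links.append(elem.get_attribute("href"))
        # Wait until the old link element is detached from the DOM (page refreshed)
        wait.until(EC.staleness_of(elem))

driver.quit()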
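If selecting an event reloads the page (which the `staleness_of` wait seems to suggest), the `option` elements collected before the inner loop can raise `StaleElementReferenceException` from the second pass on. A common workaround, sketched here under that assumption, is to count the options once and re-locate them by index on every pass. `collect_event_links` and its `settle` hook are hypothetical names; the selectors come from the question, and `settle` is where the question's `wait.until(...)` calls would go.

```python
# Selenium's By constants are plain strings; spelled out so the sketch runs standalone.
CSS_SELECTOR = "css selector"
CLASS_NAME = "class name"


def collect_event_links(driver, settle=lambda d: None):
    """Click each #event option in turn and collect the href of the .padleft5 link.

    Options are re-found on every pass, so a reload between clicks does not leave
    us holding stale references. `settle(driver)` should block until the new page
    is ready (e.g. wrap WebDriverWait calls); by default it does nothing.
    """
    links = []
    count = len(driver.find_elements(CSS_SELECTOR, "#event option"))
    for i in range(count):
        # Re-locate the option list each time instead of reusing old elements
        option = driver.find_elements(CSS_SELECTOR, "#event option")[i]
        option.click()
        settle(driver)
        link = driver.find_element(CLASS_NAME, "padleft5")
        links.append(link.get_attribute("href"))
    return links
```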

This is a previous post where I got help with the code above:

The solution below should work for you. First, we iterate over the number of years in the season slider. Then we work through the event list using your code. I added a sleep command because I kept getting timeouts.

CODE

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.keys import Keys
import time

driver = webdriver.Chrome("chromedriver.exe")
wait = WebDriverWait(driver, 10)
driver.get("http://www.motogp.com/en/Results+Statistics/")

# The season selector is a slider; its handle is moved with the arrow keys.
# find_element(By.XPATH, ...) replaces find_element_by_xpath, which Selenium 4 removed.
slider = driver.find_element(By.XPATH, '//*[@id="handle_season"]')

# Step back through 68 seasons, one arrow-key press per season
for year in range(68):
    wait.until(EC.presence_of_all_elements_located((By.XPATH, '//*[@id="event"]')))
    for item in wait.until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#event option"))):
        item.click()
        elem = wait.until(EC.presence_of_element_located((By.CLASS_NAME, "padleft5")))
        print(elem.get_attribute("href"))
        wait.until(EC.staleness_of(elem))
    slider.send_keys(Keys.ARROW_LEFT)
    time.sleep(1)  # give the page time to load the previous season's events

driver.quit()
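The answer hard-codes `range(68)` as the number of seasons. A sketch of an alternative, assuming (not confirmed here) that the slider handle exposes the selected year in `aria-valuetext`: keep pressing the left arrow and stop once the year no longer changes, which should happen at the earliest season. `walk_seasons` and `visit` are hypothetical names; `visit` would hold the inner event loop.

```python
import time

# Selenium's Keys.ARROW_LEFT is the WebDriver key code U+E012.
ARROW_LEFT = "\ue012"


def walk_seasons(handle, visit, pause=1.0):
    """Visit every season from the current one back to the earliest.

    `handle` is the slider handle element (e.g. #handle_season); `visit(year)`
    does the per-season work. Stops when ARROW_LEFT no longer changes the year.
    Returns the list of years visited, in order.
    """
    seen = []
    year = handle.get_attribute("aria-valuetext")
    while True:
        visit(year)
        seen.append(year)
        handle.send_keys(ARROW_LEFT)
        time.sleep(pause)  # let the page load the new season's events
        new_year = handle.get_attribute("aria-valuetext")
        if new_year == year:  # handle is already at its leftmost position
            return seen
        year = new_year
```

This removes the magic number, at the cost of relying on the assumed attribute.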

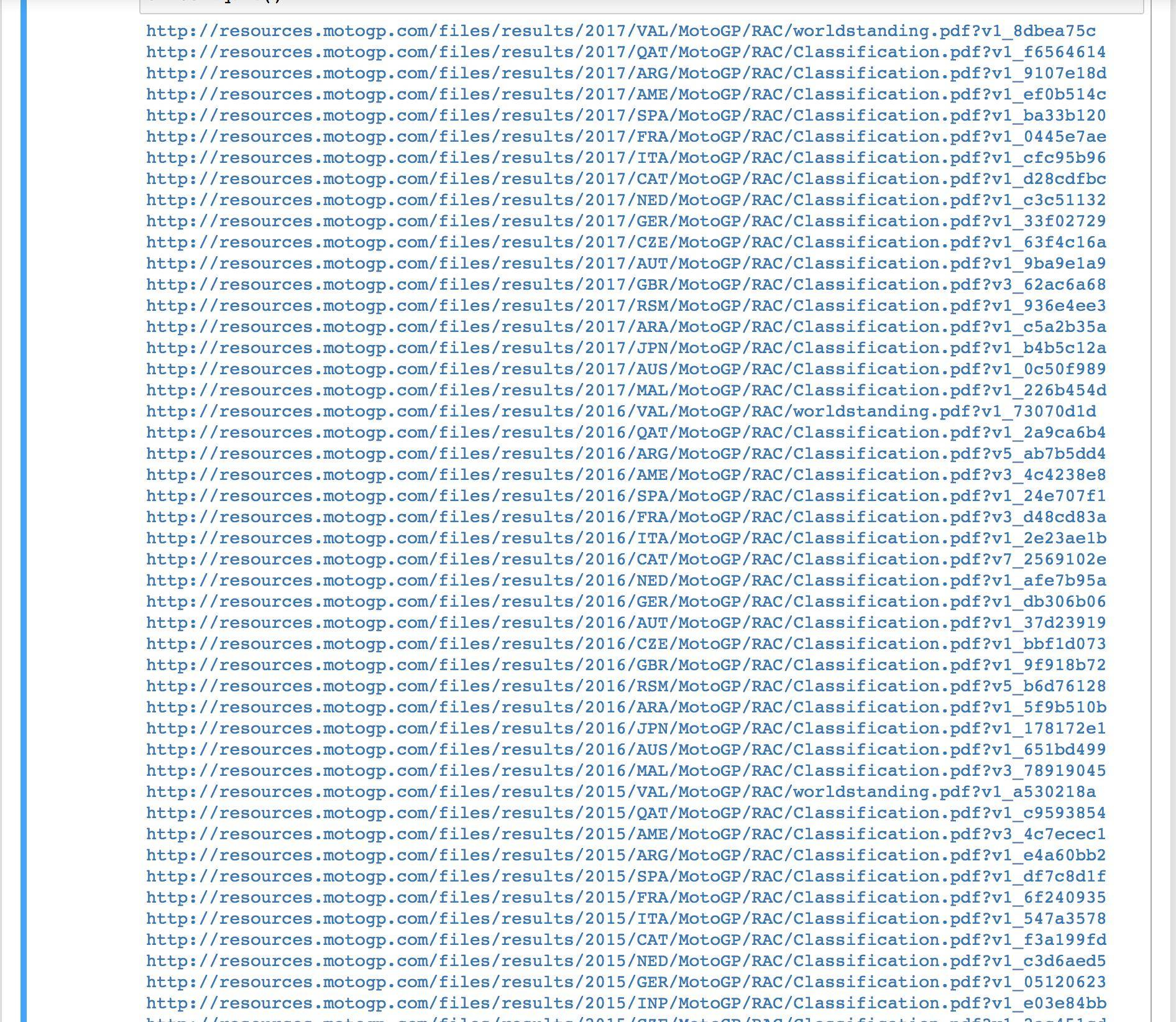

Result:

If you're working behind a firewall, your expected conditions (ECs) often won't be met. See whether a time.sleep(10) call gets you past the problem instead of an EC. Second, check driver.page_source before you run the EC: if you're behind a firewall, the HTML source will tell you.
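The difference between the two approaches: `time.sleep(10)` always waits the full ten seconds, while an expected condition is polled until it becomes true or a timeout expires. The stdlib-only sketch below imitates that polling idea (it is not Selenium's `WebDriverWait` code) and, following the second tip, puts the start of the page source into the timeout error so a firewall or block page becomes visible. `wait_for` and its parameters are hypothetical.

```python
import time


def wait_for(condition, timeout=10.0, poll=0.5, page_source=None):
    """Poll `condition()` until it returns a truthy value or `timeout` seconds pass.

    On timeout, raise TimeoutError. If `page_source` (a zero-argument callable,
    e.g. lambda: driver.page_source) is given, the first 200 characters of the
    HTML are included in the message so a block page is easy to spot.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            detail = ""
            if page_source is not None:
                detail = "; page starts with: " + page_source()[:200]
            raise TimeoutError("condition not met after %.1fs%s" % (timeout, detail))
        time.sleep(poll)
```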

Source: https://stackoverflow.com/questions/48288376/web-scrapping-with-a-double-loop-with-selenium-and-using-by-selector