-

爬取前程无忧的数据(大数据职位)

1 # -*- coding: utf-8 -*-

2 """

3 Created on Wed Nov 1 14:47:27 2019

4

5 @author: loo

6 """

7

8

9 import scrapy

10 import csv

11 from scrapy.crawler import CrawlerProcess

12

13

14 class MySpider(scrapy.Spider):

15 name = "spider"

16

17 def __init__(self):

18 # 保存为CSV文件操作

19 self.f = open('crawl_51jobs.csv', 'wt', newline='', encoding='GBK', errors='ignore')

20 self.writer = csv.writer(self.f)

21 '''title,locality,salary,companyName,releaseTime'''

22 self.writer.writerow(('职位', '公司地区','薪资', '公司名称', '发布时间'))

23

24 # 设置待爬取网站列表

25 self.urls = []

26 # 设置搜索工作的关键字 key

27 key = '大数据'

28 print("关键字:", key)

29 # 设置需要爬取的网页地址

30 for i in range(1,200):

31 f_url = 'https://search.51job.com/list/000000,000000,0000,00,9,99,' + key + ',2,' + str(i) + '.html?lang=c&stype=1&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare='

32 self.urls.append(f_url)

33 # print(self.urls)

34

35

36 def start_requests(self):

37 # self.init_urls()

38 for url in self.urls:

39 yield scrapy.Request(url=url, callback=self.parse)

40 # parse方法会在每个request收到response之后调用

41

42

43 def parse(self, response):

44 # 提取工作列表

45 jobs = response.xpath('//*[@id="resultList"]/div[@class="el"]')

46 # print(jobs)

47

48 for job in jobs:

49 # 工作职位

50 title = job.xpath('p/span/a/text()').extract_first().strip()

51 # 工作地区

52 locality = job.xpath('span[2]/text()').extract_first()

53 # 薪资

54 salary = job.xpath('span[3]/text()').extract_first()

55 # 公司名称

56 companyName = job.xpath('span[1]/a/text()').extract_first().strip()

57 # 发布时间

58 releaseTime = job.xpath('span[4]/text()').extract_first()

59

60 print(title, locality, salary, companyName, releaseTime)

61 # 保存数据

62 self.writer.writerow((title, locality, salary, companyName, releaseTime))

63 # print("over: " + response.url)

64

65

66 def main():

67 process = CrawlerProcess({

68 'USER_AGENT': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36'

69 })

70

71 process.crawl(MySpider)

72 process.start() # 这句代码就是开始了整个爬虫过程 ,会输出一大堆信息,可以无视

73

74

75 if __name__=='__main__':

76 main()

-

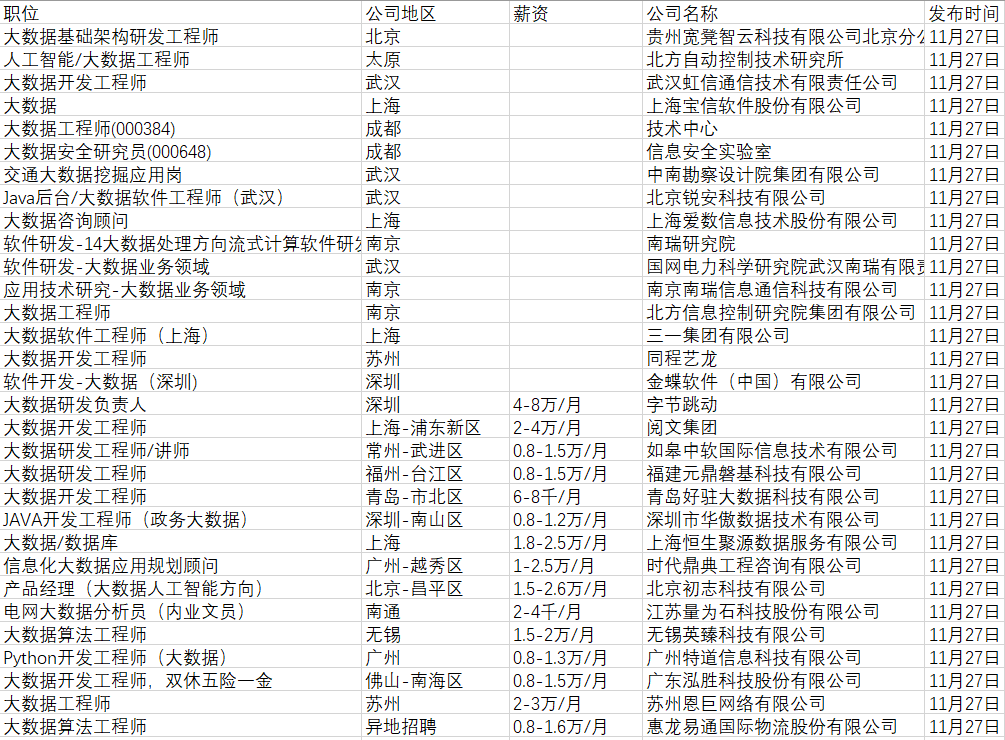

爬取后的数据保存到CSV文件中(如下图)

-

可以在文件中观察数据的特点

- 薪资单位不一样

-

公司地区模式不一样(有的为城市,有的是城市-地区)

-

有职位信息的空白

-

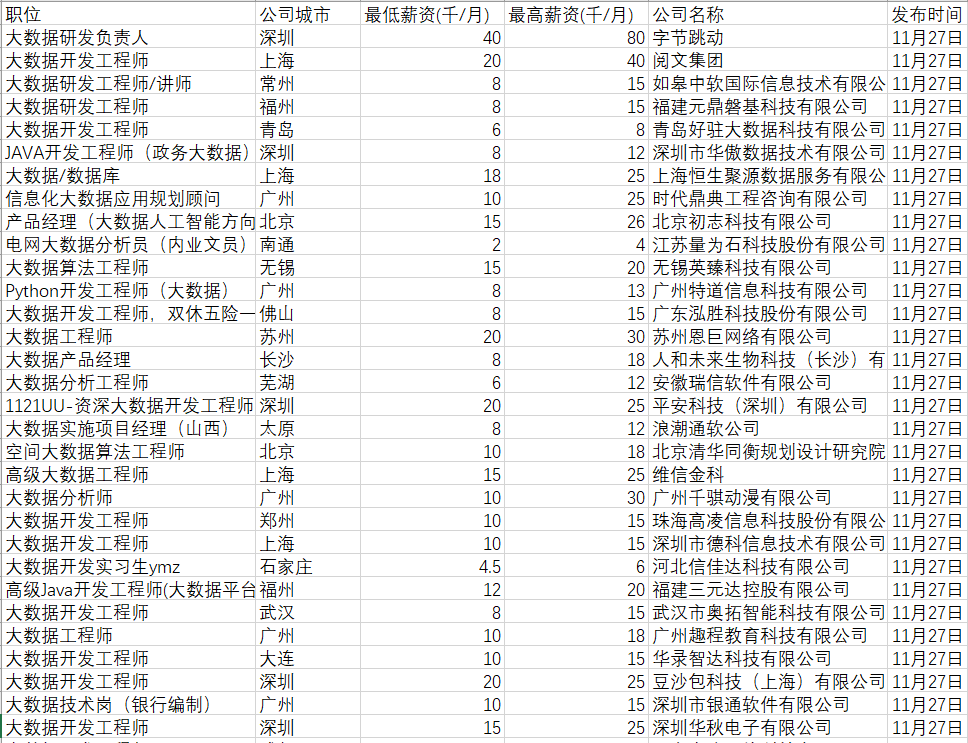

清洗数据

根据CSV文件中信息的特点进行数据清洗

- 将公司位置从区域改为公司城市:地区取到城市,把区域去掉。如“上海-浦东”转化为“上海”

- 薪资规范化(源数据有的是千/月,有的是万/月):统一单位(千元/月),并且将薪资范围拆分为最低薪资和最高薪资。如将“4-6千/月”转化为:最低薪资为4,最高薪资为6

- 删除含有空值的行(有的岗位信息的工作地点、薪资等可能为空,需要删除,便于后面分析)和公司地区为“异地招聘”的行

1 # -*- coding: utf-8 -*-

2 """

3 Created on Wed Nov 1 14:47:27 2019

4

5 @author: loo

6 """

7

8

9 import re

10 import csv

11 import numpy as np

12

13

14 def salaryCleaning(salary):

15 """

16 统一薪资的单位:(千元/月);

17 将薪资范围拆分为最低薪资和最高薪资

18 """

19 minSa, maxSa = [], []

20 for sa in salary:

21 if sa:

22 if '-'in sa: # 针对1-2万/月或者10-20万/年的情况,包含-

23 minSalary=re.findall(re.compile('(\d*\.?\d+)'),sa)[0]

24 maxSalary=re.findall(re.compile('(\d?\.?\d+)'),sa)[1]

25 if u'万' in sa and u'年' in sa: # 单位统一成千/月的形式

26 minSalary = float(minSalary) / 12 * 10

27 maxSalary = float(maxSalary) / 12 * 10

28 elif u'万' in sa and u'月' in sa:

29 minSalary = float(minSalary) * 10

30 maxSalary = float(maxSalary) * 10

31 else: # 针对20万以上/年和100元/天这种情况,不包含-,取最低工资,没有最高工资

32 minSalary = re.findall(re.compile('(\d*\.?\d+)'), sa)[0]

33 maxSalary=""

34 if u'万' in sa and u'年' in sa: # 单位统一成千/月的形式

35 minSalary = float(minSalary) / 12 * 10

36 elif u'万' in sa and u'月' in sa:

37 minSalary = float(minSalary) * 10

38 elif u'元'in sa and u'天'in sa:

39 minSalary=float(minSalary)/1000*21 # 每月工作日21天

40 else:

41 minSalary = ""; maxSalary = "";

42

43 minSa.append(minSalary); maxSa.append(maxSalary)

44 return minSa,maxSa

45

46

47 def locFormat(locality):

48 """

49 将“地区-区域”转化为“地区”

50 """

51 newLocality = []

52 for loc in locality:

53 if '-'in loc: # 针对有区域的情况,包含-

54 newLoc = re.findall(re.compile('(\w*)-'),loc)[0]

55 else: # 针对没有区域的情况

56 newLoc = loc

57 newLocality.append(newLoc)

58 return newLocality

59

60

61 def readFile():

62 """

63 读取源文件

64 """

65 data = []

66 with open("crawl_51jobs.csv",encoding='gbk') as f:

67 csv_reader = csv.reader(f) # 使用csv.reader读取f中的文件

68 data_header = next(csv_reader) # 读取第一行每一列的标题

69 for row in csv_reader: # 将csv 文件中的数据保存到data中

70 data.append(row)

71

72 nd_data = np.array(data) # 将list数组转化成array数组便于查看数据结构

73 jobName = nd_data[:, 0]

74 locality = nd_data[:, 1]

75 salary = nd_data[:, 2]

76 companyName = nd_data[:, 3]

77 releaseTime = nd_data[:, 4]

78 return jobName, locality, salary, companyName, releaseTime

79

80 def saveNewFile(jobName, newLocality, minSa, maxSa, companyName, releaseTime):

81 """

82 将清洗后的数据写入新文件

83 """

84 new_f = open('cleaned_51jobs.csv', 'wt', newline='', encoding='GBK', errors='ignore')

85 writer = csv.writer(new_f)

86 writer.writerow(('职位', '公司城市','最低薪资(千/月)','最高薪资(千/月)', '公司名称', '发布时间'))

87

88 num = 0

89 while True:

90 try: # 所有数据都写入文件后,退出循环

91 if newLocality[num] and minSa[num] and maxSa[num] and companyName[num] and newLocality[num]!="异地招聘": # 当有空值时或者公司地点为异地招聘时不存入清洗后文件

92 writer.writerow((jobName[num], newLocality[num], minSa[num], maxSa[num], companyName[num], releaseTime[num]))

93 num += 1

94 except Exception:

95 break

96

97

98 def main():

99 """

100 主函数

101 """

102 # 获取源数据

103 jobName, locality, salary, companyName, releaseTime = readFile()

104

105 # 清洗源数据中的公司地区和薪资

106 newLocality = locFormat(locality)

107 minSa, maxSa = salaryCleaning(salary)

108

109 # 将清洗后的数据存入CSV文件

110 saveNewFile(jobName, newLocality, minSa, maxSa, companyName, releaseTime)

111

112

113 if __name__ == '__main__':

114 main()

-

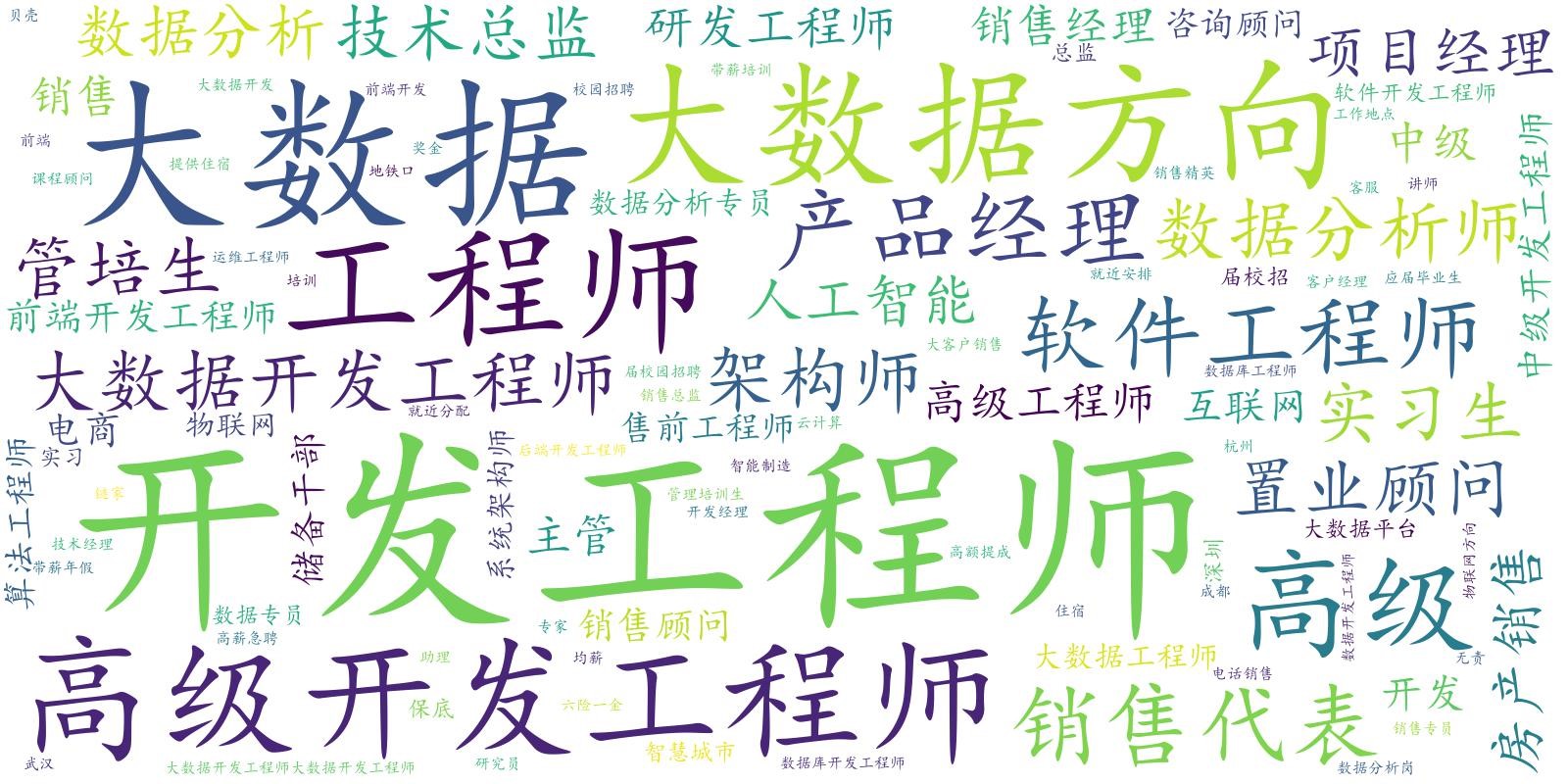

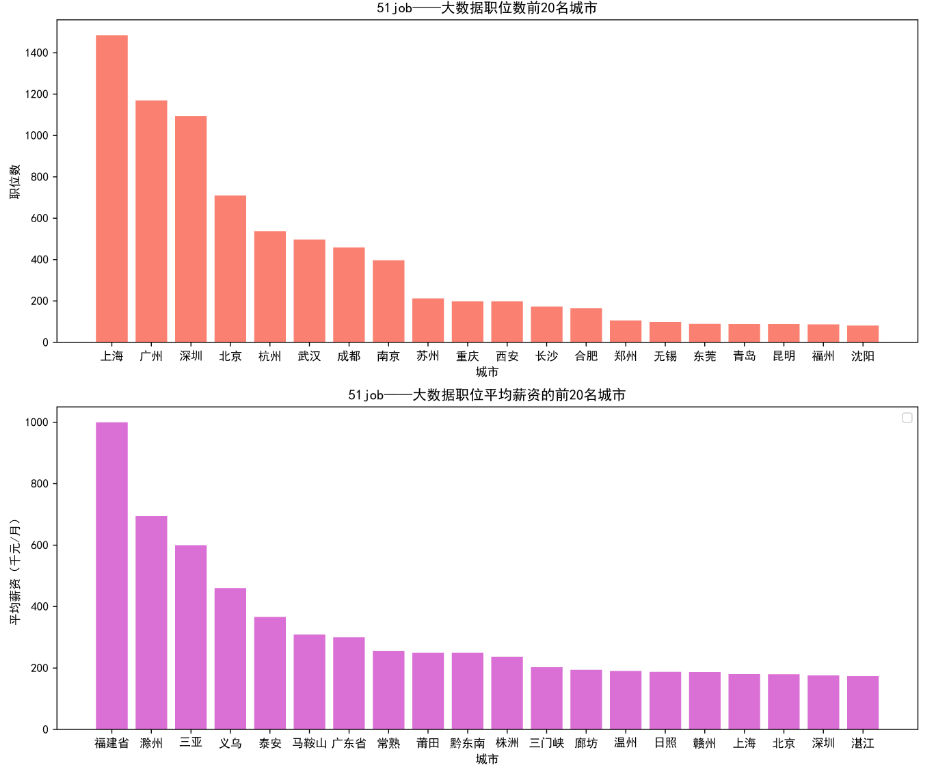

可视化并分析数据

-

职位数前20名的城市以及平均薪资前20的城市

-

大数据岗位的职称情况

-

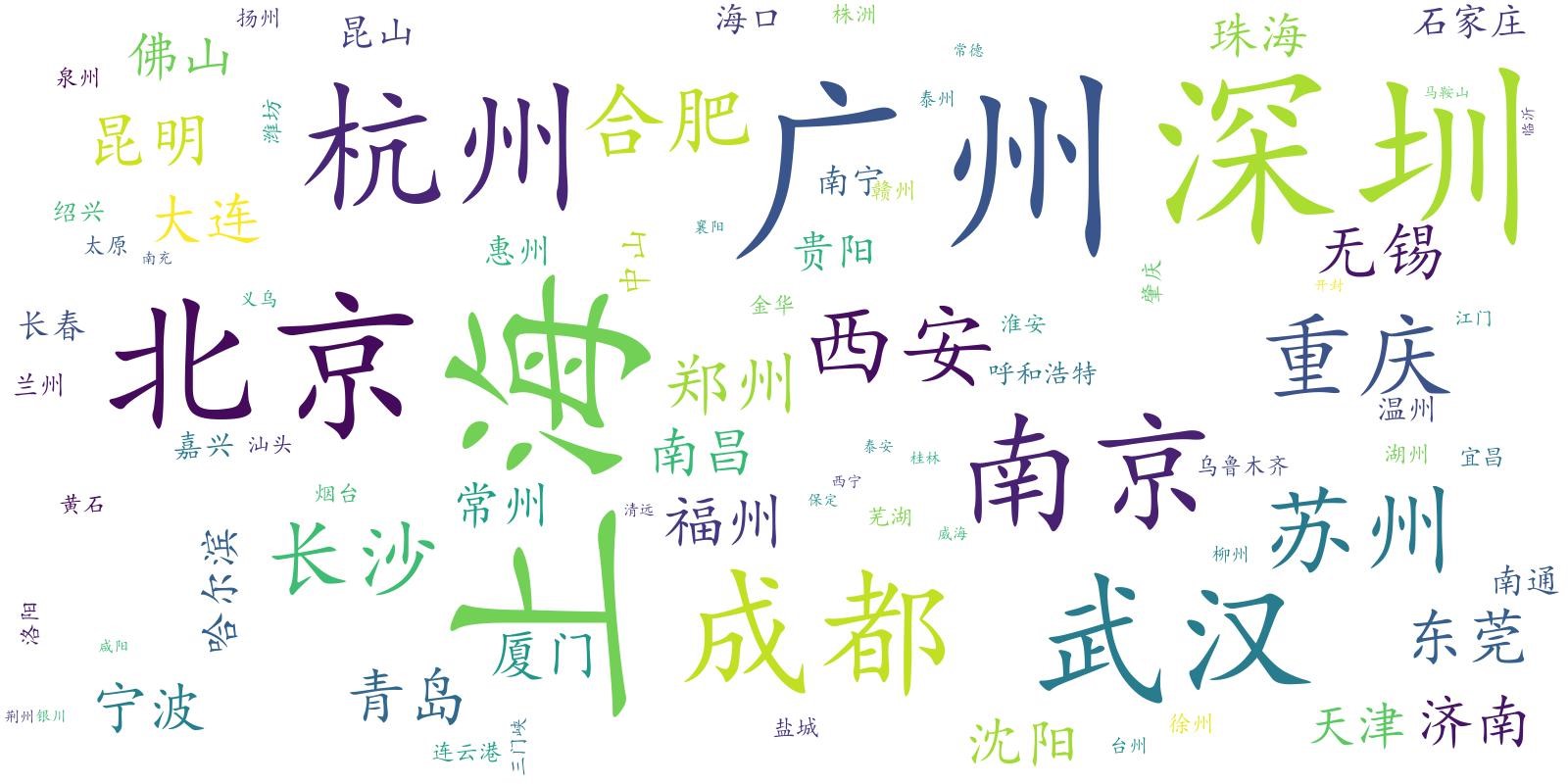

大数据岗位的城市分布情况

-

1 # -*- coding: utf-8 -*-

2 """

3 Created on Wed Nov 1 20:15:56 2019

4

5 @author: loo

6 """

7

8 import matplotlib.pyplot as plt

9 import csv

10 import numpy as np

11 import re

12 from wordcloud import WordCloud,STOPWORDS

13

14

15 def readFile():

16 """

17 读取清洗后的文件

18 """

19 data = []

20 with open("cleaned_51jobs.csv",encoding='gbk') as f:

21 csv_reader = csv.reader(f) # 使用csv.reader读取f中的文件

22 data_header = next(csv_reader) # 读取第一行每一列的标题

23 for row in csv_reader: # 将csv文件中的数据保存到data中

24 data.append(row)

25

26 nd_data = np.array(data) # 将list数组转化成array数组便于查看数据结构

27 jobName = nd_data[:, 0]

28 locality = nd_data[:, 1]

29 minSalary = nd_data[:, 2]

30 maxSalary = nd_data[:, 3]

31 return data, jobName, locality, minSalary, maxSalary

32

33

34

35 def salary_locality(data):

36 """

37 计算城市对应的职位数和平均薪资,并打印

38 """

39 city_num = dict()

40

41 for job in data:

42 loc, minSa, maxSa = job[1], float(job[2]), float(job[3])

43 if loc not in city_num:

44 avg_salary = minSa*maxSa/2

45 city_num[loc] = (1, avg_salary)

46 else:

47 num = city_num[loc][0]

48 avg_salary = (minSa*maxSa/2 + num * city_num[loc][1])/(num+1)

49 city_num[loc] = (num+1, avg_salary)

50

51 # 将其按职位数降序排列

52 title_sorted = sorted(city_num.items(), key=lambda x:x[1], reverse=True)

53 title_sorted = dict(title_sorted)

54

55 # 将其按平均薪资降序排列

56 salary_sorted = sorted(city_num.items(), key=lambda x:x[1][1], reverse=True)

57 salary_sorted = dict(salary_sorted)

58

59

60 allCity1, allCity2, allNum, allAvg = [], [], [], []

61 i, j = 1, 1

62 # 取职位数前20

63 for city in title_sorted:

64 if i<=20:

65 allCity1.append(city)

66 allNum.append(title_sorted[city][0])

67 i += 1

68

69 # 取平均薪资前20

70 for city in salary_sorted:

71 if j<=20:

72 allCity2.append(city)

73 allAvg.append(salary_sorted[city][1])

74 j += 1

75

76 #解决中文显示问题

77 plt.rcParams['font.sans-serif']=['SimHei']

78 plt.rcParams['axes.unicode_minus'] = False

79

80 # 柱状图在横坐标上的位置

81 x = np.arange(20)

82

83 # 设置图的大小

84 plt.figure(figsize=(13, 11))

85

86 # 列出你要显示的数据,数据的列表长度与x长度相同

87 y1 = allNum

88 y2 = allAvg

89

90 bar_width=0.8 # 设置柱状图的宽度

91 tick_label1 = allCity1

92 tick_label2 = allCity2

93

94

95 # 绘制柱状图

96 plt.subplot(211)

97 plt.title('51job——大数据职位数前20名城市')

98 plt.xlabel(u"城市")

99 plt.ylabel(u"职位数")

100 plt.xticks(x,tick_label1) # 显示x坐标轴的标签,即tick_label

101 plt.bar(x,y1,bar_width,color='salmon')

102

103 plt.subplot(212)

104 plt.title('51job——大数据职位平均薪资的前20名城市')

105 plt.xlabel(u"城市")

106 plt.ylabel(u"平均薪资(千元/月)")

107 plt.xticks(x,tick_label2) # 显示x坐标轴的标签,即tick_label

108 plt.bar(x,y2,bar_width,color='orchid')

109

110 plt.legend() # 显示图例,即label

111 # plt.savefig('city.jpg', dpi=500) # 指定像素保存

112 plt.show()

113

114

115 def jobTitle(jobName):

116

117 word="".join(jobName);

118

119 # 图片模板和字体

120 # image=np.array(Image.open('model.jpg'))#显示中文的关键步骤

121 font='simkai.ttf'

122

123 # 去掉英文,保留中文

124 resultword=re.sub("[A-Za-z0-9\[\`\~\!\@\#\$\^\&\*\(\)\=\|\{\}\'\:\;\'\,\[\]\.\<\>\/\?\~\。\@\#\\\&\*\%\-]", " ",word)

125 # 已经中文和标点符号

126 wl_space_split = resultword

127 # 设置停用词

128 sw = set(STOPWORDS)

129 sw.add("高提成");sw.add("底薪");sw.add("五险");sw.add("双休")

130 sw.add("五险一金");sw.add("社保");sw.add("上海");sw.add("广州")

131 sw.add("无责底薪");sw.add("月薪");sw.add("急聘");sw.add("急招")

132 sw.add("资深");sw.add("包吃住");sw.add("周末双休");sw.add("代招")

133 sw.add("高薪");sw.add("高底薪");sw.add("校招");sw.add("月均")

134 sw.add("可实习");sw.add("年薪");sw.add("北京");sw.add("经理")

135 sw.add("包住");sw.add("应届生");sw.add("南京");sw.add("专员")

136 sw.add("提成");sw.add("方向")

137

138 # 关键一步

139 my_wordcloud = WordCloud(font_path=font,stopwords=sw,scale=4,background_color='white',

140 max_words = 100,max_font_size = 60,random_state=20).generate(wl_space_split)

141 #显示生成的词云

142 plt.imshow(my_wordcloud)

143 plt.axis("off")

144 plt.show()

145

146 #保存生成的图片

147 # my_wordcloud.to_file('title.jpg')

148

149

150 def localityWordCloud(locality):

151 font='simkai.ttf'

152 locality = " ".join(locality)

153

154 # 关键一步

155 my_wordcloud = WordCloud(font_path=font,scale=4,background_color='white',

156 max_words = 100,max_font_size = 60,random_state=20).generate(locality)

157

158 #显示生成的词云

159 plt.imshow(my_wordcloud)

160 plt.axis("off")

161 plt.show()

162

163 #保存生成的图片

164 # my_wordcloud.to_file('place.jpg')

165

166

167 def main():

168 # 得到清洗后的数据数据

169 data, jobName, locality, minSalary, maxSalary = readFile()

170 # 进行分析

171 salary_locality(data)

172 jobTitle(jobName)

173 localityWordCloud(locality)

174

175

176 if __name__ == '__main__':

177 main()

可视化图片如下

-

结论

1) 职位数排名前三的城市:上海、广州、深圳

2) 平均薪资排名前三的城市:福建、滁州、三亚

3) 大数据职位需求多的城市其平均薪资不一定高。相反,大数据职位需求多的城市的平均薪资更低,而大数据职位需求少的城市的平均薪资更高。

4) 大数据岗位需求最大的职称是:开发工程师