1. background

In most cases, we want to run SQL scripts in Doris on a schedule, for example from Azkaban to load data, and we want to pass parameters into the SQL script file. Hive handles this situation easily.

1.1 hive usage:

Use -hiveconf or -hivevar.

shell file:

We want to pass two parameters, p_partition_d and p_partition_to_delete, into the Hive SQL script. The shell file below passes them to the Hive .sql file using -hivevar {variable_name}={variable_value}:

#!/bin/bash

CURRENT_DIR=$(cd `dirname $0`; pwd)

echo "CURRENT_DIR:"${CURRENT_DIR}

APPLICATION_ROOT_DIR=$(cd ${CURRENT_DIR}/..;pwd)

echo "APPLICATION_ROOT_DIR:"${APPLICATION_ROOT_DIR}

source ${APPLICATION_ROOT_DIR}/globle_config.sh

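# 1 - entry parameters: get the run date for the script; it defaults to today, consistent with the parameter used by the current scheduler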

echo $#

if [ $# = 0 ]; then

p_partition_d=$(date -d "0 days" +%Y%m%d)

p_partition_to_delete=`date -d "-8 days" +%Y%m%d`

fi

if [ $# = 1 ]; then

p_partition_d=$(date -d "$1" +%Y%m%d)

p_partition_to_delete=`date -d "$1 -8 days" +%Y%m%d`

fi

echo "p_partition_d: "${p_partition_d}

echo "p_partition_to_delete: "${p_partition_to_delete}

$HIVE_HOME_BIN/hive -hivevar p_partition_d="${p_partition_d}" \

-hivevar p_partition_to_delete="${p_partition_to_delete}" \

-f ${CURRENT_DIR}/points_core_tb_acc_rdm_rel_incremental.sql

if [ $? != 0 ];then

exit -1

fi
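The date handling in the script above can be condensed into a single function. The following is a minimal sketch, assuming GNU date (which the script already relies on for -d); the function name resolve_partition_dates is hypothetical and not part of the original script.

```shell
#!/bin/bash

# Hypothetical helper: sets p_partition_d and p_partition_to_delete.
# $1 (optional): the run date in a format GNU date understands, e.g. 20240110.
#                Defaults to today when omitted.
resolve_partition_dates() {
    base_date=${1:-$(date +%Y%m%d)}
    # The partition to load is the run date itself.
    p_partition_d=$(date -d "${base_date}" +%Y%m%d) || return 1
    # The partition to drop is eight days before the run date.
    p_partition_to_delete=$(date -d "${base_date} -8 days" +%Y%m%d) || return 1
}

resolve_partition_dates "$@" || exit 1
echo "p_partition_d: ${p_partition_d}"
echo "p_partition_to_delete: ${p_partition_to_delete}"
```

Returning a non-zero status on an unparsable date lets the caller stop before Hive is invoked with an empty partition value.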

The corresponding Hive SQL script is as follows:

set hive.auto.convert.join = true;

SET hive.mapjoin.smalltable.filesize=200000000;

SET hive.auto.convert.join.noconditionaltask=true;

SET hive.auto.convert.join.noconditionaltask.size=20000000;

SET hive.exec.mode.local.auto=true;

SET hive.exec.mode.local.auto.inputbytes.max=200000000;

SET hive.exec.mode.local.auto.input.files.max=200;

set mapred.max.split.size=268435456;

set mapred.min.split.size.per.node=167772160;

set mapred.min.split.size.per.rack=167772160;

set hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat;

set hive.merge.mapfiles = true;

set hive.merge.mapredfiles = true;

set hive.merge.size.per.task = 268435456;

set hive.merge.smallfiles.avgsize=167772160;

set hive.exec.reducers.bytes.per.reducer= 1073741824;

SET mapreduce.job.jvm.numtasks=5;

--handles the inserted data.

-- points_core.tb_acc_rdm_rel is append-only, so no updates or deletes need to be handled.

INSERT OVERWRITE TABLE ods.points_core_tb_acc_rdm_rel_incremental PARTITION(pt_log_d = '${hivevar:p_partition_d}')

SELECT

id,

txn_id,

rdm_id,

acc_id,

point_val,

add_time,

update_time,

add_user,

update_user,

last_update_timestamp

FROM staging.staging_points_core_tb_acc_rdm_rel AS a

WHERE

pt_log_d = '${hivevar:p_partition_d}';

---------------------------------------------------------------------------------------------------------------------

---------------------------------------------------------------------------------------------------------------------

---------------------------------------------------------------------------------------------------------------------

-- drop the hive table partition; the files themselves are deleted in the shell script, since this table is an external table.

ALTER TABLE staging.staging_points_core_tb_acc_rdm_rel DROP IF EXISTS PARTITION(pt_log_d='${hivevar:p_partition_to_delete}');

---------------------------------------------------------------------------------------------------------------------

---------------------------------------------------------------------------------------------------------------------

---------------------------------------------------------------------------------------------------------------------

In the HQL file, we reference a passed-in parameter using ${hivevar:variable_name}.

Note: we can also use hiveconf instead of hivevar, but each parameter must be referenced in the same style it was passed in with. The difference between hivevar and hiveconf is:

- hivevar: only contains user parameters.

- hiveconf: contains both the hive system variables and user parameters.
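To make the note above concrete, here is a small dry-run sketch. It only prints the two command lines and the reference style each one calls for, without invoking Hive. The date value and the file name demo.sql are placeholders chosen for illustration.

```shell
#!/bin/bash

# Illustrative value only.
p_partition_d=20240110

# Passed with -hivevar: the script references it as ${hivevar:p_partition_d}.
hivevar_cmd="hive -hivevar p_partition_d=${p_partition_d} -f demo.sql"
hivevar_ref='${hivevar:p_partition_d}'

# Passed with -hiveconf: the script references it as ${hiveconf:p_partition_d}.
hiveconf_cmd="hive -hiveconf p_partition_d=${p_partition_d} -f demo.sql"
hiveconf_ref='${hiveconf:p_partition_d}'

# Dry run: print the commands instead of executing them.
printf '%s\n  referenced in the script as: %s\n' "${hivevar_cmd}" "${hivevar_ref}"
printf '%s\n  referenced in the script as: %s\n' "${hiveconf_cmd}" "${hiveconf_ref}"
```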

1.2 doris requirements

Common requirements include:

- Loading data into a specified partition, where the partition parameter is passed in.

- Loading data into a Doris table from an external system such as HDFS. In the load statement, the load label parameter should be passed in to the SQL script.

2. solution

2.1 doris common script implementation

I have implemented a common shell script. By calling it, we can pass in parameter values in the same style we used in Hive.

The implementation code is as follows:

globle_config.sh

#!/bin/bash

OP_HOME_BIN=/opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/bin

HIVE_HOME_BIN=/opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/bin

MYSQL_HOME_BIN=/usr/local/mysql/bin

DORIS_HOST=192.168.1.101

DORIS_PORT=9030

DORIS_USER_NAME=dev_readonly

DORIS_PASSWORD=dev_readonly#

# this function copies the provided file into the working directory

# input parameters:

# $1: the working directory

# $2: the absolute path of the file to be copied.

# result: the absolute path of the copied file, which is located in the working directory.

function func_copy_file() {

if [ $# != 2 ]; then

echo "missing parameter, type in like: func_copy_file /opt/ /opt/a.sql"

exit -1

fi

working_dir=$1

source_file=$2

# check that the file to be copied exists.

if [ ! -f $source_file ]; then

echo "file : " $source_file " to be copied does not exist"

exit -1

fi

# check(s) if the working dir exists.

if [ ! -d "$working_dir" ]; then

echo "the working directory : " $working_dir " does not exist"

exit -1

fi

# checks if the file already exists, $result holds the copied file name(absolute path)

result=${working_dir}/$(generate_datatime_random)

while [ -f $result ]; do

result=${working_dir}/$(generate_datatime_random)

done

# copy file

cp ${source_file} ${result}

echo ${result}

}

# this function generates a random string based on the current system timestamp.

# input parameter:

# N/A

# result: random string based on the current system timestamp.

function generate_datatime_random() {

# date=$(date -d -0days +%Y%m%d)

# #the random part uses the nanosecond timestamp to avoid directory name collisions

# randnum=$(date +%s%N)

echo $(date -d -0days +%Y%m%d)_$(date +%s%N)

}

# replace the specified string with the target string in the provided file

# $1: the absolute path of the file to be replaced.

# $2: the source_string for the replacement.

# $3: the target string for the replacement.

# result: none

function func_repace_content() {

if [ $# != 3 ]; then

echo "missing parameter, type in like: func_repace_content /opt/a.sql @name 'lenmom'"

exit -1

fi

echo "begin replacement"

file_path=$1

# be careful: source_content is used by sed as a regular expression.

source_content=$2

replace_content=$3

if [ ! -f $file_path ]; then

echo "file : " $file_path " to be replaced does not exist"

exit -1

fi

echo "replace all ["${source_content} "] in file: "${file_path} " to [" ${replace_content}"]"

sed -i "s/${source_content}/${replace_content}/g" $file_path

}

# this function provide(s) functionality to execute doris sql script file

# Input parameters:

# $1: the absolute path of the .sql file to be executed.

# other parameters are optional; if provided, they are placeholder/value pairs to substitute into the script file before execution.

# result: 0, if execute success; otherwise, -1.

function func_execute_doris_sql_script() {

echo "input parameters: "$@

parameter_number=$#

if [ $parameter_number -lt 1 ]; then

echo "missing parameter, must contain the script file to be executed. other parameters are optional,such as"

echo "func_execute_doris_sql_script /opt/a.sql @name 'lenmom'"

exit -1

fi

# copy the file to be executed and wait for parameter replacement.

working_dir="$(

cd $(dirname $0)

pwd

)"

file_to_execute=$(func_copy_file "${working_dir}" "$1")

if [ $? != 0 ]; then

exit -1

fi

if [ $parameter_number -gt 1 ]; then

for ((i = 2; i <= $parameter_number; i += 2)); do

case $i in

2)

func_repace_content "$file_to_execute" "$2" "$3"

;;

4)

func_repace_content "$file_to_execute" "$4" "$5"

;;

6)

func_repace_content "$file_to_execute" "$6" "$7"

;;

8)

func_repace_content "$file_to_execute" "$8" "$9"

;;

esac

done

fi

if [ $? != 0 ]; then

exit -1

fi

echo "begin to execute script in doris, the content is:"

cat $file_to_execute

echo

MYSQL_HOME="$MYSQL_HOME_BIN/mysql"

if [ ! -f $MYSQL_HOME ]; then

# fall back to the mysql client found on the PATH.

MYSQL_HOME=$(command -v mysql)

# print the resolved mysql location for troubleshooting.

echo "mysql location is: "$MYSQL_HOME

fi

$MYSQL_HOME -h $DORIS_HOST -P $DORIS_PORT -u$DORIS_USER_NAME -p$DORIS_PASSWORD <"$file_to_execute"

if [ $? != 0 ]; then

rm -f $file_to_execute

echo execute failed

exit -1

else

rm -f $file_to_execute

echo execute success

exit 0

fi

}

# this function loads data into doris by executing the specified load sql script file.

# Input parameters:

# $1: the absolute path of the .sql file to be executed.

# $2: the label holder to be replaced.

# result: 0, if execute success; otherwise, -1.

function doris_load_data() {

if [ $# -lt 2 ]; then

echo "missing parameter, type in like: doris_load_data /opt/a.sql label_place_holder"

exit -1

fi

if [ ! -f $1 ]; then

echo "file : " $1 " to execute does not exist"

exit -1

fi

func_execute_doris_sql_script $@ $(generate_datatime_random)

}
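The case statement in func_execute_doris_sql_script handles at most four placeholder/value pairs. A loop built on shift removes that limit. The sketch below is one possible variant, not the original implementation: apply_replacements is a hypothetical name, the demo statement is made up, and it assumes GNU sed and that placeholders and values contain no characters that are special to sed (such as / or &).

```shell
#!/bin/bash

# Hypothetical helper: applies any number of placeholder/value pairs to a file.
# $1:   path of the file to modify in place.
# $2..: placeholder value placeholder value ...
apply_replacements() {
    target_file=$1
    shift
    # Every placeholder needs a value, so the remaining count must be even.
    if [ $(($# % 2)) -ne 0 ]; then
        echo "placeholders and values must come in pairs" >&2
        return 1
    fi
    while [ $# -gt 0 ]; do
        # As in func_repace_content, the placeholder is treated as a sed regex.
        sed -i "s/$1/$2/g" "${target_file}" || return 1
        shift 2
    done
}

# Usage example on a throwaway file.
demo_file=$(mktemp)
echo "SELECT * FROM t WHERE pt = '@pt' AND corp = '@corp' LIMIT @n;" > "${demo_file}"
apply_replacements "${demo_file}" "@pt" "20240110" "@corp" "acme" "@n" "10"
demo_result=$(cat "${demo_file}")
rm -f "${demo_file}"
echo "${demo_result}"
```

Because the loop consumes two arguments per iteration, the number of parameters is bounded only by the shell's argument limit, and an unpaired trailing argument is reported instead of being silently ignored.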

2.2 usage

The SQL script file, named load_user_label_from_hdfs.sql:

LOAD LABEL user_label.fct_usr_label_label_place_holder

( DATA INFILE("hdfs://nameservice1/user/hive/warehouse/usr_label.db/usr_label/*")

INTO TABLE fct_usr_label

COLUMNS TERMINATED BY "\\x01"

FORMAT AS "parquet"

(member_id ,mobile ,corp ,province ,channel_name ,new_usr_type ,gender ,age_type ,last_login_type)

)

WITH BROKER 'doris-hadoop'

(

"dfs.nameservices"="nameservice1",

"dfs.ha.namenodes.nameservice1"="namenode185,namenode38",

"dfs.namenode.rpc-address.nameservice1.namenode185"="hadoop-datanode06:8020",

"dfs.namenode.rpc-address.nameservice1.namenode38"="hadoop-namenode01:8020",

"dfs.client.failover.proxy.provider"="org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

)

PROPERTIES ( "timeout"="3600", "max_filter_ratio"="0");

In this file, the load label contains a placeholder named label_place_holder, whose value is passed in by the invoking shell file.
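The substitution that doris_load_data performs on the label can be sketched in isolation as follows. This is a simplified illustration, assuming GNU date and GNU sed: it works on a temporary copy created with mktemp (swapped in here for the script's own copy routine), and only the first line of the load statement is reproduced.

```shell
#!/bin/bash

# Same label format as generate_datatime_random in globle_config.sh:
# <yyyymmdd>_<seconds since epoch followed by nanoseconds>.
load_label=$(date +%Y%m%d)_$(date +%s%N)

# Work on a temporary copy so the template itself is never modified.
tmp_sql=$(mktemp)
echo 'LOAD LABEL user_label.fct_usr_label_label_place_holder' > "${tmp_sql}"

# Replace the placeholder with the generated label.
sed -i "s/label_place_holder/${load_label}/g" "${tmp_sql}"

rendered_sql=$(cat "${tmp_sql}")
rm -f "${tmp_sql}"
echo "${rendered_sql}"
```

Since the label is derived from the current timestamp, each run submits the load under a different label while the .sql template stays unchanged.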

shell file:

user_label_load.sh

#!/bin/bash

CURRENT_DIR=$(cd `dirname $0`; pwd)

echo "CURRENT_DIR:"${CURRENT_DIR}

APPLICATION_ROOT_DIR=$(cd ${CURRENT_DIR}/..;pwd)

echo "APPLICATION_ROOT_DIR:"${APPLICATION_ROOT_DIR}

source ${APPLICATION_ROOT_DIR}/globle_config.sh

#load doris data by calling common shell function

doris_load_data $CURRENT_DIR/load_user_label_from_hdfs.sql "\label_place_holder"

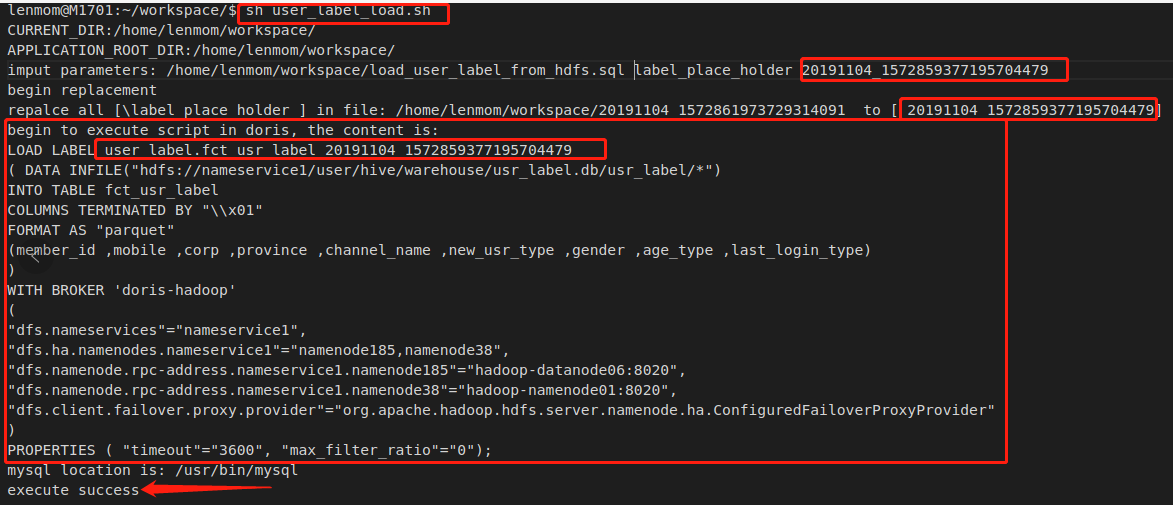

2.3 execute

In the shell terminal, simply execute the shell file:

sh user_label_load.sh

The shell file contains the parameters that are passed in when invoking the SQL script in Doris.

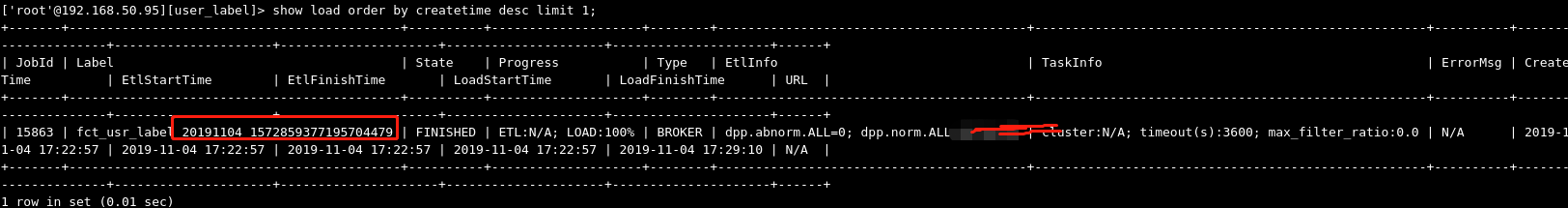

query the load result in doris: