How do I use the Tensorboard callback of Keras?

I have built a neural network with Keras. I would like to visualize its data with TensorBoard, so I used:

keras.

-

Create the TensorBoard callback:

    from keras.callbacks import TensorBoard
    from datetime import datetime
    logDir = "./Graph/" + datetime.now().strftime("%Y%m%d-%H%M%S") + "/"
    tb = TensorBoard(log_dir=logDir, histogram_freq=2, write_graph=True, write_images=True, write_grads=True)

Pass the TensorBoard callback to the fit call:

    history = model.fit(X_train, y_train, epochs=200, callbacks=[tb])

When running the model, if you get a Keras error of

"You must feed a value for placeholder tensor"

try resetting the Keras session before the model creation by doing:

    import keras.backend as K
    K.clear_session()

-

There are a few things.

First, use `./Graph`, not `/Graph`.

Second, when you use the TensorBoard callback, always pass validation data, because the callback will not start without it.

Third, if you want to use anything except scalar summaries, then you should only use the `fit` method, because `fit_generator` will not work. Or you can rewrite the callback to work with `fit_generator`.

To add callbacks, just pass them to

    model.fit(..., callbacks=your_list_of_callbacks)

-

Here is some code:

K.set_learning_phase(1) K.set_image_data_format('channels_last') tb_callback = keras.callbacks.TensorBoard( log_dir=log_path, histogram_freq=2, write_graph=True ) tb_callback.set_model(model) callbacks = [] callbacks.append(tb_callback) # Train net: history = model.fit( [x_train], [y_train, y_train_c], batch_size=int(hype_space['batch_size']), epochs=EPOCHS, shuffle=True, verbose=1, callbacks=callbacks, validation_data=([x_test], [y_test, y_test_coarse]) ).history # Test net: K.set_learning_phase(0) score = model.evaluate([x_test], [y_test, y_test_coarse], verbose=0)Basically,

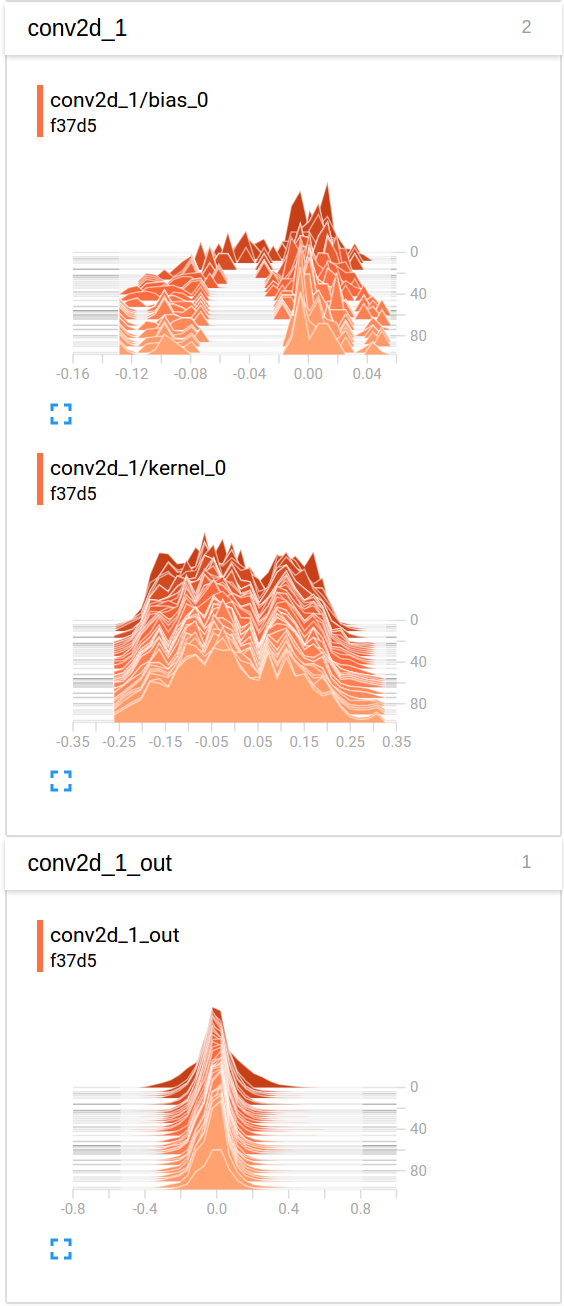

histogram_freq=2is the most important parameter to tune when calling this callback: it sets an interval of epochs to call the callback, with the goal of generating fewer files on disks.So here is an example visualization of the evolution of values for the last convolution throughout training once seen in TensorBoard, under the "histograms" tab (and I found the "distributions" tab to contain very similar charts, but flipped on the side):

In case you would like to see a full example in context, you can refer to this open-source project: https://github.com/Vooban/Hyperopt-Keras-CNN-CIFAR-100
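To make the effect of `histogram_freq` concrete, here is a minimal sketch in plain Python. It assumes the callback writes histograms on epochs whose zero-based index is divisible by `histogram_freq`, and that a value of `0` disables histograms. That matches my reading of the Keras callback, but it may differ between versions. The helper name `histogram_epochs` is made up for this illustration and is not part of Keras.

```python
def histogram_epochs(total_epochs, histogram_freq):
    """Return the zero-based epochs on which histograms would be written.

    Assumption: histograms are logged when epoch % histogram_freq == 0,
    and histogram_freq == 0 turns them off entirely.
    """
    if histogram_freq <= 0:
        return []
    return [epoch for epoch in range(total_epochs) if epoch % histogram_freq == 0]


# With 6 epochs and histogram_freq=2, only every second epoch is logged.
print(histogram_epochs(6, 2))  # prints [0, 2, 4]
```

A larger value means fewer histogram writes and smaller log folders, at the cost of coarser charts.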

-

Change

    keras.callbacks.TensorBoard(log_dir='/Graph', histogram_freq=0, write_graph=True, write_images=True)

to

    tbCallBack = keras.callbacks.TensorBoard(log_dir='Graph', histogram_freq=0, write_graph=True, write_images=True)

and set your model

    tbCallBack.set_model(model)

Run in your terminal

    tensorboard --logdir Graph/

-

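You wrote `log_dir='/Graph'`. Did you mean `./Graph` instead? With the leading slash, the path is absolute, so at the moment the logs are sent to `/Graph` at the filesystem root rather than to a folder inside your working directory.

-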

If you are working with the Keras library and want to use TensorBoard to plot accuracy and other variables, follow the steps below.

Step 1: Import the TensorBoard callback from Keras with the line below.

    from keras.callbacks import TensorBoard

Step 2: Add the line below to your program just before the `model.fit()` call.

    tensor_board = TensorBoard(log_dir='./Graph', histogram_freq=0, write_graph=True, write_images=True)

Note: Use `./Graph`. It creates the Graph folder in your current working directory. Avoid using `/Graph`.

Step 3: Pass the TensorBoard callback to `model.fit()`. A sample is given below.

    model.fit(X_train, y_train, batch_size=batch_size, epochs=nb_epoch, verbose=1, validation_split=0.2, callbacks=[tensor_board])

Step 4: Run your code and check whether the Graph folder is in your working directory. If the code above works correctly, you will have a "Graph" folder there.

Step 5: Open a terminal in your working directory and type the command below.

    tensorboard --logdir ./Graph

Step 6: Now open your web browser and enter the address below.

    http://localhost:6006

After entering it, the TensorBoard page will open, where you can see graphs of the different variables.
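If the graphs do not show up, it can help to check where the log directory actually resolves to and whether any event files were written there. The sketch below uses only the Python standard library. The helper name `find_event_files` is hypothetical, and the `events.out.tfevents` file-name prefix is an assumption based on the files TensorBoard writers normally produce.

```python
from pathlib import Path


def find_event_files(log_dir):
    """Return (absolute log dir, sorted list of event files found under it)."""
    root = Path(log_dir).resolve()
    if not root.is_dir():
        # Nothing was written yet, or the path points somewhere unexpected.
        return root, []
    # Search recursively: some versions write into subfolders such as train/.
    files = sorted(p for p in root.rglob("events.out.tfevents*") if p.is_file())
    return root, files


root, files = find_event_files("./Graph")
print("Log directory resolves to:", root)
print("Event files found:", len(files))
```

If the resolved path is not inside your working directory, or no event files are found, the `log_dir` passed to the callback and the `--logdir` passed to `tensorboard` probably point to different places.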
