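PS: I'm learning web scraping to prepare for a big data skills competition. If you're interested in crawlers, feel free to follow me; I post one article a week~ (I got my first follower this week, so here's an extra post to celebrate ✌)

👉 Straight to the point 👈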

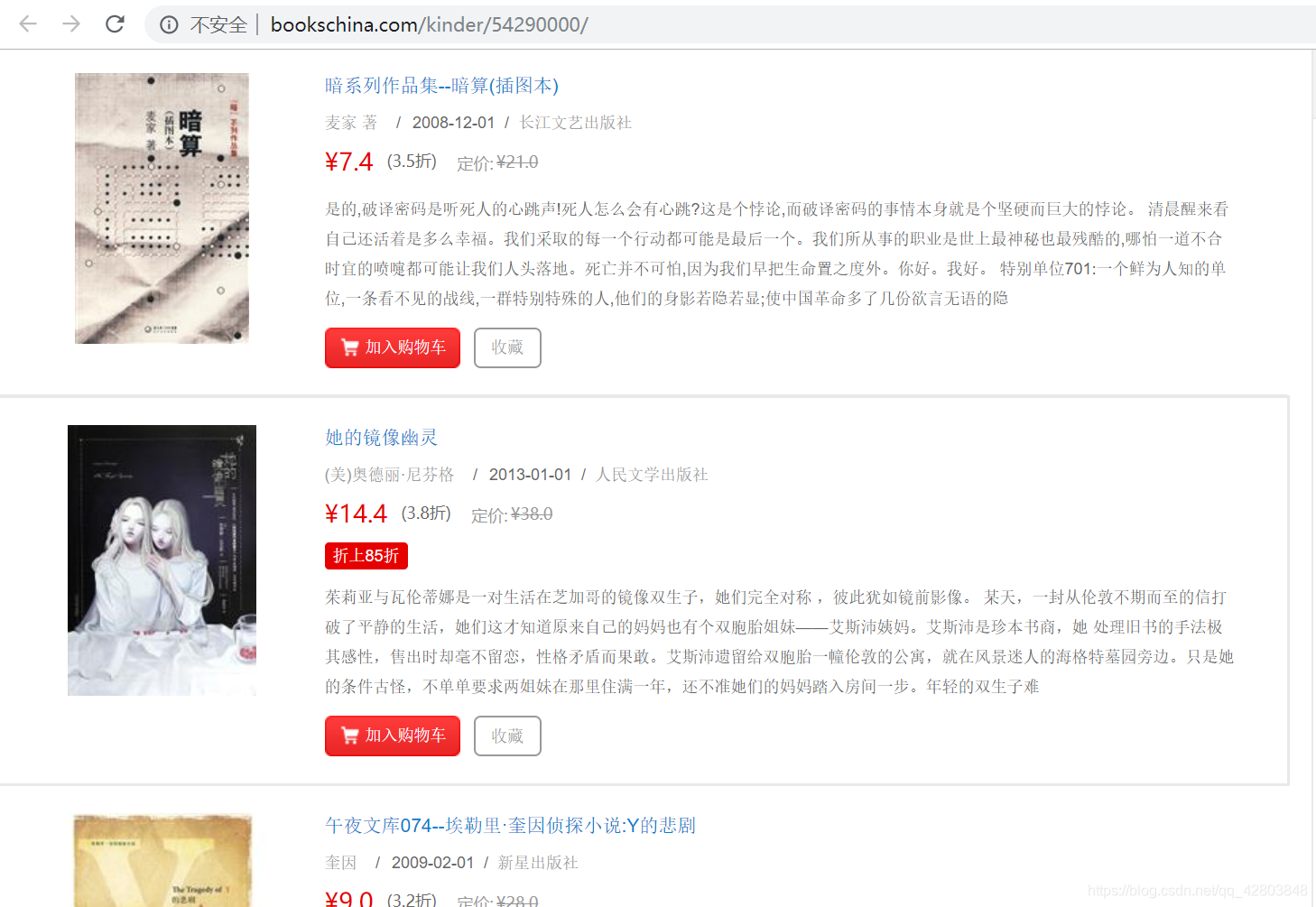

First, let's analyze the target page: http://www.bookschina.com/kinder/54290000/

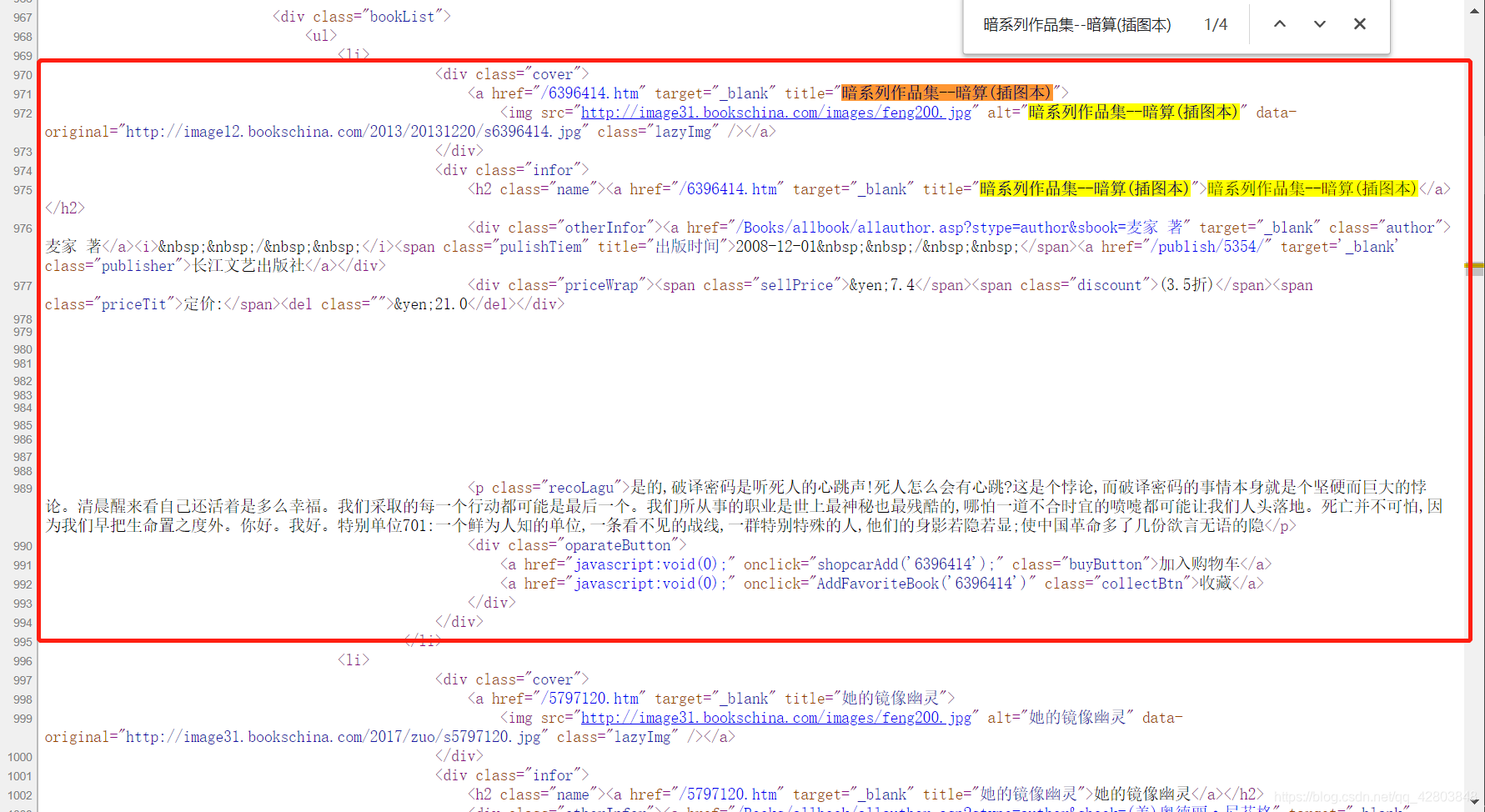

Open the page source and search for a keyword (the shortcut is Ctrl+F).

Now that we've found the block of information we need, let's go~ go~ go~
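Before writing the spider, it can help to try the XPath paths on a small fragment. The sketch below is only an illustration: it uses Python's standard `xml.etree.ElementTree` as a stand-in for Scrapy's selectors (it supports only a subset of XPath), and `SAMPLE` is a hypothetical, simplified fragment, not a copy of the real page. The `infor`, `otherInfor`, `author`, `publisher` and `sellPrice` class names come from the spider code in this post; `bookList`, `priceWrap` and the `extract_books` helper are invented for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified fragment modeled on the book block (not the real markup).
SAMPLE = """
<div class="bookList">
  <div class="infor">
    <h2><a href="/1.htm">Sample Book</a></h2>
    <div class="otherInfor">
      <a class="author">Sample Author</a>
      <a class="publisher">Sample Press</a>
      <span>2019-01-01</span>
    </div>
    <div class="priceWrap"><span class="sellPrice">12.5</span></div>
  </div>
  <div class="infor"><p>recommendation without a title</p></div>
</div>
"""


def extract_books(markup):
    """Collect one dict per book block, skipping blocks that have no title."""
    root = ET.fromstring(markup.strip())
    books = []
    for block in root.findall(".//div[@class='infor']"):
        title = block.findtext("h2/a")
        if not title:
            continue  # incomplete block, e.g. a recommendation without a title
        books.append({
            "name": title.strip(),
            "author": block.findtext("div[@class='otherInfor']/a[@class='author']", default=""),
            "press": block.findtext("div[@class='otherInfor']/a[@class='publisher']", default=""),
            "price": block.findtext("div/span[@class='sellPrice']", default=""),
        })
    return books


books = extract_books(SAMPLE)
print(books)
```

The second `infor` block has no `h2/a` title, so it is skipped, which is the same filtering idea the spider applies to incomplete recommendation entries.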

Hands-on

Step 1: create the bookstore project with the same three familiar commands:

(PS: remember to run them in cmd.exe)

scrapy startproject bookstore

cd bookstore

scrapy genspider store "bookschina.com"

Step 2: write the code

1. Write the spider module (spiders/store.py, the file created by genspider)

    # -*- coding: utf-8 -*-
    import time

    import scrapy
    from scrapy import Request, Selector

    from bookstore.items import BookstoreItem


    class StoreSpider(scrapy.Spider):
        name = 'store'
        # allowed_domains = ['bookschina.com']
        # start_urls = ['http://bookschina.com/']
        next_url = 'http://www.bookschina.com'  # site root, prepended to the next-page link
        url = 'http://www.bookschina.com/kinder/54290000/'  # initial URL
        # Disguise the crawler as a regular browser
        headers = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64) AppleWebKit/537.36 (KHTML , like Gecko) Chrome/67.0.3396.62 Safari/537.36'}

        def start_requests(self):
            yield Request(url=self.url, headers=self.headers, callback=self.parse)

        def parse(self, response):
            selector = Selector(response)
            books = selector.xpath('//div[@class="infor"]')
            for book in books:
                name = book.xpath('h2/a/text()').extract()
                if not name:
                    # Some recommended books have incomplete information; filter them out
                    continue
                author = book.xpath('div[@class="otherInfor"]/a[@class="author"]/text()').extract()
                press = book.xpath('div[@class="otherInfor"]/a[@class="publisher"]/text()').extract()
                publication_data = book.xpath('div[@class="otherInfor"]/span/text()').extract()
                price = book.xpath('div/span[@class="sellPrice"]/text()').extract()

                item = BookstoreItem()  # a fresh item for every book
                item['name'] = ''.join(name)
                item['author'] = ''.join(author)
                item['press'] = ''.join(press)
                # Strip non-breaking spaces and slashes from the publication date
                item['publication_data'] = ''.join(publication_data).replace('\xa0','').replace('/','')
                item['price'] = ''.join(price)
                yield item

            # Get the URL of the next page
            nextpage = selector.xpath('//div[@class="topPage"]/a[@class="nextPage"]/@href').extract()
            print(nextpage)  # show the link we are about to crawl
            time.sleep(3)  # pause 3 seconds to ease the load on the site's server (this blocks the crawler while it sleeps)
            if nextpage:  # if there is a next page, keep crawling
                yield Request(self.next_url + str(nextpage[0]), headers=self.headers, callback=self.parse)
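The two small string operations in `parse` (cleaning the publication date and building the next-page URL) can be tried on their own without running a crawl. This is a minimal sketch, not part of the spider: `clean_text` and `next_page_url` are hypothetical helper names, the sample inputs are invented, and `urllib.parse.urljoin` is swapped in for the plain string concatenation used above because it also copes with absolute links.

```python
from urllib.parse import urljoin


def clean_text(parts):
    """Join extracted text fragments and drop non-breaking spaces and slashes."""
    return "".join(parts).replace("\xa0", "").replace("/", "")


def next_page_url(base, hrefs):
    """Return the absolute next-page URL, or None when no link was extracted."""
    if not hrefs:
        return None
    return urljoin(base, hrefs[0])


START = "http://www.bookschina.com/kinder/54290000/"

# Invented sample values, shaped like what .extract() returns (a list of strings).
date = clean_text(["2019-01-01\xa0/\xa0"])
following = next_page_url(START, ["/kinder/page2/"])  # hypothetical href
last = next_page_url(START, [])                       # no next-page link found

print(date, following, last)
```

Returning `None` for an empty list mirrors the `if nextpage:` check in the spider: when the last page has no next link, no further request is scheduled.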

2. Write items.py

    import scrapy


    class BookstoreItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        name = scrapy.Field()              # book title
        author = scrapy.Field()            # author
        press = scrapy.Field()             # publisher
        publication_data = scrapy.Field()  # publication date
        price = scrapy.Field()             # price

3. Write pipelines.py

    import json


    class BookstorePipeline(object):
        def process_item(self, item, spider):
            # Append each item to book.json as one JSON object per line
            with open('E:/study/python/pycodes/bookstore/book.json', 'a+', encoding='utf-8') as f:
                lines = json.dumps(dict(item), ensure_ascii=False) + '\n'
                f.write(lines)
            return item
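The pipeline above reopens the output file for every item. As a possible variation, here is a minimal sketch that opens the file once per crawl using the `open_spider` and `close_spider` hooks that Scrapy calls on item pipelines. The class name `JsonLinesPipeline`, the `path` parameter with its relative default, and the `read_items` helper are assumptions made for this example, not part of the original project.

```python
import json


class JsonLinesPipeline:
    """Write each item as one JSON object per line, keeping the file open for the whole crawl."""

    def __init__(self, path="book.json"):  # hypothetical default path
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called once when the spider starts
        self.file = open(self.path, "a", encoding="utf-8")

    def close_spider(self, spider):
        # Called once when the spider finishes
        if self.file:
            self.file.close()
            self.file = None

    def process_item(self, item, spider):
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item


def read_items(path):
    """Load the JSON-lines file back into a list of dicts."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Because the class does not import Scrapy, it can be exercised with plain dicts: call `open_spider(None)`, pass a few dicts to `process_item`, call `close_spider(None)`, then check the file with `read_items`.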

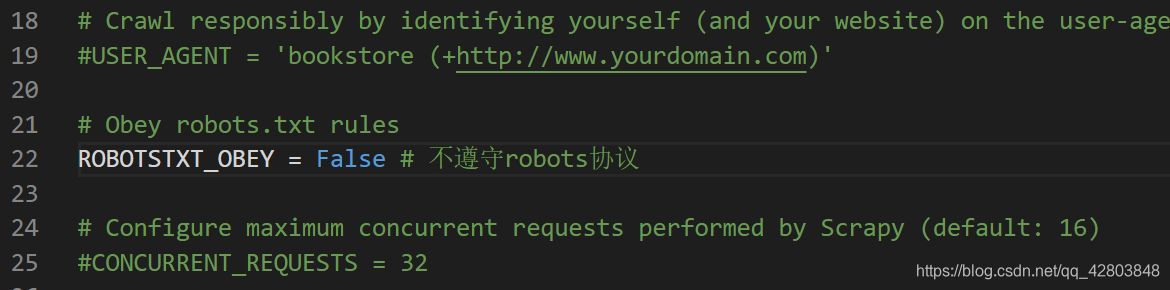

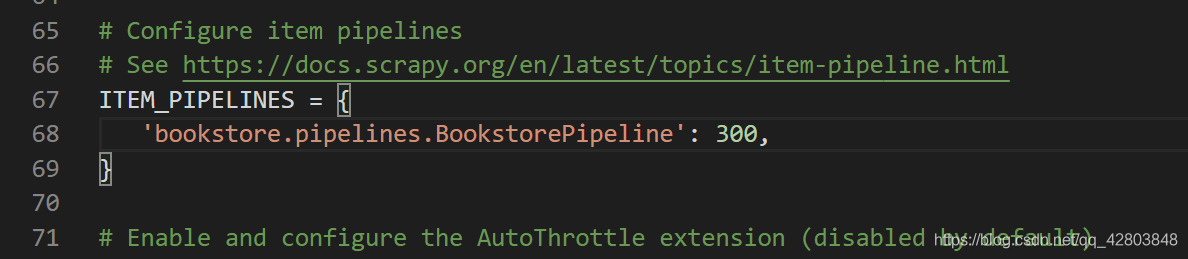

4. Configure settings.py

- Disable obeying the robots.txt protocol

- Enable ITEM_PIPELINES
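In text form, the two changes would look roughly like the fragment below. This is a sketch under assumptions: the pipeline path `bookstore.pipelines.BookstorePipeline` and the priority `300` are what `scrapy startproject bookstore` generates by default, and `DOWNLOAD_DELAY` is an optional extra that is not part of the original steps (it is Scrapy's built-in way to wait between requests).

```python
# bookstore/settings.py (only the lines discussed here)

# Do not obey robots.txt
ROBOTSTXT_OBEY = False

# Enable the item pipeline; the number is its priority (lower runs first)
ITEM_PIPELINES = {
    "bookstore.pipelines.BookstorePipeline": 300,
}

# Optional assumption: let Scrapy wait 3 seconds between requests
DOWNLOAD_DELAY = 3
```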

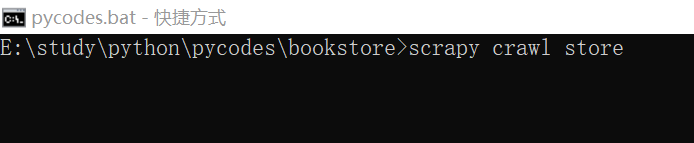

Finally, run the program: scrapy crawl store

(PS: switch to the project directory before running it)

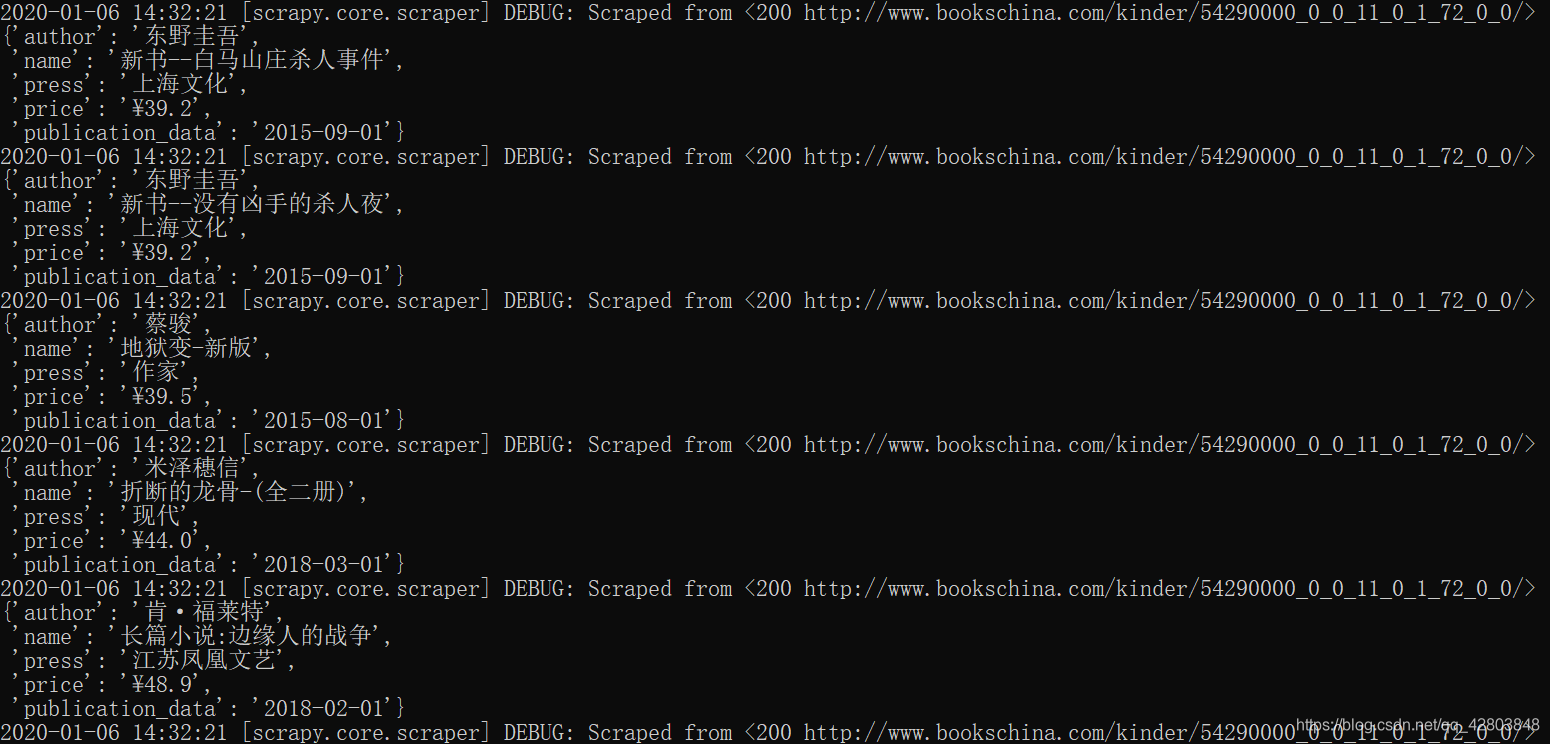

We can see the data downloading like crazy

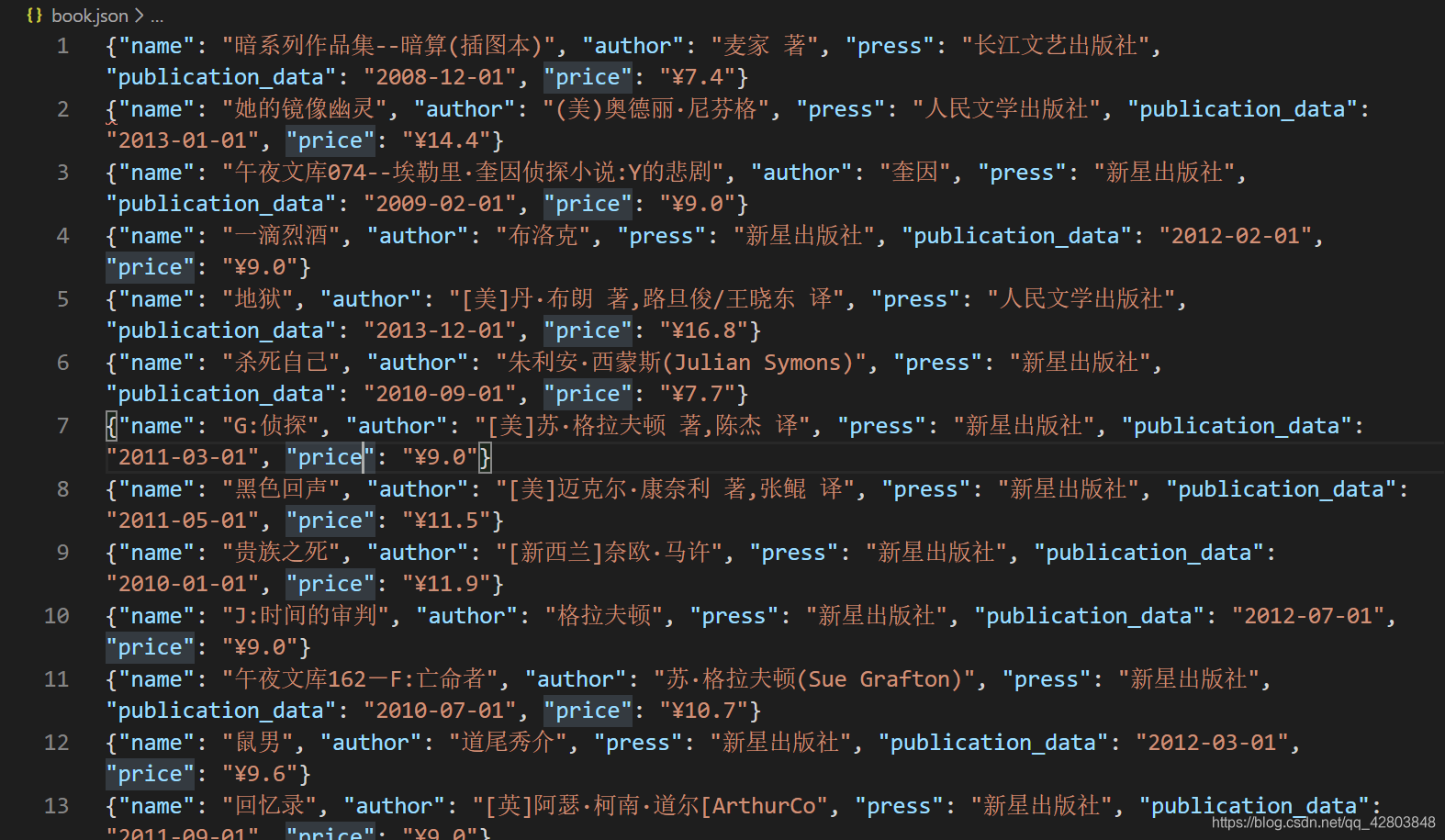

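Next, let's take a look at our results

Haha~

This is all simple, entry-level material. If you run into trouble, don't panic; take it slowly.

Source: CSDN

Author: 爬虫小彪

Link: https://blog.csdn.net/qq_42803848/article/details/103857167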