
I. Overview

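To support real-time log analysis, the processing stack chosen is Kafka + Spark Streaming + MongoDB. Data (in this demo, a line read from a file) is written to Kafka in real time; Spark Streaming reads it from Kafka at a fixed interval (1 s, 5 s, 10 s, etc.), processes it, and writes the results to MongoDB in real time. The data is also persisted to HDFS as historical data for later batch analysis with Hive.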

II. Cluster Deployment

The setup requires a Kafka cluster, a Spark cluster and MongoDB; see other documentation for the deployment steps.

III. How Spark Streaming Works

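This case uses Spark Streaming. Spark Streaming splits the continuous input stream into a sequence of small batches, one per batch interval; each batch is an RDD that Spark processes with ordinary Spark jobs.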
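To make the micro-batch idea concrete, here is a small plain-Java sketch (not Spark code; `MicroBatchSketch` and `toBatches` are hypothetical names) that assigns records to batches by arrival time, the way a fixed batch interval slices a stream. It assumes non-negative millisecond timestamps.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of micro-batching, not Spark's implementation:
// an arrival time t (ms, t >= 0) belongs to the batch starting at floor(t / interval) * interval.
final class MicroBatchSketch {
    static Map<Long, List<Long>> toBatches(List<Long> arrivalTimesMs, long batchIntervalMs) {
        if (batchIntervalMs <= 0) throw new IllegalArgumentException("interval must be positive");
        Map<Long, List<Long>> batches = new TreeMap<>(); // batch start time -> arrivals, in time order
        for (long t : arrivalTimesMs) {
            long batchStart = (t / batchIntervalMs) * batchIntervalMs;
            batches.computeIfAbsent(batchStart, k -> new ArrayList<>()).add(t);
        }
        return batches;
    }
}
```

With a 3-second interval, as in the `Seconds(3)` used later, `toBatches(Arrays.asList(100L, 2900L, 3000L, 5999L, 7200L), 3000L)` returns `{0=[100, 2900], 3000=[3000, 5999], 6000=[7200]}`: three batches, each processed as one unit.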

IV. Implementation

1. Kafka

1.1 Description

Read a random line from a file and write it to Kafka.
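The producer below calls a `readTxt` helper whose code is not shown. A minimal sketch of the same idea, assuming the file fits in memory: load the lines once, then pick one at random per message. `RandomLineSource` and `nextLine` are hypothetical names.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical stand-in for the article's readTxt helper (its real code is not shown).
// Assumes the whole file fits in memory; it is read once, not once per message.
final class RandomLineSource {
    private final List<String> lines;

    RandomLineSource(Path file) throws IOException {
        List<String> all = Files.readAllLines(file, StandardCharsets.UTF_8);
        if (all.isEmpty()) throw new IllegalArgumentException("no lines in " + file);
        this.lines = Collections.unmodifiableList(all);
    }

    // Safe to call from many producer threads: the list is never modified after construction,
    // and ThreadLocalRandom keeps a separate generator per thread.
    String nextLine() {
        return lines.get(ThreadLocalRandom.current().nextInt(lines.size()));
    }
}
```

`new RandomLineSource(Paths.get("/home/hadoop/macmilun.txt")).nextLine()` could take the place of a `readTxt("/home/hadoop/macmilun.txt")` call; loading the file once means each message costs only a random index lookup.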

1.2 Implementation

1) Create a Maven project

Add the following dependency to pom.xml:

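```xml
<!-- kafka_2.11 pulls in kafka-clients, which contains KafkaProducer; kafka-clients alone is enough for a producer -->
<dependency>
  <groupId>org.apache.kafka</groupId>
  <artifactId>kafka_2.11</artifactId>
  <version>1.0.1</version>
</dependency>
```

1.3 Kafka producer implementation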

Key classes: ProducerRecord, KafkaProducer

Set the basic Kafka parameters and create the KafkaProducer object:

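```java
// Imports: java.util.Properties, org.apache.kafka.clients.producer.{KafkaProducer, ProducerConfig},
// org.apache.kafka.common.serialization.StringSerializer (the method name is illustrative)
static KafkaProducer<String, String> createProducer() {
    Properties props = new Properties();
    props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "kafka001:9092,kafka002:9092,kafka003:9092");
    props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());   // serialize the key
    props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName()); // serialize the value
    return new KafkaProducer<>(props);
}
```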

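Core producer code:

```java
// Arguments: target topic, key, value. `date` is defined elsewhere in the author's code.
ProducerRecord<String, String> record = new ProducerRecord<>(topic, String.valueOf(date), value);
producer.send(record); // returns immediately; call producer.close() at shutdown to flush pending records
```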
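The key matters: for a record with a non-null key, Kafka's default partitioner picks the partition from a murmur2 hash of the serialized key, so all records with the same key land in the same partition. With `String.valueOf(date)` as the key, records sharing a `date` value share a partition. The plain-Java sketch below models that hash-and-modulo step; it is written after `Utils.murmur2` and `DefaultPartitioner` in the Kafka 1.x/2.x clients, but `KeyPartitionSketch` is a hypothetical class, so check it against the client library before relying on exact partition numbers.

```java
import java.nio.charset.StandardCharsets;

// Plain-Java model of keyed partitioning: partition = toPositive(murmur2(keyBytes)) % numPartitions.
// Modeled on Kafka's Utils.murmur2 / DefaultPartitioner; verify against the client library.
final class KeyPartitionSketch {
    static int murmur2(byte[] data) {
        int length = data.length;
        final int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        switch (length % 4) { // mix the remaining 1-3 bytes (intentional fall-through)
            case 3: h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2: h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1: h ^= data[length & ~3] & 0xff;
                    h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // StringSerializer encodes keys as UTF-8, so hash the UTF-8 bytes
    static int partitionFor(String key, int numPartitions) {
        int hash = murmur2(key.getBytes(StandardCharsets.UTF_8));
        return (hash & 0x7fffffff) % numPartitions; // clear the sign bit, then take the modulo
    }
}
```

For example, `partitionFor(String.valueOf(date), 5)` models which of 5 partitions a record keyed by `date` goes to; every message sent with that same key goes to the same partition.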

1.4 Multi-threaded writes

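Create a fixed-size thread pool with ThreadNum threads and submit one producer task per thread:

```java
ExecutorService executor = Executors.newFixedThreadPool(ThreadNum); // one pool thread per producer task
for (int i = 0; i < ThreadNum; i++) {
    Runnable run = new RunnerObject(i); // RunnerObject: the author's Runnable that sends the messages
    executor.execute(run);
}
executor.shutdown(); // accept no new tasks; already submitted tasks still run to completion
```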

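Each thread generates count messages:

```java
for (int i = 0; i < count; i++) {
    // readTxt: the author's helper that returns one line of the file (its code is not shown)
    String value = readTxt("/home/hadoop/macmilun.txt");
    ProducerRecord<String, String> record = new ProducerRecord<>(topic, String.valueOf(date), value);
    producer.send(record); // asynchronous; records are batched and sent in the background
}
```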
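The fragments above leave out how the tasks finish and how the producer is shared. A self-contained sketch of the same pattern, assuming a single producer shared by all tasks (the KafkaProducer documentation states that the producer is thread safe and that sharing one instance across threads is generally faster than using several). To keep it runnable without a broker, sending is abstracted as a `BiConsumer<String, String>` of (key, value); `ParallelProducerSketch` and `run` are hypothetical names.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.BiConsumer;

// Sketch: threadNum tasks each send `count` messages through one shared sender.
final class ParallelProducerSketch {
    static long run(int threadNum, int count, BiConsumer<String, String> send) throws InterruptedException {
        ExecutorService executor = Executors.newFixedThreadPool(threadNum);
        AtomicLong submitted = new AtomicLong();
        for (int t = 0; t < threadNum; t++) {
            final int taskId = t;
            executor.execute(() -> {
                for (int i = 0; i < count; i++) {
                    send.accept("task-" + taskId, "message " + i); // (key, value)
                    submitted.incrementAndGet();
                }
            });
        }
        executor.shutdown(); // no new tasks; submitted ones keep running
        if (!executor.awaitTermination(1, TimeUnit.MINUTES)) {
            executor.shutdownNow();
            throw new IllegalStateException("producer tasks did not finish in time");
        }
        return submitted.get(); // total messages handed to the sender
    }
}
```

With Kafka, `send` would be `(k, v) -> producer.send(new ProducerRecord<>(topic, k, v))`, followed by `producer.close()` once `run` returns, so that buffered records are delivered before the program exits.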

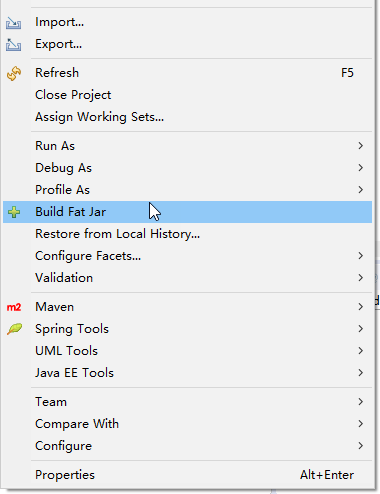

1.5 Package and upload to the server

Here the program is packaged with the Fat Jar tool, which can be downloaded online; Maven can be used for packaging as well.

Run the program:

```shell
java -jar KafkaThreads.jar
```

2. Spark

2.1 Description

Spark Streaming reads the messages from Kafka, processes them (here only a simple computation of each message's length) and writes the results to MongoDB.
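The processing step is a per-record mapping from a Kafka (key, value) pair to a document with the fields the demo writes: `key`, `record` and `length`. As a plain-Java sketch, with a `Map` standing in for the MongoDB document (`RecordToDocument` and `toDocument` are hypothetical names):

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of the per-record transformation: (key, value) -> {key, record, length}.
// A LinkedHashMap stands in for the MongoDB document and keeps the field order.
final class RecordToDocument {
    static Map<String, Object> toDocument(String key, String value) {
        Map<String, Object> doc = new LinkedHashMap<>();
        doc.put("key", key);
        doc.put("record", value);
        doc.put("length", value.length()); // UTF-16 code units, like Scala's String.length()
        return doc;
    }
}
```

`length` counts UTF-16 code units: Chinese characters in the Basic Multilingual Plane count as 1 each, while characters such as emoji count as 2. If characters are what should be counted, `value.codePointCount(0, value.length())` is the alternative.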

2.2 Create a Maven project

The pom.xml dependencies are as follows:

```xml
<dependency>
  <groupId>org.apache.spark</groupId>
  <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>
  <version>2.3.1</version>
</dependency>
<dependency>
  <groupId>org.apache.spark</groupId>
  <artifactId>spark-streaming_2.11</artifactId>
  <version>2.3.1</version>
  <scope>provided</scope>
</dependency>
<dependency>
  <groupId>org.apache.zookeeper</groupId>
  <artifactId>zookeeper</artifactId>
  <version>3.4.5</version>
  <scope>provided</scope>
</dependency>
<dependency>
  <groupId>org.mongodb</groupId>
  <artifactId>casbah-core_2.11</artifactId>
  <version>3.1.1</version>
</dependency>
<dependency>
  <groupId>org.mongodb.mongo-hadoop</groupId>
  <artifactId>mongo-hadoop-spark</artifactId>
  <version>2.0.1</version>
</dependency>
```

2.3 Interacting with Kafka

1) Description

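Spark Streaming can consume Kafka messages in two ways: receiver-based and direct. The direct approach is used here: it maps each Kafka partition to one RDD partition, needs no receiver or write-ahead log, and lets the application control the offsets itself, which is what makes the ZooKeeper bookkeeping below possible. The spark-streaming-kafka-0-10 integration used in this demo supports only the direct approach.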

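Note: the direct approach requires Spark 1.3 or later; the kafka-0-10 integration used here requires Spark 2.0+ and Kafka brokers 0.10.0+.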
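With the direct approach, each batch is defined by one offset range per Kafka partition, [fromOffset, untilOffset), and the next batch starts where the previous one ended. Below is a simplified plain-Java model of that planning step (not Spark's code; `DirectBatchPlanner`, `Range` and `plan` are hypothetical names), with an optional per-batch cap standing in for the limit that `spark.streaming.kafka.maxRatePerPartition` (messages per second per partition) imposes over one batch interval.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified model of direct-stream batch planning, not Spark's implementation.
final class DirectBatchPlanner {
    // One Kafka partition's slice of a batch: fromOffset (inclusive) to untilOffset (exclusive)
    static final class Range {
        final int partition;
        final long fromOffset;
        final long untilOffset;

        Range(int partition, long fromOffset, long untilOffset) {
            this.partition = partition;
            this.fromOffset = fromOffset;
            this.untilOffset = untilOffset;
        }

        long count() { return untilOffset - fromOffset; }

        @Override public String toString() { return partition + ":[" + fromOffset + "," + untilOffset + ")"; }
    }

    // current: next offset to read per partition; latest: end of each partition's log;
    // maxPerBatch: cap on records per partition per batch (Long.MAX_VALUE for no cap)
    static List<Range> plan(Map<Integer, Long> current, Map<Integer, Long> latest, long maxPerBatch) {
        List<Range> ranges = new ArrayList<>();
        for (Map.Entry<Integer, Long> e : new TreeMap<>(current).entrySet()) {
            long from = e.getValue();
            long end = latest.getOrDefault(e.getKey(), from);
            long until = (end - from <= maxPerBatch) ? end : from + maxPerBatch; // no overflow
            ranges.add(new Range(e.getKey(), from, Math.max(from, until)));   // never move backwards
        }
        return ranges;
    }
}
```

With current offsets `{0=10, 1=40}`, log ends `{0=25, 1=45}` and a cap of 10, `plan` returns `[0:[10,20), 1:[40,45)]`. The `untilOffset` values (20 and 45) are where the next batch begins, and they are the values the demo saves to ZooKeeper.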

2) How it works

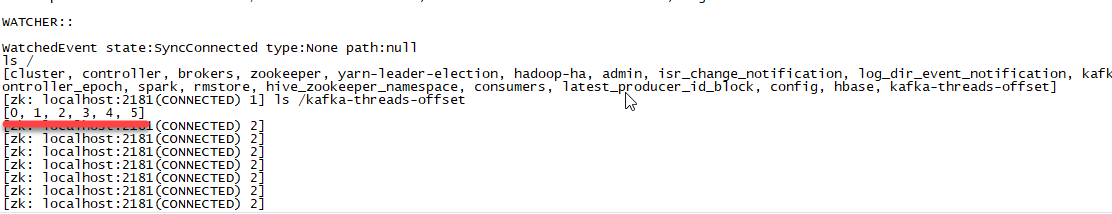

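With the direct approach, Spark does not store offsets in ZooKeeper for you; the application decides where to keep them. In this demo, the offsets of each processed batch are written to ZooKeeper nodes, so that a restarted job resumes from where it left off instead of losing data. Each Kafka partition corresponds to one child node under the topic's parent znode in ZooKeeper.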

The figure shows that this topic has 5 partitions.
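The znode layout used by the demo is: parent `/kafka-threads-offset`, one child per partition named by the partition number, each holding `<topic>:<untilOffset>`. A plain-Java sketch of that encoding (`OffsetZnodeCodec` is a hypothetical name; the demo builds and splits these strings inline in Scala). It splits on the last `:`, which is safe because Kafka topic names may only contain ASCII letters, digits, `.`, `_` and `-`.

```java
import java.nio.charset.StandardCharsets;
import java.util.AbstractMap;
import java.util.Map;

// Sketch of the demo's znode layout: one child per partition, data "<topic>:<untilOffset>".
final class OffsetZnodeCodec {
    // Child znode for one partition, e.g. /kafka-threads-offset/3
    static String childPath(String parent, int partition) {
        return parent + "/" + partition;
    }

    // Node data as UTF-8 bytes
    static byte[] encode(String topic, long untilOffset) {
        return (topic + ":" + untilOffset).getBytes(StandardCharsets.UTF_8);
    }

    // Parses node data back into (topic, offset); the last ':' separates the two parts
    static Map.Entry<String, Long> decode(byte[] data) {
        String s = new String(data, StandardCharsets.UTF_8);
        int sep = s.lastIndexOf(':');
        if (sep <= 0) throw new IllegalArgumentException("expected <topic>:<offset>, got: " + s);
        return new AbstractMap.SimpleImmutableEntry<>(s.substring(0, sep), Long.parseLong(s.substring(sep + 1)));
    }
}
```

For the 5-partition topic above, the children would be `/kafka-threads-offset/0` through `/kafka-threads-offset/4`, each decoded into the topic name and the offset to resume from.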

3) Core code (the complete code is appended at the end)

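Imports

IDE auto-import does not always pick the intended class and may import one from a different package, so compare against the following imports (partial list):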

```scala
import org.apache.spark.streaming.kafka010.KafkaUtils
import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent
import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.streaming.Seconds
import org.apache.spark.streaming.StreamingContext
import org.apache.kafka.common.serialization.StringDeserializer
import org.apache.spark.streaming.dstream.InputDStream
import org.apache.spark.streaming.kafka010.HasOffsetRanges
import org.apache.spark.streaming.kafka010.OffsetRange
```

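Initialize the Spark objects. The batch interval is set when the StreamingContext is created: Seconds(3) means each batch contains the data received during one 3-second interval.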

```scala
val conf = new SparkConf().setMaster("spark://hadoop001:7077").setAppName("KafkaThreads")
val sc = new SparkContext(conf)
val ssc = new StreamingContext(sc, Seconds(3)) // batch interval: 3 seconds
```

Set the Kafka parameters:

```scala
val kafkaParams = Map[String, Object](
  "bootstrap.servers" -> "kafka001:9092,kafka002:9092,kafka003:9092",
  "key.deserializer" -> classOf[StringDeserializer],
  "value.deserializer" -> classOf[StringDeserializer],
  "group.id" -> "test",
  "auto.offset.reset" -> "latest",                   // used when no starting offset is known
  "enable.auto.commit" -> (false: java.lang.Boolean)) // the job saves offsets to ZooKeeper itself
```

```scala
// Create the parent znode for this topic's offsets if it does not exist yet
if (!isNodeExists(znodeFather)) {
  zk.create(znodeFather, null, Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT)
}
```

Check whether ZooKeeper already holds offsets for the Kafka partitions. If it does, read each partition's offset and resume reading that partition from there:

```scala
// Each child znode is one partition: name = partition number, data = "<topic>:<offset>"
// (iterating a Java list with asScala needs import scala.collection.JavaConverters._)
val children = zk.getChildren(znodeFather, false)
var newOffsets: Map[TopicPartition, Long] = Map()

val kafkaStreamRecords = if (!children.isEmpty()) {
  // Saved offsets exist: resume every partition from its saved offset
  for (child <- children.asScala) {
    val nodeData = new String(zk.getData(s"$znodeFather/$child", false, null), "UTF-8").split(":")
    newOffsets += (new TopicPartition(nodeData(0), child.toInt) -> nodeData(1).toLong)
  }
  KafkaUtils.createDirectStream[String, String](
    ssc, PreferConsistent, Subscribe[String, String](topics, kafkaParams, newOffsets))
} else {
  // No saved offsets: this is the first run, so start every partition from its earliest offset
  KafkaUtils.createDirectStream[String, String](
    ssc, PreferConsistent,
    Subscribe[String, String](topics, kafkaParams + ("auto.offset.reset" -> "earliest")))
}
```
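When explicit offsets are passed to `Subscribe`, they apply only to the partitions they list; per the kafka-0-10 API documentation, any other partition starts from the group's committed offset if there is one, otherwise from `auto.offset.reset`. The plain-Java model below (`StartOffsetResolver` and its parameters are hypothetical; out-of-range offsets are not modeled) shows what that means for a 5-partition topic if only partition 0 were given an explicit starting offset.

```java
import java.util.Map;
import java.util.TreeMap;

// Plain-Java model of how the starting offset is chosen per partition (hypothetical names,
// out-of-range handling not modeled): explicit offset, else committed offset, else the reset policy.
final class StartOffsetResolver {
    enum ResetPolicy { EARLIEST, LATEST }

    static Map<Integer, Long> resolve(int partitions, Map<Integer, Long> explicit, Map<Integer, Long> committed,
                                      long[] earliest, long[] latest, ResetPolicy policy) {
        Map<Integer, Long> start = new TreeMap<>();
        for (int p = 0; p < partitions; p++) {
            if (explicit.containsKey(p)) {
                start.put(p, explicit.get(p));   // e.g. offsets read back from ZooKeeper
            } else if (committed.containsKey(p)) {
                start.put(p, committed.get(p));  // the consumer group's committed offset
            } else {
                start.put(p, policy == ResetPolicy.EARLIEST ? earliest[p] : latest[p]); // auto.offset.reset
            }
        }
        return start;
    }
}
```

With explicit offsets `{0=0}`, nothing committed, logs starting at 0 and ending at `{100, 200, 300, 400, 500}`, `LATEST` yields `{0=0, 1=200, 2=300, 3=400, 4=500}`, so partitions 1 to 4 skip their existing data, while `EARLIEST` yields 0 for every partition.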

```scala
// Capture each batch's offset ranges, then save them per partition:
// update the partition's child znode if it exists, otherwise create it
var offsetRanges = Array[OffsetRange]()

kafkaStreamRecords.transform { consumerRecords =>
  // transform's function runs on the driver once per batch
  offsetRanges = consumerRecords.asInstanceOf[HasOffsetRanges].offsetRanges
  consumerRecords
}.foreachRDD { partitions =>
  // ... write the batch to MongoDB here first (next section), so that a failure
  // replays the batch instead of skipping records whose offsets were already saved
  for (o <- offsetRanges) {
    val path = s"$znodeFather/${o.partition}"
    val data = s"${o.topic}:${o.untilOffset}".getBytes("UTF-8")
    if (isNodeExists(path)) zk.setData(path, data, -1)
    else zk.create(path, data, Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT)
  }
}
```
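Where the offset update sits relative to the MongoDB write decides what a failure costs. The toy simulation below (plain Java, not Kafka or Spark code; `OffsetOrderingSketch` is a hypothetical name) compares the two orders, using a list as the sink, an `int` as the saved offset, and one injected failure during the first batch.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model: `sink` stands in for MongoDB, `savedOffset` for the ZooKeeper node.
// The first batch fails between its two steps; the job then restarts from savedOffset.
final class OffsetOrderingSketch {
    static List<String> simulate(List<String> log, int batchSize, boolean saveOffsetFirst) {
        List<String> sink = new ArrayList<>();
        int savedOffset = 0;     // where a restarted job resumes
        boolean failed = false;  // only the first batch fails
        while (savedOffset < log.size()) {
            int from = savedOffset;
            int until = Math.min(log.size(), from + batchSize);
            boolean failNow = !failed;
            failed = true;
            if (saveOffsetFirst) {
                savedOffset = until;                    // offset saved before the write
                if (failNow) continue;                  // crash before writing: batch is skipped
                sink.addAll(log.subList(from, until));
            } else {
                sink.addAll(log.subList(from, until));  // write first
                if (failNow) continue;                  // crash before saving: batch is replayed
                savedOffset = until;
            }
        }
        return sink;
    }
}
```

`simulate(Arrays.asList("a", "b", "c", "d"), 2, true)` returns `[c, d]` (the first batch is lost), while `simulate(..., false)` returns `[a, b, a, b, c, d]` (nothing lost, the first batch written twice). Saving offsets after the write therefore gives at-least-once delivery; duplicates can then be absorbed by making the write idempotent.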

2.4 Write the data to MongoDB

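1) Imports. Note: import exactly the classes below; in particular, MongoClient must be Casbah's com.mongodb.casbah.MongoClient, not the Java driver's com.mongodb.MongoClient.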

```scala
import com.mongodb.casbah.commons.MongoDBObject
import com.mongodb.DBObject
import com.mongodb.casbah.MongoClient
```

2) Initialize the connection

```scala
val mongoCli = MongoClient("192.168.11.7", 27017)
val mongoDB = mongoCli.getDB("hadoop")              // database name; the complete code uses "mydb"
val mongoTB = mongoDB.getCollection(collectionName) // target collection
```

3) Wrap the data and write it to the database

Wrap each record in a DBObject and write it to MongoDB (insert is the helper defined in the complete code):

```scala
partitions.foreach { record =>
  // Runs on the executors: one document per Kafka record
  val records: DBObject = MongoDBObject(
    "key" -> record.key(), "record" -> record.value(), "length" -> record.value().length())
  insert(records, "mytest3") // mytest3 is the target collection
}
```

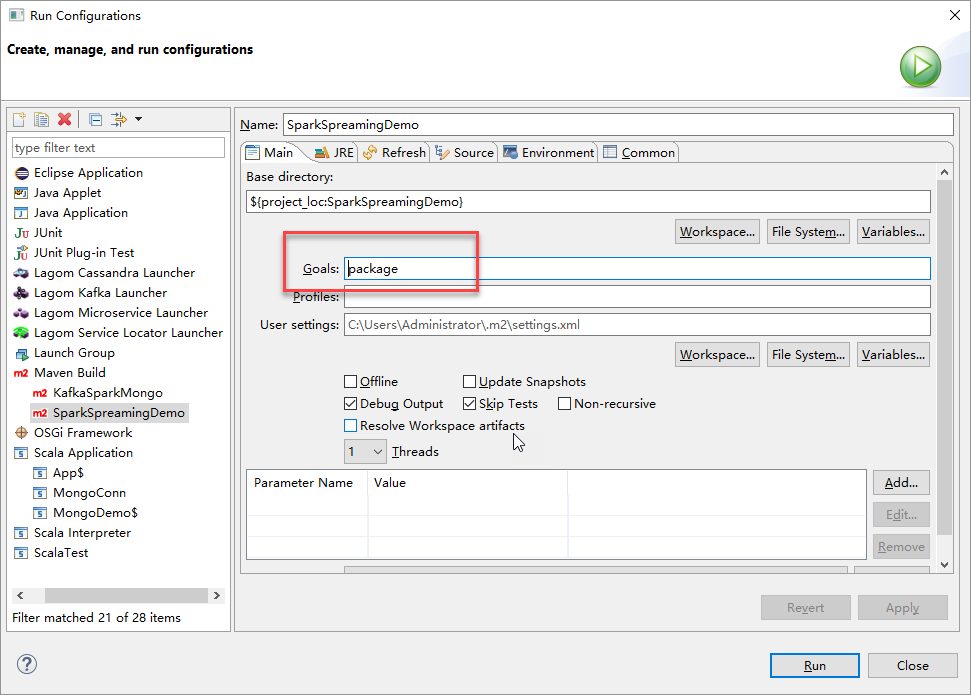

2.5 Package the program

Package with Maven: right-click the project -> [Run As] -> [Run Configurations], as shown in the figure below:

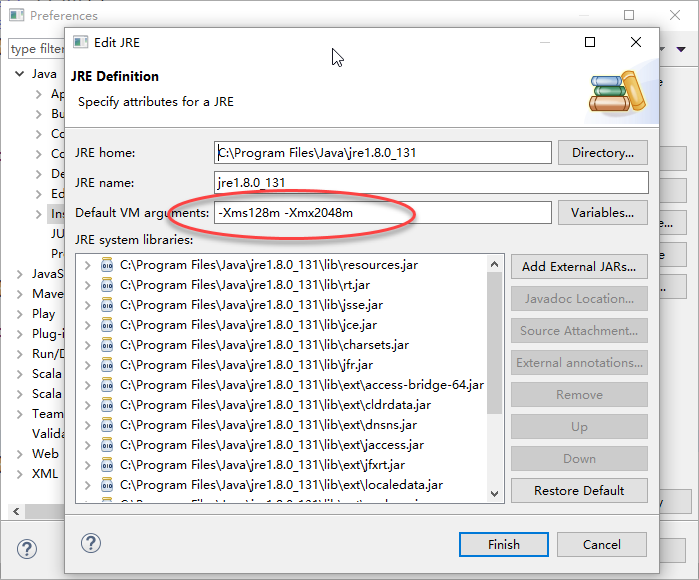

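If packaging fails with an out-of-memory error, go to [Window] -> [Preferences] -> [Java] -> [Installed JREs], select the JRE on the right, click Edit and increase the JVM memory size, as shown in the figure.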

Upload the jar to the server, then copy it into the corresponding Spark container with docker cp.

2.6 Run the jar

```shell
cd /.../spark/bin
./spark-submit --class SparkDemo.sparkdemo.PersistKafkaSparkZk --executor-memory 2G /home/hadoop/sparkdemo-0.0.1-SNAPSHOT.jar
```

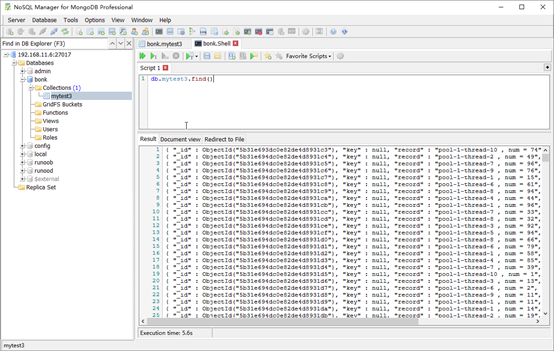

2.7 Connect to MongoDB and check the data

Appendix: complete demo code for reading Kafka with Spark Streaming

The PersistKafkaSparkZk object

```scala
import scala.collection.JavaConverters._

import org.apache.kafka.common.TopicPartition
import org.apache.kafka.common.serialization.StringDeserializer
import org.apache.log4j.{Level, Logger}
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.streaming.kafka010.{HasOffsetRanges, KafkaUtils, OffsetRange}
import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe
import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent
import org.apache.zookeeper._
import org.apache.zookeeper.ZooDefs.Ids
import com.mongodb.DBObject
import com.mongodb.casbah.MongoClient
import com.mongodb.casbah.commons.MongoDBObject

object PersistKafkaSparkZk {

  val znodeFather = "/kafka-threads-offset" // parent znode; one child per Kafka partition
  val topics = Array("kafka-threads")
  // Defined in an object, so each JVM (driver and every executor) creates its own client on first use
  val mongoCli = MongoClient("192.168.11.7", 27017)
  var zk: ZooKeeper = _

  Logger.getLogger("org").setLevel(Level.ERROR)

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("spark://hadoop001:7077").setAppName("KafkaThreads")
    val sc = new SparkContext(conf)
    val ssc = new StreamingContext(sc, Seconds(3)) // 3-second batch interval

    val kafkaParams = Map[String, Object](
      "bootstrap.servers" -> "kafka001:9092,kafka002:9092,kafka003:9092",
      "key.deserializer" -> classOf[StringDeserializer],
      "value.deserializer" -> classOf[StringDeserializer],
      "group.id" -> "test",
      "auto.offset.reset" -> "latest",
      "enable.auto.commit" -> (false: java.lang.Boolean))

    // No-op watcher: this job does not react to ZooKeeper events
    zk = new ZooKeeper("kafka001:2181", 5000, new Watcher() {
      override def process(event: WatchedEvent): Unit = {}
    })

    if (!isNodeExists(znodeFather)) {
      zk.create(znodeFather, null, Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT)
    }

    // Child znode name = partition number, data = "<topic>:<untilOffset>"
    val newOffsets: Map[TopicPartition, Long] =
      zk.getChildren(znodeFather, false).asScala.map { child =>
        val nodeData = new String(zk.getData(s"$znodeFather/$child", false, null), "UTF-8").split(":")
        new TopicPartition(nodeData(0), child.toInt) -> nodeData(1).toLong
      }.toMap

    val kafkaStreamRecords =
      if (newOffsets.nonEmpty) {
        // Resume every partition from the offset saved in ZooKeeper
        KafkaUtils.createDirectStream[String, String](
          ssc, PreferConsistent, Subscribe[String, String](topics, kafkaParams, newOffsets))
      } else {
        // First run: no saved offsets, so start every partition from its earliest available offset
        KafkaUtils.createDirectStream[String, String](
          ssc, PreferConsistent,
          Subscribe[String, String](topics, kafkaParams + ("auto.offset.reset" -> "earliest")))
      }

    var offsetRanges = Array[OffsetRange]()

    kafkaStreamRecords.transform { consumerRecords =>
      // transform's function runs on the driver, so this captures the current batch's offset ranges
      offsetRanges = consumerRecords.asInstanceOf[HasOffsetRanges].offsetRanges
      consumerRecords
    }.foreachRDD { partitions =>
      println("partitions.isEmpty() = " + partitions.isEmpty())

      // Runs on the executors: one document per Kafka record
      partitions.foreach { record =>
        val records: DBObject = MongoDBObject(
          "key" -> record.key(), "record" -> record.value(), "length" -> record.value().length())
        insert(records, "mytest3")
      }

      // Save offsets only after the batch is written: a failure replays the batch instead of skipping it
      for (o <- offsetRanges) {
        val path = s"$znodeFather/${o.partition}"
        val data = s"${o.topic}:${o.untilOffset}".getBytes("UTF-8")
        if (isNodeExists(path)) zk.setData(path, data, -1)
        else zk.create(path, data, Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT)
      }
    }

    ssc.start()
    ssc.awaitTermination()
  }

  def insert(row: DBObject, collectionName: String): Unit = {
    val mongoDB = mongoCli.getDB("mydb")
    val mongoTB = mongoDB.getCollection(collectionName)
    mongoTB.insert(row)
  }

  def isNodeExists(znode: String): Boolean = zk.exists(znode, false) != null
}
```

ZooKeeper utility object Zk (a standalone helper; PersistKafkaSparkZk above does not use it)

```scala
import org.apache.zookeeper.{CreateMode, WatchedEvent, Watcher, ZooKeeper}
import org.apache.zookeeper.ZooDefs.Ids

object Zk {
  /* ZooKeeper connection settings */
  val SESSION_TIMEOUT = 5000
  var zk: ZooKeeper = _

  // No-op watcher: this utility does not react to ZooKeeper events
  def watcher = new Watcher() {
    override def process(event: WatchedEvent): Unit = {}
  }

  // Opens a new session; the public methods below close it when done so sessions are not leaked
  def init(): Unit = {
    zk = new ZooKeeper("kafka001:2181", SESSION_TIMEOUT, watcher)
  }

  // Both helpers assume init() has been called
  def znodeCreate(znode: String, data: String): Unit =
    zk.create(s"/$znode", data.getBytes("UTF-8"), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT)

  def znodeDataSet(znode: String, data: String): Unit =
    zk.setData(s"/$znode", data.getBytes("UTF-8"), -1)

  // Returns the comma-separated node data, or an empty array if the node cannot be read
  def znodeDataGet(znode: String): Array[String] = {
    init()
    try new String(zk.getData(s"/$znode", false, null), "UTF-8").split(",")
    catch { case _: Exception => Array.empty[String] }
    finally zk.close()
  }

  def znodeIsExists(znode: String): Boolean = {
    init()
    try zk.exists(s"/$znode", false) != null
    finally zk.close()
  }

  // Creates the node with the data if it is absent, otherwise overwrites its data
  def offsetWork(znode: String, data: String): Unit = {
    init()
    try {
      if (zk.exists(s"/$znode", false) == null) znodeCreate(znode, data)
      else znodeDataSet(znode, data)
    } finally zk.close()
  }
}
```

Complete pom.xml

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>SparkDemo</groupId>
  <artifactId>sparkdemo</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <name>${project.artifactId}</name>
  <description>My wonderful scala app</description>
  <inceptionYear>2018</inceptionYear>
  <licenses>
    <license>
      <name>My License</name>
      <url>http://....</url>
      <distribution>repo</distribution>
    </license>
  </licenses>

  <properties>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <encoding>UTF-8</encoding>
    <scala.version>2.11.11</scala.version>
    <scala.compat.version>2.11</scala.compat.version>
    <spec2.version>4.2.0</spec2.version>
  </properties>

  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>

    <!-- Test -->
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.12</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.scalatest</groupId>
      <artifactId>scalatest_${scala.compat.version}</artifactId>
      <version>3.0.5</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.specs2</groupId>
      <artifactId>specs2-core_${scala.compat.version}</artifactId>
      <version>${spec2.version}</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.specs2</groupId>
      <artifactId>specs2-junit_${scala.compat.version}</artifactId>
      <version>${spec2.version}</version>
      <scope>test</scope>
    </dependency>

    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>
      <version>2.3.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.11</artifactId>
      <version>2.3.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.zookeeper</groupId>
      <artifactId>zookeeper</artifactId>
      <version>3.4.5</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.mongodb</groupId>
      <artifactId>casbah-core_2.11</artifactId>
      <version>3.1.1</version>
    </dependency>
    <dependency>
      <groupId>org.mongodb.mongo-hadoop</groupId>
      <artifactId>mongo-hadoop-spark</artifactId>
      <version>2.0.1</version>
    </dependency>
  </dependencies>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <!-- see http://davidb.github.com/scala-maven-plugin -->
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>3.3.2</version>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
            <configuration>
              <args>
                <arg>-dependencyfile</arg>
                <arg>${project.build.directory}/.scala_dependencies</arg>
              </args>
            </configuration>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>2.21.0</version>
        <configuration>
          <argLine>-Xmx1024m -XX:MaxPermSize=1024m -noverify</argLine>
          <!-- Tests will be run with scalatest-maven-plugin instead -->
          <skipTests>true</skipTests>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.scalatest</groupId>
        <artifactId>scalatest-maven-plugin</artifactId>
        <version>2.0.0</version>
        <configuration>
          <reportsDirectory>${project.build.directory}/surefire-reports</reportsDirectory>
          <junitxml>.</junitxml>
          <filereports>TestSuiteReport.txt</filereports>
          <!-- Comma separated list of JUnit test class names to execute -->
          <jUnitClasses>samples.AppTest</jUnitClasses>
        </configuration>
        <executions>
          <execution>
            <id>test</id>
            <goals>
              <goal>test</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <!-- Bundle the dependency jars into the application jar; a single shade declaration,
           since Maven does not allow the same plugin to be declared twice.
           Signature files are stripped because they would invalidate the merged jar. -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>2.3</version>
        <executions>
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <filters>
                <filter>
                  <artifact>*:*</artifact>
                  <excludes>
                    <exclude>META-INF/*.SF</exclude>
                    <exclude>META-INF/*.DSA</exclude>
                    <exclude>META-INF/*.RSA</exclude>
                  </excludes>
                </filter>
              </filters>
              <transformers>
                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                  <!-- spark-submit --class takes precedence; set here to the class used in section 2.6 -->
                  <mainClass>SparkDemo.sparkdemo.PersistKafkaSparkZk</mainClass>
                </transformer>
              </transformers>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>
```

Source: oschina

Link: https://my.oschina.net/snowpipe/blog/3144501