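0. Prepare the base environment

Prepare three machines with the IP addresses 192.168.213.150, 192.168.213.151, and 192.168.213.152. vm1 (192.168.213.150) runs the Spark master and the other two run workers. Steps 1 to 3 are carried out on all three machines, with Spark at the same path on each.

Operating system: CentOS Linux release 7.5.1804 (Core)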

1. Install the Java environment

# tar -zxvf jdk-8u231-linux-x64.tar.gz -C /usr/local/src

# vim /etc/profile.d/java.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_231

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH

export PATH=${JAVA_HOME}/bin:$PATH

# source /etc/profile

Configure the Java environment variables as shown above. Otherwise, starting the cluster later fails with the following Spark error:

JAVA_HOME is not set
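That error is raised when Spark's launcher cannot locate a JVM. The sketch below approximates the lookup as we understand it from `bin/spark-class` (JAVA_HOME first, then `java` on PATH); `find_java` is a hypothetical helper, not part of Spark.

```shell
#!/usr/bin/env bash
# Sketch of the JVM lookup behind the "JAVA_HOME is not set" error.
# find_java is a hypothetical helper; it approximates, not copies, bin/spark-class.
find_java() {
  if [ -n "${JAVA_HOME:-}" ]; then
    # JAVA_HOME wins when set: the java binary is expected under it
    echo "${JAVA_HOME}/bin/java"
  elif command -v java >/dev/null 2>&1; then
    # Otherwise fall back to whatever java is on PATH
    command -v java
  else
    # Neither source is available: this is the failure described above
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
}
```

On vm1, `ssh 192.168.213.151 'echo $JAVA_HOME'` shows what a non-interactive SSH session on that worker sees, which is the kind of session `start-slaves.sh` uses there; an empty result points to this error.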

2. Install Scala

https://www.scala-lang.org/download/

# wget https://downloads.lightbend.com/scala/2.13.1/scala-2.13.1.rpm

# rpm -ivh scala-2.13.1.rpm

# vim /etc/profile.d/scala.sh

export SCALA_HOME=/usr/share/scala

export PATH=${SCALA_HOME}/bin:$PATH

# source /etc/profile

Check the environment variables:

# echo $SCALA_HOME

# scala -version
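The Spark package installed in step 3 is built for Scala 2.12 (note the `_2.12` in the examples jar name), whereas the RPM above installs Scala 2.13.1. Spark ships its own Scala runtime, so this does not stop Spark itself from running, but code compiled with this scalac would not be binary compatible with that Spark build. A small sketch for comparing the two binary versions; both helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing Scala binary versions (major.minor).
# Compiled Scala code must match the binary version the Spark build targets.

# "2.13.1" -> "2.13"
scala_binary_version() {
  echo "$1" | cut -d. -f1,2
}

# ".../spark-examples_2.12-3.0.0-preview.jar" -> "2.12"
# Assumes the usual <artifact>_<scala-version>-<spark-version>.jar naming.
spark_scala_version() {
  local name
  name=$(basename "$1")
  name=${name#*_}       # drop "spark-examples_"
  echo "${name%%-*}"    # keep everything before the first "-"
}
```

With the versions used in this post, the first helper returns 2.13 for 2.13.1 and the second returns 2.12 for the examples jar, so the two do not match.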

3. Install Spark

# wget http://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.0.0-preview/spark-3.0.0-preview-bin-hadoop2.7.tgz

# tar xf spark-3.0.0-preview-bin-hadoop2.7.tgz -C /usr/share

# ln -s /usr/share/spark-3.0.0-preview-bin-hadoop2.7 /usr/share/spark

# vim /etc/profile.d/spark.sh

export SPARK_HOME=/usr/share/spark

export PATH=${SPARK_HOME}/bin:$PATH

# source /etc/profile

Check the environment variables:

# echo $SPARK_HOME

# spark-shell --version
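A quick sanity check after sourcing `spark.sh`, assuming the layout of the binary tarball (launchers under `bin/` and `sbin/`). `check_spark_home` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check SPARK_HOME (or an explicit path).
check_spark_home() {
  local home="${1:-${SPARK_HOME:-}}"
  if [ -z "$home" ]; then
    echo "SPARK_HOME is not set" >&2
    return 1
  fi
  # The launcher scripts used in this post must exist and be executable
  local f
  for f in bin/spark-submit bin/spark-shell sbin/start-master.sh sbin/start-slaves.sh; do
    if [ ! -x "$home/$f" ]; then
      echo "missing or not executable: $home/$f" >&2
      return 1
    fi
  done
  # spark-submit and spark-shell should resolve through $SPARK_HOME/bin on PATH
  case ":$PATH:" in
    *":$home/bin:"*) ;;
    *) echo "$home/bin is not on PATH" >&2; return 1 ;;
  esac
  echo "ok: $home"
}
```

Called without an argument it checks `$SPARK_HOME`. Pointing SPARK_HOME at the `/usr/share/spark` symlink rather than the versioned directory means a later upgrade only needs the symlink re-pointed.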

Test with the bundled SparkPi example:

# spark-submit --class org.apache.spark.examples.SparkPi --master "local[*]" /usr/share/spark/examples/jars/spark-examples_2.12-3.0.0-preview.jar
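SparkPi prints its result on stdout as a line starting with `Pi is roughly`, while Spark's log output goes to stderr. A sketch that checks the result is plausible; `check_pi_output` and its 3.0 to 3.3 bound are our own assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads spark-submit's stdout and checks the SparkPi result.
# The 3.0-3.3 bound is an arbitrary sanity check, not a Spark setting.
check_pi_output() {
  local line
  # First line of the form "Pi is roughly <value>"
  line=$(grep -m1 '^Pi is roughly ') || {
    echo "no 'Pi is roughly' line found" >&2
    return 1
  }
  local value="${line#Pi is roughly }"
  # bash has no floating-point comparison, so let awk do it
  if ! awk -v v="$value" 'BEGIN { exit !(v > 3.0 && v < 3.3) }'; then
    echo "implausible value: $value" >&2
    return 1
  fi
  echo "$value"
}
```

For example, append `2>/dev/null | check_pi_output` to the spark-submit test command above.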

4. Build the cluster

On vm1 (the master):

# vi /usr/share/spark/conf/spark-env.sh

Add the following content:

#!/usr/bin/env bash

export SPARK_MASTER_HOST=192.168.213.150

# vi /usr/share/spark/conf/slaves

Add the following content (one worker per line):

192.168.213.151

192.168.213.152
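The two files above can also be generated non-interactively, which helps when the worker list changes. A minimal sketch; `write_cluster_conf` is a hypothetical helper, and it overwrites any existing `spark-env.sh` and `slaves` in the target directory:

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes the two files from this step into a conf directory.
# Usage: write_cluster_conf <conf_dir> <master_ip> <worker_ip>...
write_cluster_conf() {
  if [ "$#" -lt 3 ]; then
    echo "usage: write_cluster_conf <conf_dir> <master_ip> <worker_ip>..." >&2
    return 1
  fi
  local conf_dir=$1 master=$2
  shift 2
  mkdir -p "$conf_dir"
  # spark-env.sh: sourced by the sbin scripts; pins the address the master binds to
  printf '%s\n' '#!/usr/bin/env bash' "export SPARK_MASTER_HOST=$master" \
    > "$conf_dir/spark-env.sh"
  # slaves: one worker host per line; start-slaves.sh logs into each over SSH
  printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

On vm1: `write_cluster_conf /usr/share/spark/conf 192.168.213.150 192.168.213.151 192.168.213.152`.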

5. Passwordless SSH setup

Set up passwordless SSH login among vm1, vm2, and vm3.

For the procedure, see my other blog post:

https://my.oschina.net/guiguketang/blog/730182

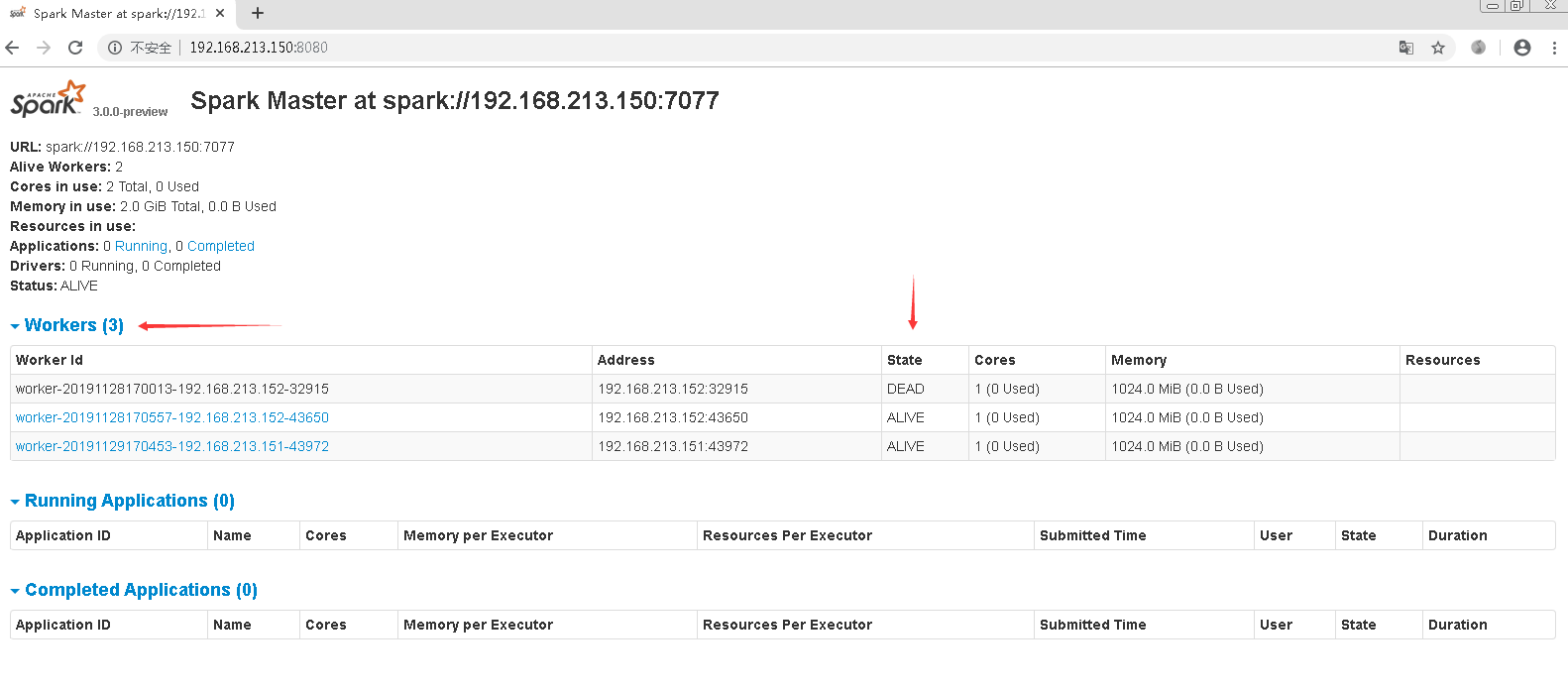
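At minimum, `start-slaves.sh` on vm1 must be able to SSH into every host in `conf/slaves` without a password prompt. A minimal sketch of that direction, assuming OpenSSH's `ssh-keygen` and `ssh-copy-id` and root logins; `setup_ssh_trust` and `run` are hypothetical helpers, and the linked post remains the reference procedure:

```shell
#!/usr/bin/env bash
# Sketch, assuming OpenSSH's ssh-keygen/ssh-copy-id and root logins as in this post.
# run and setup_ssh_trust are hypothetical helpers; DRY_RUN=1 prints commands only.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

setup_ssh_trust() {
  local key="$HOME/.ssh/id_rsa" host
  # Create a key pair once, with an empty passphrase so scripts can log in unattended
  if [ ! -f "$key" ]; then
    run mkdir -m 700 -p "$HOME/.ssh"
    run ssh-keygen -t rsa -N '' -f "$key"
  fi
  # Install the public key on each host given (asks for that host's password once)
  for host in "$@"; do
    run ssh-copy-id "root@$host"
  done
}
```

Preview on vm1 with `DRY_RUN=1 setup_ssh_trust 192.168.213.151 192.168.213.152`, then rerun without `DRY_RUN`. For trust in every direction among vm1, vm2, and vm3, the same would be repeated on each machine with the other hosts as arguments.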

6. Start the cluster

On vm1, start the master and then the workers listed in conf/slaves:

# sh /usr/share/spark/sbin/start-master.sh

# sh /usr/share/spark/sbin/start-slaves.sh

Log location:

/usr/share/spark/logs

Open the web console at:

http://192.168.213.150:8080/
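Besides the web console, the master log shows whether the workers actually registered. A sketch that counts distinct registered worker hosts, assuming the default log file naming of `sbin/spark-daemon.sh` and a master log line containing `Registering worker <host>:<port>`; `count_registered_workers` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count distinct worker hosts the master has registered.
# Assumes the default log naming
# (spark-<user>-org.apache.spark.deploy.master.Master-<n>-<hostname>.out)
# and master log lines containing "Registering worker <host>:<port>".
count_registered_workers() {
  local log_dir=${1:-/usr/share/spark/logs}
  cat "$log_dir"/spark-*-org.apache.spark.deploy.master.Master-*.out 2>/dev/null \
    | grep -o 'Registering worker [^: ]*' \
    | awk '{ print $3 }' \
    | sort -u \
    | awk 'END { print NR }'
}
```

Worker restarts re-register the same host, which is why duplicates are removed before counting. With both workers up, running it on vm1 should print 2.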