Logistic regression parameters

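LogisticRegression is Spark ML's logistic regression classifier. Despite the name, it is a classification algorithm, not a regression one. Its main parameters are set as follows: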

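<1> setMaxIter(value: Int): sets the maximum number of iterations (default 100)

<2> setRegParam(value: Double): sets the regularization parameter, which weights the penalty term against the loss function to keep training from overfitting; default 0.0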

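<3> setElasticNetParam(value: Double): the elastic-net mixing parameter in [0, 1], which chooses between the L1 and L2 norms (default 0.0; it only has an effect when regParam > 0):

setElasticNetParam(0.0) gives L2 regularization;

setElasticNetParam(1.0) gives L1 regularization;

a value in the open interval (0.0, 1.0) gives a combination of L1 and L2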

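<4> setFeaturesCol(value: String): name of the features column, whose values must be vectors (for example, built with VectorAssembler); default features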

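<5> setLabelCol(value: String): name of the label column; default label

<6> setPredictionCol(value: String): name of the prediction column; default prediction

<7> setFitIntercept(value: Boolean): whether to fit an intercept, that is, the b in y = wx + b; default true

<8> setStandardization(value: Boolean): whether to standardize the features before training; default true. The returned coefficients are always on the original scale.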

<9> fit: trains a model on the training set

<10> transform: uses the trained model to make predictions on the test set

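<11> setTol(value: Double): sets the convergence tolerance used when iteratively minimizing the loss function. A smaller value gives higher accuracy at the cost of more iterations; default 1E-6.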

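<12> setWeightCol(value: String): name of the instance-weight column, which holds a weight for each row, not for each feature. If it is not set or is empty, every instance gets a weight of 1.0.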
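To make <2> and <3> concrete: the Spark ML guide writes the elastic-net penalty as regParam * (elasticNetParam * ||w||_1 + (1 - elasticNetParam) / 2 * ||w||_2^2). The sketch below is a minimal Scala illustration that only evaluates this penalty for a given coefficient vector. It is not Spark's optimizer, and it leaves out the intercept and feature standardization. ElasticNetSketch and penalty are hypothetical names.

```scala
// Hypothetical helper: evaluates the elastic-net penalty only
// (no optimizer, no intercept, no feature standardization).
object ElasticNetSketch {
  // regParam * (elasticNetParam * ||w||_1 + (1 - elasticNetParam) / 2 * ||w||_2^2)
  def penalty(w: Array[Double], regParam: Double, elasticNetParam: Double): Double = {
    val l1 = w.map(x => math.abs(x)).sum  // L1 norm
    val l2Squared = w.map(x => x * x).sum // squared L2 norm
    regParam * (elasticNetParam * l1 + (1.0 - elasticNetParam) / 2.0 * l2Squared)
  }

  def main(args: Array[String]): Unit = {
    val w = Array(-0.5, 2.0, 0.0)
    println(penalty(w, 0.2, 0.0)) // pure L2
    println(penalty(w, 0.2, 1.0)) // pure L1
    println(penalty(w, 0.2, 0.8)) // mostly L1, the mix used in the example below
  }
}
```

With elasticNetParam = 1.0 only the L1 term remains, and L1 regularization can push individual coefficients to exactly 0. The value 0.8 used in the example below is mostly L1. That is consistent with two of the four trained coefficients coming out as 0.0.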

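Parameters <1> to <12> above are the same as for linear regression (LinearRegression). Logistic regression adds a few of its own: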

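<1> setFamily(value: String): the model family, default "auto". With "auto", the family is chosen from the number of classes: binomial if numClasses is 1 or 2, multinomial otherwise;

"binomial": binary logistic regression;

"multinomial": multinomial (multiclass) logistic regression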

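<2> setProbabilityCol(value: String): name of the column holding the predicted probability of each class; default probability

<3> setRawPredictionCol(value: String): name of the raw prediction column, which holds the per-class scores before they are turned into probabilities; default rawPrediction

<4> setThreshold(value: Double): decision threshold for binary classification, in [0, 1]; default 0.5. An instance is predicted as 1 if its predicted probability of class 1 is greater than the threshold, and as 0 otherwise.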

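<5> setThresholds(value: Array[Double]): per-class thresholds for multiclass classification, used to adjust how likely each class is to be predicted. The array length must equal the number of classes, and the values must be >= 0 with at most one 0. The predicted class is the one with the largest p/t, where p is that class's predicted probability and t is its threshold. This parameter has no default value.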
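For a binomial model in Spark, the columns and thresholds in <2> to <5> fit together as follows. The margin m = w·x + b becomes rawPrediction (-m, m). The sigmoid of m becomes probability (1 - sigmoid(m), sigmoid(m)). The threshold or thresholds then turn the probabilities into a prediction. The sketch below restates those rules in plain Scala as an illustration, not Spark's source code. PredictionSketch and its methods are hypothetical names.

```scala
// Hypothetical helper restating the prediction rules; not Spark's implementation.
object PredictionSketch {
  // Logistic (sigmoid) function: maps a margin to a probability in (0, 1).
  def sigmoid(m: Double): Double = 1.0 / (1.0 + math.exp(-m))

  // Margin m = w . x + b of a binary model.
  def margin(w: Array[Double], b: Double, x: Array[Double]): Double =
    w.zip(x).map { case (wi, xi) => wi * xi }.sum + b

  // Binary rawPrediction layout: (-m, m).
  def rawPrediction(m: Double): Array[Double] = Array(-m, m)

  // Binary probability layout: (1 - sigmoid(m), sigmoid(m)).
  def probability(m: Double): Array[Double] = {
    val p1 = sigmoid(m)
    Array(1.0 - p1, p1)
  }

  // setThreshold: predict 1.0 only when P(class 1) is strictly greater than the threshold.
  def predictWithThreshold(p: Array[Double], threshold: Double): Double =
    if (p(1) > threshold) 1.0 else 0.0

  // setThresholds: predict the class with the largest p(k) / t(k);
  // a threshold of 0 makes that class's score infinite.
  def predictWithThresholds(p: Array[Double], t: Array[Double]): Double = {
    require(p.length == t.length, "thresholds needs one entry per class")
    val scaled = p.indices.map { k =>
      if (t(k) == 0.0) Double.PositiveInfinity else p(k) / t(k)
    }
    scaled.indexOf(scaled.max).toDouble
  }

  // For two classes, thresholds (t0, t1) behaves like threshold = 1 / (1 + t0 / t1).
  def equivalentThreshold(t: Array[Double]): Double = 1.0 / (1.0 + t(0) / t(1))
}
```

For two classes, a thresholds array (t0, t1) picks class 1 exactly when p1 > t1 / (t0 + t1). It therefore behaves like threshold = 1 / (1 + t0 / t1). For example, (0.6, 0.4) acts like threshold 0.4. Because the comparison is strict, an instance with P(class 1) = 0.5 is predicted as 0 under the default threshold 0.5 but as 1 under a tuned threshold of about 0.46.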

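The example below shows how to use logistic regression for classification, along with several ways to evaluate the resulting model.

Dataset preview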

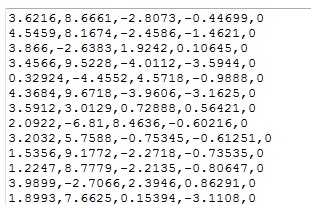

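The test data used in this article is the banknote authentication data set from UCI. It was extracted from images taken during banknote authentication and has five columns: the first four are continuous features, and the last is the genuine/forged label.

Dataset format:

The five columns are, in order: variance of the wavelet-transformed image, skewness of the wavelet-transformed image, kurtosis of the wavelet-transformed image, entropy of the image, and the class label. You do not need to know what a wavelet transform is or any of the related concepts. It is enough to know that the first four columns are feature values, which we will use to train a model and then predict the class. For more about the dataset, see its description on the UCI website.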

Implementation in Spark

import org.apache.spark.SparkConf

import org.apache.spark.ml.classification.{BinaryLogisticRegressionSummary, LogisticRegression, LogisticRegressionSummary}

import org.apache.spark.ml.feature.VectorAssembler

import org.apache.spark.mllib.evaluation.MulticlassMetrics

import org.apache.spark.sql.{SparkSession, functions}

val resource = ClassLoader.getSystemResource("classification/data_banknote_authentication.txt")

val resourceLocation = resource.toURI.toString

val sparkConf = new SparkConf()

.setAppName("BinLogisticRegression")

.setMaster("local")

val spark = SparkSession.builder()

.config(sparkConf)

.getOrCreate()

spark.sparkContext.setLogLevel("ERROR")

// Load data, rdd

import spark.implicits._

val parsedRDD = spark.read

.textFile(resourceLocation)

.map(_.split(","))

.map(eachRow => {

val a = eachRow.map(x => x.toDouble)

// 返回4元组

(a(0), a(1), a(2), a(3), a(4))

})

val df = parsedRDD.toDF(

"f0", "f1", "f2", "f3", "label").cache()

/**

* Define a VectorAssembler transformer to transform source features data to be a vector

* This is helpful when raw input data contains non-feature columns, and it is common for

* such a input data file to contain columns such as "ID", "Date", etc.

*/

val vectorAssembler = new VectorAssembler()

.setInputCols(Array("f0", "f1", "f2", "f3"))

.setOutputCol("features")

val dataset = vectorAssembler.transform(df)

val lr = new LogisticRegression()

.setLabelCol("label")

.setFeaturesCol("features")

.setRegParam(0.2)

.setElasticNetParam(0.8)

.setMaxIter(10)

//数据随机拆分成训练集和测试集

val Array(trainingData, testData) = dataset.randomSplit(Array(0.8, 0.2))

val lrModel = lr.fit(trainingData)

println("*******************模型训练的报告*******************")

println("模型当前使用的分类阈值:" + lrModel.getThreshold)

// println("模型当前使用的多层分类阈值:" + lrModel.getThresholds)

println("模型特征列:" + lrModel.getFeaturesCol)

println("模型标签列:" + lrModel.getLabelCol)

println("逻辑回归模型系数的向量: " + lrModel.coefficients)

println("逻辑回归模型的截距: " + lrModel.intercept)

println("类的数量(标签可以使用的值): " + lrModel.numClasses)

println("模型所接受的特征的数量: " + lrModel.numFeatures)

val trainingSummary = lrModel.binarySummary

//损失函数,可以看到损失函数随着循环是逐渐变小的,损失函数越小,模型就越好

println(s"总的迭代次数:${trainingSummary.totalIterations}")

println("===============损失函数每轮迭代的值================")

val objectiveHistory = trainingSummary.objectiveHistory

objectiveHistory.foreach(loss => println(loss))

//roc的值

val trainingRocSummary = trainingSummary.roc

println("roc曲线描点值行数:" + trainingRocSummary.count())

println("=====================roc曲线的值=================")

trainingRocSummary.show(false)

//ROC曲线下方的面积:auc, 越接近1说明模型越好

val trainingAUC = trainingSummary.areaUnderROC

println(s"AUC(areaUnderRoc): ${trainingAUC}")

// F1值就是precision和recall的调和均值, 越高越好

val trainingFMeasure = trainingSummary.fMeasureByThreshold

println("fMeasure的行数: " + trainingFMeasure.collect().length)

println("threshold --- F-Measure 的关系:")

trainingFMeasure.show(10)

val trainingMaxFMeasure = trainingFMeasure.select(functions.max("F-Measure"))

.head()

.getDouble(0)

println("最大的F-Measure的值为: " + trainingMaxFMeasure)

//最优的阈值

val trainingBestThreshold = trainingFMeasure.where($"F-Measure" === trainingMaxFMeasure)

.select("threshold")

.head()

.getDouble(0)

println("最优的阈值为:" + trainingBestThreshold)

// 设置模型最优阈值

lrModel.setThreshold(trainingBestThreshold)

println("模型调优后使用的分类阈值:" + lrModel.getThreshold)

println("************************************************************")

// 通过使用测试集做评估

println("**********************测试集数据获取的模型评价报告*******************")

val testSummary: LogisticRegressionSummary = lrModel.evaluate(testData)

val testBinarySummary: BinaryLogisticRegressionSummary = testSummary.asBinary

//获取预测后的数据情况

val predictionAndLabels = testSummary.predictions.select($"prediction", $"label")

.as[(Double, Double)]

.cache()

// 显示 label prediction 分组后的统计

println("测试集的数据量:" + testSummary.predictions.count())

println("label prediction 分组后的统计:")

predictionAndLabels.groupBy("label", "prediction").count().show()

predictionAndLabels.show(false)

// precision-recall 的关系

println("============precision-recall================")

val pr = testBinarySummary.pr

pr.show(false)

//roc的值

val rocSummary = testBinarySummary.roc

println("roc曲线描点值行数:" + rocSummary.count())

println("=====================roc曲线的值=================")

rocSummary.show(false)

//ROC曲线下方的面积:auc, 越接近1说明模型越好

val auc = testBinarySummary.areaUnderROC

println(s"ROC 曲线下的面积auc为: ${auc}")

val AUC = testBinarySummary.areaUnderROC

println(s"areaUnderRoc:${AUC}")

// F1值就是precision和recall的调和均值, 越高越好

val fMeasure = testBinarySummary.fMeasureByThreshold

println("fMeasure的行数: " + fMeasure.collect().length)

println("threshold --- F-Measure 的关系:")

fMeasure.show(10)

val maxFMeasure = fMeasure.select(functions.max("F-Measure"))

.head()

.getDouble(0)

println("最大的F-Measure的值为: " + maxFMeasure)

//最优的阈值

val bestThreshold = fMeasure.where($"F-Measure" === maxFMeasure)

.select("threshold")

.head()

.getDouble(0)

println("最优的阈值为:" + bestThreshold)

// // 设置模型最优阈值

// lrModel.setThreshold(bestThreshold)

//

// println("模型调优后使用的分类阈值:" + lrModel.getThreshold)

// 多分类指标

val multiclassMetrics = new MulticlassMetrics(predictionAndLabels.rdd)

println("混淆矩阵 Confusion matrix:")

val confusionMatrix = multiclassMetrics.confusionMatrix

println(confusionMatrix)

println(s"TN(True negative: 预测为负例,实际为负例):${confusionMatrix.apply(0, 0)}")

println(s"FP(False Positive: 预测为正例,实际为负例):${confusionMatrix.apply(0, 1)}")

println(s"FN(False negative: 预测为负例,实际为正例):${confusionMatrix.apply(1, 0)}")

println(s"TP(True Positive: 预测为正例,实际为正例):${confusionMatrix.apply(1, 1)}")

println("准确率:" + multiclassMetrics.accuracy)

spark.close()输出:

*******************模型训练的报告*******************

模型当前使用的分类阈值:0.5

模型特征列:features

模型标签列:label

逻辑回归模型系数的向量: [-0.27413251916250636,-0.0037569963697539777,0.0,0.0]

逻辑回归模型的截距: -0.07610485889310371

类的数量(标签可以使用的值): 2

模型所接受的特征的数量: 4

总的迭代次数:11

===============损失函数每轮迭代的值================

0.6891148016622942

0.6675216750241839

0.6112539551370807

0.6108014882929144

0.6107771981366225

0.6107730850552548

0.6107720451514589

0.6107718320770769

0.6107718248698443

0.6107718223536319

0.6107718218246714

roc曲线描点值行数:110

=====================roc曲线的值=================

+---------------------+--------------------+

|FPR |TPR |

+---------------------+--------------------+

|0.0 |0.0 |

|0.0 |0.02012072434607646 |

|0.0 |0.04024144869215292 |

|0.0 |0.060362173038229376|

|0.0 |0.08048289738430583 |

|0.0 |0.1006036217303823 |

|0.0016806722689075631|0.11871227364185111 |

|0.0016806722689075631|0.13883299798792756 |

|0.0016806722689075631|0.158953722334004 |

|0.0016806722689075631|0.1790744466800805 |

|0.0016806722689075631|0.19919517102615694 |

|0.0016806722689075631|0.2193158953722334 |

|0.0016806722689075631|0.23943661971830985 |

|0.0016806722689075631|0.2595573440643863 |

|0.0016806722689075631|0.2796780684104628 |

|0.0033613445378151263|0.2977867203219316 |

|0.0033613445378151263|0.317907444668008 |

|0.005042016806722689 |0.33601609657947684 |

|0.008403361344537815 |0.35412474849094566 |

|0.008403361344537815 |0.37424547283702214 |

+---------------------+--------------------+

only showing top 20 rows

AUC(areaUnderRoc): 0.9383460426424091

fMeasure的行数: 108

threshold --- F-Measure 的关系:

+------------------+-------------------+

| threshold| F-Measure|

+------------------+-------------------+

|0.8605420534122726|0.03944773175542406|

|0.8293045873891609|0.07736943907156672|

|0.8003323873069613|0.11385199240986718|

|0.7819554473082397|0.14897579143389197|

|0.7650539433726142|0.18281535648994518|

|0.7511658271272458|0.21184919210053862|

|0.7400145430197022|0.24338624338624337|

|0.7307273869415193|0.27383015597920274|

|0.7261378841608245| 0.303236797274276|

|0.7182237416884641| 0.3316582914572864|

+------------------+-------------------+

only showing top 10 rows

最大的F-Measure的值为: 0.8529698149951315

最优的阈值为:0.4606221757332402

模型调优后使用的分类阈值:0.4606221757332402

************************************************************

**********************测试集数据获取的模型评价报告*******************

测试集的数据量:280

label prediction 分组后的统计:

+-----+----------+-----+

|label|prediction|count|

+-----+----------+-----+

| 1.0| 1.0| 94|

| 0.0| 1.0| 35|

| 1.0| 0.0| 19|

| 0.0| 0.0| 132|

+-----+----------+-----+

+----------+-----+

|prediction|label|

+----------+-----+

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

|1.0 |1.0 |

+----------+-----+

only showing top 20 rows

============precision-recall================

+--------------------+---------+

|recall |precision|

+--------------------+---------+

|0.0 |1.0 |

|0.017699115044247787|1.0 |

|0.035398230088495575|1.0 |

|0.05309734513274336 |1.0 |

|0.07079646017699115 |1.0 |

|0.08849557522123894 |1.0 |

|0.10619469026548672 |1.0 |

|0.12389380530973451 |1.0 |

|0.1415929203539823 |1.0 |

|0.1592920353982301 |1.0 |

|0.17699115044247787 |1.0 |

|0.19469026548672566 |1.0 |

|0.21238938053097345 |1.0 |

|0.23008849557522124 |1.0 |

|0.24778761061946902 |1.0 |

|0.26548672566371684 |1.0 |

|0.2831858407079646 |1.0 |

|0.3008849557522124 |1.0 |

|0.3185840707964602 |1.0 |

|0.336283185840708 |1.0 |

+--------------------+---------+

only showing top 20 rows

roc曲线描点值行数:142

=====================roc曲线的值=================

+---+--------------------+

|FPR|TPR |

+---+--------------------+

|0.0|0.0 |

|0.0|0.017699115044247787|

|0.0|0.035398230088495575|

|0.0|0.05309734513274336 |

|0.0|0.07079646017699115 |

|0.0|0.08849557522123894 |

|0.0|0.10619469026548672 |

|0.0|0.12389380530973451 |

|0.0|0.1415929203539823 |

|0.0|0.1592920353982301 |

|0.0|0.17699115044247787 |

|0.0|0.19469026548672566 |

|0.0|0.21238938053097345 |

|0.0|0.23008849557522124 |

|0.0|0.24778761061946902 |

|0.0|0.26548672566371684 |

|0.0|0.2831858407079646 |

|0.0|0.3008849557522124 |

|0.0|0.3185840707964602 |

|0.0|0.336283185840708 |

+---+--------------------+

only showing top 20 rows

ROC 曲线下的面积auc为: 0.9053839224206452

areaUnderRoc:0.9053839224206452

fMeasure的行数: 140

threshold --- F-Measure 的关系:

+------------------+--------------------+

| threshold| F-Measure|

+------------------+--------------------+

|0.8603123973044042|0.034782608695652174|

|0.8509252211709998| 0.06837606837606837|

|0.8387972361837948| 0.10084033613445377|

|0.7815325267974487| 0.13223140495867766|

|0.7802284634461283| 0.1626016260162602|

|0.7570167186789863| 0.192|

|0.7424079254838089| 0.2204724409448819|

|0.7377313184342764| 0.24806201550387597|

| 0.735751455910333| 0.2748091603053435|

| 0.717659937811015| 0.3007518796992481|

+------------------+--------------------+

only showing top 10 rows

最大的F-Measure的值为: 0.7935222672064777

最优的阈值为:0.45449799571146066

混淆矩阵 Confusion matrix:

132.0 35.0

19.0 94.0

准确率:0.8071428571428572混淆矩阵:

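In another run (randomSplit is called without a seed, so each run uses a different split and produces different numbers), the counts of actual versus predicted labels were:

```text
+-----+----------+-----+
|label|prediction|count|
+-----+----------+-----+
|  1.0|       1.0|  121|
|  0.0|       1.0|   37|
|  1.0|       0.0|   12|
|  0.0|       0.0|  118|
+-----+----------+-----+
```

and the confusion matrix output for that run was:

```text
Confusion matrix:
118.0  37.0
12.0   121.0
TN (true negative: predicted negative, actually negative): 118.0
FP (false positive: predicted positive, actually negative): 37.0
FN (false negative: predicted negative, actually positive): 12.0
TP (true positive: predicted positive, actually positive): 121.0
```

Comparing the two shows that the confusion matrix has actual labels as rows and predicted labels as columns:

| Actual \ Predicted | 0 | 1 |
|---|---|---|
| 0 | TN | FP |
| 1 | FN | TP |

So the four cells of the confusion matrix are:

TN (true negative: predicted negative, actually negative): 118

FP (false positive: predicted positive, actually negative): 37

FN (false negative: predicted negative, actually positive): 12

TP (true positive: predicted positive, actually positive): 121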
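The four counts, and the metrics derived from them, can be reproduced from (prediction, label) pairs without Spark. The sketch below is minimal and assumes binary labels 0.0/1.0. It uses the same row = actual, column = predicted layout as MulticlassMetrics.confusionMatrix and also derives accuracy, precision, recall and F1 (equivalently 2·TP / (2·TP + FP + FN)). ConfusionMatrixSketch and its members are hypothetical names.

```scala
// Hypothetical helper; binary labels 0.0 (negative) and 1.0 (positive) assumed.
object ConfusionMatrixSketch {
  // Cells of a binary confusion matrix (rows = actual, columns = predicted):
  //   actual 0: TN FP
  //   actual 1: FN TP
  final case class Counts(tn: Long, fp: Long, fn: Long, tp: Long) {
    def total: Long = tn + fp + fn + tp
    def accuracy: Double = (tp + tn).toDouble / total
    // Of the instances predicted positive, the fraction that are actually positive.
    def precision: Double = tp.toDouble / (tp + fp)
    // Of the actually positive instances, the fraction predicted positive.
    def recall: Double = tp.toDouble / (tp + fn)
    // Harmonic mean of precision and recall.
    def f1: Double = 2 * precision * recall / (precision + recall)
  }

  // Counts (prediction, label) pairs, the tuple order MulticlassMetrics takes.
  def fromPairs(pairs: Seq[(Double, Double)]): Counts = {
    def cell(predicted: Double, actual: Double): Long =
      pairs.count { case (p, l) => p == predicted && l == actual }.toLong
    Counts(
      tn = cell(0.0, 0.0),
      fp = cell(1.0, 0.0),
      fn = cell(0.0, 1.0),
      tp = cell(1.0, 1.0)
    )
  }
}
```

With this run's counts (TN = 118, FP = 37, FN = 12, TP = 121), accuracy is 239/288 ≈ 0.830. With the first run's counts (TN = 132, FP = 35, FN = 19, TP = 94), accuracy is 226/280 ≈ 0.8071, which matches the accuracy printed by MulticlassMetrics in the first output.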

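I wanted to check whether the model's evaluation report changes as more data is fed into the model. After obtaining the training report, I passed another batch of evaluation data through the model and fetched the training report again. Its contents were identical to the training report produced when the model was built. This is expected. The training summary (lrModel.summary / lrModel.binarySummary) is computed once on the training data during fit. lrModel.evaluate(...) builds a separate summary for the dataset it is given and leaves the model and its training summary unchanged.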
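Both reports above print areaUnderROC: about 0.938 on the training data and 0.905 on the test data in the first run. The Spark-free sketch below shows what that number measures. It sorts instances by predicted score and lowers the threshold through each distinct score to trace ROC points (FPR, TPR), then integrates them with the trapezoidal rule. It mirrors the general approach rather than Spark's BinaryClassificationMetrics implementation, which can, for example, down-sample the curve. RocAucSketch and its methods are hypothetical names, and labels are assumed to be 0.0/1.0.

```scala
// Hypothetical helper; labels assumed to be 0.0 (negative) or 1.0 (positive).
object RocAucSketch {
  // ROC points (FPR, TPR) obtained by lowering the threshold through each
  // distinct score, highest first.
  def rocPoints(scoreAndLabels: Seq[(Double, Double)]): Seq[(Double, Double)] = {
    val positives = scoreAndLabels.count(_._2 == 1.0).toDouble
    val negatives = scoreAndLabels.size - positives
    require(positives > 0 && negatives > 0, "both classes must be present")
    // One group per distinct score; tied scores share a single threshold.
    val perThreshold = scoreAndLabels
      .groupBy(_._1)
      .toSeq
      .sortBy(-_._1)
      .map { case (_, group) => (group.count(_._2 == 1.0), group.count(_._2 != 1.0)) }
    // Running (TP, FP) counts; the leading (0, 0) gives the ROC point (0, 0).
    val cumulative = perThreshold.scanLeft((0, 0)) {
      case ((tp, fp), (dTp, dFp)) => (tp + dTp, fp + dFp)
    }
    cumulative.map { case (tp, fp) => (fp / negatives, tp / positives) }
  }

  // Area under the curve by the trapezoidal rule over consecutive points.
  def auc(points: Seq[(Double, Double)]): Double =
    points.zip(points.tail).map { case ((x0, y0), (x1, y1)) => (x1 - x0) * (y0 + y1) / 2.0 }.sum
}
```

An AUC of 1.0 means every positive instance is scored above every negative one, and 0.5 is no better than chance. For example, the (score, label) pairs (0.9, 1), (0.7, 0), (0.6, 1), (0.2, 0) give 0.75, because 3 of the 4 positive/negative pairs are ranked correctly.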