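1. Analysis

(1) Why use a proxy pool?

- Many websites have dedicated anti-crawler measures, so a crawler may run into problems such as IP bans

- A large number of free proxies are published on the internet, and these resources are worth putting to use

- With scheduled checking and maintenance, these free sources can still yield a number of usable proxies

(2) Requirements for the proxy pool

- Crawl multiple sites

- Test proxies asynchronously

- Filter on a schedule

- Update continuously

- Provide an interface

- Make proxies easy to fetch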

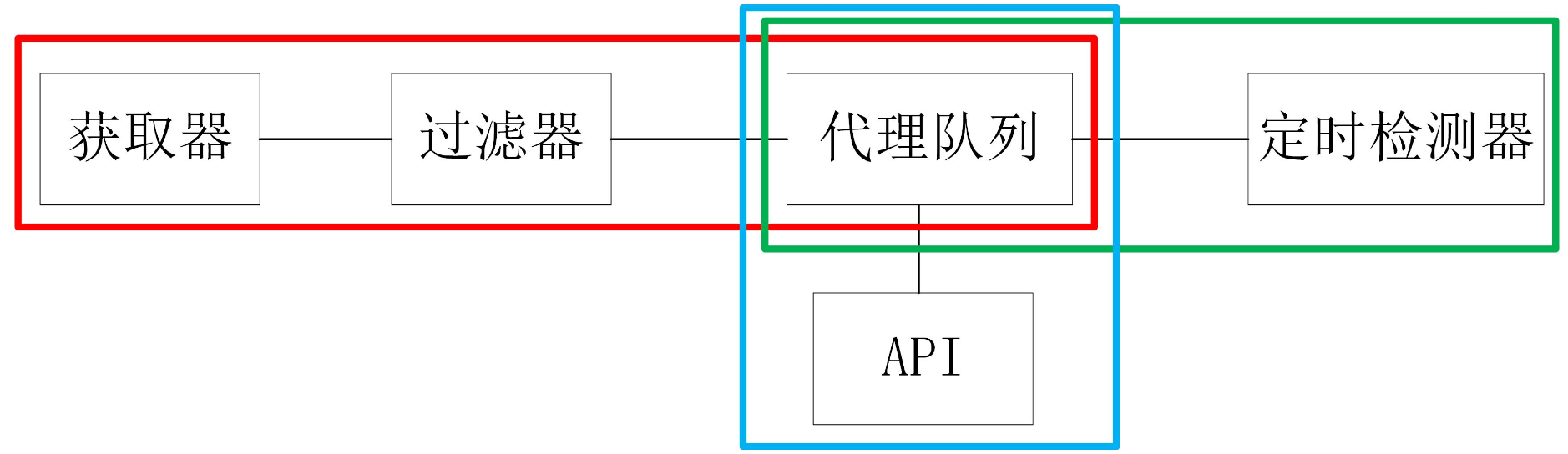

(3) Architecture of the proxy pool

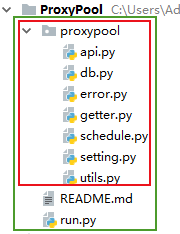

2. Directory layout

3. Contents and purpose of each file

(3-1) README.md

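# ProxyPool

This proxy pool hands out each IP with a pop operation, so every proxy is used only once. If you want proxies that can be used repeatedly, see the improved proxy pool, which is the recommended one: [https://github.com/Python3WebSpider/ProxyPool]

## Installation

(1) Install Python, version 3.5 or later

(2) Install Redis and start the Redis service once it is installed

## Configuring the proxy pool

Go to the proxypool directory and edit setting.py: PASSWORD is the Redis password; if there is none, set it to None

## Installing dependencies

- aiohttp>=1.3.3
- Flask>=0.11.1
- redis>=2.10.5
- requests>=2.13.0
- pyquery>=1.2.17

## Starting the proxy pool and the API

Run run.py directly

## Getting a proxy: with requests, a proxy can be fetched as follows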

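    import requests

    PROXY_POOL_URL = 'http://localhost:5000/get'

    def get_proxy():
        """Return one 'host:port' string from the pool, or None on failure."""
        try:
            response = requests.get(PROXY_POOL_URL)
            if response.status_code == 200:
                return response.text
        except requests.exceptions.ConnectionError:
            # requests raises its own ConnectionError class, which the
            # built-in ConnectionError does not cover
            return None
        return None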
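The `/get` endpoint returns the proxy as plain `host:port` text, so the caller still has to turn it into the mapping that `requests` expects in its `proxies` argument. The following is a minimal sketch of that step, assuming an HTTP proxy; `build_proxies` is a hypothetical helper name and is not part of the project.

```python
def build_proxies(proxy):
    """Turn 'host:port' (as returned by /get) into a requests-style proxies dict.

    Hypothetical helper for illustration only. Returns None when no proxy
    is available, which requests treats as "no proxy".
    """
    if not proxy:
        return None
    proxy = proxy.strip()
    return {
        'http': 'http://' + proxy,
        'https': 'http://' + proxy,
    }


if __name__ == '__main__':
    print(build_proxies('127.0.0.1:8080'))
```

A call would then look like `requests.get(url, proxies=build_proxies(get_proxy()))`.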

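## What each module does

(1) getter.py: crawler module

* class proxypool.getter.FreeProxyGetter

> Crawler class that scrapes proxies from the proxy source websites. Users can override the crawl rules and add their own.

(2) schedule.py: scheduler module

* class proxypool.schedule.ValidityTester

> Asynchronous tester class that checks the usability of the given proxies asynchronously.

* class proxypool.schedule.PoolAdder

> Proxy adder. It triggers the crawler module to top up the pool and stops once the number of proxies in the pool reaches the threshold.

* class proxypool.schedule.Schedule

> Entry class of the proxy pool. Calling its run() method creates two processes, which are responsible for adding proxies to the pool and refreshing it.

(3) db.py: Redis connection module

* class proxypool.db.RedisClient

> Database access class. It maintains the connection to Redis and performs the add, delete, query and update operations on the database.

(4) error.py: exception module

* class proxypool.error.ResourceDepletionError

> Resource depletion error, raised when no usable proxy can be scraped from any of the source websites.

* class proxypool.error.PoolEmptyError

> Empty pool error, raised when the proxy pool stays empty for a long time.

(5) api.py: API module. It starts a web server implemented with Flask and exposes the proxies to the outside.

(6) utils.py: utilities

(7) setting.py: settings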
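As the getter.py description says, users can add their own crawl rules. A rule is essentially a generator that pulls `ip:port` strings out of a page. The sketch below shows the idea on a static HTML snippet instead of a real download. The sample markup, the function name `crawl_sample` and the extra `PROXY_PATTERN` sanity check are assumptions made for illustration; only the row pattern mirrors the style of the regular expressions used in the project.

```python
import re

# Static sample standing in for a downloaded page (made up for illustration).
SAMPLE_HTML = """
<table>
  <tr><td>IP</td><td>PORT</td></tr>
  <tr class="odd">
    <td>10.0.0.1</td>
    <td>8080</td>
  </tr>
  <tr>
    <td> 10.0.0.2 </td>
    <td>3128</td>
  </tr>
</table>
"""

# Two consecutive <td> cells in a row; \s* also skips the line breaks between tags.
ROW_PATTERN = re.compile(r'<tr.*?>\s*<td>(.*?)</td>\s*<td>(.*?)</td>')
# Assumed sanity check so that header rows such as "IP:PORT" are dropped.
PROXY_PATTERN = re.compile(r'^\d{1,3}(\.\d{1,3}){3}:\d{1,5}$')


def crawl_sample(html=SAMPLE_HTML):
    """Yield 'ip:port' strings found in the given HTML."""
    for address, port in ROW_PATTERN.findall(html):
        candidate = (address + ':' + port).replace(' ', '')
        if PROXY_PATTERN.match(candidate):
            yield candidate


if __name__ == '__main__':
    for proxy in crawl_sample():
        print(proxy)
```

In the real class such a method would take `self`, fetch the page with `get_page`, and carry a name containing `crawl_` so that it is picked up automatically.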

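(3-2) run.py

    # Entry point of the whole program
    from proxypool.api import app
    from proxypool.schedule import Schedule

    def main():
        s = Schedule()  # scheduler
        s.run()         # starts the two background processes and returns
        app.run()       # then serves the Flask API in this process

    if __name__ == '__main__':
        main()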

(3-3) proxypool/getter.py #crawler module

    from .utils import get_page
    from pyquery import PyQuery as pq
    import re

    class ProxyMetaclass(type):
        """
        Metaclass that adds two attributes to the FreeProxyGetter class,
        __CrawlFunc__ and __CrawlFuncCount__: the names of the crawl
        functions and how many of them there are.
        """
        def __new__(cls, name, bases, attrs):
            count = 0
            attrs['__CrawlFunc__'] = []
            for k, v in attrs.items():
                if 'crawl_' in k:
                    attrs['__CrawlFunc__'].append(k)
                    count += 1
            attrs['__CrawlFuncCount__'] = count
            return type.__new__(cls, name, bases, attrs)

    # Scrapes free proxies from several websites; its metaclass is ProxyMetaclass
    class FreeProxyGetter(object, metaclass=ProxyMetaclass):
        def get_raw_proxies(self, callback):
            # callback is the name of the method that fetches the proxies
            proxies = []
            print('Callback', callback)
            # Look the method up by name instead of building a string for eval()
            for proxy in getattr(self, callback)():
                print('Getting', proxy, 'from', callback)
                proxies.append(proxy)
            return proxies

        def crawl_ip181(self):
            start_url = 'http://www.ip181.com/'
            html = get_page(start_url)
            ip_adress = re.compile(r'<tr.*?>\s*<td>(.*?)</td>\s*<td>(.*?)</td>')
            # \s* matches any whitespace, including the line breaks between tags
            re_ip_adress = ip_adress.findall(str(html))
            for adress, port in re_ip_adress:
                result = adress + ':' + port
                yield result.replace(' ', '')

        def crawl_kuaidaili(self):
            for page in range(1, 4):
                # Domestic high-anonymity proxies
                start_url = 'https://www.kuaidaili.com/free/inha/{}/'.format(page)
                html = get_page(start_url)
                ip_adress = re.compile(
                    r'<td data-title="IP">(.*)</td>\s*<td data-title="PORT">(\w+)</td>'
                )
                re_ip_adress = ip_adress.findall(str(html))
                for adress, port in re_ip_adress:
                    result = adress + ':' + port
                    yield result.replace(' ', '')

        def crawl_xicidaili(self):
            for page in range(1, 4):
                start_url = 'http://www.xicidaili.com/wt/{}'.format(page)
                html = get_page(start_url)
                ip_adress = re.compile(
                    r'<td class="country"><img src="http://fs.xicidaili.com/images/flag/cn.png" alt="Cn" /></td>\s*<td>(.*?)</td>\s*<td>(.*?)</td>'
                )
                # \s* matches any whitespace, including the line breaks between tags
                re_ip_adress = ip_adress.findall(str(html))
                for adress, port in re_ip_adress:
                    result = adress + ':' + port
                    yield result.replace(' ', '')

        def crawl_daili66(self, page_count=4):
            start_url = 'http://www.66ip.cn/{}.html'
            urls = [start_url.format(page) for page in range(1, page_count + 1)]
            for url in urls:
                print('Crawling', url)
                html = get_page(url)
                if html:
                    doc = pq(html)
                    # Skip the header row, then read IP and port from the first two cells
                    trs = doc('.containerbox table tr:gt(0)').items()
                    for tr in trs:
                        ip = tr.find('td:nth-child(1)').text()
                        port = tr.find('td:nth-child(2)').text()
                        yield ':'.join([ip, port])

        def crawl_data5u(self):
            for i in ['gngn', 'gnpt']:
                start_url = 'http://www.data5u.com/free/{}/index.shtml'.format(i)
                html = get_page(start_url)
                ip_adress = re.compile(
                    r' <ul class="l2">\s*<span><li>(.*?)</li></span>\s*<span style="width: 100px;"><li class=".*">(.*?)</li></span>'
                )
                # \s* matches any whitespace, including the line breaks between tags
                re_ip_adress = ip_adress.findall(str(html))
                for adress, port in re_ip_adress:
                    result = adress + ':' + port
                    yield result.replace(' ', '')

        def crawl_kxdaili(self):
            for i in range(1, 4):
                start_url = 'http://www.kxdaili.com/ipList/{}.html#ip'.format(i)
                html = get_page(start_url)
                ip_adress = re.compile(r'<tr.*?>\s*<td>(.*?)</td>\s*<td>(.*?)</td>')
                # \s* matches any whitespace, including the line breaks between tags
                re_ip_adress = ip_adress.findall(str(html))
                for adress, port in re_ip_adress:
                    result = adress + ':' + port
                    yield result.replace(' ', '')

        def crawl_premproxy(self):
            for i in ['China-01', 'China-02', 'China-03', 'China-04', 'Taiwan-01']:
                start_url = 'https://premproxy.com/proxy-by-country/{}.htm'.format(i)
                html = get_page(start_url)
                if html:
                    ip_adress = re.compile(r'<td data-label="IP:port ">(.*?)</td>')
                    re_ip_adress = ip_adress.findall(str(html))
                    for adress_port in re_ip_adress:
                        yield adress_port.replace(' ', '')

        def crawl_xroxy(self):
            for i in ['CN', 'TW']:
                start_url = 'http://www.xroxy.com/proxylist.php?country={}'.format(i)
                html = get_page(start_url)
                if html:
                    # Addresses and ports sit in separate links, so match them
                    # separately and pair them up by position
                    ip_adress1 = re.compile(
                        r"title='View this Proxy details'>\s*(.*).*")
                    re_ip_adress1 = ip_adress1.findall(str(html))
                    ip_adress2 = re.compile(
                        r"title='Select proxies with port number .*'>(.*)</a>")
                    re_ip_adress2 = ip_adress2.findall(html)
                    for adress, port in zip(re_ip_adress1, re_ip_adress2):
                        adress_port = adress + ':' + port
                        yield adress_port.replace(' ', '')
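The metaclass trick in getter.py is easier to see on a toy class. The sketch below is a reduced version under stated assumptions: `CrawlRegistry` and `DemoGetter` are hypothetical names, the crawl methods yield fixed strings instead of downloading anything, and the name check uses `startswith('crawl_')` where the original tests `'crawl_' in k`.

```python
class CrawlRegistry(type):
    """Collect the names of all crawl_* methods when the class is created."""

    def __new__(cls, name, bases, attrs):
        crawl_funcs = [key for key in attrs if key.startswith('crawl_')]
        attrs['__CrawlFunc__'] = crawl_funcs
        attrs['__CrawlFuncCount__'] = len(crawl_funcs)
        return super().__new__(cls, name, bases, attrs)


class DemoGetter(metaclass=CrawlRegistry):
    def get_raw_proxies(self, callback):
        # Look the crawl method up by name and drain its generator
        return list(getattr(self, callback)())

    def crawl_site_a(self):
        yield '10.0.0.1:8080'

    def crawl_site_b(self):
        yield '10.0.0.2:3128'
        yield '10.0.0.3:80'

    def helper(self):
        return 'not a crawler'


if __name__ == '__main__':
    getter = DemoGetter()
    for func_name in getter.__CrawlFunc__:
        print(func_name, getter.get_raw_proxies(func_name))
```

Adding a new source then only requires writing one more `crawl_` method; no registration list has to be maintained by hand.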

(3-4) proxypool/schedule.py #scheduler module

    import time
    from multiprocessing import Process
    import asyncio
    import aiohttp
    try:
        # Exception names used by old aiohttp releases
        from aiohttp.errors import ProxyConnectionError, ServerDisconnectedError, ClientResponseError, ClientConnectorError
    except ImportError:
        # Newer aiohttp releases expose them at package level
        from aiohttp import ClientProxyConnectionError as ProxyConnectionError, ServerDisconnectedError, ClientResponseError, ClientConnectorError
    from proxypool.db import RedisClient
    from proxypool.error import ResourceDepletionError
    from proxypool.getter import FreeProxyGetter
    from proxypool.setting import *
    from asyncio import TimeoutError

    class ValidityTester(object):
        test_api = TEST_API  # test URL; Baidu is used: TEST_API='http://www.baidu.com'

        def __init__(self):
            self._raw_proxies = None
            self._usable_proxies = []

        def set_raw_proxies(self, proxies):
            self._raw_proxies = proxies
            self._conn = RedisClient()

        async def test_single_proxy(self, proxy):
            """
            Test one proxy; if it is valid, put it into the pool.
            """
            try:
                async with aiohttp.ClientSession() as session:  # asynchronous request via aiohttp
                    try:
                        if isinstance(proxy, bytes):
                            proxy = proxy.decode('utf-8')
                        real_proxy = 'http://' + proxy  # the proxy to route the test request through
                        print('Testing', proxy)
                        # Request the test URL (Baidu) through the proxy
                        async with session.get(self.test_api, proxy=real_proxy, timeout=get_proxy_timeout) as response:
                            if response.status == 200:
                                self._conn.put(proxy)  # push the proxy onto the right end of the list
                                print('Valid proxy', proxy)
                    except (ProxyConnectionError, TimeoutError, ValueError):
                        print('Invalid proxy', proxy)
            except (ServerDisconnectedError, ClientResponseError, ClientConnectorError) as s:
                print(s)

        def test(self):
            """
            Test all proxies asynchronously.
            """
            print('ValidityTester is working')
            try:
                loop = asyncio.get_event_loop()
                # Wrap the coroutines in tasks: asyncio.wait() rejects bare
                # coroutines on Python 3.11 and later
                tasks = [loop.create_task(self.test_single_proxy(proxy)) for proxy in self._raw_proxies]
                loop.run_until_complete(asyncio.wait(tasks))
            except ValueError:
                # asyncio.wait() raises ValueError when the task list is empty
                print('Async Error')

    class PoolAdder(object):
        """
        Add proxies to the pool.
        """
        def __init__(self, threshold):
            self._threshold = threshold
            self._conn = RedisClient()
            self._tester = ValidityTester()
            self._crawler = FreeProxyGetter()  # scrapes free proxies from the source websites

        def is_over_threshold(self):
            """
            Check whether the pool has reached the threshold.
            """
            return self._conn.queue_len >= self._threshold

        def add_to_queue(self):
            print('PoolAdder is working')
            proxy_count = 0
            while not self.is_over_threshold():
                # __CrawlFuncCount__ is the number of crawl methods,
                # i.e. the methods whose names contain crawl_
                for callback_label in range(self._crawler.__CrawlFuncCount__):
                    callback = self._crawler.__CrawlFunc__[callback_label]
                    raw_proxies = self._crawler.get_raw_proxies(callback)
                    # Test the crawled proxies; valid ones are stored by the tester
                    self._tester.set_raw_proxies(raw_proxies)
                    self._tester.test()
                    proxy_count += len(raw_proxies)
                    if self.is_over_threshold():
                        print('IP is enough, waiting to be used')
                        break
                if proxy_count == 0:
                    raise ResourceDepletionError

    class Schedule(object):
        @staticmethod
        def valid_proxy(cycle=VALID_CHECK_CYCLE):
            """
            Take half of the proxies out of Redis and re-test them.
            """
            conn = RedisClient()  # open the Redis connection
            tester = ValidityTester()
            while True:
                print('Refreshing ip')
                count = int(0.5 * conn.queue_len)  # half of the proxies stored in Redis
                if count == 0:
                    print('Waiting for adding')
                    time.sleep(cycle)  # wait for usable proxies to be added
                    continue
                raw_proxies = conn.get(count)
                tester.set_raw_proxies(raw_proxies)
                tester.test()
                time.sleep(cycle)

        @staticmethod
        def check_pool(lower_threshold=POOL_LOWER_THRESHOLD,
                       upper_threshold=POOL_UPPER_THRESHOLD,
                       cycle=POOL_LEN_CHECK_CYCLE):
            """
            If the number of proxies is below lower_threshold, add proxies.

            Proxies are scraped from the source websites, tested, and the
            usable ones are stored in the database.
            """
            conn = RedisClient()
            adder = PoolAdder(upper_threshold)
            while True:
                if conn.queue_len < lower_threshold:
                    adder.add_to_queue()
                time.sleep(cycle)

        def run(self):
            print('Ip processing running')
            valid_process = Process(target=Schedule.valid_proxy)
            check_process = Process(target=Schedule.check_pool)
            valid_process.start()
            check_process.start()
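The asynchronous testing done by `ValidityTester` comes down to starting one coroutine per proxy and waiting for all of them together, so the slow checks overlap instead of running one after another. The sketch below shows that pattern with the standard library only. It makes several assumptions: `fake_check` replaces the real HTTP request with a short sleep and an arbitrary rule, `asyncio.gather` and `asyncio.run` (Python 3.7+) are used in place of the `asyncio.wait` call and manual event loop in the project, and the results are returned as a list rather than pushed into Redis.

```python
import asyncio


async def fake_check(proxy):
    """Stand-in for the real request: proxies ending in '0' count as alive."""
    await asyncio.sleep(0.01)  # simulates network latency
    return proxy.endswith('0')


async def filter_valid(proxies):
    """Check all proxies concurrently and keep the ones that passed."""
    results = await asyncio.gather(*(fake_check(p) for p in proxies))
    # gather() returns results in the same order as its arguments
    return [proxy for proxy, ok in zip(proxies, results) if ok]


def run_checks(proxies):
    """Synchronous entry point, comparable in role to ValidityTester.test()."""
    return asyncio.run(filter_valid(proxies))


if __name__ == '__main__':
    print(run_checks(['10.0.0.1:80', '10.0.0.2:8081', '10.0.0.3:8080']))
```

Because every check awaits at the same time, the total run time stays close to that of the slowest single check rather than the sum of all of them.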

(3-5) proxypool/db.py #Redis connection module

    import redis
    from proxypool.error import PoolEmptyError
    from proxypool.setting import HOST, PORT, PASSWORD

    class RedisClient(object):
        def __init__(self, host=HOST, port=PORT):
            if PASSWORD:
                self._db = redis.Redis(host=host, port=port, password=PASSWORD)
            else:  # note: my Redis instance has no password set
                self._db = redis.Redis(host=host, port=port)

        def get(self, count=1):
            """
            Take `count` proxies from the left end of the list.
            """
            # Read the first `count` items, then trim them off the list
            proxies = self._db.lrange("proxies", 0, count - 1)
            self._db.ltrim("proxies", count, -1)
            return proxies

        def put(self, proxy):
            """
            Add a proxy to the right end of the list.
            """
            self._db.rpush("proxies", proxy)

        def pop(self):
            """
            Pop a proxy from the right end. The API uses this method,
            because the proxies on the right are the most recently tested.
            """
            try:
                return self._db.rpop("proxies").decode('utf-8')
            except AttributeError:
                # rpop returns None when the list is empty
                raise PoolEmptyError

        @property
        def queue_len(self):
            """
            Return the length of the queue.
            """
            return self._db.llen("proxies")

        def flush(self):
            """
            Flush the database.
            """
            self._db.flushall()

    if __name__ == '__main__':
        conn = RedisClient()
        print(conn.pop())
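The queue discipline in db.py can be modelled without Redis: new and re-validated proxies enter on the right, the validity checker removes the oldest ones from the left, and the API pops the freshest one from the right. The sketch below mirrors that behaviour with `collections.deque`. `InMemoryPool` is a hypothetical stand-in, and it raises `LookupError` where the project raises `PoolEmptyError`.

```python
from collections import deque


class InMemoryPool:
    """In-memory model of the list operations used by RedisClient."""

    def __init__(self):
        self._items = deque()

    def put(self, proxy):
        # Corresponds to RPUSH: append on the right
        self._items.append(proxy)

    def get(self, count=1):
        # Corresponds to LRANGE 0 count-1 followed by LTRIM count -1:
        # take the oldest entries from the left and remove them
        count = min(count, len(self._items))
        return [self._items.popleft() for _ in range(count)]

    def pop(self):
        # Corresponds to RPOP: take the newest entry from the right
        if not self._items:
            raise LookupError('The proxy pool is empty')
        return self._items.pop()

    @property
    def queue_len(self):
        return len(self._items)


if __name__ == '__main__':
    pool = InMemoryPool()
    for proxy in ['a:1', 'b:2', 'c:3', 'd:4']:
        pool.put(proxy)
    print(pool.get(2), pool.pop(), pool.queue_len)
```

Proxies that pass a re-test are put back on the right, so over time the left end holds the entries that have gone longest without a check.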

(3-6) proxypool/error.py #exception module

    class ResourceDepletionError(Exception):
        def __init__(self):
            Exception.__init__(self)

        def __str__(self):
            return repr('The proxy source is exhausted')

    class PoolEmptyError(Exception):
        def __init__(self):
            Exception.__init__(self)

        def __str__(self):
            return repr('The proxy pool is empty')

(3-7) proxypool/api.py #API module: starts a web server implemented with Flask and exposes the proxies to the outside

    from flask import Flask, g
    from .db import RedisClient

    __all__ = ['app']

    app = Flask(__name__)

    def get_conn():
        """
        Open a new Redis connection if there is none yet for the
        current application context.
        """
        if not hasattr(g, 'redis_client'):
            g.redis_client = RedisClient()
        return g.redis_client

    @app.route('/')
    def index():
        return '<h2>Welcome to Proxy Pool System</h2>'

    @app.route('/get')
    def get_proxy():
        """
        Get a proxy.
        """
        conn = get_conn()
        return conn.pop()

    @app.route('/count')
    def get_counts():
        """
        Get the number of proxies in the pool.
        """
        conn = get_conn()
        return str(conn.queue_len)

    if __name__ == '__main__':
        app.run()

(3-8) proxypool/utils.py #utilities

    import requests
    import asyncio
    import aiohttp
    from requests.exceptions import ConnectionError
    from fake_useragent import UserAgent, FakeUserAgentError
    import random

    def get_page(url, options=None):
        try:
            user_agent = UserAgent().random
        except FakeUserAgentError:
            # Fall back to a fixed string so the header can still be built
            # (placeholder value, not part of the original code)
            user_agent = 'Mozilla/5.0'
        base_headers = {
            'User-Agent': user_agent,
            'Accept-Encoding': 'gzip, deflate, sdch',
            'Accept-Language': 'zh-CN,zh;q=0.8'
        }
        # Extra headers passed in by the caller override the defaults
        headers = dict(base_headers, **(options or {}))
        print('Getting', url)
        try:
            r = requests.get(url, headers=headers)
            print('Getting result', url, r.status_code)
            if r.status_code == 200:
                return r.text
        except ConnectionError:
            print('Crawling Failed', url)
            return None

    class Downloader(object):
        """
        An asynchronous downloader that can fetch the proxy sources
        concurrently, but is easily banned.
        """
        def __init__(self, urls):
            self.urls = urls
            self._htmls = []

        async def download_single_page(self, url):
            async with aiohttp.ClientSession() as session:
                async with session.get(url) as resp:
                    self._htmls.append(await resp.text())

        def download(self):
            loop = asyncio.get_event_loop()
            # Wrap the coroutines in tasks: asyncio.wait() rejects bare
            # coroutines on Python 3.11 and later
            tasks = [loop.create_task(self.download_single_page(url)) for url in self.urls]
            loop.run_until_complete(asyncio.wait(tasks))

        @property
        def htmls(self):
            self.download()
            return self._htmls

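(3-9) proxypool/setting.py #settings

    # Address and port of the Redis database
    HOST = 'localhost'
    PORT = 6379

    # If Redis has a password, put it here; otherwise set this to None or ''
    PASSWORD = ''

    # Timeout (in seconds) when testing a proxy
    get_proxy_timeout = 9

    # Limits on the number of proxies in the pool
    POOL_LOWER_THRESHOLD = 20
    POOL_UPPER_THRESHOLD = 100

    # Check cycles (in seconds)
    VALID_CHECK_CYCLE = 60
    POOL_LEN_CHECK_CYCLE = 20

    # Test URL; Baidu is used for testing
    TEST_API = 'http://www.baidu.com'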
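The two pool thresholds work as a pair: refilling starts only when the pool drops below `POOL_LOWER_THRESHOLD` and, once started, continues until it reaches `POOL_UPPER_THRESHOLD`. The sketch below replays that rule over a sequence of observed queue lengths. It is a simplified model under stated assumptions: `adder_states` is a hypothetical function, and the real `check_pool` blocks inside `add_to_queue` instead of tracking a flag.

```python
POOL_LOWER_THRESHOLD = 20
POOL_UPPER_THRESHOLD = 100


def adder_states(lengths, lower=POOL_LOWER_THRESHOLD, upper=POOL_UPPER_THRESHOLD):
    """For each observed queue length, report whether the adder would be filling."""
    filling = False
    states = []
    for length in lengths:
        if not filling and length < lower:
            filling = True   # pool ran low: start crawling and testing
        elif filling and length >= upper:
            filling = False  # pool is full enough: stop adding
        states.append(filling)
    return states


if __name__ == '__main__':
    print(adder_states([50, 19, 60, 99, 100, 50]))
```

The gap between the two values keeps the crawler from being triggered again every time a single proxy is consumed near the limit.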

Source: oschina

Link: https://my.oschina.net/u/4045790/blog/3098856