Question

I’ve been looking into OpenCV and Pillow (and ImageMagick outside of Python, especially Fred's Image Magick Scripts) to achieve the following:

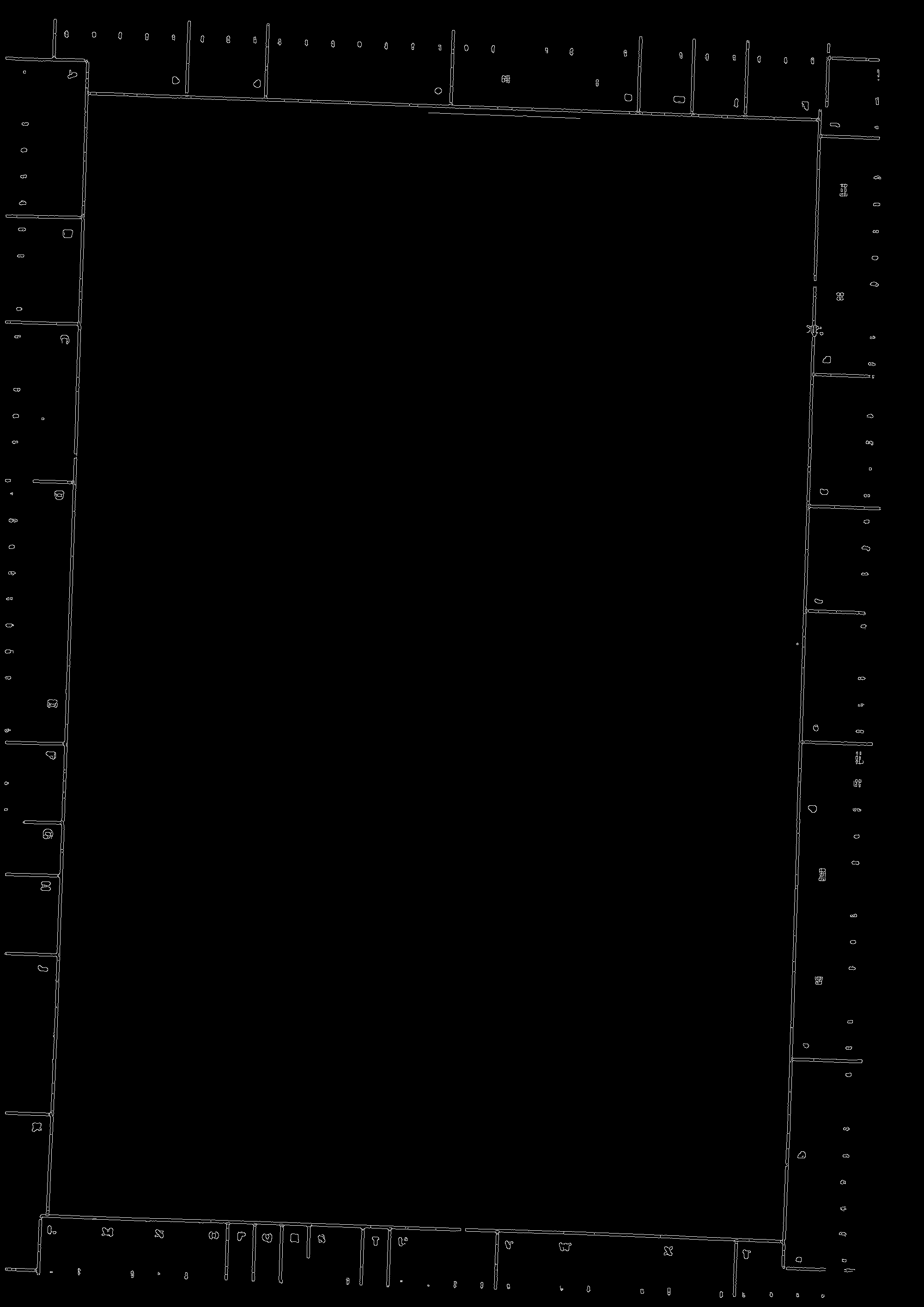

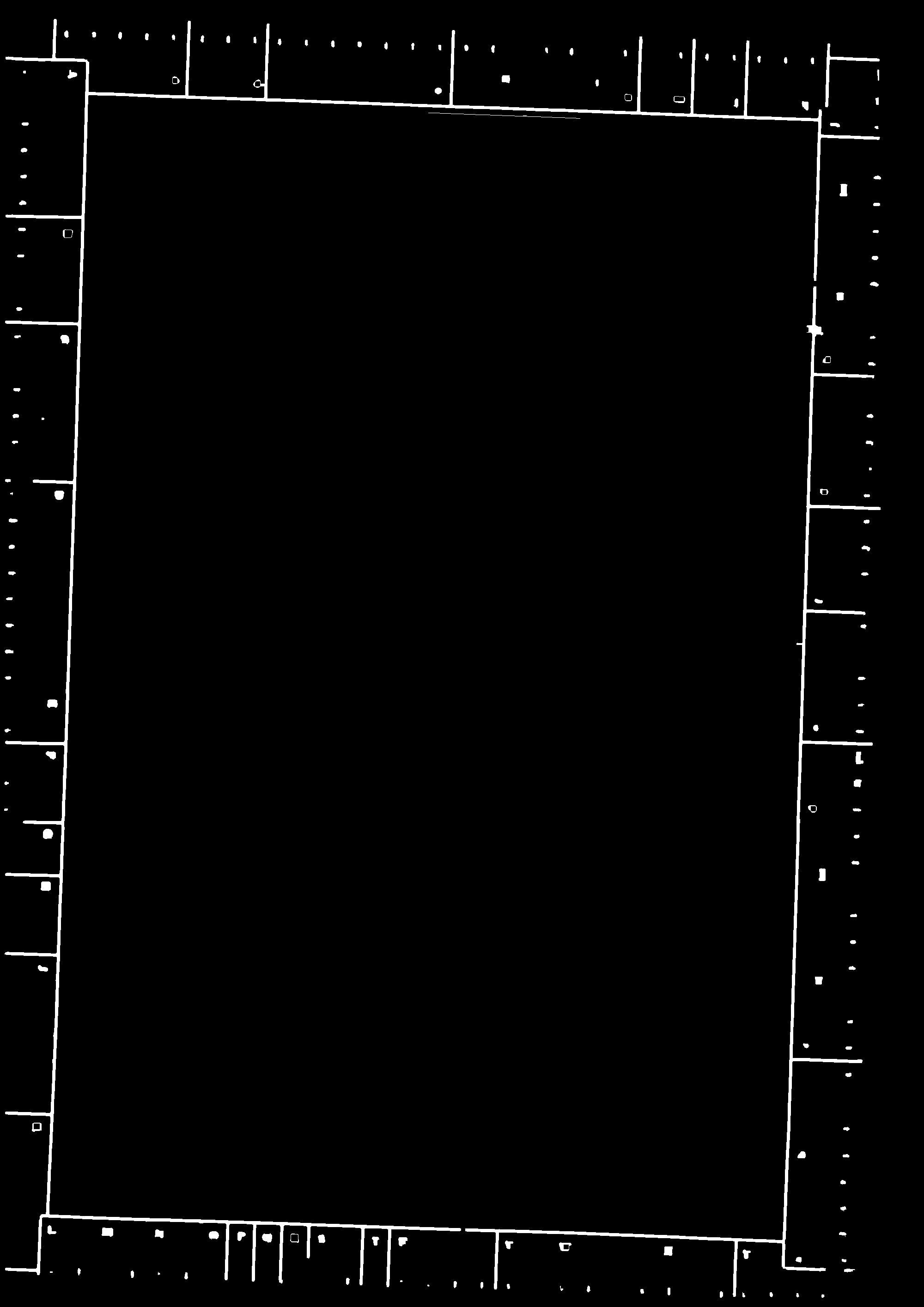

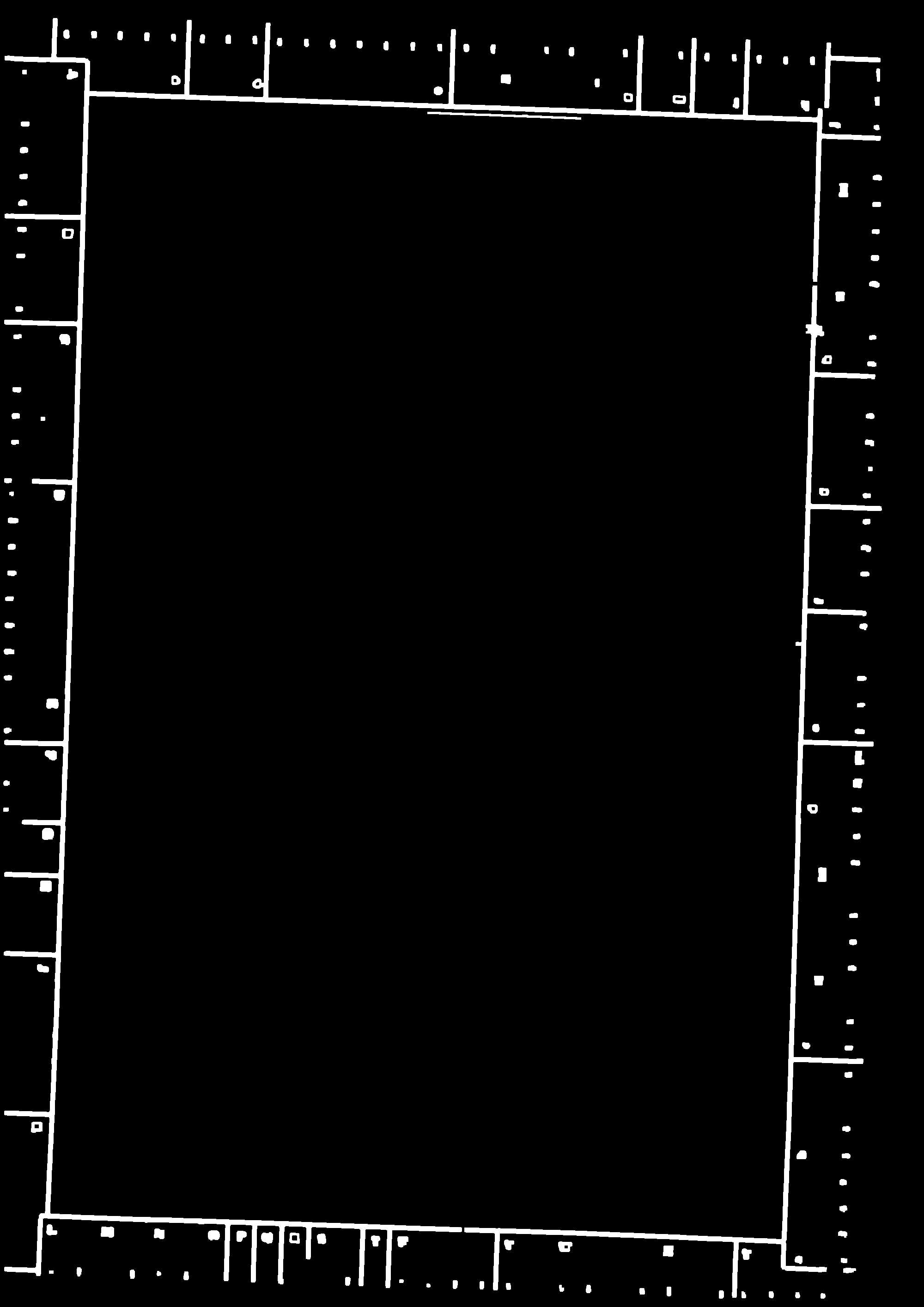

Auto identification of an inner black border in scanned Images and cropping of the images to this said border. Here is a blacked out example Image, the first is the "original" and the second one with a red highlight around the black border is what I am looking to achieve:

The problem is that the border is not on the outside of the images and the scans differ greatly in quality, so the border is never in the same spot and it is not possible to crop at fixed pixel coordinates.

Edit: I'm looking for a way to crop the image, keeping only what is inside the black border (the part that is blurred right now).

I'm looking for help with a) whether such cropping is possible and b) how to do it, preferably with Python.

Thanks!

Answer 1:

Here is a pretty simple way to do that in ImageMagick:

- Get the center coordinates

- Clone the image and do the following on the clone

- Threshold the image so that the inside of the black lines is white

- (If necessary, use -connected-components to merge smaller black features into the white in the center)

- Apply some morphology open to make sure that the black lines are continuous

- Floodfill the image with red starting in the center

- Convert non-red to black and red to white

- Put the processed clone into the alpha channel of the input

Input:

center=$(convert img.jpg -format "%[fx:w/2],%[fx:h/2]\n" info:)

convert img.jpg \
\( +clone -auto-level -threshold 35% \
-morphology open disk:5 \
-fill red -draw "color $center floodfill" -alpha off \
-fill black +opaque red -fill white -opaque red \) \
-alpha off -compose copy_opacity -composite result.png

Here is Python Wand code that is the equivalent to the above:

#!/bin/python3.7

from wand.image import Image
from wand.drawing import Drawing
from wand.color import Color
from wand.display import display

with Image(filename='black_rect.jpg') as img:
    with img.clone() as copied:
        copied.auto_level()
        copied.threshold(threshold=0.35)
        copied.morphology(method='open', kernel='disk:5')
        centx=round(0.5*copied.width)
        centy=round(0.5*copied.height)
        with Drawing() as draw:
            draw.fill_color='red'
            draw.color(x=centx, y=centy, paint_method='floodfill')
            draw(copied)
        copied.opaque_paint(target='red', fill='black', fuzz=0.0, invert=True)
        copied.opaque_paint(target='red', fill='white', fuzz=0.0, invert=False)
        display(copied)
        copied.alpha_channel = 'copy'
        img.composite(copied, left=0, top=0, operator='copy_alpha')
        img.format='png'
        display(img)
        img.save(filename='black_rect_interior.png')

For OpenCV, I would suggest the following processing as one way to do it (sorry, I am not proficient with OpenCV):

- Threshold the image so that the inside of the black lines is white

- Apply some morphology open to make sure that the black lines are continuous

- Get the contours of the white regions

- Get the largest interior contour and fill the inside with white

- Put that result into the alpha channel of the input
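The steps above can be sketched roughly as follows. To keep it self-contained this uses plain NumPy with a breadth-first flood fill as a stand-in for OpenCV's contour functions, it skips the morphology step, and it runs on a tiny synthetic page. The function names (`largest_interior_region`, `crop_to_mask`) and the threshold of 90 (roughly 35% of 255) are assumptions made for illustration, not part of any library.

```python
from collections import deque

import numpy as np


def largest_interior_region(gray, thresh=90):
    """Boolean mask of the largest bright region that does not touch the image edge."""
    white = gray > thresh
    h, w = white.shape
    seen = np.zeros((h, w), dtype=bool)
    best = np.zeros((h, w), dtype=bool)
    best_size = 0
    for sy in range(h):
        for sx in range(w):
            if not white[sy, sx] or seen[sy, sx]:
                continue
            # Breadth-first flood fill of one 4-connected white region.
            queue = deque([(sy, sx)])
            seen[sy, sx] = True
            pixels = []
            touches_edge = False
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                if y in (0, h - 1) or x in (0, w - 1):
                    touches_edge = True
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and white[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            # Keep the biggest region that is fully enclosed (the page margin touches the edge).
            if not touches_edge and len(pixels) > best_size:
                best_size = len(pixels)
                ys, xs = zip(*pixels)
                best = np.zeros((h, w), dtype=bool)
                best[list(ys), list(xs)] = True
    return best


def crop_to_mask(image, mask):
    """Crop the image to the bounding box of the True pixels in mask."""
    ys, xs = np.nonzero(mask)
    return image[ys.min():ys.max() + 1, xs.min():xs.max() + 1]


# Synthetic "scan": white page, 2-pixel black frame, white interior.
page = np.full((40, 30), 255, dtype=np.uint8)
page[5:35, 4:26] = 0
page[7:33, 6:24] = 255

inside = largest_interior_region(page)
cropped = crop_to_mask(page, inside)
print(cropped.shape)  # (26, 18)

# The mask can also serve as an alpha channel for the input.
rgba = np.dstack([page, page, page, inside.astype(np.uint8) * 255])
```

The pure-Python loop is slow on a full-size scan; with OpenCV installed, `cv2.findContours` or `cv2.connectedComponentsWithStats` would be the natural replacement for the flood fill.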

ADDITION:

For those interested, here is a longer method that would be conducive to perspective rectification. I do something similar to what nathancy has done, but in Imagemagick.

First, threshold the image and do morphology open to be sure the black lines are continuous.

Then do connected components to get the ID number of the largest white region

Then extract that region

id=$(convert img.jpg -auto-level -threshold 35% \
-morphology open disk:5 -type bilevel \
-define connected-components:mean-color=true \
-define connected-components:verbose=true \
-connected-components 8 null: | grep "gray(255)" | head -n 1 | awk '{print $1}' | sed 's/[:]*$//')
echo $id

convert img.jpg -auto-level -threshold 35% \
-morphology open disk:5 -type bilevel \
-define connected-components:mean-color=true \
-define connected-components:keep=$id \
-connected-components 8 \
-alpha extract -morphology erode disk:5 \
region.png

Now do Canny edge detection and hough line transform. Here I save the canny image, the hough lines as red lines and the lines overlaid on the image and the line information, which is saved in the .mvg file.

convert region.png \
\( +clone -canny 0x1+10%+30% +write region_canny.png \
-background none -fill red -stroke red -strokewidth 2 \
-hough-lines 9x9+400 +write region_lines.png +write lines.mvg \) \
-compose over -composite region_hough.png

convert region_lines.png -alpha extract region_bw_lines.png

# Hough line transform: 9x9+400
viewbox 0 0 2000 2829
# x1,y1 x2,y2 # count angle distance
line 0,202.862 2000,272.704 # 763 92 824
line 204.881,0 106.09,2829 # 990 2 1156
line 1783.84,0 1685.05,2829 # 450 2 2734
line 0,2620.34 2000,2690.18 # 604 92 3240

Next I use a script that I wrote to do corner detection. Here I use the Harris detector.

corners=$(corners -m harris -t 40 -d 5 -p yes region_bw_lines.png region_bw_lines_corners.png)
echo "$corners"

pt=1 coords=195.8,207.8
pt=2 coords=1772.8,262.8
pt=3 coords=111.5,2622.5
pt=4 coords=1688.5,2677.5

Next I extract and sort just the corners in clockwise fashion. The following is some code I wrote that I converted from here

list=$(echo "$corners" | sed -n 's/^.*=\(.*\)$/\1/p' | tr "\n" " " | sed 's/[ ]*$//' )
echo "$list"

195.8,207.8 1772.8,262.8 111.5,2622.5 1688.5,2677.5

# sort on x
xlist=`echo "$list" | tr " " "\n" | sort -n -t "," -k1,1`
leftmost=`echo "$xlist" | head -n 2`
rightmost=`echo "$xlist" | tail -n +3`
rightmost1=`echo "$rightmost" | head -n 1`
rightmost2=`echo "$rightmost" | tail -n +2`

# sort leftmost on y
leftmost2=`echo "$leftmost" | sort -n -t "," -k2,2`
topleft=`echo "$leftmost2" | head -n 1`
btmleft=`echo "$leftmost2" | tail -n +2`

# get distance from topleft to rightmost1 and rightmost2; largest is bottom right
topleftx=`echo "$topleft" | cut -d, -f1`
toplefty=`echo "$topleft" | cut -d, -f2`
rightmost1x=`echo "$rightmost1" | cut -d, -f1`
rightmost1y=`echo "$rightmost1" | cut -d, -f2`
rightmost2x=`echo "$rightmost2" | cut -d, -f1`
rightmost2y=`echo "$rightmost2" | cut -d, -f2`
dist1=`convert xc: -format "%[fx:hypot(($topleftx-$rightmost1x),($toplefty-$rightmost1y))]" info:`
dist2=`convert xc: -format "%[fx:hypot(($topleftx-$rightmost2x),($toplefty-$rightmost2y))]" info:`
test=`convert xc: -format "%[fx:$dist1>$dist2?1:0]" info:`
if [ $test -eq 1 ]; then
    btmright=$rightmost1
    topright=$rightmost2
else
    btmright=$rightmost2
    topright=$rightmost1
fi
sort_corners="$topleft $topright $btmright $btmleft"
echo $sort_corners

195.8,207.8 1772.8,262.8 1688.5,2677.5 111.5,2622.5
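For anyone who prefers Python, the same sorting logic can be sketched like this. It follows the shell steps above (split on x, sort the left pair on y, then pick the bottom-right as the right-hand point farthest from the top-left); the function name `sort_corners_clockwise` is just an illustrative choice.

```python
import math


def sort_corners_clockwise(points):
    """Order four (x, y) points as top-left, top-right, bottom-right, bottom-left."""
    by_x = sorted(points, key=lambda p: p[0])
    left, right = by_x[:2], by_x[2:]

    # Of the two left-most points, the one with the smaller y is the top-left.
    top_left, bottom_left = sorted(left, key=lambda p: p[1])

    # Of the two right-most points, the one farther from the top-left is the bottom-right.
    def distance_to_top_left(p):
        return math.hypot(p[0] - top_left[0], p[1] - top_left[1])

    top_right, bottom_right = sorted(right, key=distance_to_top_left)
    return [top_left, top_right, bottom_right, bottom_left]


corners = [(195.8, 207.8), (1772.8, 262.8), (111.5, 2622.5), (1688.5, 2677.5)]
ordered = sort_corners_clockwise(corners)
print(" ".join(f"{x},{y}" for x, y in ordered))
# 195.8,207.8 1772.8,262.8 1688.5,2677.5 111.5,2622.5
```

Using the distance test for the right-hand pair, rather than a plain sort on y, keeps the result sensible when the quadrilateral is strongly skewed.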

Finally, I use the corner coordinates to draw a white filled polygon on a black background and put that result into the alpha channel of the input image.

convert img.jpg \
\( +clone -fill black -colorize 100 \
-fill white -draw "polygon $sort_corners" \) \
-alpha off -compose copy_opacity -composite result.png

Answer 2:

Alright, long post incoming, buckle up. Here's the strategy:

- Convert image to grayscale and median blur

- Perform canny edge detection

- Perform morphological transformations to smooth image

- Dilate to enhance and connect contours

- Perform line detection and draw desired rectangle ROI onto mask

- Perform Shi-Tomasi corner detection to detect 4 corners

- Order corner points clockwise

- Draw corner points onto 2nd mask and find contour to obtain perfect ROI

- Perform perspective transform on ROI to obtain birds-eye view

- Rotate image to obtain final result

Canny edge detection

Next we perform morphological transformations to close gaps and smooth image (left). Then dilate to enhance contours (right)

From here, we perform line detection with cv2.HoughLinesP() with minimum line length and maximum line gap filters to obtain the large rectangular ROI. We draw this ROI onto a mask

minLineLength = 150
maxLineGap = 250
# Pass these as keywords; positionally, the fifth argument of HoughLinesP is the output array
lines = cv2.HoughLinesP(dilate, 1, np.pi/180, 100, minLineLength=minLineLength, maxLineGap=maxLineGap)
for line in lines:
    for x1,y1,x2,y2 in line:
        cv2.line(mask,(x1,y1),(x2,y2),(255,255,255),2)
mask = cv2.dilate(mask, kernel, iterations=2)
mask = cv2.cvtColor(mask, cv2.COLOR_BGR2GRAY)

Now we perform Shi-Tomasi corner detection with cv2.goodFeaturesToTrack() to detect the four corner coordinates

corners = cv2.goodFeaturesToTrack(mask,4,0.5,1000)
c_list = []
for corner in corners:
    x,y = corner.ravel()
    c_list.append([int(x), int(y)])
    # goodFeaturesToTrack returns floats; cv2.circle needs integer pixel coordinates
    cv2.circle(image,(int(x),int(y)),40,(36,255,12),-1)

Unordered corner coordinates

[[1690, 2693], [113, 2622], [1766, 269], [197, 212]]

From here, we reorder the four corner points clockwise by sorting the coordinates and rearranging them in (top-left, top-right, bottom-right, bottom-left) order. This step is important to obtain a top-down view of the ROI when we perform the perspective transform.

Ordered corner coordinates

[[197,212], [1766,269], [1690,2693], [113,2622]]

After reordering, we draw the points onto a 2nd mask to obtain the perfect ROI

We now perform a perspective transform on the ROI to obtain a top-down view of the image
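To make the sizing step of the perspective transform concrete, here is a small standalone sketch of how the output width and height fall out of the ordered corners listed above. It mirrors the distance calculation in `perspective_transform` in the full code, but the helper name `warp_output_size` is my own, and it uses only the standard library.

```python
import math


def warp_output_size(top_l, top_r, bottom_r, bottom_l):
    """Output (width, height) for a top-down warp of the given quadrilateral."""
    def dist(p, q):
        return math.hypot(p[0] - q[0], p[1] - q[1])

    # Width: the longer of the bottom and top edges; height: the longer of the right and left edges.
    width = max(int(dist(bottom_r, bottom_l)), int(dist(top_r, top_l)))
    height = max(int(dist(top_r, bottom_r)), int(dist(top_l, bottom_l)))
    return width, height


ordered = [(197, 212), (1766, 269), (1690, 2693), (113, 2622)]
width, height = warp_output_size(*ordered)
print(width, height)  # 1578 2425

# Destination corners, in the same top-left, top-right, bottom-right, bottom-left order.
destination = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
```

The source and destination corners must be listed in the same order, which is why the reordering step matters before calling `cv2.getPerspectiveTransform`.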

Finally we rotate it -90 degrees to obtain our desired result
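The rotation helper in the full code enlarges the canvas so nothing is clipped. A minimal sketch of just that bounding-size calculation, with a hypothetical `rotated_bounds` function and an example size picked for illustration, looks like this. Note that it rounds where the full code truncates with `int()`.

```python
import math


def rotated_bounds(width, height, angle_degrees):
    """Size of the axis-aligned box that holds a width x height image rotated about its center."""
    theta = math.radians(angle_degrees)
    cos, sin = abs(math.cos(theta)), abs(math.sin(theta))
    new_w = round(height * sin + width * cos)
    new_h = round(height * cos + width * sin)
    return new_w, new_h


print(rotated_bounds(1578, 2425, -90))  # (2425, 1578): a quarter turn swaps width and height
print(rotated_bounds(1578, 2425, 0))    # (1578, 2425)
```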

import cv2
import numpy as np

def rotate_image(image, angle):
    # Grab the dimensions of the image and then determine the center
    (h, w) = image.shape[:2]
    (cX, cY) = (w / 2, h / 2)

    # grab the rotation matrix (applying the negative of the
    # angle to rotate clockwise), then grab the sine and cosine
    # (i.e., the rotation components of the matrix)
    M = cv2.getRotationMatrix2D((cX, cY), -angle, 1.0)
    cos = np.abs(M[0, 0])
    sin = np.abs(M[0, 1])

    # Compute the new bounding dimensions of the image
    nW = int((h * sin) + (w * cos))
    nH = int((h * cos) + (w * sin))

    # Adjust the rotation matrix to take into account translation
    M[0, 2] += (nW / 2) - cX
    M[1, 2] += (nH / 2) - cY

    # Perform the actual rotation and return the image
    return cv2.warpAffine(image, M, (nW, nH))

def order_points_clockwise(pts):
    # sort the points based on their x-coordinates
    xSorted = pts[np.argsort(pts[:, 0]), :]

    # grab the left-most and right-most points from the sorted
    # x-coordinate points
    leftMost = xSorted[:2, :]
    rightMost = xSorted[2:, :]

    # now, sort the left-most coordinates according to their
    # y-coordinates so we can grab the top-left and bottom-left
    # points, respectively
    leftMost = leftMost[np.argsort(leftMost[:, 1]), :]
    (tl, bl) = leftMost

    # now, sort the right-most coordinates according to their
    # y-coordinates so we can grab the top-right and bottom-right
    # points, respectively
    rightMost = rightMost[np.argsort(rightMost[:, 1]), :]
    (tr, br) = rightMost

    # return the coordinates in top-left, top-right,
    # bottom-right, and bottom-left order
    return np.array([tl, tr, br, bl], dtype="int32")

def perspective_transform(image, corners):
    def order_corner_points(corners):
        # Separate corners into individual points
        # Index 0 - top-right
        #       1 - top-left
        #       2 - bottom-left
        #       3 - bottom-right
        corners = [(corner[0][0], corner[0][1]) for corner in corners]
        top_r, top_l, bottom_l, bottom_r = corners[0], corners[1], corners[2], corners[3]
        return (top_l, top_r, bottom_r, bottom_l)

    # Order points in clockwise order
    ordered_corners = order_corner_points(corners)
    top_l, top_r, bottom_r, bottom_l = ordered_corners

    # Determine width of new image which is the max distance between
    # (bottom right and bottom left) or (top right and top left) x-coordinates
    width_A = np.sqrt(((bottom_r[0] - bottom_l[0]) ** 2) + ((bottom_r[1] - bottom_l[1]) ** 2))
    width_B = np.sqrt(((top_r[0] - top_l[0]) ** 2) + ((top_r[1] - top_l[1]) ** 2))
    width = max(int(width_A), int(width_B))

    # Determine height of new image which is the max distance between
    # (top right and bottom right) or (top left and bottom left) y-coordinates
    height_A = np.sqrt(((top_r[0] - bottom_r[0]) ** 2) + ((top_r[1] - bottom_r[1]) ** 2))
    height_B = np.sqrt(((top_l[0] - bottom_l[0]) ** 2) + ((top_l[1] - bottom_l[1]) ** 2))
    height = max(int(height_A), int(height_B))

    # Construct new points to obtain top-down view of image in
    # top_l, top_r, bottom_r, bottom_l order
    dimensions = np.array([[0, 0], [width - 1, 0], [width - 1, height - 1],
                    [0, height - 1]], dtype = "float32")

    # Convert to Numpy format
    ordered_corners = np.array(ordered_corners, dtype="float32")

    # Find perspective transform matrix
    matrix = cv2.getPerspectiveTransform(ordered_corners, dimensions)

    # Return the transformed image
    return cv2.warpPerspective(image, matrix, (width, height))

image = cv2.imread('1.jpg')
original = image.copy()
mask = np.zeros(image.shape, np.uint8)
clean_mask = np.zeros(image.shape, np.uint8)
blur = cv2.medianBlur(image, 9)
gray = cv2.cvtColor(blur, cv2.COLOR_BGR2GRAY)
canny = cv2.Canny(gray, 120, 255, 1)
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5,5))
close = cv2.morphologyEx(canny, cv2.MORPH_CLOSE, kernel, iterations=2)
dilate = cv2.dilate(close, kernel, iterations=1)

minLineLength = 150
maxLineGap = 250
# Pass these as keywords; positionally, the fifth argument of HoughLinesP is the output array
lines = cv2.HoughLinesP(dilate, 1, np.pi/180, 100, minLineLength=minLineLength, maxLineGap=maxLineGap)
for line in lines:
    for x1,y1,x2,y2 in line:
        cv2.line(mask,(x1,y1),(x2,y2),(255,255,255),2)
mask = cv2.dilate(mask, kernel, iterations=2)
mask = cv2.cvtColor(mask, cv2.COLOR_BGR2GRAY)
cv2.imwrite('mask.png', mask)

corners = cv2.goodFeaturesToTrack(mask,4,0.5,1000)
c_list = []
for corner in corners:
    x,y = corner.ravel()
    c_list.append([int(x), int(y)])
    # goodFeaturesToTrack returns floats; cv2.circle needs integer pixel coordinates
    cv2.circle(image,(int(x),int(y)),40,(36,255,12),-1)
cv2.imwrite('corner_points.png', image)

corner_points = np.array([c_list[0], c_list[1], c_list[2], c_list[3]])
ordered_corner_points = order_points_clockwise(corner_points)
ordered_corner_points = np.array(ordered_corner_points).reshape((-1,1,2)).astype(np.int32)
cv2.drawContours(clean_mask, [ordered_corner_points], -1, (255, 255, 255), 2)
cv2.imwrite('clean_mask.png', clean_mask)

clean_mask = cv2.cvtColor(clean_mask, cv2.COLOR_BGR2GRAY)
cnts = cv2.findContours(clean_mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
for c in cnts:
    # approximate the contour
    peri = cv2.arcLength(c, True)
    approx = cv2.approxPolyDP(c, 0.015 * peri, True)
    if len(approx) == 4:
        transformed = perspective_transform(original, approx)

# Rotate image
result = rotate_image(transformed, -90)

cv2.imshow('image', image)
cv2.imwrite('close.png', close)
cv2.imwrite('canny.png', canny)
cv2.imwrite('dilate.png', dilate)
cv2.imshow('clean_mask', clean_mask)
cv2.imwrite('image.png', image)
cv2.imshow('result', result)
cv2.imwrite('result.png', result)
cv2.waitKey()

Edit: Another method

This is very similar to the method above, but instead of using corner detection to find the ROI, we extract the largest internal contour, using contour area as a filter, and then use a mask to get the same results. The perspective transform is done the same way.

import cv2
import numpy as np

def rotate_image(image, angle):
    # Grab the dimensions of the image and then determine the center
    (h, w) = image.shape[:2]
    (cX, cY) = (w / 2, h / 2)

    # grab the rotation matrix (applying the negative of the
    # angle to rotate clockwise), then grab the sine and cosine
    # (i.e., the rotation components of the matrix)
    M = cv2.getRotationMatrix2D((cX, cY), -angle, 1.0)
    cos = np.abs(M[0, 0])
    sin = np.abs(M[0, 1])

    # Compute the new bounding dimensions of the image
    nW = int((h * sin) + (w * cos))
    nH = int((h * cos) + (w * sin))

    # Adjust the rotation matrix to take into account translation
    M[0, 2] += (nW / 2) - cX
    M[1, 2] += (nH / 2) - cY

    # Perform the actual rotation and return the image
    return cv2.warpAffine(image, M, (nW, nH))

def perspective_transform(image, corners):
    def order_corner_points(corners):
        # Separate corners into individual points
        # Index 0 - top-right
        #       1 - top-left
        #       2 - bottom-left
        #       3 - bottom-right
        corners = [(corner[0][0], corner[0][1]) for corner in corners]
        top_r, top_l, bottom_l, bottom_r = corners[0], corners[1], corners[2], corners[3]
        return (top_l, top_r, bottom_r, bottom_l)

    # Order points in clockwise order
    ordered_corners = order_corner_points(corners)
    top_l, top_r, bottom_r, bottom_l = ordered_corners

    # Determine width of new image which is the max distance between
    # (bottom right and bottom left) or (top right and top left) x-coordinates
    width_A = np.sqrt(((bottom_r[0] - bottom_l[0]) ** 2) + ((bottom_r[1] - bottom_l[1]) ** 2))
    width_B = np.sqrt(((top_r[0] - top_l[0]) ** 2) + ((top_r[1] - top_l[1]) ** 2))
    width = max(int(width_A), int(width_B))

    # Determine height of new image which is the max distance between
    # (top right and bottom right) or (top left and bottom left) y-coordinates
    height_A = np.sqrt(((top_r[0] - bottom_r[0]) ** 2) + ((top_r[1] - bottom_r[1]) ** 2))
    height_B = np.sqrt(((top_l[0] - bottom_l[0]) ** 2) + ((top_l[1] - bottom_l[1]) ** 2))
    height = max(int(height_A), int(height_B))

    # Construct new points to obtain top-down view of image in
    # top_l, top_r, bottom_r, bottom_l order
    dimensions = np.array([[0, 0], [width - 1, 0], [width - 1, height - 1],
                    [0, height - 1]], dtype = "float32")

    # Convert to Numpy format
    ordered_corners = np.array(ordered_corners, dtype="float32")

    # Find perspective transform matrix
    matrix = cv2.getPerspectiveTransform(ordered_corners, dimensions)

    # Return the transformed image
    return cv2.warpPerspective(image, matrix, (width, height))

image = cv2.imread('1.jpg')
original = image.copy()
mask = np.zeros(image.shape, np.uint8)
clean_mask = np.zeros(image.shape, np.uint8)
blur = cv2.medianBlur(image, 9)
gray = cv2.cvtColor(blur, cv2.COLOR_BGR2GRAY)
canny = cv2.Canny(gray, 120, 255, 1)
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5,5))
close = cv2.morphologyEx(canny, cv2.MORPH_CLOSE, kernel, iterations=2)
dilate = cv2.dilate(close, kernel, iterations=1)

minLineLength = 150
maxLineGap = 250
# Pass these as keywords; positionally, the fifth argument of HoughLinesP is the output array
lines = cv2.HoughLinesP(dilate, 1, np.pi/180, 100, minLineLength=minLineLength, maxLineGap=maxLineGap)
for line in lines:
    for x1,y1,x2,y2 in line:
        cv2.line(mask,(x1,y1),(x2,y2),(255,255,255),2)
mask = cv2.dilate(mask, kernel, iterations=2)
mask = cv2.cvtColor(mask, cv2.COLOR_BGR2GRAY)
cv2.imwrite('mask.png', mask)

# Keep the largest contours by area and fill them on a clean mask
cnts = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
cnts = sorted(cnts, key = cv2.contourArea, reverse = True)[:10]
for c in cnts:
    cv2.drawContours(clean_mask, [c], -1, (255, 255, 255), -1)

clean_mask = cv2.morphologyEx(clean_mask, cv2.MORPH_OPEN, kernel, iterations=5)
result_no_transform = cv2.bitwise_and(clean_mask, image)

clean_mask = cv2.cvtColor(clean_mask, cv2.COLOR_BGR2GRAY)
cnts = cv2.findContours(clean_mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
for c in cnts:
    # approximate the contour
    peri = cv2.arcLength(c, True)
    approx = cv2.approxPolyDP(c, 0.015 * peri, True)
    if len(approx) == 4:
        transformed = perspective_transform(original, approx)

result = rotate_image(transformed, -90)

cv2.imwrite('transformed.png', transformed)
cv2.imwrite('result_no_transform.png', result_no_transform)
cv2.imwrite('result.png', result)
cv2.imwrite('clean_mask.png', clean_mask)

Source: https://stackoverflow.com/questions/57158869/autocropping-images-to-extract-inner-black-border-roi-using-python