Write parquet from AWS Kinesis firehose to AWS S3

I would like to ingest data into S3 from Kinesis Firehose, formatted as parquet. So far I have only found a solution that involves creating an EMR cluster, but I am looking for somethi

-

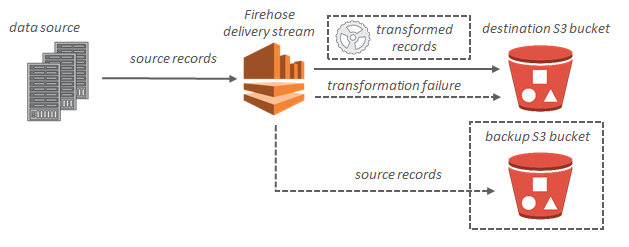

Amazon Kinesis Firehose receives streaming records and can store them in Amazon S3 (or Amazon Redshift or Amazon Elasticsearch Service).

Each record can be up to 1000KB.

However, records are appended together into a text file, with batching based upon time or size. Typically, records are in JSON format.
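To make the batching behaviour concrete, here is a minimal sketch in plain Python. It is not the Firehose API: `BatchBuffer`, `max_bytes`, `max_seconds` and `delivered` are hypothetical names, and the newline between records is added by the sketch itself. It only models the idea that records accumulate until a size or age threshold is reached, and are then written out as a single text object.

```python
import json
import time


class BatchBuffer:
    """Toy model of a size-or-time buffered delivery stream (hypothetical)."""

    def __init__(self, max_bytes, max_seconds, clock=time.monotonic):
        self.max_bytes = max_bytes
        self.max_seconds = max_seconds
        self.clock = clock
        self.delivered = []   # each entry models one object written to S3
        self._chunks = []
        self._size = 0
        self._started = None  # time the first record of the batch arrived

    def put(self, record):
        # Serialize the record as one line of JSON and append it to the batch.
        data = (json.dumps(record) + "\n").encode("utf-8")
        if self._started is None:
            self._started = self.clock()
        self._chunks.append(data)
        self._size += len(data)
        # Flush when the batch is large enough or old enough.
        # (For simplicity, age is only checked when a record arrives.)
        too_big = self._size >= self.max_bytes
        too_old = self.clock() - self._started >= self.max_seconds
        if too_big or too_old:
            self.flush()

    def flush(self):
        # Concatenate the buffered records into a single delivered object.
        if self._chunks:
            self.delivered.append(b"".join(self._chunks))
        self._chunks, self._size, self._started = [], 0, None


buf = BatchBuffer(max_bytes=30, max_seconds=60)
for i in (1, 2, 3):
    buf.put({"id": i})
print(buf.delivered[0].decode("utf-8"))
```

The delivered object is just the records concatenated one after another, which is why the output is a text file rather than a structured binary format.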

You will be unable to send a parquet file because it will not conform to this file format.

It is possible to trigger a Lambda data transformation function, but this will not be capable of outputting a parquet file either.

In fact, given the nature of parquet files, it is unlikely that you could build them one record at a time. Being a columnar storage format, I suspect that they really need to be created in a batch rather than having data appended per-record.
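The point about columnar storage can be illustrated with a small sketch. This is not the parquet format itself, only the layout idea behind it; `rows_to_columns` is a hypothetical helper. Each column's values are stored together, so the writer needs the whole batch of rows before it can lay out any one column.

```python
def rows_to_columns(rows):
    """Pivot a batch of row dicts into one list of values per column."""
    # Collect every column name that appears anywhere in the batch.
    names = sorted({key for row in rows for key in row})
    # Missing values become None so that all columns have the same length.
    return {name: [row.get(name) for row in rows] for name in names}


batch = [
    {"id": 1, "temp": 20.5},
    {"id": 2, "temp": 21.0},
    {"id": 3, "temp": 19.8},
]
columns = rows_to_columns(batch)
print(columns)
```

Appending a fourth record to the row-oriented form means adding one line at the end. In the column-oriented form it means extending every column, which is why such files are normally produced from a complete batch.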

Bottom line: Nope.
