Wait for bash background jobs in script to be finished

To maximize CPU usage (I run things on Debian Lenny in EC2), I have a simple script that launches jobs in parallel:

#!/bin/bash

for i in apache-200901*.log; do

-

Using GNU Parallel will make your script even shorter and possibly more efficient:

parallel 'echo "Processing "{}" ..."; do_something_important {}' ::: apache-*.log

This will run one job per CPU core and continue to do that until all files are processed.
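A hand-rolled script that wants the same one-job-per-core behaviour has to look up the core count itself. Here is a minimal sketch, assuming `getconf _NPROCESSORS_ONLN` is available (it is on Linux and macOS, but POSIX does not guarantee it). `cpu_count` is a hypothetical helper name, not part of the original script:

```shell
#!/bin/bash

# cpu_count: print the number of online CPU cores, falling back to 1.
cpu_count() {
    local n
    n=$(getconf _NPROCESSORS_ONLN 2>/dev/null) || n=""
    case $n in
        ''|*[!0-9]*) n=1 ;;   # unknown or non-numeric answer: assume one core
    esac
    echo "$n"
}
```

With GNU Parallel itself none of this is needed. Its `-j` option sets the job count explicitly when the default is not wanted.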

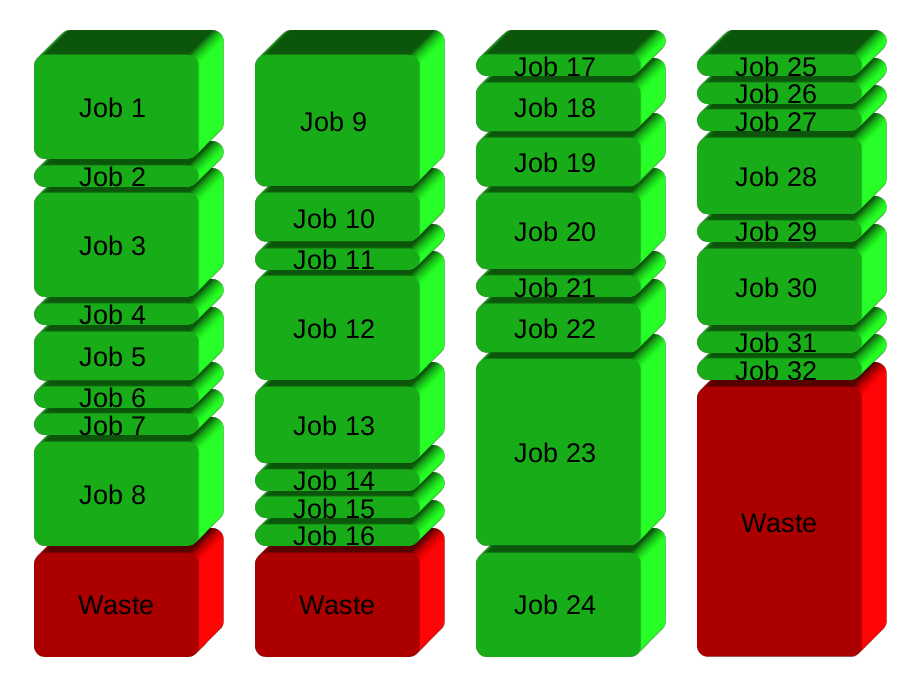

Your solution basically splits the jobs into groups before running them. Here are 32 jobs in 4 groups:
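For reference, the grouped pattern can be sketched in plain bash with `&` and `wait`. This is an illustration under assumptions, not the original script: `process_log` is a hypothetical stand-in for `do_something_important`, and `run_in_batches` is a made-up helper name.

```shell
#!/bin/bash

# Hypothetical stand-in for do_something_important: it only reports the name.
process_log() {
    echo "Processing $1 ..."
}

# run_in_batches BATCH_SIZE ITEM...
# Starts BATCH_SIZE background jobs, waits for all of them to exit,
# and only then starts the next batch.
run_in_batches() {
    local batch_size=$1; shift
    local running=0 item
    for item in "$@"; do
        process_log "$item" &
        running=$((running + 1))
        if [ "$running" -ge "$batch_size" ]; then
            wait            # block until every job in this batch has exited
            running=0
        fi
    done
    wait                    # catch the final, possibly partial, batch
}
```

Calling `run_in_batches 4 apache-200901*.log` would process four files at a time. The weakness is visible in the inner `wait`: the whole batch idles until its slowest job is done.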

GNU Parallel instead spawns a new process as soon as one finishes, keeping the CPUs active and thus saving time:
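Where GNU Parallel is not installed, a similar "start the next job as soon as a slot frees up" behaviour can be approximated with `xargs -P` (supported by GNU and BSD xargs). This is a sketch of that substitute technique, not of how GNU Parallel works internally. `run_pool` is a hypothetical helper name, and the `echo` is a placeholder for the real work:

```shell
#!/bin/bash

# run_pool MAX_JOBS FILE...
# Keeps up to MAX_JOBS workers busy: xargs starts the next job
# as soon as any running one exits.
run_pool() {
    local max_jobs=$1; shift
    [ "$#" -gt 0 ] || return 0      # nothing to do without file names
    # NUL-delimit the names so spaces or newlines in file names survive.
    printf '%s\0' "$@" |
        xargs -0 -n 1 -P "$max_jobs" sh -c '
            # Placeholder body; the original calls do_something_important here.
            echo "Processing $1 ..."
        ' _
}
```

For example, `run_pool 4 apache-*.log` keeps four workers running until every file is handled. `xargs` returns only after all of its children have exited, so no separate `wait` is needed. On bash 4.3 or newer, `wait -n` is another way to get a similar effect.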

To learn more:

- Watch the intro video for a quick introduction: https://www.youtube.com/playlist?list=PL284C9FF2488BC6D1

- Walk through the tutorial (man parallel_tutorial). Your command line will love you for it.
