
Printing the model structure

from torchsummaryX import summary

from torchvision.models import alexnet, resnet34, resnet50, densenet121, vgg16, vgg19, resnet18

import torch

summary(resnet18(pretrained=False), torch.zeros((1, 3, 224, 224)))

====================================================================================================

Kernel Shape Output Shape \

Layer

0_conv1 [3, 64, 7, 7] [1, 64, 112, 112]

1_bn1 [64] [1, 64, 112, 112]

2_relu - [1, 64, 112, 112]

3_maxpool - [1, 64, 56, 56]

4_layer1.0.Conv2d_conv1 [64, 64, 3, 3] [1, 64, 56, 56]

5_layer1.0.BatchNorm2d_bn1 [64] [1, 64, 56, 56]

6_layer1.0.ReLU_relu - [1, 64, 56, 56]

7_layer1.0.Conv2d_conv2 [64, 64, 3, 3] [1, 64, 56, 56]

8_layer1.0.BatchNorm2d_bn2 [64] [1, 64, 56, 56]

9_layer1.0.ReLU_relu - [1, 64, 56, 56]

10_layer1.1.Conv2d_conv1 [64, 64, 3, 3] [1, 64, 56, 56]

11_layer1.1.BatchNorm2d_bn1 [64] [1, 64, 56, 56]

12_layer1.1.ReLU_relu - [1, 64, 56, 56]

13_layer1.1.Conv2d_conv2 [64, 64, 3, 3] [1, 64, 56, 56]

14_layer1.1.BatchNorm2d_bn2 [64] [1, 64, 56, 56]

15_layer1.1.ReLU_relu - [1, 64, 56, 56]

16_layer2.0.Conv2d_conv1 [64, 128, 3, 3] [1, 128, 28, 28]

17_layer2.0.BatchNorm2d_bn1 [128] [1, 128, 28, 28]

18_layer2.0.ReLU_relu - [1, 128, 28, 28]

19_layer2.0.Conv2d_conv2 [128, 128, 3, 3] [1, 128, 28, 28]

20_layer2.0.BatchNorm2d_bn2 [128] [1, 128, 28, 28]

21_layer2.0.downsample.Conv2d_0 [64, 128, 1, 1] [1, 128, 28, 28]

22_layer2.0.downsample.BatchNorm2d_1 [128] [1, 128, 28, 28]

23_layer2.0.ReLU_relu - [1, 128, 28, 28]

24_layer2.1.Conv2d_conv1 [128, 128, 3, 3] [1, 128, 28, 28]

25_layer2.1.BatchNorm2d_bn1 [128] [1, 128, 28, 28]

26_layer2.1.ReLU_relu - [1, 128, 28, 28]

27_layer2.1.Conv2d_conv2 [128, 128, 3, 3] [1, 128, 28, 28]

28_layer2.1.BatchNorm2d_bn2 [128] [1, 128, 28, 28]

29_layer2.1.ReLU_relu - [1, 128, 28, 28]

30_layer3.0.Conv2d_conv1 [128, 256, 3, 3] [1, 256, 14, 14]

31_layer3.0.BatchNorm2d_bn1 [256] [1, 256, 14, 14]

32_layer3.0.ReLU_relu - [1, 256, 14, 14]

33_layer3.0.Conv2d_conv2 [256, 256, 3, 3] [1, 256, 14, 14]

34_layer3.0.BatchNorm2d_bn2 [256] [1, 256, 14, 14]

35_layer3.0.downsample.Conv2d_0 [128, 256, 1, 1] [1, 256, 14, 14]

36_layer3.0.downsample.BatchNorm2d_1 [256] [1, 256, 14, 14]

37_layer3.0.ReLU_relu - [1, 256, 14, 14]

38_layer3.1.Conv2d_conv1 [256, 256, 3, 3] [1, 256, 14, 14]

39_layer3.1.BatchNorm2d_bn1 [256] [1, 256, 14, 14]

40_layer3.1.ReLU_relu - [1, 256, 14, 14]

41_layer3.1.Conv2d_conv2 [256, 256, 3, 3] [1, 256, 14, 14]

42_layer3.1.BatchNorm2d_bn2 [256] [1, 256, 14, 14]

43_layer3.1.ReLU_relu - [1, 256, 14, 14]

44_layer4.0.Conv2d_conv1 [256, 512, 3, 3] [1, 512, 7, 7]

45_layer4.0.BatchNorm2d_bn1 [512] [1, 512, 7, 7]

46_layer4.0.ReLU_relu - [1, 512, 7, 7]

47_layer4.0.Conv2d_conv2 [512, 512, 3, 3] [1, 512, 7, 7]

48_layer4.0.BatchNorm2d_bn2 [512] [1, 512, 7, 7]

49_layer4.0.downsample.Conv2d_0 [256, 512, 1, 1] [1, 512, 7, 7]

50_layer4.0.downsample.BatchNorm2d_1 [512] [1, 512, 7, 7]

51_layer4.0.ReLU_relu - [1, 512, 7, 7]

52_layer4.1.Conv2d_conv1 [512, 512, 3, 3] [1, 512, 7, 7]

53_layer4.1.BatchNorm2d_bn1 [512] [1, 512, 7, 7]

54_layer4.1.ReLU_relu - [1, 512, 7, 7]

55_layer4.1.Conv2d_conv2 [512, 512, 3, 3] [1, 512, 7, 7]

56_layer4.1.BatchNorm2d_bn2 [512] [1, 512, 7, 7]

57_layer4.1.ReLU_relu - [1, 512, 7, 7]

58_avgpool - [1, 512, 1, 1]

59_fc [512, 1000] [1, 1000]

Params (K) Mult-Adds (M)

Layer

0_conv1 9.408 118.014

1_bn1 0.128 6.4e-05

2_relu - -

3_maxpool - -

4_layer1.0.Conv2d_conv1 36.864 115.606

5_layer1.0.BatchNorm2d_bn1 0.128 6.4e-05

6_layer1.0.ReLU_relu - -

7_layer1.0.Conv2d_conv2 36.864 115.606

8_layer1.0.BatchNorm2d_bn2 0.128 6.4e-05

9_layer1.0.ReLU_relu - -

10_layer1.1.Conv2d_conv1 36.864 115.606

11_layer1.1.BatchNorm2d_bn1 0.128 6.4e-05

12_layer1.1.ReLU_relu - -

13_layer1.1.Conv2d_conv2 36.864 115.606

14_layer1.1.BatchNorm2d_bn2 0.128 6.4e-05

15_layer1.1.ReLU_relu - -

16_layer2.0.Conv2d_conv1 73.728 57.8028

17_layer2.0.BatchNorm2d_bn1 0.256 0.000128

18_layer2.0.ReLU_relu - -

19_layer2.0.Conv2d_conv2 147.456 115.606

20_layer2.0.BatchNorm2d_bn2 0.256 0.000128

21_layer2.0.downsample.Conv2d_0 8.192 6.42253

22_layer2.0.downsample.BatchNorm2d_1 0.256 0.000128

23_layer2.0.ReLU_relu - -

24_layer2.1.Conv2d_conv1 147.456 115.606

25_layer2.1.BatchNorm2d_bn1 0.256 0.000128

26_layer2.1.ReLU_relu - -

27_layer2.1.Conv2d_conv2 147.456 115.606

28_layer2.1.BatchNorm2d_bn2 0.256 0.000128

29_layer2.1.ReLU_relu - -

30_layer3.0.Conv2d_conv1 294.912 57.8028

31_layer3.0.BatchNorm2d_bn1 0.512 0.000256

32_layer3.0.ReLU_relu - -

33_layer3.0.Conv2d_conv2 589.824 115.606

34_layer3.0.BatchNorm2d_bn2 0.512 0.000256

35_layer3.0.downsample.Conv2d_0 32.768 6.42253

36_layer3.0.downsample.BatchNorm2d_1 0.512 0.000256

37_layer3.0.ReLU_relu - -

38_layer3.1.Conv2d_conv1 589.824 115.606

39_layer3.1.BatchNorm2d_bn1 0.512 0.000256

40_layer3.1.ReLU_relu - -

41_layer3.1.Conv2d_conv2 589.824 115.606

42_layer3.1.BatchNorm2d_bn2 0.512 0.000256

43_layer3.1.ReLU_relu - -

44_layer4.0.Conv2d_conv1 1179.65 57.8028

45_layer4.0.BatchNorm2d_bn1 1.024 0.000512

46_layer4.0.ReLU_relu - -

47_layer4.0.Conv2d_conv2 2359.3 115.606

48_layer4.0.BatchNorm2d_bn2 1.024 0.000512

49_layer4.0.downsample.Conv2d_0 131.072 6.42253

50_layer4.0.downsample.BatchNorm2d_1 1.024 0.000512

51_layer4.0.ReLU_relu - -

52_layer4.1.Conv2d_conv1 2359.3 115.606

53_layer4.1.BatchNorm2d_bn1 1.024 0.000512

54_layer4.1.ReLU_relu - -

55_layer4.1.Conv2d_conv2 2359.3 115.606

56_layer4.1.BatchNorm2d_bn2 1.024 0.000512

57_layer4.1.ReLU_relu - -

58_avgpool - -

59_fc 513 0.512

----------------------------------------------------------------------------------------------------

Params (K): 11689.511999999999

Mult-Adds (M): 1814.0781440000007

====================================================================================================

| Layer | Kernel Shape | Output Shape | Params (K) | Mult-Adds (M) |

|---|---|---|---|---|

| 0_conv1 | [3, 64, 7, 7] | [1, 64, 112, 112] | 9.408 | 118.013952 |

| 1_bn1 | [64] | [1, 64, 112, 112] | 0.128 | 0.000064 |

| 2_relu | - | [1, 64, 112, 112] | NaN | NaN |

| 3_maxpool | - | [1, 64, 56, 56] | NaN | NaN |

| 4_layer1.0.Conv2d_conv1 | [64, 64, 3, 3] | [1, 64, 56, 56] | 36.864 | 115.605504 |

| 5_layer1.0.BatchNorm2d_bn1 | [64] | [1, 64, 56, 56] | 0.128 | 0.000064 |

| 6_layer1.0.ReLU_relu | - | [1, 64, 56, 56] | NaN | NaN |

| 7_layer1.0.Conv2d_conv2 | [64, 64, 3, 3] | [1, 64, 56, 56] | 36.864 | 115.605504 |

| 8_layer1.0.BatchNorm2d_bn2 | [64] | [1, 64, 56, 56] | 0.128 | 0.000064 |

| 9_layer1.0.ReLU_relu | - | [1, 64, 56, 56] | NaN | NaN |

| 10_layer1.1.Conv2d_conv1 | [64, 64, 3, 3] | [1, 64, 56, 56] | 36.864 | 115.605504 |

| 11_layer1.1.BatchNorm2d_bn1 | [64] | [1, 64, 56, 56] | 0.128 | 0.000064 |

| 12_layer1.1.ReLU_relu | - | [1, 64, 56, 56] | NaN | NaN |

| 13_layer1.1.Conv2d_conv2 | [64, 64, 3, 3] | [1, 64, 56, 56] | 36.864 | 115.605504 |

| 14_layer1.1.BatchNorm2d_bn2 | [64] | [1, 64, 56, 56] | 0.128 | 0.000064 |

| 15_layer1.1.ReLU_relu | - | [1, 64, 56, 56] | NaN | NaN |

| 16_layer2.0.Conv2d_conv1 | [64, 128, 3, 3] | [1, 128, 28, 28] | 73.728 | 57.802752 |

| 17_layer2.0.BatchNorm2d_bn1 | [128] | [1, 128, 28, 28] | 0.256 | 0.000128 |

| 18_layer2.0.ReLU_relu | - | [1, 128, 28, 28] | NaN | NaN |

| 19_layer2.0.Conv2d_conv2 | [128, 128, 3, 3] | [1, 128, 28, 28] | 147.456 | 115.605504 |

| 20_layer2.0.BatchNorm2d_bn2 | [128] | [1, 128, 28, 28] | 0.256 | 0.000128 |

| 21_layer2.0.downsample.Conv2d_0 | [64, 128, 1, 1] | [1, 128, 28, 28] | 8.192 | 6.422528 |

| 22_layer2.0.downsample.BatchNorm2d_1 | [128] | [1, 128, 28, 28] | 0.256 | 0.000128 |

| 23_layer2.0.ReLU_relu | - | [1, 128, 28, 28] | NaN | NaN |

| 24_layer2.1.Conv2d_conv1 | [128, 128, 3, 3] | [1, 128, 28, 28] | 147.456 | 115.605504 |

| 25_layer2.1.BatchNorm2d_bn1 | [128] | [1, 128, 28, 28] | 0.256 | 0.000128 |

| 26_layer2.1.ReLU_relu | - | [1, 128, 28, 28] | NaN | NaN |

| 27_layer2.1.Conv2d_conv2 | [128, 128, 3, 3] | [1, 128, 28, 28] | 147.456 | 115.605504 |

| 28_layer2.1.BatchNorm2d_bn2 | [128] | [1, 128, 28, 28] | 0.256 | 0.000128 |

| 29_layer2.1.ReLU_relu | - | [1, 128, 28, 28] | NaN | NaN |

| 30_layer3.0.Conv2d_conv1 | [128, 256, 3, 3] | [1, 256, 14, 14] | 294.912 | 57.802752 |

| 31_layer3.0.BatchNorm2d_bn1 | [256] | [1, 256, 14, 14] | 0.512 | 0.000256 |

| 32_layer3.0.ReLU_relu | - | [1, 256, 14, 14] | NaN | NaN |

| 33_layer3.0.Conv2d_conv2 | [256, 256, 3, 3] | [1, 256, 14, 14] | 589.824 | 115.605504 |

| 34_layer3.0.BatchNorm2d_bn2 | [256] | [1, 256, 14, 14] | 0.512 | 0.000256 |

| 35_layer3.0.downsample.Conv2d_0 | [128, 256, 1, 1] | [1, 256, 14, 14] | 32.768 | 6.422528 |

| 36_layer3.0.downsample.BatchNorm2d_1 | [256] | [1, 256, 14, 14] | 0.512 | 0.000256 |

| 37_layer3.0.ReLU_relu | - | [1, 256, 14, 14] | NaN | NaN |

| 38_layer3.1.Conv2d_conv1 | [256, 256, 3, 3] | [1, 256, 14, 14] | 589.824 | 115.605504 |

| 39_layer3.1.BatchNorm2d_bn1 | [256] | [1, 256, 14, 14] | 0.512 | 0.000256 |

| 40_layer3.1.ReLU_relu | - | [1, 256, 14, 14] | NaN | NaN |

| 41_layer3.1.Conv2d_conv2 | [256, 256, 3, 3] | [1, 256, 14, 14] | 589.824 | 115.605504 |

| 42_layer3.1.BatchNorm2d_bn2 | [256] | [1, 256, 14, 14] | 0.512 | 0.000256 |

| 43_layer3.1.ReLU_relu | - | [1, 256, 14, 14] | NaN | NaN |

| 44_layer4.0.Conv2d_conv1 | [256, 512, 3, 3] | [1, 512, 7, 7] | 1179.648 | 57.802752 |

| 45_layer4.0.BatchNorm2d_bn1 | [512] | [1, 512, 7, 7] | 1.024 | 0.000512 |

| 46_layer4.0.ReLU_relu | - | [1, 512, 7, 7] | NaN | NaN |

| 47_layer4.0.Conv2d_conv2 | [512, 512, 3, 3] | [1, 512, 7, 7] | 2359.296 | 115.605504 |

| 48_layer4.0.BatchNorm2d_bn2 | [512] | [1, 512, 7, 7] | 1.024 | 0.000512 |

| 49_layer4.0.downsample.Conv2d_0 | [256, 512, 1, 1] | [1, 512, 7, 7] | 131.072 | 6.422528 |

| 50_layer4.0.downsample.BatchNorm2d_1 | [512] | [1, 512, 7, 7] | 1.024 | 0.000512 |

| 51_layer4.0.ReLU_relu | - | [1, 512, 7, 7] | NaN | NaN |

| 52_layer4.1.Conv2d_conv1 | [512, 512, 3, 3] | [1, 512, 7, 7] | 2359.296 | 115.605504 |

| 53_layer4.1.BatchNorm2d_bn1 | [512] | [1, 512, 7, 7] | 1.024 | 0.000512 |

| 54_layer4.1.ReLU_relu | - | [1, 512, 7, 7] | NaN | NaN |

| 55_layer4.1.Conv2d_conv2 | [512, 512, 3, 3] | [1, 512, 7, 7] | 2359.296 | 115.605504 |

| 56_layer4.1.BatchNorm2d_bn2 | [512] | [1, 512, 7, 7] | 1.024 | 0.000512 |

| 57_layer4.1.ReLU_relu | - | [1, 512, 7, 7] | NaN | NaN |

| 58_avgpool | - | [1, 512, 1, 1] | NaN | NaN |

| 59_fc | [512, 1000] | [1, 1000] | 513.000 | 0.512000 |

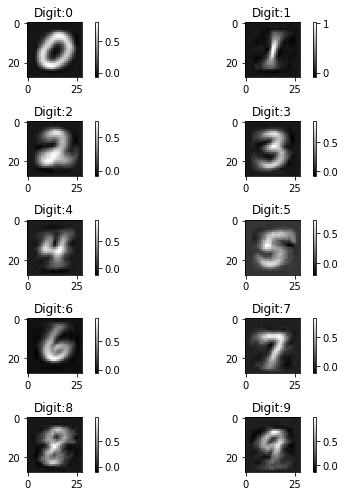
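
The Params and Mult-Adds columns can be reproduced by hand. The sketch below is only an illustration, not torchsummaryX's own implementation: the helper names `conv_params`, `conv_mult_adds` and `linear_params` are made up here, and it assumes bias-free convolutions with one multiply-add counted per weight per output position.

```python
def conv_params(c_in, c_out, k):
    """Number of weights in a bias-free k x k convolution."""
    return c_in * c_out * k * k


def conv_mult_adds(c_in, c_out, k, h_out, w_out):
    """One multiply-add per weight for every output position."""
    return conv_params(c_in, c_out, k) * h_out * w_out


def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


conv1_params = conv_params(3, 64, 7)                # row 0_conv1
conv1_madds = conv_mult_adds(3, 64, 7, 112, 112)    # row 0_conv1
layer1_madds = conv_mult_adds(64, 64, 3, 56, 56)    # row 4_layer1.0.Conv2d_conv1
fc_params = linear_params(512, 1000)                # row 59_fc

# Printed in the same units as the table: thousands of params, millions of mult-adds
print(conv1_params / 1e3, conv1_madds / 1e6, layer1_madds / 1e6, fc_params / 1e3)
```

Under these assumptions the integers agree with the table rows for `0_conv1`, `4_layer1.0.Conv2d_conv1` and `59_fc`.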

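Class Prototype Generation

The idea is very simple. Normally you hand the neural network a picture of an elephant and ask it what animal it is. Once the network is trained, you turn the question around: tell the network that an image shows an elephant and ask it what that image looks like.

Creating the model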

from torch import nn


class MNIST_DNN(nn.Module):
    def __init__(self):
        super(MNIST_DNN, self).__init__()
        self.dense1 = nn.Linear(784, 512)
        self.dense2 = nn.Linear(512, 100)
        self.dense3 = nn.Linear(100, 10)

    def forward(self, x):
        x = self.dense1(x)
        x = self.dense2(x)
        x = self.dense3(x)
        return x

Loading the trained model

The trained model reaches 93% accuracy on the test set.

model = MNIST_DNN()

model.load_state_dict(torch.load('./class_prototype/MNIST_CNN.pkl'))

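Generating the images

To generate a prototype image for each digit from 0 to 9, we proceed as follows:

- Initialize the input with random noise; here we use a standard normal distribution.

- While backpropagating the loss, freeze the model's parameters and update only the input.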

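To pull the generated images closer to the real data distribution, we compute the per-pixel mean of the training images of each class, denoted $\bar{x}$, and write the loss function as:

$$\mathcal{L} = \mathrm{CrossEntropy}(f(x), y) + \lambda \, \mathrm{MSE}(x, \bar{x})$$

where $f$ is the pretrained model, $x$ is the input, $y$ is the corresponding label, and $\lambda$ is the regularization weight (`lmda` in the code).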
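
To make the procedure concrete, here is a minimal NumPy sketch of the same idea on a toy problem. Everything in it is an assumption for illustration: the frozen "model" is a random linear softmax classifier (`W`, `b`), `class_means` stands in for the per-class pixel means, and the gradient with respect to the input is written out by hand instead of using autograd.

```python
import numpy as np

rng = np.random.default_rng(0)
n_classes, n_features = 3, 4
W = rng.normal(size=(n_classes, n_features))              # frozen toy model weights
b = np.zeros(n_classes)                                   # frozen toy model biases
class_means = rng.uniform(size=(n_classes, n_features))   # stand-in for per-class pixel means
lam = 0.1                                                 # regularization weight


def loss_and_grad(x, y):
    """Return CE(softmax(x W^T + b), y) + lam * MSE(x, class_means) and its gradient w.r.t. x."""
    logits = x @ W.T + b
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    n = x.shape[0]
    ce = -np.log(probs[np.arange(n), y]).mean()
    mse = np.mean((x - class_means) ** 2)
    d_logits = probs.copy()
    d_logits[np.arange(n), y] -= 1.0
    grad = d_logits @ W / n + lam * 2.0 * (x - class_means) / x.size
    return ce + lam * mse, grad


x = rng.normal(size=(n_classes, n_features))   # one prototype per class, random start
y = np.arange(n_classes)                       # target labels 0, 1, 2

initial_loss, _ = loss_and_grad(x, y)
for _ in range(2000):
    _, grad = loss_and_grad(x, y)
    x -= 0.1 * grad                            # only the input moves; W and b stay fixed
final_loss, _ = loss_and_grad(x, y)
predicted = (x @ W.T + b).argmax(axis=1)
print(initial_loss, final_loss, predicted)
```

The design mirrors the post's setup: the parameters are never touched, and the regularizer keeps each prototype near the mean of its class.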

import torch
from torch.autograd import Variable
from torch import nn, optim
from torchvision import datasets, transforms
import matplotlib.pyplot as plt
import numpy as np

# In the original project these two helpers live in utils.utils
# (set_parameters_static, get_img_means); they are shown inline here.

lmda = 0.1


# Freeze the model's parameters
def set_parameters_static(model):
    for para in list(model.parameters()):
        para.requires_grad = False
    return model


# Compute the per-class image means; returns a 10 x 784 tensor
def get_img_means(path):
    train_mnist = datasets.MNIST(path, train=True, download=True, transform=transforms.ToTensor())
    mnist_imgs = [data[0].view(1, 784).numpy() for data in train_mnist]
    mnist_labels = [data[1] for data in train_mnist]
    # image size: 28*28
    imgs_means = np.zeros((10, 784))
    for i in range(10):
        imgs = []
        for index, label in enumerate(mnist_labels):
            if label == i:
                imgs.append(mnist_imgs[index])
        imgs_means[i, :] = np.mean(imgs, axis=0)
    return torch.from_numpy(imgs_means).type(torch.FloatTensor)


# Not used below; the optimization runs at module level
class Prototype(nn.Module):
    def __init__(self):
        super(Prototype, self).__init__()


criterion = nn.CrossEntropyLoss()
regular = nn.MSELoss()

# Freeze the parameters so that they are never updated
model = set_parameters_static(model)

# Initialize the images and the labels 0-9
# Note: the text describes standard-normal initialization (torch.randn(10, 784));
# this listing starts from zeros.
x_prototype = torch.zeros(10, 784)
y_prototype = torch.linspace(0, 9, 10)
y_prototype = y_prototype.type(torch.LongTensor)

# Let x_prototype receive gradient updates
x_prototype = Variable(x_prototype, requires_grad=True)
y_prototype = Variable(y_prototype)
imgs_means = get_img_means('./dataset/mnist')
imgs_means = Variable(imgs_means)

# Only the input prototypes are handed to the optimizer
optimizer = optim.Adam([x_prototype], lr=0.01)

print('begin training...')
for i in range(10000):
    optimizer.zero_grad()
    logits_prototype = model(x_prototype)
    cost_protype = criterion(logits_prototype, y_prototype) + lmda * regular(x_prototype, imgs_means)
    cost_protype.backward()
    optimizer.step()
    if i % 500 == 0:
        print('cost_protype={:.6f}'.format(cost_protype.item()))

begin training...

cost_protype=3.027934

cost_protype=0.004735

cost_protype=0.003305

cost_protype=0.002228

cost_protype=0.001450

cost_protype=0.000912

cost_protype=0.000562

cost_protype=0.000353

cost_protype=0.000238

cost_protype=0.000181

cost_protype=0.000156

cost_protype=0.000147

cost_protype=0.000143

cost_protype=0.000142

cost_protype=0.000142

cost_protype=0.000142

cost_protype=0.000142

cost_protype=0.000142

cost_protype=0.000142

cost_protype=0.000142

Once the loss stops decreasing, plot the prototype images.

x_prototype = x_prototype.data.numpy()
# cmap selects the colormap; interpolation selects the interpolation method
assert x_prototype.shape == (10, 784)
plt.figure(figsize=(7, 7))
for i in range(5):
    left = x_prototype[2 * i, :].reshape(28, 28)
    plt.subplot(5, 2, 2 * i + 1)
    plt.imshow(left, cmap='gray', interpolation='none')
    plt.title('Digit:{}'.format(2 * i))
    plt.colorbar()
    right = x_prototype[2 * i + 1, :].reshape(28, 28)
    plt.subplot(5, 2, 2 * i + 2)
    plt.imshow(right, cmap='gray', interpolation='none')
    plt.title('Digit:{}'.format(2 * i + 1))
    plt.colorbar()
plt.tight_layout()
plt.show()

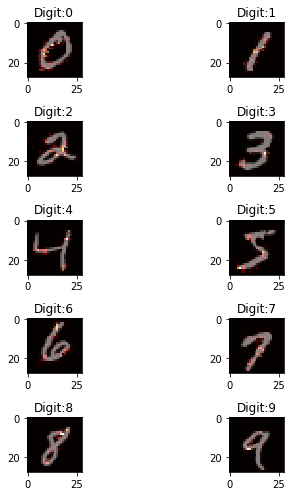

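Gradient-based Methods

The principle behind gradient-based methods is simple: compute the gradient of the output with respect to the input. The larger the gradient, the larger the contribution.

Saliency Map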

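Given an input image $I_0$ and its class $c$, and assuming the output of the classification network for that class can be written as a score function $S_c(I)$, we can rank the pixels of $I_0$ by their influence on $S_c(I_0)$, that is, compute how much each pixel of the input image contributes to the final result.

For class $c$, consider the following linear model:

$$S_c(I) = w_c^{T} I + b_c$$

In this example, the larger the magnitude of the weight attached to a pixel, the more strongly that pixel affects the result.

In a deep neural network, $S_c(I)$ is a highly nonlinear function of $I$, so no formula this simple is available, but $S_c(I)$ can be approximated near $I_0$ by a first-order Taylor expansion:

$$S_c(I) \approx w^{T} I + b$$

where:

$$w = \left.\frac{\partial S_c}{\partial I}\right|_{I_0}$$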
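
As a small worked illustration of the expansion, the sketch below uses a toy nonlinear score function in place of a real network and estimates the gradient by central differences. The function `score`, its coefficients and the input `x0` are assumptions chosen for the example; with a real model the gradient would come from backpropagation, as in the code further down.

```python
import math


def score(x):
    """Toy nonlinear class score S_c(x); a stand-in for a network's logit."""
    z = 0.5 * x[0] - 2.0 * x[1] + 0.1 * x[2] + 1.0 * x[3]
    return math.tanh(z)


def numerical_gradient(f, x, eps=1e-6):
    """Central-difference estimate of df/dx at x."""
    grad = []
    for i in range(len(x)):
        hi = list(x)
        lo = list(x)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad


x0 = [0.2, 0.1, 0.4, -0.3]                   # the "image", flattened to four pixels
w = numerical_gradient(score, x0)            # w = dS_c/dx evaluated at x0
saliency = [abs(g) for g in w]               # per-pixel saliency
ranking = sorted(range(len(x0)), key=lambda i: saliency[i], reverse=True)

# First-order Taylor approximation of the score at a nearby point x1
delta = [0.01, -0.02, 0.03, 0.01]
x1 = [a + d for a, d in zip(x0, delta)]
taylor = score(x0) + sum(g * d for g, d in zip(w, delta))
print(ranking, score(x1), taylor)
```

The ranking orders the pixels by the magnitude of their gradient, and the Taylor estimate stays close to the true score as long as `x1` is near `x0`.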

Defining the network

import torch
from torch.autograd import Variable
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
from torch import nn, optim


class MNIST_CNN(nn.Module):
    def __init__(self):
        super(MNIST_CNN, self).__init__()
        self.layer1 = nn.Sequential(nn.Conv2d(1, 32, 3, stride=1, padding=1), nn.ReLU(True),
                                    nn.MaxPool2d(2, 2, padding=1), nn.Dropout(p=0.7))
        self.layer2 = nn.Sequential(nn.Conv2d(32, 64, 3, stride=1, padding=1), nn.ReLU(True),
                                    nn.MaxPool2d(2, 2, padding=1), nn.Dropout(p=0.7))
        self.layer3 = nn.Sequential(nn.Conv2d(64, 128, 3, stride=1, padding=1), nn.ReLU(True),
                                    nn.MaxPool2d(2, 2, padding=1), nn.Dropout(p=0.7))
        self.layer4 = nn.Sequential(nn.Linear(128 * 5 * 5, 625), nn.ReLU(True),
                                    nn.Dropout(0.5))
        self.layer5 = nn.Sequential(nn.Linear(625, 10))

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = x.view(-1, 128 * 5 * 5)
        x = self.layer4(x)
        x = self.layer5(x)
        return x

Computing the saliency map

We use the trained parameters, which reach 99% accuracy.

import torch
from torch.autograd import Variable
from sensitivity_analysis.model import MNIST_CNN
from utils.utils import get_all_digit_imgs
import numpy as np
import matplotlib.pyplot as plt

# The model was trained and saved on a GPU; here the weights are loaded on the CPU
is_training_on_gpu = False
model = MNIST_CNN()
if is_training_on_gpu:
    weights = torch.load('sensitivity_analysis/cnn_weights.pkl')
else:
    # Map all tensors to the CPU
    weights = torch.load('sensitivity_analysis/cnn_weights.pkl', map_location=lambda storage, loc: storage)
model.load_state_dict(weights)
# Evaluation mode disables the Dropout layers, so the gradients are deterministic
model.eval()

imgs = get_all_digit_imgs('./dataset/mnist')
print(imgs.shape)
imgs = Variable(imgs, requires_grad=True)
logits = model(imgs)
# Backpropagate with a weight of 1 for every logit
logits.backward(torch.ones(logits.size()))
gradients = imgs.grad.data.numpy()

torch.Size([10, 1, 28, 28])

Now, we can show the results using plt package.

assert gradients.shape == (10, 1, 28, 28)
gradients = np.squeeze(np.square(gradients), 1).reshape(10, 784)
sample_imgs = imgs.data.numpy()
plt.figure(figsize=(7, 7))
for i in range(5):
    plt.subplot(5, 2, 2 * i + 1)
    # Draw the original image with the gray colormap and overlay the gradient map with the hot colormap
    plt.imshow(np.reshape(sample_imgs[2 * i, :], (28, 28)), cmap='gray')
    plt.imshow(np.reshape(gradients[2 * i, :], (28, 28)), cmap='hot', alpha=0.5)
    plt.title('Digit:{}'.format(2 * i))
    plt.subplot(5, 2, 2 * i + 2)
    plt.imshow(np.reshape(sample_imgs[2 * i + 1, :], (28, 28)), cmap='gray')
    plt.imshow(np.reshape(gradients[2 * i + 1, :], (28, 28)), cmap='hot', alpha=0.5)
    plt.title('Digit:{}'.format(2 * i + 1))
plt.tight_layout()
plt.show()

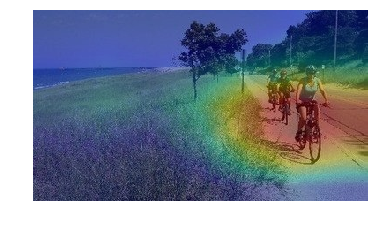

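Feature Activation Map

Class Activation Map (CAM)

The idea of CAM is to replace the fully connected layers with GAP (Global Average Pooling). GAP can be viewed as a special average-pooling layer whose pool size equals the size of the whole feature map. Put plainly, it takes the mean of all pixels in each feature map.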
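
As a minimal sketch of what GAP computes, the pure-Python function below averages every feature map down to a single number. The function name `global_average_pool` and the nested-list layout (channels, then rows, then columns) are assumptions for illustration; in PyTorch the same operation is typically done by an average-pooling layer.

```python
def global_average_pool(feature_maps):
    """feature_maps: list of C maps, each an H x W nested list. Returns a list of C means."""
    pooled = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Two channels of size 2 x 2
maps_2x2 = [[[1.0, 2.0], [3.0, 4.0]],
            [[0.0, 0.0], [0.0, 8.0]]]
# Two channels of size 3 x 1: a different spatial size, same number of outputs
maps_3x1 = [[[3.0], [6.0], [9.0]],
            [[1.0], [1.0], [1.0]]]

out_a = global_average_pool(maps_2x2)
out_b = global_average_pool(maps_3x1)
print(out_a, out_b)
```

Both calls return one value per channel regardless of the spatial size of the maps.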

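With the fully connected layers gone, the input no longer needs a fixed size, so inputs of arbitrary size are supported. GAP also makes fuller use of spatial information, and because it drops the many parameters of the fully connected layers it is more robust and less prone to overfitting. Another important point is that the last mlpconv layer (that is, the last convolutional layer) is forced to produce as many feature maps as there are target classes; these pass through GAP and then a softmax layer to give the result.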
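
The parameter savings can be put into numbers. The sketch below compares a head that flattens the feature maps and feeds them through three fully connected layers with a head that applies GAP followed by one linear layer. The sizes (512 channels, hidden width 4096, 1000 classes) and the helper names are assumptions chosen for illustration, not taken from the text.

```python
def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def flatten_head_params(channels, h, w, hidden, n_classes):
    """Flatten -> FC(hidden) -> FC(hidden) -> FC(n_classes)."""
    n_in = channels * h * w
    return (linear_params(n_in, hidden)
            + linear_params(hidden, hidden)
            + linear_params(hidden, n_classes))


def gap_head_params(channels, n_classes):
    """GAP has no parameters, so only the final linear layer counts."""
    return linear_params(channels, n_classes)


fc_head_7 = flatten_head_params(512, 7, 7, 4096, 1000)     # 7 x 7 feature maps
fc_head_10 = flatten_head_params(512, 10, 10, 4096, 1000)  # larger input, larger first FC layer
gap_head = gap_head_params(512, 1000)                      # independent of the spatial size
print(fc_head_7, fc_head_10, gap_head)
```

The flatten-based head changes size with the feature-map resolution, which is why it needs a fixed input size, while the GAP head depends only on the channel count.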

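To make the result more reliable, only the 20 largest weights for the class are used.

Note that the model used here is resnet18, whose last two layers happen to be avgpool and fc, so it is essentially already a CAM network.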
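
The reason a GAP-plus-fc head already yields a CAM can be checked numerically. In the NumPy sketch below, random arrays stand in for the last convolutional output and the fc layer (the shapes and names are assumptions); the spatial mean of the class activation map plus the bias reproduces the class logit.

```python
import numpy as np

rng = np.random.default_rng(0)
n_channels, h, w, n_classes = 8, 7, 7, 5
features = rng.normal(size=(n_channels, h, w))        # stand-in for the last conv output
fc_weight = rng.normal(size=(n_classes, n_channels))  # stand-in for the fc weights
fc_bias = rng.normal(size=n_classes)

# Forward pass of the head: GAP, then the linear layer
pooled = features.mean(axis=(1, 2))
logits = fc_weight @ pooled + fc_bias

# CAM for one class: weight each feature map by that class's fc weight, sum over channels
class_idx = int(logits.argmax())
cam = np.tensordot(fc_weight[class_idx], features, axes=1)    # shape (h, w)

# Top-k variant, as described in the text: keep only the k largest weights
k = 3
top = np.argsort(-fc_weight[class_idx])[:k]
cam_topk = np.tensordot(fc_weight[class_idx, top], features[top], axes=1)

print(cam.mean() + fc_bias[class_idx], logits[class_idx])
```

Because averaging and the weighted sum commute, the full CAM is the logit spread out over spatial positions; the top-k variant trades that exact identity for a map built from the strongest channels only.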

# simple implementation of CAM in PyTorch for the networks such as ResNet, DenseNet, SqueezeNet, Inception
import io
import requests
import matplotlib.pyplot as plt
from PIL import Image
from torchvision import models, transforms
from torch.autograd import Variable
from torch.nn import functional as F
import numpy as np
import cv2

# Input image
LABELS_URL = 'https://s3.amazonaws.com/outcome-blog/imagenet/labels.json'
IMG_URL = 'http://media.mlive.com/news_impact/photo/9933031-large.jpg'

# Load one of the models and set finalconv_name to the name of its last convolutional layer
model_id = 2
if model_id == 1:
    net = models.squeezenet1_1(pretrained=True)
    finalconv_name = 'features'
elif model_id == 2:
    net = models.resnet18(pretrained=True)
    finalconv_name = 'layer4'
elif model_id == 3:
    net = models.densenet161(pretrained=True)
    finalconv_name = 'features'
net.eval()

features_blobs = []


def hook_feature(module, input, output):
    features_blobs.append(output.data.cpu().numpy())


# register_forward_hook captures a layer's output without changing the model structure;
# a backward hook (register_backward_hook, or register_full_backward_hook in recent
# PyTorch versions) can capture gradients in the same way
net._modules.get(finalconv_name).register_forward_hook(hook_feature)

# Get the softmax (fc) weights
params = list(net.parameters())
weight_softmax = np.squeeze(params[-2].data.numpy())

def returnCAM(feature_conv, weight_softmax, class_idx):
    # Generate class activation maps upsampled to 256x256
    size_upsample = (256, 256)
    bz, nc, h, w = feature_conv.shape
    output_cam = []
    for idx in class_idx:
        # fc weights of this class, one per channel
        class_weight = weight_softmax[idx]
        # keep only the 20 channels with the largest weights
        lindex = np.argsort(-class_weight)[0:20]
        lastweight = class_weight[lindex]
        top_features = feature_conv[0, lindex, :, :]
        print(lastweight.shape)
        print(top_features.shape)
        cam = lastweight.dot(top_features.reshape((20, h * w)))
        cam = cam.reshape(h, w)
        # rescale cam to the range [0, 1]
        cam = cam - np.min(cam)
        cam_img = cam / np.max(cam)
        # rescale cam to the range [0, 255]
        cam_img = np.uint8(255 * cam_img)
        # upsample to size_upsample (the caller resizes to the original image size)
        output_cam.append(cv2.resize(cam_img, size_upsample))
    return output_cam

normalize = transforms.Normalize(
    mean=[0.485, 0.456, 0.406],
    std=[0.229, 0.224, 0.225]
)
preprocess = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    normalize
])

response = requests.get(IMG_URL)
img_pil = Image.open(io.BytesIO(response.content))
img_pil.save('./cam_based/test.jpg')
img_tensor = preprocess(img_pil)
img_variable = Variable(img_tensor.unsqueeze(0))
logit = net(img_variable)

# download the imagenet category list
classes = {int(key): value for (key, value)
           in requests.get(LABELS_URL).json().items()}
h_x = F.softmax(logit, dim=1).data.squeeze()
probs, idx = h_x.sort(0, True)

# print the predicted class
print('predicted classes: {}. probability:{:.3f}'.format(classes[idx[0].item()], probs[0]))

img = cv2.imread('./cam_based/test.jpg')
height, width, _ = img.shape
CAMs = returnCAM(features_blobs[0], weight_softmax, [idx[0].item()])
heatmap = cv2.applyColorMap(cv2.resize(CAMs[0], (width, height)), cv2.COLORMAP_JET)
result = heatmap * 0.3 + img * 0.5
cv2.imwrite('./cam_based/CAM.jpg', result)
plt.imshow(Image.open('./cam_based/CAM.jpg'))
plt.axis('off')
plt.show()

predicted classes: mountain bike, all-terrain bike, off-roader. probability:0.416

(20,)

(20, 7, 7)

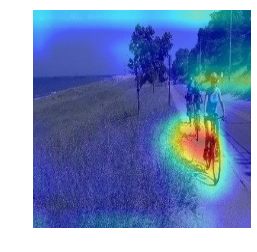

Gradient-weighted Class Activation Mapping (Grad-CAM)

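As seen above, CAM already explains predictions quite well, but it has a fatal flaw: it requires changing the structure of the original model, which means the model has to be retrained, and this greatly limits where it can be used. If the model is already deployed, or training is very expensive, retraining it just for this is practically out of the question. Grad-CAM was introduced to solve this problem.

The basic idea of Grad-CAM is the same as that of CAM: obtain a weight for each feature map and then take a weighted sum. The main difference lies in how the weights $w_k^c$ are obtained. CAM replaces the fully connected layers with a GAP layer and retrains to get the weights, whereas Grad-CAM takes a different route and computes the weights as the global average of the gradients. In fact, it can be shown mathematically that for a network that already has the CAM structure (GAP followed by a single linear layer), the weights computed by Grad-CAM agree with the CAM weights up to a constant factor.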
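
The relationship between the two sets of weights can be checked on a toy head. The NumPy sketch below assumes a GAP layer followed by one linear layer, with random arrays standing in for the feature maps and fc weights (all names and shapes are illustrative). The gradients are estimated by finite differences rather than backpropagation, purely to keep the example self-contained.

```python
import numpy as np

rng = np.random.default_rng(1)
n_channels, h, w, n_classes = 4, 3, 3, 2
features = rng.normal(size=(n_channels, h, w))        # stand-in feature maps A_k
fc_weight = rng.normal(size=(n_classes, n_channels))  # stand-in CAM weights w_k^c
fc_bias = rng.normal(size=n_classes)


def class_score(feats, c):
    """Logit of class c for a GAP + linear head."""
    return fc_weight[c] @ feats.mean(axis=(1, 2)) + fc_bias[c]


def numerical_gradient(feats, c, eps=1e-5):
    """Central-difference estimate of d(score_c) / d(feats)."""
    grad = np.zeros_like(feats)
    for idx in np.ndindex(feats.shape):
        up = feats.copy()
        down = feats.copy()
        up[idx] += eps
        down[idx] -= eps
        grad[idx] = (class_score(up, c) - class_score(down, c)) / (2 * eps)
    return grad


c = 0
grads = numerical_gradient(features, c)
gradcam_weights = grads.mean(axis=(1, 2))    # global average of the gradients
cam_weights = fc_weight[c]

# Grad-CAM map: weighted sum of the feature maps followed by ReLU
gradcam_map = np.maximum(np.tensordot(gradcam_weights, features, axes=1), 0)

print(gradcam_weights * h * w, cam_weights)
```

For this head the Grad-CAM weights equal the fc weights divided by the number of spatial positions, so the two maps differ only by a constant scale before normalization.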

import matplotlib.pyplot as plt
import torch
from PIL import Image
from torch.autograd import Variable
from torch.autograd import Function
from torchvision import models
from torchvision import utils
import cv2
import sys
import numpy as np


class FeatureExtractor():
    """
    Runs the feature layers, collects the activations of the target layers,
    and registers hooks that record their gradients.
    """

    def __init__(self, model, target_layers):
        self.model = model
        self.target_layers = target_layers
        self.gradients = []

    def save_gradient(self, grad):
        self.gradients.append(grad)

    def __call__(self, x):
        outputs = []
        self.gradients = []
        for name, module in self.model._modules.items():
            x = module(x)
            if name in self.target_layers:
                x.register_hook(self.save_gradient)
                outputs += [x]
        return outputs, x


class ModelOutputs():
    """
    Class for making a forward pass, and getting:
    1. The network output.
    2. Activations from intermediate targeted layers.
    3. Gradients from intermediate targeted layers.
    """

    def __init__(self, model, target_layers):
        self.model = model
        self.feature_extractor = FeatureExtractor(self.model.features, target_layers)

    def get_gradients(self):
        return self.feature_extractor.gradients

    def __call__(self, x):
        target_activations, output = self.feature_extractor(x)
        output = output.view(output.size(0), -1)
        output = self.model.classifier(output)
        return target_activations, output

def preprocess_image(img):
    means = [0.485, 0.456, 0.406]
    stds = [0.229, 0.224, 0.225]

    # Reverse the channel order (BGR from OpenCV to RGB)
    preprocessed_img = img.copy()[:, :, ::-1]
    for i in range(3):
        preprocessed_img[:, :, i] = preprocessed_img[:, :, i] - means[i]
        preprocessed_img[:, :, i] = preprocessed_img[:, :, i] / stds[i]
    # Return an array that is contiguous in memory
    preprocessed_img = \
        np.ascontiguousarray(np.transpose(preprocessed_img, (2, 0, 1)))
    preprocessed_img = torch.from_numpy(preprocessed_img)
    preprocessed_img.unsqueeze_(0)
    input = Variable(preprocessed_img, requires_grad=True)
    return input


def show_cam_on_image(img, mask):
    heatmap = cv2.applyColorMap(np.uint8(255 * mask), cv2.COLORMAP_JET)
    heatmap = np.float32(heatmap) / 255
    cam = heatmap + np.float32(img)
    cam = cam / np.max(cam)
    cv2.imwrite("./cam_based/grad_cam.jpg", np.uint8(255 * cam))
    plt.imshow(Image.open('./cam_based/grad_cam.jpg'))
    plt.axis('off')
    plt.show()

class GradCam:
    def __init__(self, model, target_layer_names, use_cuda):
        self.model = model
        self.model.eval()
        self.cuda = use_cuda
        if self.cuda:
            self.model = model.cuda()
        self.extractor = ModelOutputs(self.model, target_layer_names)

    def forward(self, input):
        return self.model(input)

    def __call__(self, input, index=None):
        if self.cuda:
            features, output = self.extractor(input.cuda())
        else:
            features, output = self.extractor(input)

        if index is None:
            index = np.argmax(output.cpu().data.numpy())

        # Select the score of the target class with a one-hot mask
        one_hot = np.zeros((1, output.size()[-1]), dtype=np.float32)
        one_hot[0][index] = 1
        one_hot = Variable(torch.from_numpy(one_hot), requires_grad=True)
        if self.cuda:
            one_hot = torch.sum(one_hot.cuda() * output)
        else:
            one_hot = torch.sum(one_hot * output)

        self.model.features.zero_grad()
        self.model.classifier.zero_grad()
        one_hot.backward(retain_graph=True)

        grads_val = self.extractor.get_gradients()[-1].cpu().data.numpy()
        target = features[-1]
        target = target.cpu().data.numpy()[0, :]

        # Average the gradients over the spatial dimensions
        weights = np.mean(grads_val, axis=(2, 3))[0, :]
        cam = np.zeros(target.shape[1:], dtype=np.float32)
        for i, w in enumerate(weights):
            cam += w * target[i, :, :]

        cam = np.maximum(cam, 0)
        cam = cv2.resize(cam, (224, 224))
        cam = cam - np.min(cam)
        cam = cam / np.max(cam)
        return cam

if __name__ == '__main__':
    image_path = './cam_based/test.jpg'

    # Can work with any model, but it assumes that the model has a
    # feature method, and a classifier method,
    # as in the VGG models in torchvision.
    grad_cam = GradCam(model=models.vgg19(pretrained=True), target_layer_names=["35"], use_cuda=False)

    img = cv2.imread(image_path, 1)
    img = np.float32(cv2.resize(img, (224, 224))) / 255
    input = preprocess_image(img)

    # If None, returns the map for the highest scoring category.
    # Otherwise, targets the requested index.
    target_index = None
    mask = grad_cam(input, target_index)
    show_cam_on_image(img, mask)

Downloading: "https://download.pytorch.org/models/vgg19-dcbb9e9d.pth" to C:\Users\13371/.torch\models\vgg19-dcbb9e9d.pth

574673361it [00:44, 12840048.61it/s]

Source: https://blog.csdn.net/jining11/article/details/100827371