Lab requirements

- JDK: install and configure version 1.8 or later

- Scala: install and configure Scala 2.11

- IntelliJ IDEA: download the latest version

Reference links:

Spark Development Environment | Spark Developer Guide https://taoistwar.gitbooks.io/spark-developer-guide/spark_base/spark_dev_environment.html

Developing Spark Applications with Maven in IDEA https://blog.csdn.net/yu0_zhang0/article/details/80112846

Configuring a Spark Application Development and Source-Reading Environment with IntelliJ IDEA https://blog.tomgou.xyz/shi-yong-intellij-ideapei-zhi-sparkying-yong-kai-fa-huan-jing-ji-yuan-ma-yue-du-huan-jing.html

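Importing an existing project into IDEA:

The welcome screen offers Import Project. If a project is already open, use these steps:

1. File--->Close Project.

2. On the welcome screen, click Import Project.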

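I. How Spark jobs are developed in industry

1. Spark development and testing

- Develop in IDEA using Spark local mode (jobs cannot be submitted to a remote cluster this way)

- Analyze interactively with Spark Shell (it can connect to a remote cluster)

2. Running Spark in production

- Build an assembly jar

- Submit it with bin/spark-submit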
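As a rough illustration of the two development modes listed above, the commands below start `spark-shell` in local mode and against YARN. This is a sketch rather than part of the original lab: it assumes `spark-shell` is on the PATH and that `HADOOP_CONF_DIR` points at the cluster configuration. The `run` helper and the `DRY_RUN` variable are hypothetical conveniences; by default they only print each command.

```shell
# Print each command; execute it only when DRY_RUN=0 (hypothetical helper).
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; fi
}

# Development: local mode with two worker threads, no cluster needed.
dev_shell() {
  run spark-shell --master "local[2]"
}

# Interactive analysis against a YARN cluster (assumes HADOOP_CONF_DIR is set).
cluster_shell() {
  run spark-shell --master yarn --deploy-mode client
}

dev_shell
cluster_shell
```

Setting `DRY_RUN=0` before calling either function launches the shell for real.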

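II. Setting up a Spark development environment from an existing project

1. Configure the JDK, Scala, and IDEA

1) Download the JDK

https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Choose the version you need and download it.

2) Download Scala

https://www.scala-lang.org/download/2.11.12.html

Choose the version you need and download it.

3) Download IDEA

Download the latest version of IDEA.

4) Install the IDEA plugins

In IDEA, search for and install the Scala plugin and the Maven Integration plugin:

File--->Settings--->type Plugins in the search box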
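After installation, a quick terminal check can confirm that the tools are reachable. The sketch below assumes the JDK, Scala, and Maven were each added to the PATH; `check_tool` is a hypothetical helper name, not something the tools provide.

```shell
# Report whether a command is on the PATH; returns non-zero when it is missing.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found at $(command -v "$1")"
  else
    echo "$1: NOT FOUND on PATH"
    return 1
  fi
}

for tool in java scala mvn; do
  check_tool "$tool" || true
done

# With the tools installed, their versions can be printed with:
#   java -version
#   scala -version
#   mvn -v
```

For this lab the reported versions should be JDK 1.8 or later and Scala 2.11.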

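2. Modify the project template

Open the existing project template. To create a project directly in IDEA instead, see the next section (III. Creating a project directly in IDEA).

Update the dependencies in pom.xml as needed. To have Maven dependencies imported automatically, see https://blog.csdn.net/Gnd15732625435/article/details/81062381

Then start developing.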

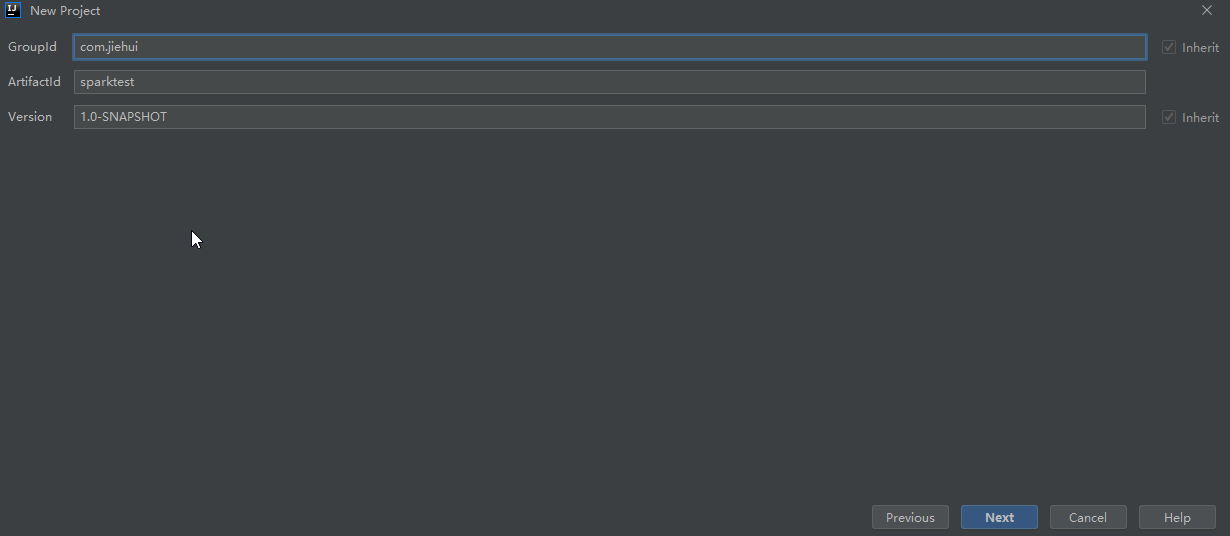

III. Creating a project directly in IDEA

1. Create a new Maven project in IDEA

File--->New--->Project--->Maven

2. Fill in and modify the dependencies

pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>com.jiehui</groupId>
      <artifactId>sparktest</artifactId>
      <version>1.0-SNAPSHOT</version>

      <properties>
        <spark.version>2.4.0</spark.version>
        <fastjson.version>1.2.14</fastjson.version>
        <scala.version>2.11.8</scala.version>
        <java.version>1.8</java.version>
      </properties>

      <repositories>
        <repository>
          <id>nexus-aliyun</id>
          <name>Nexus aliyun</name>
          <url>http://maven.aliyun.com/nexus/content/groups/public</url>
        </repository>
      </repositories>

      <dependencies>
        <!-- Spark modules; the _2.11 suffix must match scala.version -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql_2.11</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming_2.11</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming-kafka-0-8_2.11</artifactId>
          <version>${spark.version}</version>
        </dependency>
        <dependency>
          <groupId>mysql</groupId>
          <artifactId>mysql-connector-java</artifactId>
          <version>5.1.38</version>
        </dependency>
        <dependency>
          <groupId>commons-dbcp</groupId>
          <artifactId>commons-dbcp</artifactId>
          <version>1.4</version>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>3.8.1</version>
          <scope>test</scope>
        </dependency>
        <dependency>
          <groupId>com.alibaba</groupId>
          <artifactId>fastjson</artifactId>
          <version>${fastjson.version}</version>
        </dependency>
        <!-- Scala toolchain libraries -->
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-compiler</artifactId>
          <version>${scala.version}</version>
        </dependency>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-reflect</artifactId>
          <version>${scala.version}</version>
        </dependency>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-library</artifactId>
          <version>${scala.version}</version>
        </dependency>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scala-actors</artifactId>
          <version>${scala.version}</version>
        </dependency>
        <dependency>
          <groupId>org.scala-lang</groupId>
          <artifactId>scalap</artifactId>
          <version>${scala.version}</version>
        </dependency>
      </dependencies>

      <build>
        <plugins>
          <!-- Build a jar-with-dependencies during the package phase -->
          <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>2.3</version>
            <configuration>
              <classifier>dist</classifier>
              <appendAssemblyId>true</appendAssemblyId>
              <descriptorRefs>
                <descriptorRef>jar-with-dependencies</descriptorRef>
              </descriptorRefs>
            </configuration>
            <executions>
              <execution>
                <id>make-assembly</id>
                <phase>package</phase>
                <goals>
                  <goal>single</goal>
                </goals>
              </execution>
            </executions>
          </plugin>
          <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <configuration>
              <source>${java.version}</source>
              <target>${java.version}</target>
            </configuration>
          </plugin>
          <!-- Compile Scala sources before the Java compile phase -->
          <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.2</version>
            <executions>
              <execution>
                <id>scala-compile-first</id>
                <phase>process-resources</phase>
                <goals>
                  <goal>compile</goal>
                </goals>
              </execution>
            </executions>
            <configuration>
              <scalaVersion>${scala.version}</scalaVersion>
              <recompileMode>incremental</recompileMode>
              <useZincServer>true</useZincServer>
              <args>
                <arg>-unchecked</arg>
                <arg>-deprecation</arg>
                <arg>-feature</arg>
              </args>
              <jvmArgs>
                <jvmArg>-Xms1024m</jvmArg>
                <jvmArg>-Xmx1024m</jvmArg>
              </jvmArgs>
              <javacArgs>
                <javacArg>-source</javacArg>
                <javacArg>${java.version}</javacArg>
                <javacArg>-target</javacArg>
                <javacArg>${java.version}</javacArg>
                <javacArg>-Xlint:all,-serial,-path</javacArg>
              </javacArgs>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </project>

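Pay attention to the Scala version: the version set in Maven must match the version configured in IDEA. If they differ, compilation fails.

For example, Maven is set to 2.11.8 here.

In IDEA, go to File--->Project Structure--->Libraries and click the + button; the available Scala versions are listed as shown below. The version installed on the system is 2.12.8, but we should select 2.11.8 at the bottom of the list.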

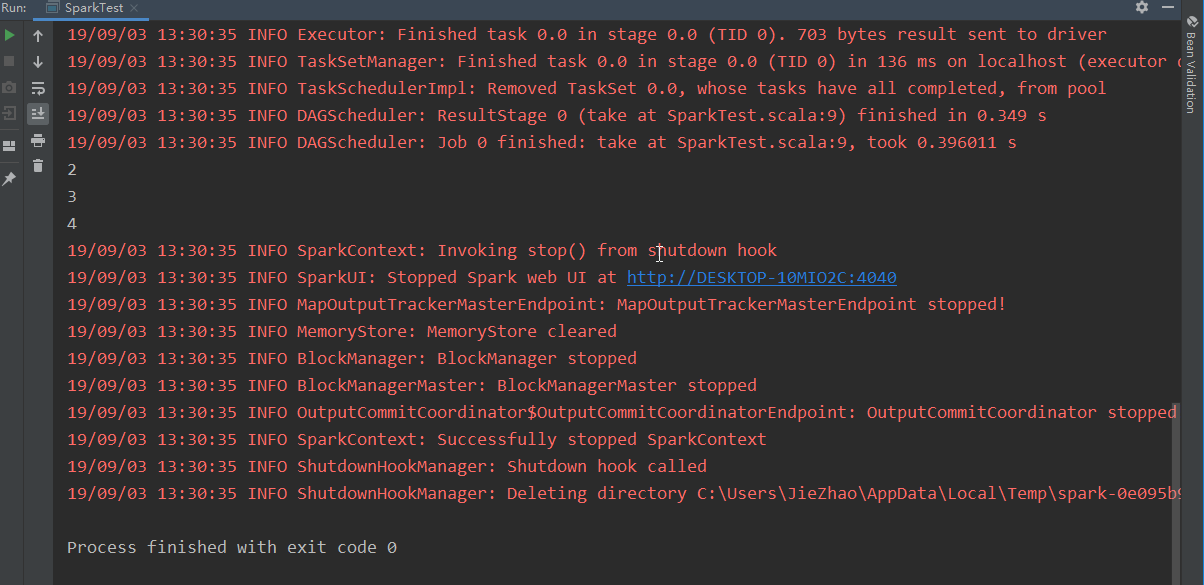
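A related consistency check can be scripted. The sketch below does not inspect IDEA's settings; it only checks that the Scala suffix on artifact names in pom.xml (such as `_2.11`) agrees with the `scala.version` property. The function names are hypothetical, and the sed patterns assume each element sits on one line, as in the pom.xml above.

```shell
# Extract the value of <scala.version> from a pom.xml (first match only).
scala_version() {
  sed -n 's:.*<scala\.version>\(.*\)</scala\.version>.*:\1:p' "$1" | head -n 1
}

# List the distinct Scala binary suffixes used in artifactIds, e.g. "2.11".
artifact_suffixes() {
  sed -n 's:.*<artifactId>[^<]*_\([0-9][0-9]*\.[0-9][0-9]*\)</artifactId>.*:\1:p' "$1" | sort -u
}

# Compare every artifact suffix against the major.minor part of scala.version.
check_scala_versions() {
  pom="$1"
  full="$(scala_version "$pom")"
  binary="${full%.*}"   # 2.11.8 -> 2.11
  status=0
  for suffix in $(artifact_suffixes "$pom"); do
    if [ "$suffix" != "$binary" ]; then
      echo "mismatch: artifact suffix _$suffix vs scala.version $full"
      status=1
    fi
  done
  if [ "$status" -eq 0 ]; then
    echo "ok: all artifacts use Scala $binary"
  fi
  return "$status"
}

if [ -f pom.xml ]; then
  check_scala_versions pom.xml
fi
```

Run from the project root, this reports a mismatch if, for instance, `scala.version` were changed to 2.12.8 while the Spark artifacts still ended in `_2.11`.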

3. Write the Spark program

Create a scala folder under the src directory, then create the package com.jiehui.test.

Write the Spark test program:

    package com.jiehui.test

    import org.apache.spark.{SparkConf, SparkContext}

    object SparkTest {
      def main(args: Array[String]): Unit = {
        // Use the first argument as the master URL; default to local mode.
        val master = if (args.length > 0) args(0) else "local"
        val conf = new SparkConf().setMaster(master).setAppName("test")
        val sc = new SparkContext(conf)

        // Distribute a small collection and print each element.
        sc.parallelize(Seq(1, 2, 3)).foreach(println(_))

        sc.stop()
      }
    }

Run it:

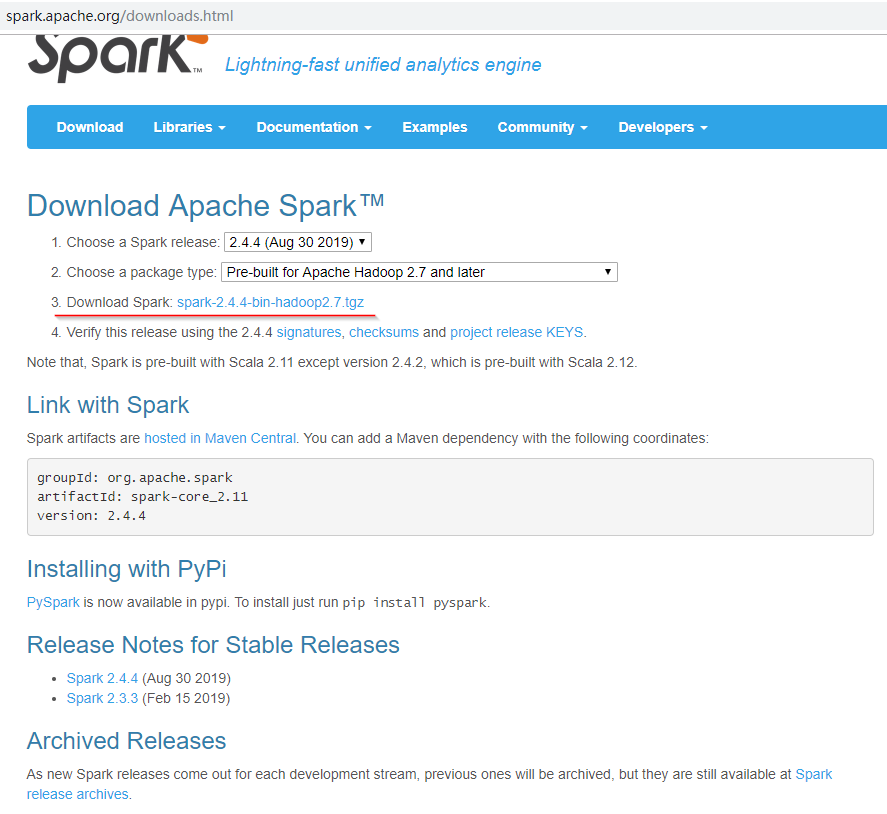

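IV. Submitting the job

1. Cluster configuration

Because the job runs on YARN, Hadoop must be installed, and this Hadoop deployment in turn requires ZooKeeper. Because the project is built with Maven, Maven must be installed as well.

Configuration used in this lab:

Zookeeper: 3.4.10

Hadoop: 2.8.4

Maven: 3.6.1

The environment variables for YARN and Maven are already configured.

Download the Spark binary package from http://spark.apache.org/downloads.html

Choose the appropriate version, then click the link in step 3 to go to the download page.

Copy the mirror link, then download and extract the package on the server:

    [root@node01 bigdata]# wget http://mirror.bit.edu.cn/apache/spark/spark-2.2.0/spark-2.2.0-bin-hadoop2.7.tgz
    [root@node01 bigdata]# tar -zxvf spark-2.2.0-bin-hadoop2.7.tgz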

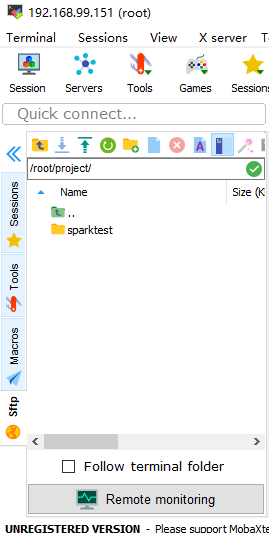

2. Upload and package the job

If the project is small, upload it to the server as is; if it is large, compress it into an archive before uploading.

Go into the project directory and package it with Maven:

    [root@node01 project]# cd sparktest/
    [root@node01 sparktest]# mvn package

After packaging, the target directory contains the built jars:

    [root@node01 sparktest]# ll target
    total 136852
    drwxr-xr-x. 2 root root      4096 Sep  3 14:38 archive-tmp
    drwxr-xr-x. 3 root root      4096 Sep  3 14:31 classes
    drwxr-xr-x. 3 root root      4096 Sep  3 14:31 generated-sources
    drwxr-xr-x. 2 root root      4096 Sep  3 14:37 maven-archiver
    drwxr-xr-x. 3 root root      4096 Sep  3 14:37 maven-status
    -rw-r--r--. 1 root root      6023 Sep  3 14:37 sparktest-1.0-SNAPSHOT.jar
    -rw-r--r--. 1 root root 140099592 Sep  3 14:39 sparktest-1.0-SNAPSHOT-jar-with-dependencies.jar
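Before submitting, it can be worth confirming that the main class really is inside the assembly jar, since a wrong class name only surfaces later in the YARN logs. The sketch below assumes the JDK's `jar` tool is on the PATH; `class_to_entry` and `jar_has_class` are hypothetical helper names.

```shell
JAR="${JAR:-target/sparktest-1.0-SNAPSHOT-jar-with-dependencies.jar}"
MAIN_CLASS="${MAIN_CLASS:-com.jiehui.test.SparkTest}"

# Convert a fully qualified class name to its entry path inside a jar.
class_to_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Succeed only if the jar listing contains exactly that entry.
jar_has_class() {
  jar tf "$1" | grep -qxF "$(class_to_entry "$2")"
}

# Only run the check when the jar and the jar tool are both available.
if [ -f "$JAR" ] && command -v jar >/dev/null 2>&1; then
  if jar_has_class "$JAR" "$MAIN_CLASS"; then
    echo "found $MAIN_CLASS in $JAR"
  else
    echo "missing $MAIN_CLASS in $JAR"
  fi
fi
```

Run from the project directory, this prints whether `com.jiehui.test.SparkTest` is packaged; a different class can be checked by setting `MAIN_CLASS`.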

3. Run the job

Go into Spark's bin directory:

    [root@node01 bigdata]# cd spark-2.2.0-bin-hadoop2.7
    [root@node01 spark-2.2.0-bin-hadoop2.7]# cd bin
    [root@node01 bin]# ll
    total 100
    -rwxr-xr-x. 1 hadoop hadoop 1089 Jul  1  2017 beeline
    -rw-r--r--. 1 hadoop hadoop  899 Jul  1  2017 beeline.cmd
    -rw-r--r--. 1 root   root    734 Jun 11 00:39 derby.log
    -rwxr-xr-x. 1 hadoop hadoop 1933 Jul  1  2017 find-spark-home
    -rw-r--r--. 1 hadoop hadoop 1909 Jul  1  2017 load-spark-env.cmd
    -rw-r--r--. 1 hadoop hadoop 2133 Jul  1  2017 load-spark-env.sh
    drwxr-xr-x. 5 root   root   4096 Jun 11 00:39 metastore_db
    -rwxr-xr-x. 1 hadoop hadoop 2989 Jul  1  2017 pyspark
    -rw-r--r--. 1 hadoop hadoop 1493 Jul  1  2017 pyspark2.cmd
    -rw-r--r--. 1 hadoop hadoop 1002 Jul  1  2017 pyspark.cmd
    -rwxr-xr-x. 1 hadoop hadoop 1030 Jul  1  2017 run-example
    -rw-r--r--. 1 hadoop hadoop  988 Jul  1  2017 run-example.cmd
    -rwxr-xr-x. 1 hadoop hadoop 3196 Jul  1  2017 spark-class
    -rw-r--r--. 1 hadoop hadoop 2467 Jul  1  2017 spark-class2.cmd
    -rw-r--r--. 1 hadoop hadoop 1012 Jul  1  2017 spark-class.cmd
    -rwxr-xr-x. 1 hadoop hadoop 1039 Jul  1  2017 sparkR
    -rw-r--r--. 1 hadoop hadoop 1014 Jul  1  2017 sparkR2.cmd
    -rw-r--r--. 1 hadoop hadoop 1000 Jul  1  2017 sparkR.cmd
    -rwxr-xr-x. 1 hadoop hadoop 3017 Jul  1  2017 spark-shell
    -rw-r--r--. 1 hadoop hadoop 1530 Jul  1  2017 spark-shell2.cmd
    -rw-r--r--. 1 hadoop hadoop 1010 Jul  1  2017 spark-shell.cmd
    -rwxr-xr-x. 1 hadoop hadoop 1065 Jul  1  2017 spark-sql
    -rwxr-xr-x. 1 hadoop hadoop 1040 Jul  1  2017 spark-submit
    -rw-r--r--. 1 hadoop hadoop 1128 Jul  1  2017 spark-submit2.cmd
    -rw-r--r--. 1 hadoop hadoop 1012 Jul  1  2017 spark-submit.cmd
    [root@node01 bin]# cd ..
    [root@node01 spark-2.2.0-bin-hadoop2.7]# ./bin/spark-submit --class com.jiehui.test.SpakTest --master yarn --deploy-mode cluster /root/project/sparktest/target/sparktest-1.0-SNAPSHOT-jar-with-dependencies.jar yarn

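The job is submitted with spark-submit. The parts of the command are:

| Meaning | Argument |
| --- | --- |
| Class to run | --class com.jiehui.test.SparkTest |
| Run on YARN | --master yarn |
| Deploy in cluster mode | --deploy-mode cluster |
| Path to the jar | /root/project/sparktest/target/sparktest-1.0-SNAPSHOT-jar-with-dependencies.jar |
| Application argument | yarn |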
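The pieces in the table can be assembled in a small script so that the class name and jar path are written only once. This is a sketch under assumptions: the variable names and the `run` helper are hypothetical, and by default the script only prints the command (set `DRY_RUN=0` from Spark's home directory to execute it).

```shell
APP_CLASS="com.jiehui.test.SparkTest"
APP_JAR="/root/project/sparktest/target/sparktest-1.0-SNAPSHOT-jar-with-dependencies.jar"

# Print each command; execute it only when DRY_RUN=0 (hypothetical helper).
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; fi
}

# Everything after the jar path is passed to the application's main method;
# here the single argument "yarn" becomes args(0), the master URL.
submit() {
  run ./bin/spark-submit \
    --class "$APP_CLASS" \
    --master yarn \
    --deploy-mode cluster \
    "$APP_JAR" \
    yarn
}

submit
```

Keeping the class name in one variable makes a misspelling easier to spot than in a long one-line command.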

The NameNode that the job is submitted through must be in the active state. If it is in standby, the submission fails with: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyExceptio

Check the NameNode state:

    hdfs haadmin -getServiceState nn1

Activate the NameNode:

    hdfs haadmin -transitionToActive --forcemanual nn1
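The state check can be wrapped in a small guard that runs before submission. The sketch below assumes the NameNode ID `nn1` from the commands above and that `-getServiceState` prints `active` or `standby`; `check_namenode` is a hypothetical helper name. It only reports the state and leaves the forced transition as a manual step.

```shell
# Report whether a NameNode is active; return non-zero when it is not.
check_namenode() {
  state="$(hdfs haadmin -getServiceState "$1")" || return 2
  if [ "$state" = "active" ]; then
    echo "$1 is active; safe to submit"
  else
    echo "$1 is $state; activate it before submitting"
    return 1
  fi
}

# Usage on a cluster node where the hdfs command is available:
#   check_namenode nn1 && ./bin/spark-submit ...
```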

The command above used com.jiehui.test.SpakTest. Because the class name is misspelled, the program cannot run.

The error is: Container exited with a non-zero exit code 10

    client token: N/A
    diagnostics: Application application_1567500736308_0001 failed 2 times due to AM Container for appattempt_1567500736308_0001_000002 exited w
    Failing this attempt.Diagnostics: Exception from container-launch.
    Container id: container_1567500736308_0001_02_000001
    Exit code: 10
    Stack trace: ExitCodeException exitCode=10:
        at org.apache.hadoop.util.Shell.runCommand(Shell.java:972)
        at org.apache.hadoop.util.Shell.run(Shell.java:869)
        ...
    Container exited with a non-zero exit code 10
    For more detailed output, check the application tracking page: http://node01:8088/cluster/app/application_1567500736308_0001 Then click on links to l
    . Failing the application.
    ApplicationMaster host: N/A
    ApplicationMaster RPC port: -1
    queue: default
    start time: 1567500885640
    final status: FAILED
    tracking URL: http://node01:8088/cluster/app/application_1567500736308_0001
    user: root
    Exception in thread "main" org.apache.spark.SparkException: Application application_1567500736308_0001 finished with failed status
        at org.apache.spark.deploy.yarn.Client.run(Client.scala:1104)
        at org.apache.spark.deploy.yarn.Client$.main(Client.scala:1150)
        ...
    19/09/03 16:56:59 INFO util.ShutdownHookManager: Shutdown hook called
    19/09/03 16:56:59 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-57505652-7dc4-4bc3-9751-dbb3335025f8

The output above does not show where the error lies; the job logs have to be inspected with the yarn command.
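One way to do that lookup is `yarn logs -applicationId`, using the application ID printed by spark-submit. The sketch below assumes log aggregation is enabled on the cluster so the logs are retrievable after the application ends; `first_error` and `app_first_error` are hypothetical helper names.

```shell
# Keep only the first ERROR line from log text read on stdin.
first_error() {
  grep -m 1 ' ERROR '
}

# Fetch the aggregated logs of one application and show its first error.
app_first_error() {
  yarn logs -applicationId "$1" | first_error
}

# Usage, with the application ID from the failed submission:
#   app_first_error application_1567500736308_0001
```

Filtering for the first ERROR line is only a shortcut; the full output of `yarn logs` is still the authoritative record.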

The logs reveal the error:

    19/09/03 17:01:13 ERROR yarn.ApplicationMaster: Uncaught exception: java.lang.ClassNotFoundException: com.jiehui.test.SpakTest

The class we specified cannot be found. On inspection, the name is misspelled, so correct the command:

    ./bin/spark-submit --class com.jiehui.test.SparkTest --master yarn --deploy-mode cluster /root/project/sparktest/target/sparktest-1.0-SNAPSHOT-jar-with-dependencies.jar yarn

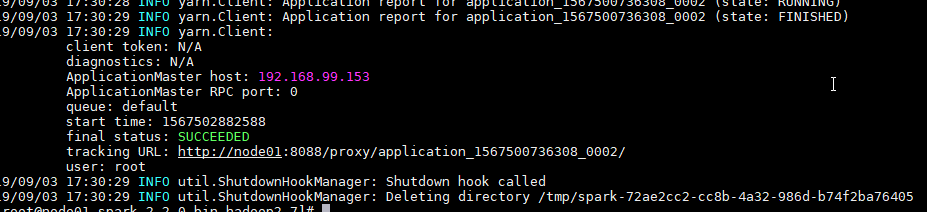

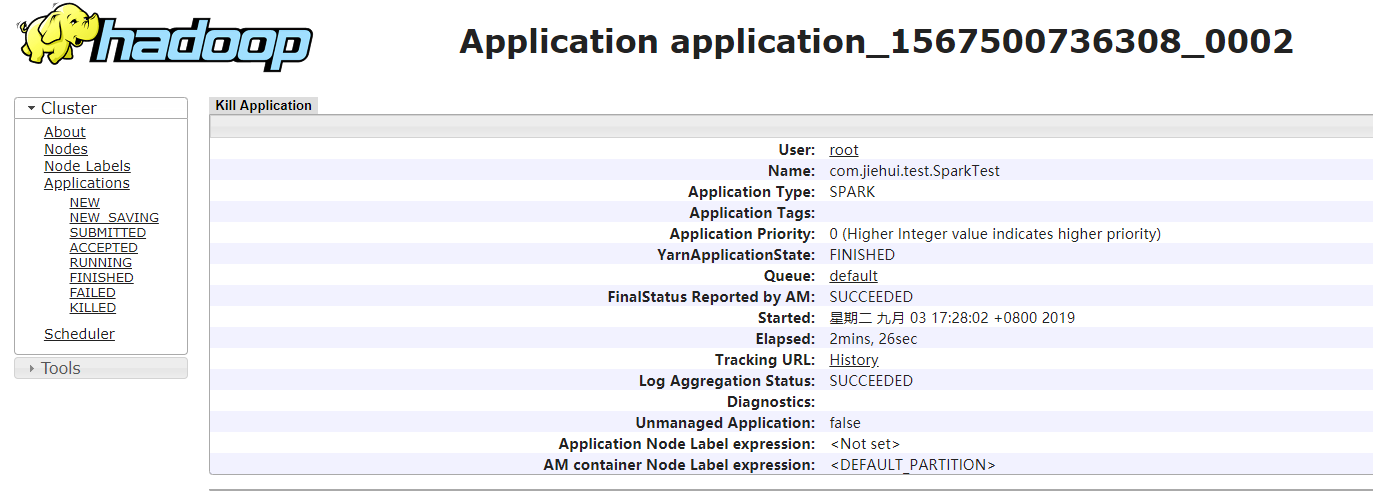

程序成功运行,运行成功截图如下:

我们的Spark环境搭建成功了。