HDFS Java API

Using the Java API

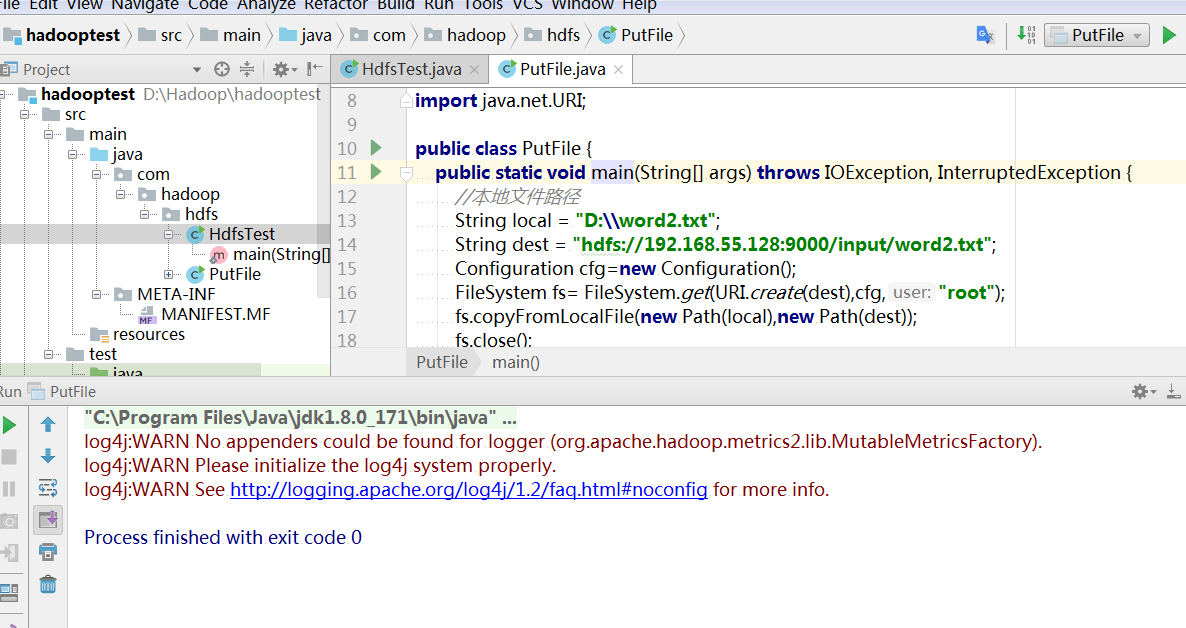

Uploading a file

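First create a file on the local machine (the client), for example a new file word2.txt on drive D, with any content you like:

```
somewhere
palyer
Hadoop
you belong to me
```

Then write the Java program in IDEA: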

```java
package com.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;

public class PutFile {
    public static void main(String[] args) throws IOException, InterruptedException {
        // Local file path (on the Windows client)
        String local = "D:\\word2.txt";
        // Target path in HDFS; scheme and host:port identify the NameNode
        String dest = "hdfs://192.168.55.128:9000/input/word2.txt";
        Configuration cfg = new Configuration();
        // Connect as user "root" instead of the local Windows user
        FileSystem fs = FileSystem.get(URI.create(dest), cfg, "root");
        // Copy the local file into HDFS
        fs.copyFromLocalFile(new Path(local), new Path(dest));
        fs.close();
    }
}
```

Note again: the hdfs://192.168.55.128:9000 part of dest must correspond to the fs.defaultFS setting in core-site.xml, whose value is hdfs://node1:9000. Because the hosts file on the local Windows machine has no entry for node1, the NameNode has to be addressed by its IP address here.
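To see which parts of dest matter, the sketch below splits it with java.net.URI (plain JDK, no Hadoop classes). FileSystem.get uses the scheme (hdfs) and the authority (host:port) to decide which file system, and therefore which NameNode, to connect to; the path part is where the file ends up. The comparison with hdfs://node1:9000 assumes, as the note above implies, that node1 is the machine at 192.168.55.128.

```java
import java.net.URI;

// Splits an HDFS URI into the parts relevant to PutFile (JDK only, no Hadoop needed).
final class HdfsUriParts {

    // Scheme + authority select the file system (here: which NameNode to contact)
    static String fileSystemKey(String uri) {
        URI u = URI.create(uri);
        return u.getScheme() + "://" + u.getAuthority();
    }

    // Path component: where the file is placed inside HDFS
    static String hdfsPath(String uri) {
        return URI.create(uri).getPath();
    }

    public static void main(String[] args) {
        String dest = "hdfs://192.168.55.128:9000/input/word2.txt";
        String defaultFs = "hdfs://node1:9000"; // fs.defaultFS from core-site.xml

        System.out.println(fileSystemKey(dest)); // hdfs://192.168.55.128:9000
        System.out.println(hdfsPath(dest));      // /input/word2.txt

        // Same scheme and port, different host string: both name the same NameNode
        // only because node1 is assumed to be the machine at 192.168.55.128.
        URI a = URI.create(dest);
        URI b = URI.create(defaultFs);
        System.out.println(a.getScheme().equals(b.getScheme()) && a.getPort() == b.getPort()); // true
    }
}
```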

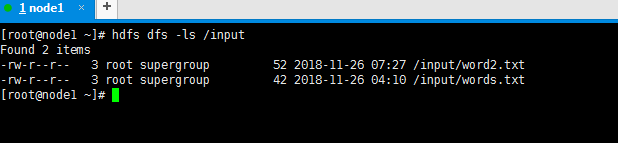

Result:

```
[root@node1 ~]# hdfs dfs -ls /input
Found 2 items
-rw-r--r-- 3 root supergroup 52 2018-11-26 07:27 /input/word2.txt
-rw-r--r-- 3 root supergroup 42 2018-11-26 04:10 /input/words.txt
[root@node1 ~]#
```

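The statement FileSystem fs = FileSystem.get(URI.create(dest), cfg, "root"); explicitly connects as the user root because, on Windows, the program otherwise runs as the default user Administrator. If the user name root is not specified, the program may throw an exception such as: Permission denied: user=Administrator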

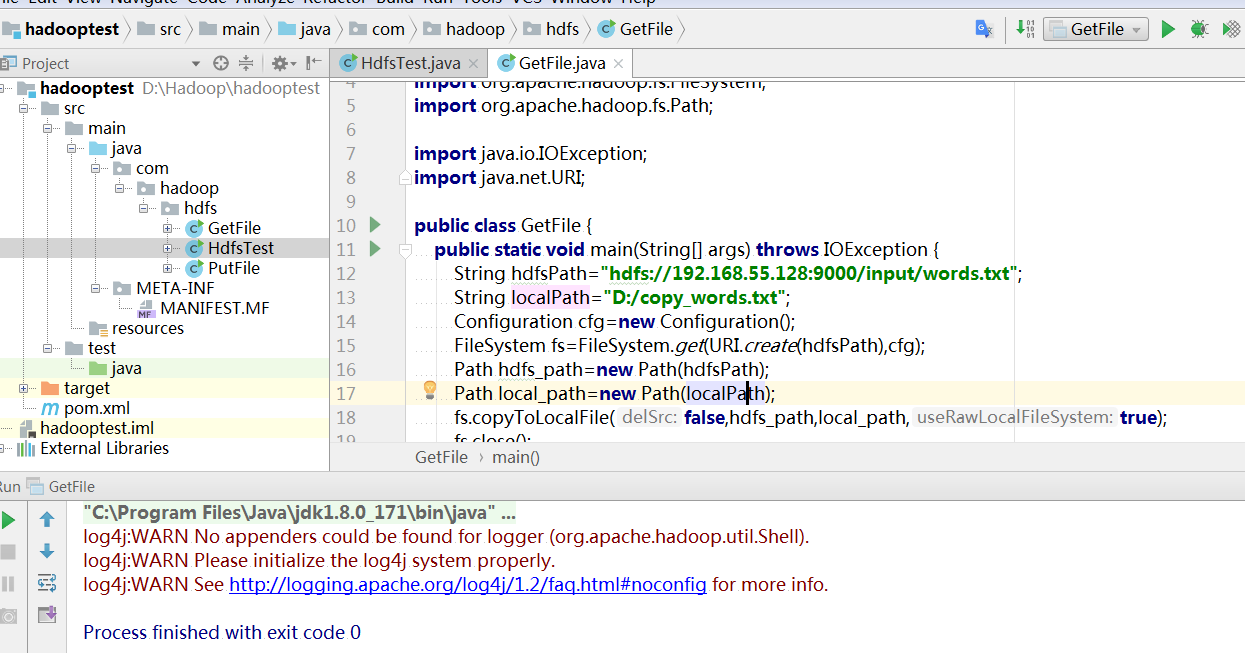

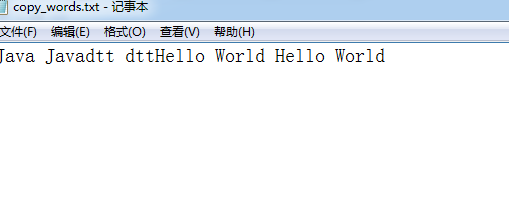
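Why is Administrator denied? Under Hadoop's default simple authentication, the NameNode accepts the user name sent by the client and checks it against POSIX-style owner/group/other permission bits. The hdfs dfs -ls / listing later in this article shows /input as drwxr-xr-x, owned by root:supergroup, so any other user falls into the "other" class (r-x) and lacks the write permission needed to create a file there. The sketch below models only that owner/group/other decision; it is a simplification that ignores the HDFS superuser, ACLs, and the execute checks on parent directories.

```java
import java.util.Set;

// Simplified model of the owner/group/other permission check HDFS performs.
// Not Hadoop code: ignores the superuser, ACLs and parent-directory traversal checks.
final class HdfsPermissionSketch {

    /**
     * @param mode   the 9 permission characters from "ls", e.g. "rwxr-xr-x"
     * @param action 'r', 'w' or 'x'
     */
    static boolean allowed(String user, Set<String> userGroups,
                           String owner, String group, String mode, char action) {
        int offset;
        if (user.equals(owner)) {
            offset = 0;                 // owner bits
        } else if (userGroups.contains(group)) {
            offset = 3;                 // group bits
        } else {
            offset = 6;                 // other bits
        }
        int index;
        switch (action) {
            case 'r': index = 0; break;
            case 'w': index = 1; break;
            case 'x': index = 2; break;
            default: throw new IllegalArgumentException("action must be r, w or x");
        }
        return mode.charAt(offset + index) == action;
    }

    public static void main(String[] args) {
        // /input from "hdfs dfs -ls /": drwxr-xr-x root supergroup
        String mode = "rwxr-xr-x";
        System.out.println(allowed("Administrator", Set.of(), "root", "supergroup", mode, 'w')); // false
        System.out.println(allowed("root", Set.of(), "root", "supergroup", mode, 'w'));          // true
    }
}
```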

Downloading a file

```java
package com.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;

public class GetFile {
    public static void main(String[] args) throws IOException {
        // Source file in HDFS and target path on the local disk
        String hdfsPath = "hdfs://192.168.55.128:9000/input/words.txt";
        String localPath = "D:/copy_words.txt";
        Configuration cfg = new Configuration();
        // No user is passed: reading works because the file is rw-r--r--
        FileSystem fs = FileSystem.get(URI.create(hdfsPath), cfg);
        Path hdfs_path = new Path(hdfsPath);
        Path local_path = new Path(localPath);
        // delSrc = false keeps the HDFS file; useRawLocalFileSystem = true writes no local .crc file
        fs.copyToLocalFile(false, hdfs_path, local_path, true);
        fs.close();
    }
}
```

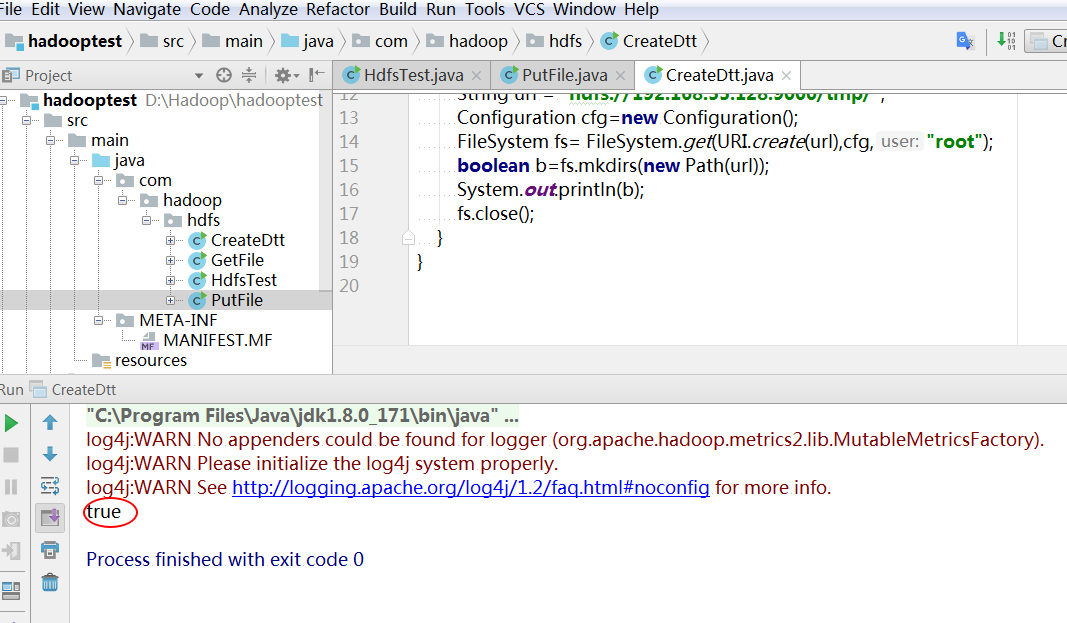
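The four-argument copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem) used above keeps the HDFS source (delSrc = false) and, with useRawLocalFileSystem = true, writes through the raw local file system. With false, Hadoop's checksummed local file system would also leave a hidden checksum file next to the copy, named "." + file name + ".crc". The JDK-only sketch below just computes that sidecar name so you know what to look for on drive D; note that it uses java.nio.file.Path, not org.apache.hadoop.fs.Path.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Computes the name of the checksum sidecar that a checksummed local file system
// writes next to a file: same directory, "." + name + ".crc". JDK only.
final class CrcSidecar {

    static Path checksumFileFor(Path file) {
        String sidecar = "." + file.getFileName() + ".crc";
        Path parent = file.getParent();
        return parent == null ? Paths.get(sidecar) : parent.resolve(sidecar);
    }

    public static void main(String[] args) {
        // Relative path so the output does not depend on the operating system
        System.out.println(checksumFileFor(Paths.get("copy_words.txt"))); // .copy_words.txt.crc
    }
}
```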

Creating an HDFS directory

```java
package com.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;

public class CreateDtt {
    public static void main(String[] args) throws IOException, InterruptedException {
        String url = "hdfs://192.168.55.128:9000/tmp/";
        Configuration cfg = new Configuration();
        // Connect as root so the directory can be created under /
        FileSystem fs = FileSystem.get(URI.create(url), cfg, "root");
        // mkdirs also creates missing parent directories; prints true on success
        boolean b = fs.mkdirs(new Path(url));
        System.out.println(b);
        fs.close();
    }
}
```

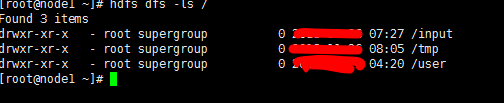

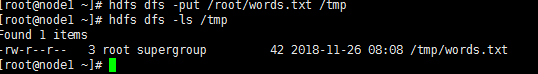
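fs.mkdirs(path) behaves like mkdir -p: missing parent directories are created, and it returns true when the directory exists afterwards, so running CreateDtt a second time should still print true. For comparison, the JDK-only sketch below shows the same semantics on the local disk with Files.createDirectories; it is an analogue for illustration, not the Hadoop API.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Local-disk analogue of FileSystem.mkdirs (JDK only): creates missing parents
// and treats an already existing directory as success.
final class MkdirsAnalogue {

    static boolean mkdirs(Path dir) throws IOException {
        Files.createDirectories(dir);   // no exception if dir already exists as a directory
        return Files.isDirectory(dir);
    }

    public static void main(String[] args) throws IOException {
        Path base = Files.createTempDirectory("mkdirs-demo");
        Path nested = base.resolve("tmp").resolve("a").resolve("b");
        System.out.println(mkdirs(nested)); // true: tmp and tmp/a are created along the way
        System.out.println(mkdirs(nested)); // true: an existing directory is not an error
    }
}
```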

Deleting an HDFS file or directory

First upload a file to the /tmp directory in HDFS:

```
[root@node1 ~]# hdfs dfs -put /root/words.txt /tmp
[root@node1 ~]# hdfs dfs -ls /tmp
Found 1 items
-rw-r--r-- 3 root supergroup 42 2018-11-26 08:08 /tmp/words.txt
```

```java
package com.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;

public class DeleteFile {
    public static void main(String[] args) throws IOException, InterruptedException {
        String url = "hdfs://192.168.55.128:9000/tmp/";
        Configuration cfg = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(url), cfg, "root");
        // true = delete recursively: the directory and all files inside it
        boolean b = fs.delete(new Path(url), true);
        System.out.println(b);
        fs.close();
    }
}
```

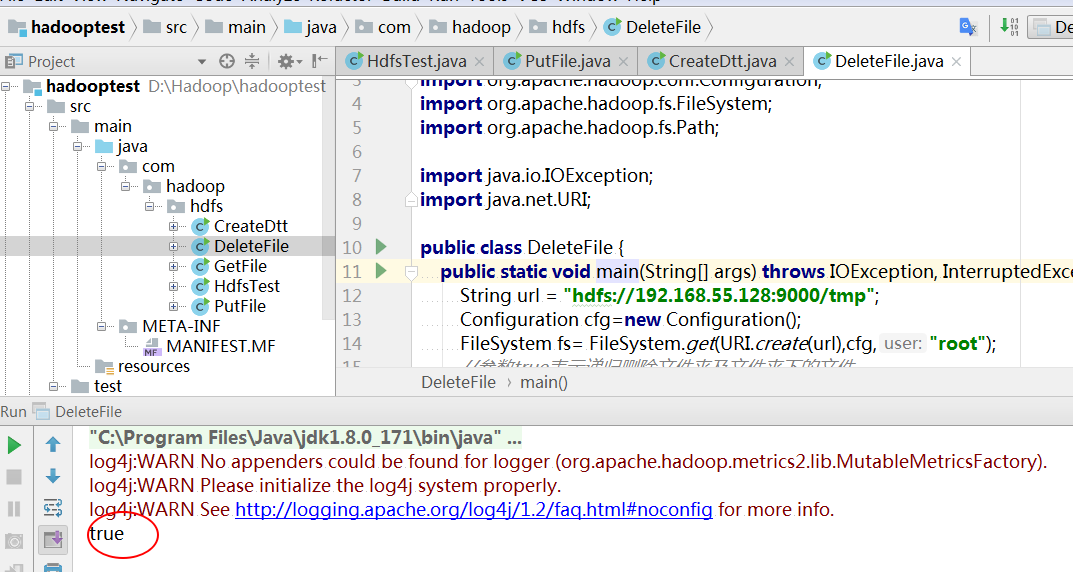

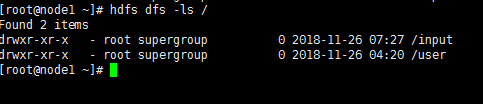

Listing the HDFS root directory confirms that /tmp has been deleted:

```
[root@node1 ~]# hdfs dfs -ls /
Found 2 items
drwxr-xr-x - root supergroup 0 2018-11-26 07:27 /input
drwxr-xr-x - root supergroup 0 2018-11-26 04:20 /user
[root@node1 ~]#
```

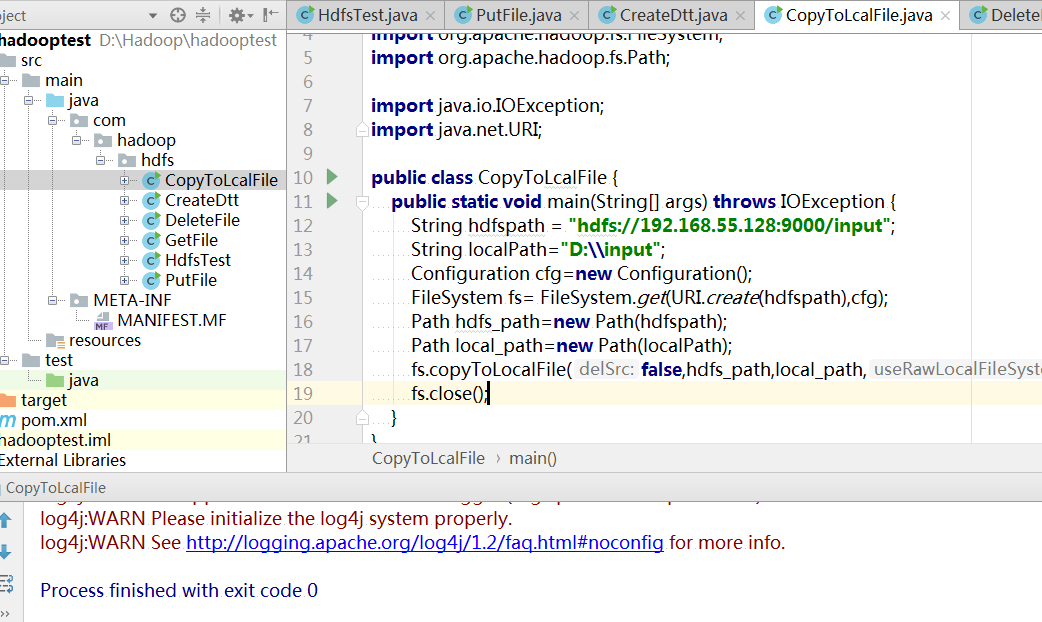
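The second argument of fs.delete(path, true) is what lets DeleteFile remove /tmp even though it still contains words.txt; with false, HDFS refuses to delete a non-empty directory and throws an IOException. A recursive delete has to remove children before their parent, which the JDK-only sketch below does on the local disk by deleting paths deepest-first. It is an analogue for illustration, not Hadoop's implementation; here it returns false when the path does not exist.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local-disk analogue of fs.delete(path, true) (JDK only).
final class RecursiveDeleteAnalogue {

    static boolean deleteRecursively(Path root) throws IOException {
        if (!Files.exists(root)) {
            return false;               // nothing to delete
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(root)) {
            // Reverse order puts every child before its parent directory
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);            // each directory is empty by the time it is reached
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempDirectory("delete-demo");
        Files.writeString(tmp.resolve("words.txt"), "hello hadoop\n");
        System.out.println(deleteRecursively(tmp)); // true
        System.out.println(Files.exists(tmp));      // false
        System.out.println(deleteRecursively(tmp)); // false: already gone
    }
}
```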

Downloading an HDFS directory

```java
package com.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;

public class CopyToLcalFile {
    public static void main(String[] args) throws IOException {
        // Source directory in HDFS and target directory on the local disk
        String hdfspath = "hdfs://192.168.55.128:9000/input";
        String localPath = "D:\\input";
        Configuration cfg = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(hdfspath), cfg);
        Path hdfs_path = new Path(hdfspath);
        Path local_path = new Path(localPath);
        // Copies the whole directory; keep the source, write no local .crc files
        fs.copyToLocalFile(false, hdfs_path, local_path, true);
        fs.close();
    }
}
```

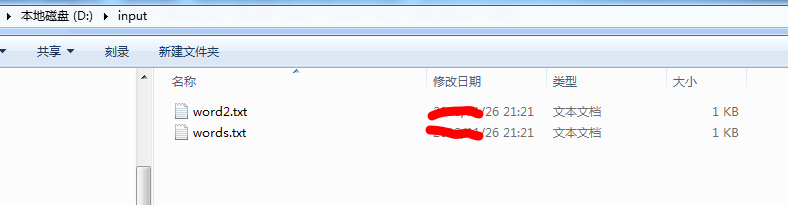

The input directory and its files can now be seen on drive D.

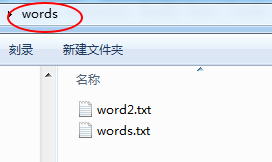
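When the source is a directory, copyToLocalFile copies the whole tree, which is why D:\input ends up holding the files from /input. Conceptually that is a pre-order walk: create each directory first, then copy the files inside it. The JDK-only sketch below does this between two local directories; it illustrates the idea and is not Hadoop's own implementation.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.Iterator;
import java.util.stream.Stream;

// Recursive directory copy on the local disk (JDK only), illustrating what
// copying a directory tree involves.
final class TreeCopy {

    static void copyTree(Path src, Path dst) throws IOException {
        try (Stream<Path> walk = Files.walk(src)) {
            Iterator<Path> it = walk.iterator();
            while (it.hasNext()) {
                Path p = it.next();
                // Same relative position under dst as p has under src
                Path target = dst.resolve(src.relativize(p).toString());
                if (Files.isDirectory(p)) {
                    Files.createDirectories(target);   // directories are visited before their contents
                } else {
                    Files.copy(p, target, StandardCopyOption.REPLACE_EXISTING);
                }
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path src = Files.createTempDirectory("input-src");
        Files.writeString(src.resolve("words.txt"), "hello\n");
        Files.writeString(src.resolve("word2.txt"), "world\n");
        Path dst = Files.createTempDirectory("copy-target").resolve("input");
        copyTree(src, dst);
        System.out.println(Files.readString(dst.resolve("words.txt")).trim()); // hello
    }
}
```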

Uploading a local directory (folder)

先在本地准备一个待上传的目录,这里将刚才下载的input目录重命名为words

package com.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.io.IOException;

import java.net.URI;

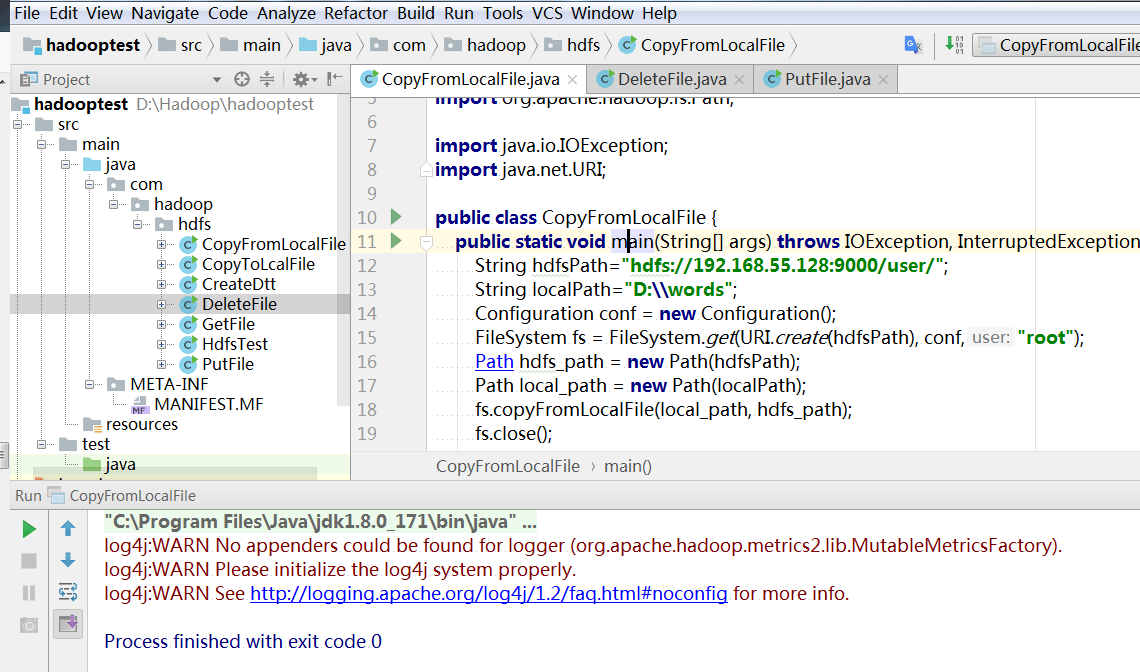

public class CopyFromLocalFile {

public static void main(String[] args) throws IOException, InterruptedException {

String hdfsPath="hdfs://192.168.55.128:9000/user/";

String localPath="D:\\words";

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf,"root");

Path hdfs_path = new Path(hdfsPath);

Path local_path = new Path(localPath);

fs.copyFromLocalFile(local_path, hdfs_path);

fs.close();

}

}

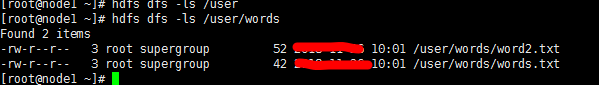

```
[root@node1 ~]# hdfs dfs -ls /user/words
Found 2 items
-rw-r--r-- 3 root supergroup 52 10:01 /user/words/word2.txt
-rw-r--r-- 3 root supergroup 42 10:01 /user/words/words.txt
[root@node1 ~]#
```
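Why /user/words and not /user itself? Hadoop's copy utilities appear to follow the same rule as cp -r: if the destination already exists as a directory, the source is placed inside it under its own name; otherwise the destination path becomes the new name. /user already existed, so D:\words landed in /user/words, while D:\input did not exist before the download, so /input was copied to D:\input itself. The sketch below models only that rule; the existingDirs set is a hypothetical stand-in for a real exists/isDirectory check.

```java
import java.util.Set;

// Models the destination rule for copies (not Hadoop code): an existing directory
// as destination means "copy into it"; anything else is used as the target path.
final class CopyDestRule {

    /**
     * @param dst          destination path given by the caller, e.g. "/user/"
     * @param srcName      last component of the source path, e.g. "words"
     * @param existingDirs hypothetical stand-in for an exists()/isDirectory() lookup
     */
    static String resolveTarget(String dst, String srcName, Set<String> existingDirs) {
        // Normalise a trailing slash, but keep the root "/" intact
        String d = (dst.length() > 1 && dst.endsWith("/")) ? dst.substring(0, dst.length() - 1) : dst;
        if (existingDirs.contains(d)) {
            return d.equals("/") ? "/" + srcName : d + "/" + srcName;
        }
        return d;
    }

    public static void main(String[] args) {
        Set<String> hdfsDirs = Set.of("/", "/input", "/user");
        System.out.println(resolveTarget("/user/", "words", hdfsDirs));    // /user/words

        Set<String> localDirs = Set.of("D:");
        System.out.println(resolveTarget("D:/input", "input", localDirs)); // D:/input
    }
}
```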

Source: oschina

Link: https://my.oschina.net/u/4368017/blog/3747096