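With the following in run.py, only a single spider can be run, because cmdline.execute exits the process once the crawl finishes. Running several spiders at once takes a different approach.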

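    from scrapy import cmdline

    """
    Run a single spider
    """

    cmdline.execute('scrapy crawl runoob'.split())

- Step 1: inside the project package, create a commands directory containing an empty __init__.py and a crawlall.py with the code below. The file name becomes the name of the new command.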

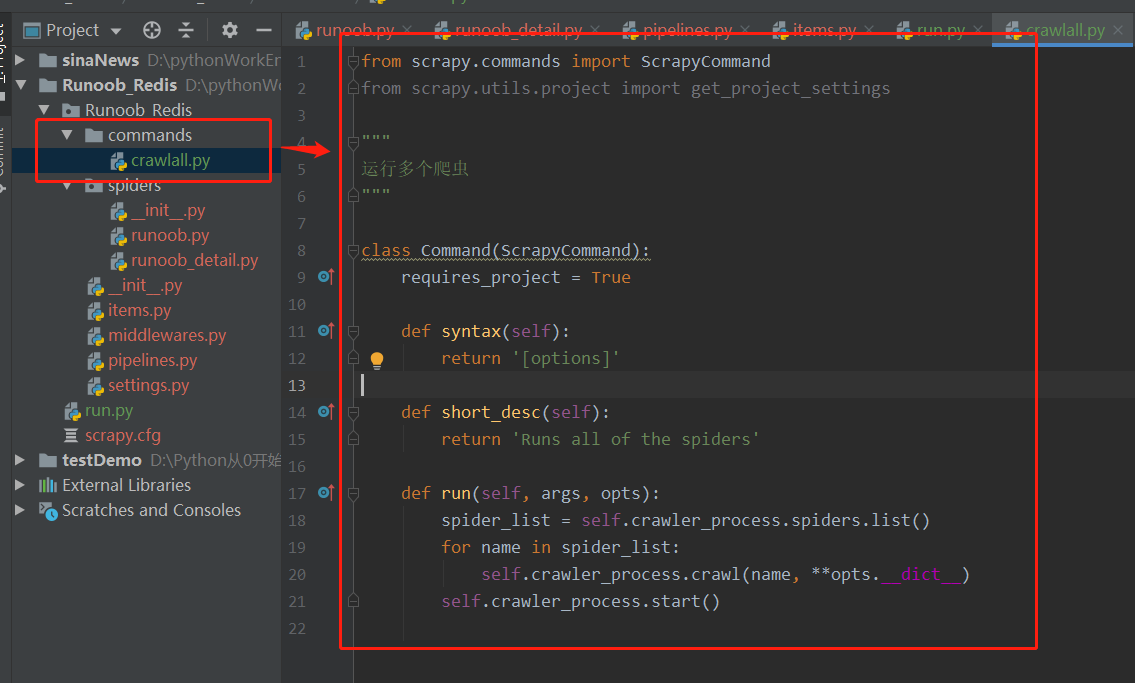

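    from scrapy.commands import ScrapyCommand


    class Command(ScrapyCommand):
        """Run every spider in the project."""

        requires_project = True

        def syntax(self):
            return '[options]'

        def short_desc(self):
            return 'Runs all of the spiders'

        def run(self, args, opts):
            # spider_loader lists every spider name registered in the project
            # (older Scrapy releases exposed this as crawler_process.spiders).
            spider_list = self.crawler_process.spider_loader.list()
            for name in spider_list:
                # Schedule each spider; nothing runs until start() is called.
                self.crawler_process.crawl(name, **opts.__dict__)
            # Start the reactor and block until all spiders have finished.
            self.crawler_process.start()

- Step 2: add the following to the project's settings.py: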

    # Settings for running multiple spiders
    COMMANDS_MODULE = 'Runoob_Redis.commands'

- Run:

    scrapy crawlall
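
For comparison, the same result can probably be reached from a plain script such as run.py, without registering a custom command. The block below is only a minimal sketch: it assumes it is run from inside the project directory so that the project settings can be found, and the names crawl_all and main are hypothetical helpers introduced here, not part of the original post.

```python
def crawl_all(process):
    """Schedule every spider known to `process`, then run them together.

    `process` is expected to behave like scrapy.crawler.CrawlerProcess.
    crawl_all is a hypothetical helper name used only for this sketch.
    """
    names = list(process.spider_loader.list())
    for name in names:
        # crawl() only schedules the spider; nothing runs yet.
        process.crawl(name)
    # start() runs the reactor and blocks until all spiders have finished.
    process.start()
    return names


def main():
    # Imported here so the helper above can be exercised without Scrapy.
    from scrapy.crawler import CrawlerProcess
    from scrapy.utils.project import get_project_settings

    # Assumption: the current directory is inside the Scrapy project.
    return crawl_all(CrawlerProcess(get_project_settings()))
```

Calling main() at the bottom of run.py would then start all spiders in one process. The custom command from the steps above remains the better fit when the crawl should be launched through the scrapy command line.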

Source: oschina

Link: https://my.oschina.net/u/4710565/blog/4945495