1. Connecting to Hive works from Linux but not from Windows (Method 2 is recommended)

Method 1

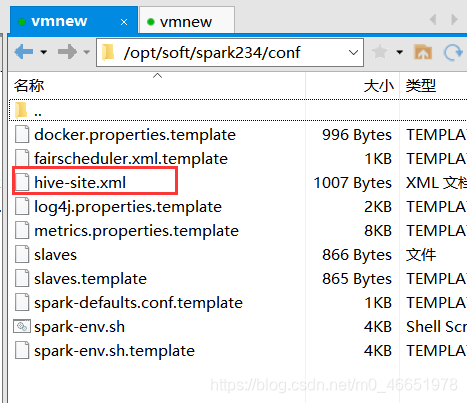

(1) Copy /opt/soft/hive110/conf/hive-site.xml to /opt/soft/spark234/conf/hive-site.xml.

No changes to hive-site.xml are needed.

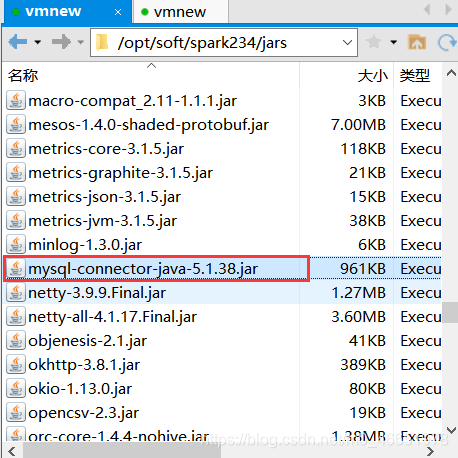

(2) Copy the MySQL JDBC driver jar into /opt/soft/spark234/jars (Spark 2.x keeps its jars in the jars directory).

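(3) Start Spark, launch pyspark and run a test query. The HiveConf "does not exist" warnings and the schema-version ERROR in the log below did not stop the query from returning rows. Note that `.show` without parentheses only returns the bound method; `.show()` is what prints the rows.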

[root@joy sbin]# ./start-all.sh
starting org.apache.spark.deploy.master.Master, logging to /opt/soft/spark234/logs/spark-root-org.apache.spark.deploy.master.Master-1-joy.out
localhost: starting org.apache.spark.deploy.worker.Worker, logging to /opt/soft/spark234/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-joy.out
[root@joy sbin]# cd ../bin/
[root@joy bin]# ./pyspark
Python 2.7.5 (default, Nov 6 2016, 00:28:07)
[GCC 4.8.5 20150623 (Red Hat 4.8.5-11)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
2020-12-24 11:20:23 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
2020-12-24 11:20:24 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client.user does not exist
2020-12-24 11:20:24 WARN HiveConf:2753 - HiveConf of name hive.metastore.local does not exist
2020-12-24 11:20:24 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client does not exist
2020-12-24 11:20:24 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client.password does not exist
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.3.4
      /_/

Using Python version 2.7.5 (default, Nov 6 2016 00:28:07)
SparkSession available as 'spark'.
>>> spark.sql("select * from dwd_events.dwd_events limit 3").show
2020-12-24 11:21:29 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client.user does not exist
2020-12-24 11:21:29 WARN HiveConf:2753 - HiveConf of name hive.metastore.local does not exist
2020-12-24 11:21:29 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client does not exist
2020-12-24 11:21:29 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client.password does not exist
2020-12-24 11:21:30 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client.user does not exist
2020-12-24 11:21:30 WARN HiveConf:2753 - HiveConf of name hive.metastore.local does not exist
2020-12-24 11:21:30 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client does not exist
2020-12-24 11:21:30 WARN HiveConf:2753 - HiveConf of name hive.server2.thrift.client.password does not exist
2020-12-24 11:21:31 ERROR ObjectStore:6684 - Version information found in metastore differs 1.1.0-cdh5.14.2 from expected schema version 1.2.0. Schema verififcation is disabled hive.metastore.schema.verification so setting version.
2020-12-24 11:21:32 WARN ObjectStore:568 - Failed to get database global_temp, returning NoSuchObjectException
<bound method DataFrame.show of DataFrame[eventid: string, starttime: bigint, city: string, province: string, country: string, lat: string, lng: string, userid: string, features: string]>
>>> spark.sql("select * from dwd_events.dwd_events limit 3").show()
+----------+----------+------+--------+---------+-------+-------+----------+--------------------+
|   eventid| starttime|  city|province|  country|    lat|    lng|    userid|            features|
+----------+----------+------+--------+---------+-------+-------+----------+--------------------+
|1000000778|1349348400|  Iasi|        |  Romania| 47.162| 27.587| 781622845|0, 0, 0, 0, 0, 0,...|
|1000001188|1350738000|Kassel|        |  Germany| 51.315|   9.48|4191368038|0, 0, 0, 0, 0, 0,...|
|1000003504|1353632400|Sydney|     NSW|Australia|-33.883|151.217|1445909915|2, 0, 2, 1, 1, 0,...|
+----------+----------+------+--------+---------+-------+-------+----------+--------------------+

(4) Copy all the files in /opt/soft/hive110/conf to the local D:\soft\spark-2.3.4-bin-hadoop2.6\conf directory.

Then change the paths in them to the corresponding local paths.

(5) Copy the MySQL JDBC driver jar into D:\soft\spark-2.3.4-bin-hadoop2.6\jars.

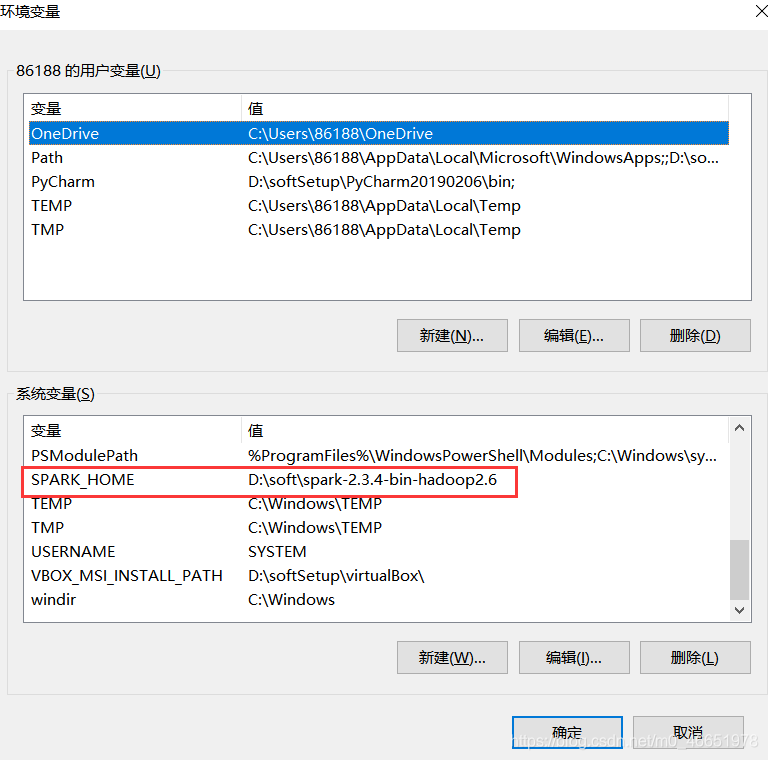

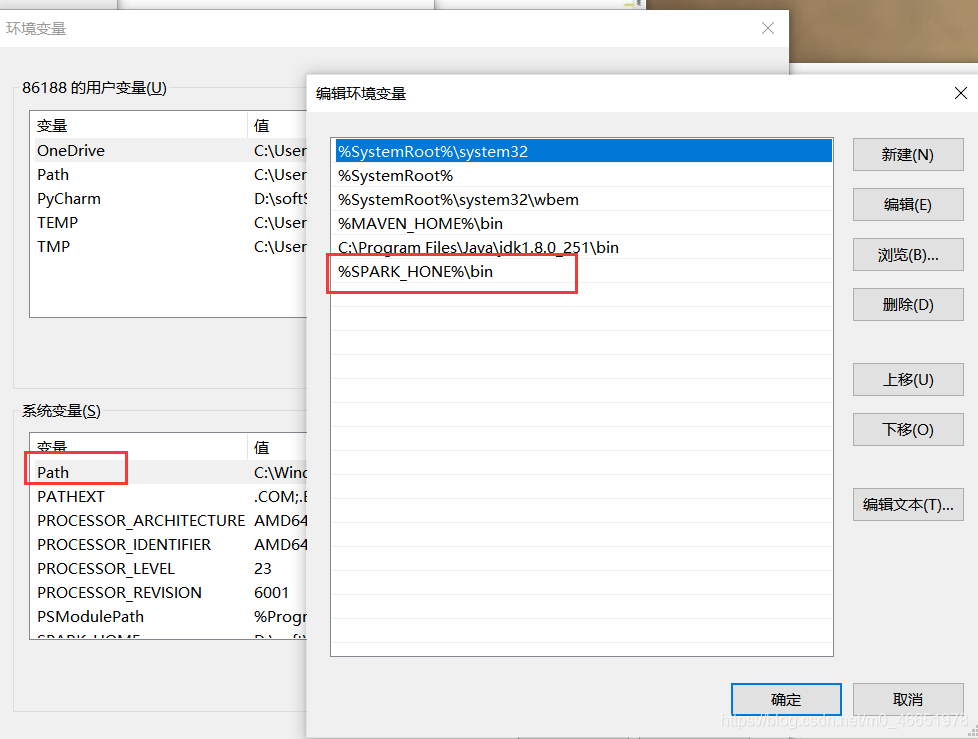

(6) Close Python and configure the Spark environment variables.
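Step (6) does not say which environment variables to set. A common setup, assumed here, is pointing SPARK_HOME at the unpacked Spark directory and putting its bin folder on PATH. The sketch below does that for the current Python process only (a permanent change still goes through the Windows system environment settings) and checks that steps (4) and (5) left conf/hive-site.xml and a MySQL driver jar in place. The helper names and the mysql-connector-java-*.jar file-name pattern are hypothetical.

```python
import glob
import os


def check_windows_spark_setup(spark_home):
    """Return a list of setup problems; an empty list means the files are in place.

    Hypothetical helper: it only checks that the files from steps (4) and (5)
    exist, it does not validate their contents.
    """
    if not os.path.isdir(spark_home):
        return ["SPARK_HOME directory not found: " + spark_home]
    problems = []
    if not os.path.isfile(os.path.join(spark_home, "conf", "hive-site.xml")):
        problems.append("conf/hive-site.xml is missing")
    # Assumed driver file-name pattern; adjust it to the jar you actually copied.
    jar_pattern = os.path.join(spark_home, "jars", "mysql-connector-java-*.jar")
    if not glob.glob(jar_pattern):
        problems.append("no MySQL JDBC driver jar found in jars/")
    return problems


def set_spark_env(spark_home):
    """Set SPARK_HOME and prepend its bin folder to PATH (this process only)."""
    os.environ["SPARK_HOME"] = spark_home
    bin_dir = os.path.join(spark_home, "bin")
    path = os.environ.get("PATH", "")
    if bin_dir not in path.split(os.pathsep):
        os.environ["PATH"] = bin_dir + os.pathsep + path


if __name__ == "__main__":
    home = r"D:\soft\spark-2.3.4-bin-hadoop2.6"
    for problem in check_windows_spark_setup(home):
        print("setup problem: " + problem)
    set_spark_env(home)
```

Running the check before creating the SparkSession turns a missing file into a readable message instead of a metastore error later on.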

Method 2

A session with Hive support and no extra configuration:

from pyspark.sql import SparkSession

if __name__ == '__main__':
    spark = SparkSession.builder.appName("test") \
        .master("local[*]") \
        .enableHiveSupport().getOrCreate()
    df = spark.sql("select * from dws_events.dws_temp_train limit 3")
    df.show()

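The change is to add hive.metastore.uris, pointing at the Hive metastore's thrift:// address. This requires the metastore service to be running on that host (for example, started with hive --service metastore):

from pyspark.sql import SparkSession

if __name__ == '__main__':
    # Point Spark at the remote Hive metastore's Thrift service.
    # (A comment cannot follow a trailing backslash, so it goes here.)
    spark = SparkSession.builder.appName("test") \
        .master("local[*]") \
        .config("hive.metastore.uris", "thrift://192.168.72.170:9083") \
        .enableHiveSupport().getOrCreate()
    df = spark.sql("select * from dws_events.dws_temp_train limit 3")
    df.show()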
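If the tables are still not visible from Windows, one thing worth ruling out is whether the metastore port can be reached at all (wrong IP, firewall, metastore service not started). The sketch below uses only the standard library to split a thrift://host:port URI and attempt a plain TCP connection; the helper names and the 3-second timeout are illustrative choices, not part of the original setup, and a successful connection only shows the port is open, not that Hive is healthy.

```python
import socket

try:  # Python 3
    from urllib.parse import urlparse
except ImportError:  # Python 2.7, the version used on the Linux side above
    from urlparse import urlparse


def parse_thrift_uri(uri):
    """Split 'thrift://host:port' into (host, port); raise ValueError otherwise."""
    parsed = urlparse(uri)
    if parsed.scheme != "thrift" or not parsed.hostname or parsed.port is None:
        raise ValueError("expected thrift://host:port, got %r" % uri)
    return parsed.hostname, parsed.port


def metastore_reachable(uri, timeout=3.0):
    """Return True if a TCP connection to the metastore host/port succeeds."""
    host, port = parse_thrift_uri(uri)
    try:
        conn = socket.create_connection((host, port), timeout=timeout)
    except (socket.error, socket.timeout):
        return False
    conn.close()
    return True


if __name__ == "__main__":
    uri = "thrift://192.168.72.170:9083"
    print("%s reachable: %s" % (uri, metastore_reachable(uri)))
```

Passing the same URI string to both this check and .config("hive.metastore.uris", ...) keeps the two from drifting apart.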

Source: oschina

Link: https://my.oschina.net/u/4392850/blog/4842024