I. Extracting Common Classes

Anyone who has written Gatling scripts knows that a script consists of three main parts:

- HTTP header configuration

- Scenario: the execution details

- setUp: the assembly

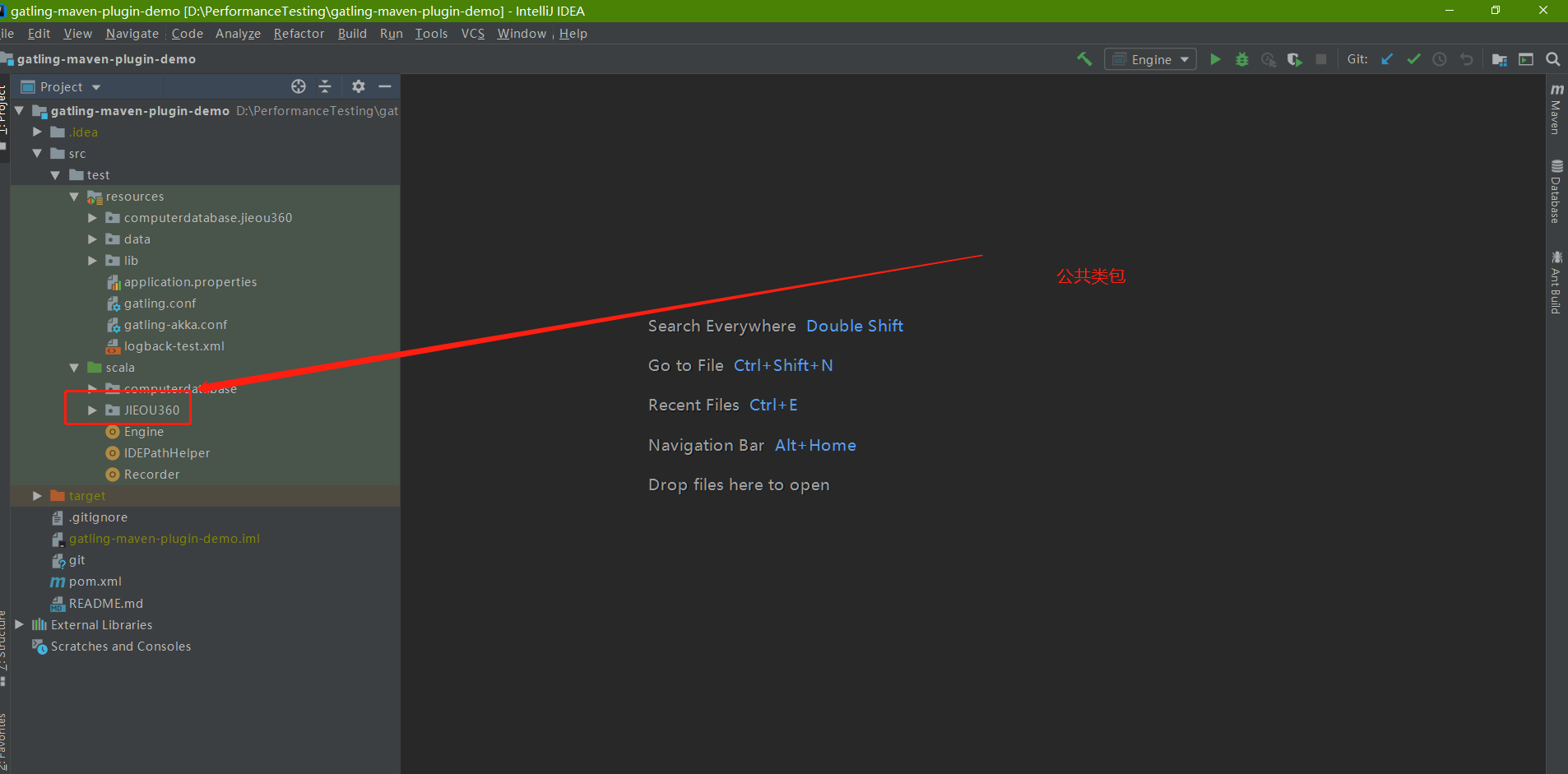

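For these three parts, an end-to-end test suite should pull the shared pieces out into common Scala classes, which avoids repeated work and redundant code. Create a separate directory in the project for them, as shown in the figure:

Define the basic HTTP protocol configuration (default headers):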

// The package matches the import used by the simulation further down (com.pharbers.gatling.base).
package com.pharbers.gatling.base

import io.gatling.core.Predef._
import io.gatling.http.Predef._
import io.gatling.http.config.HttpProtocolBuilder

object phHttpProtocol {
  // Empty lists: no resource filtering.
  implicit val noneWhiteList: io.gatling.core.filter.WhiteList = WhiteList()
  implicit val noneBlackList: io.gatling.core.filter.BlackList = BlackList()

  // Lists that match common static resources (scripts, styles, images, fonts).
  implicit val staticBlackList: io.gatling.core.filter.BlackList = BlackList(""".*\.js""", """.*\.css""", """.*\.gif""", """.*\.jpeg""", """.*\.jpg""", """.*\.ico""", """.*\.woff""", """.*\.(t|o)tf""", """.*\.png""")
  implicit val staticWhiteList: io.gatling.core.filter.WhiteList = WhiteList(""".*\.js""", """.*\.css""", """.*\.gif""", """.*\.jpeg""", """.*\.jpg""", """.*\.ico""", """.*\.woff""", """.*\.(t|o)tf""", """.*\.png""")

  // Builds the shared protocol for a host; the two lists are taken from the implicits the caller imports.
  def apply(host: String)(
    implicit blackLst: io.gatling.core.filter.BlackList,
    whiteLst: io.gatling.core.filter.WhiteList
  ): HttpProtocolBuilder = {
    http.baseURL(host)
      .inferHtmlResources(blackLst, whiteLst)
      .acceptHeader("application/json, text/javascript, */*; q=0.01")
      .acceptEncodingHeader("gzip, deflate")
      .acceptLanguageHeader("zh-CN,zh;q=0.9,zh-TW;q=0.8")
      .doNotTrackHeader("1")
      .userAgentHeader("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36")
  }
}
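To see which requests the static lists would catch, the patterns can be tried against sample URLs in plain Scala. This is only a minimal sketch, and it assumes that a pattern applies when it matches the entire URL. `StaticFilterSketch`, `isStatic`, and the sample URLs are hypothetical names used for illustration; they are not part of Gatling.

```scala
object StaticFilterSketch {
  // Same patterns as staticBlackList / staticWhiteList in phHttpProtocol.
  val staticPatterns: Seq[String] = Seq(
    """.*\.js""", """.*\.css""", """.*\.gif""", """.*\.jpeg""", """.*\.jpg""",
    """.*\.ico""", """.*\.woff""", """.*\.(t|o)tf""", """.*\.png"""
  )

  // Assumption: a pattern applies only when it matches the whole URL.
  def isStatic(url: String): Boolean =
    staticPatterns.exists(pattern => url.matches(pattern))

  def main(args: Array[String]): Unit = {
    // Made-up URLs, used only to exercise the patterns.
    val samples = Seq(
      "http://example.test/assets/app.js",
      "http://example.test/fonts/icons.ttf",
      "http://example.test/api/user/login"
    )
    samples.foreach(url => println(s"$url static=${isStatic(url)}"))
  }
}
```

A check like this is a cheap way to confirm that API calls such as `/api/user/login` are not accidentally filtered before the lists are passed to `inferHtmlResources`.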

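Define the request headers:

package com.pharbers.gatling.base

object phHeaders {
  // Headers for ordinary page requests.
  val headers_base = Map("Accept" -> "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8", "Upgrade-Insecure-Requests" -> "1")

  // Assumed definition: userLogin imports headers_json, but the original does not show it.
  val headers_json = Map("Content-Type" -> "application/json")
}

Define the request for the home endpoint: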

package com.pharbers.gatling.scenario

import io.gatling.core.Predef._
import io.gatling.http.Predef._
import io.gatling.core.structure.ChainBuilder
import com.pharbers.gatling.base.phHeaders.headers_base

object getHome {
  val getHome: ChainBuilder = exec(http("home").get("/").headers(headers_base))
}

Define the request for the login endpoint:

package com.pharbers.gatling.scenario

import io.gatling.core.Predef._
import io.gatling.http.Predef._
import io.gatling.core.structure.ChainBuilder
import com.pharbers.gatling.base.phHeaders.headers_json

object userLogin {
  // Feeder that picks random rows from loginUser.csv (defined here, but not fed into the request below).
  val feeder = csv("loginUser.csv").random
  println(feeder)

  // Kept as GET, as in the original; use .post instead if the endpoint expects the JSON body in a POST.
  val login: ChainBuilder = exec(http("login")
    .get("/api/user/login").headers(headers_json)
    .body(StringBody("""{ "condition" : { "email" : "nhwa", "password" : "nhwa" } }""")).asJSON)
}

A complete script:

package com.pharbers.gatling.simulation

import io.gatling.core.Predef._
import scala.concurrent.duration._
import com.pharbers.gatling.scenario._
import com.pharbers.gatling.base.phHttpProtocol
import com.pharbers.gatling.base.phHttpProtocol.{noneBlackList, noneWhiteList}

class userLogin extends Simulation {
  // Shared protocol; the imported empty lists are picked up as the implicit arguments.
  val httpProtocol = phHttpProtocol("http://192.168.100.141:9000")

  // Visit the home page, pause 5 s, log in, then pause 60 s.
  val scn = scenario("user_login")
    .exec(getHome.getHome.pause(5 seconds), userLogin.login.pause(60 seconds))

  // Ramp up to 1000 users over 3 seconds.
  setUp(scn.inject(rampUsers(1000) over (3 seconds))).protocols(httpProtocol)
}

Doesn't the script look much cleaner this way?

II. Common APIs

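Controlling concurrency:

| Function | Description |
| --- | --- |
| atOnceUsers(100) | Hit the target server with 100 concurrent users at once |
| rampUsers(100) over (10 seconds) | Increase the load gradually: add users linearly over 10 s until the full load of 100 concurrent users is reached |
| nothingFor(10 seconds) | Wait for 10 s |
| constantUsersPerSec(rate) during(duration) | Inject users at a constant rate of rate users per second for the given duration, at regular intervals by default |
| constantUsersPerSec(rate) during(duration) randomized | Same as above, except that users are injected at randomized intervals |
| rampUsersPerSec(rate1) to (rate2) during(duration) | Inject users at an increasing rate for the given duration, going from rate1 to rate2 users per second |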
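As a rough aid for sizing a test, the number of users each profile in the table injects can be estimated with simple arithmetic: a constant rate injects rate × duration users, and a linear ramp injects the average of the two rates × duration. The sketch below models this in plain Scala. The `Step` types and `totalUsers` are hypothetical stand-ins, not Gatling classes, and Gatling's own rounding may differ slightly.

```scala
import scala.concurrent.duration._

object InjectionMathSketch {
  // Hypothetical model of the injection steps in the table; these are not Gatling classes.
  sealed trait Step
  final case class AtOnce(users: Int) extends Step
  final case class Ramp(users: Int, over: FiniteDuration) extends Step
  final case class Idle(during: FiniteDuration) extends Step
  final case class ConstantRate(rate: Double, during: FiniteDuration) extends Step
  final case class RampRate(from: Double, to: Double, during: FiniteDuration) extends Step

  // Approximate number of users injected by a single step.
  def totalUsers(step: Step): Double = step match {
    case AtOnce(users)              => users.toDouble
    case Ramp(users, _)             => users.toDouble
    case Idle(_)                    => 0.0
    case ConstantRate(rate, during) => rate * during.toSeconds
    case RampRate(from, to, during) => (from + to) / 2 * during.toSeconds
  }

  // Approximate number of users injected by a whole profile.
  def totalUsers(steps: Seq[Step]): Double = steps.map(totalUsers).sum

  def main(args: Array[String]): Unit = {
    val profile = Seq(
      AtOnce(100),
      Ramp(100, 10.seconds),
      Idle(10.seconds),
      ConstantRate(20, 15.seconds),
      RampRate(10, 20, 10.seconds)
    )
    println(s"approximate users injected: ${totalUsers(profile)}")
  }
}
```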

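Controlling user behavior:

| Function | Description |
| --- | --- |
| exec() | The actual user action |
| pause(20) | The user pauses for 20 s, simulating think time or reading content |
| pause(min: Duration, max: Duration) | The user pauses for a random time between min and max |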

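Flow control:

| Function | Description |
| --- | --- |
| repeat(times, counterName) | Built-in loop that repeats a chain a fixed number of times |
| foreach(seq, elem, counterName) | Loop over each element of a sequence |
| csv("file").random | Creates a feeder |
| doIf("", "") | Conditional execution |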

Data operations:

JDBC data

jdbcFeeder("databaseUrl", "username", "password", "SELECT * FROM users")

Redis data

import com.redis._
import io.gatling.redis.Predef._

val redisPool = new RedisClientPool("localhost", 6379)

// The three feeder definitions below are alternatives; keep only one of them.

// use a list, so there's one single value per record, which is here named "foo"
// same as redisFeeder(redisPool, "foo").LPOP
val feeder = redisFeeder(redisPool, "foo")

// read data using SPOP command from a set named "foo"
val feeder = redisFeeder(redisPool, "foo").SPOP

// read data using SRANDMEMBER command from a set named "foo"
val feeder = redisFeeder(redisPool, "foo").SRANDMEMBER

File data

import java.io.{ File, PrintWriter }
import io.gatling.redis.util.RedisHelper._

// Writes LPUSH commands in Redis protocol format to a file, so the data can be loaded into Redis in bulk.
def generateOneMillionUrls(): Unit = {
  val writer = new PrintWriter(new File("/tmp/loadtest.txt"))
  try {
    for (i <- 0 to 1000000) {
      val url = "test?id=" + i
      // note the list name "URLS" here
      writer.write(generateRedisProtocol("LPUSH", "URLS", url))
    }
  } finally {
    writer.close()
  }
}

Iterating over data

import io.gatling.core.feeder._
import java.util.concurrent.ThreadLocalRandom

// index records by project
val recordsByProject: Map[String, Seq[Record[Any]]] =
  csv("projectIssue.csv").readRecords.groupBy { record => record("project").toString }

// convert the Map values to get only the issues instead of the full records
val issuesByProject: Map[String, Seq[Any]] =
  recordsByProject.mapValues { records => records.map { record => record("issue") } }

// inject project
feed(csv("userProject.csv"))
  .exec { session =>
    // fetch project from session
    session("project").validate[String].map { project =>
      // fetch project's issues
      val issues = issuesByProject(project)
      // randomly select an issue
      val selectedIssue = issues(ThreadLocalRandom.current.nextInt(issues.length))
      // inject the issue in the session
      session.set("issue", selectedIssue)
    }
  }
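The indexing step above can be tried without Gatling or any CSV files. The following is a minimal sketch in plain Scala that uses in-memory rows in place of `csv("projectIssue.csv").readRecords`. `IssueLookupSketch`, `pickIssue`, and the sample rows are made up for illustration. Unlike the snippet above, it returns `None` for an unknown project instead of throwing.

```scala
import scala.util.Random

object IssueLookupSketch {
  type Record = Map[String, String]

  // Stand-in for csv("projectIssue.csv").readRecords; the rows are made up.
  val records: Seq[Record] = Seq(
    Map("project" -> "alpha", "issue" -> "A-1"),
    Map("project" -> "alpha", "issue" -> "A-2"),
    Map("project" -> "beta", "issue" -> "B-1")
  )

  // Index by project, then keep only the issue column of each row.
  val issuesByProject: Map[String, Seq[String]] =
    records
      .groupBy(record => record("project"))
      .map { case (project, rows) => project -> rows.map(row => row("issue")) }

  // Pick a random issue of the given project, or None if the project is unknown.
  def pickIssue(project: String, random: Random): Option[String] =
    issuesByProject.get(project).filter(_.nonEmpty).map { issues =>
      issues(random.nextInt(issues.length))
    }

  def main(args: Array[String]): Unit = {
    val random = new Random()
    println(pickIssue("alpha", random))
    println(pickIssue("unknown", random))
  }
}
```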

Source: oschina

Link: https://my.oschina.net/u/4286318/blog/3320702