1 Introduction

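The two-layer (stacked) LSTM network and the bidirectional LSTM network below are both built with Keras, and both use the LSTM layer. Its main parameters are: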

LSTM(units,input_shape,return_sequences=False)

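- units: the number of hidden units, i.e. the dimensionality of the layer's output at each time step

- input_shape=(time_step, input_feature): time_step is the number of time steps the recurrence runs over, and input_feature is the dimensionality of the input at each time step

- return_sequences: if True, the output at every time step is returned, giving shape (batch, time_step, units); if False (the default), only the output at the last time step is returned, giving shape (batch, units)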
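The following minimal NumPy sketch of a single LSTM layer's forward pass makes `units` and `return_sequences` concrete. It is not Keras's implementation. The weights are random, the helper name `lstm_forward` is hypothetical, and the gate blocks are assumed to be stacked along the last axis in the order input, forget, cell, output. The point is only how the output shape depends on `return_sequences`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x, W, U, b, return_sequences=False):
    """Run one LSTM layer over x of shape (batch, time_step, input_feature).

    W: (input_feature, 4*units), U: (units, 4*units), b: (4*units,)
    Gate blocks along the last axis are assumed to be ordered i, f, c, o.
    """
    batch, time_step, _ = x.shape
    units = U.shape[0]
    h = np.zeros((batch, units))
    c = np.zeros((batch, units))
    outputs = []
    for t in range(time_step):
        z = x[:, t, :] @ W + h @ U + b          # (batch, 4*units)
        i = sigmoid(z[:, :units])                # input gate
        f = sigmoid(z[:, units:2 * units])       # forget gate
        g = np.tanh(z[:, 2 * units:3 * units])   # candidate cell state
        o = sigmoid(z[:, 3 * units:])            # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    if return_sequences:
        return np.stack(outputs, axis=1)         # (batch, time_step, units)
    return h                                     # (batch, units): last time step only

rng = np.random.default_rng(0)
time_step, input_feature, units = 28, 28, 64
x = rng.standard_normal((5, time_step, input_feature))
W = rng.standard_normal((input_feature, 4 * units)) * 0.1
U = rng.standard_normal((units, 4 * units)) * 0.1
b = np.zeros(4 * units)

seq = lstm_forward(x, W, U, b, return_sequences=True)
last = lstm_forward(x, W, U, b, return_sequences=False)
print(seq.shape)   # (5, 28, 64)
print(last.shape)  # (5, 64)
```

With `return_sequences=False`, the result is the last slice of the full sequence, `seq[:, -1, :]`. The next LSTM layer expects a 3-D input, so any LSTM layer that feeds another LSTM layer must set `return_sequences=True`.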

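This article uses MNIST handwritten-digit classification to show how to implement a two-layer LSTM network and a bidirectional LSTM network. Each 28×28 image is fed to the network as a sequence of 28 time steps (the image rows), each with 28 input features (the pixels in that row). For a description of the MNIST dataset, see 使用TensorFlow实现MNIST数据集分类 (Implementing MNIST classification with TensorFlow).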
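This snippet uses a made-up array, not real MNIST pixels. It checks that reshaping a flattened 784-pixel image to `(28, 28)`, as `reshape(-1, 28, 28)` does, makes time step `t` equal to image row `t`: a contiguous run of 28 pixels.

```python
import numpy as np

# A fake batch of 2 flattened 28x28 "images" (values are indices, not real pixels)
flat = np.arange(2 * 784, dtype=np.float32).reshape(2, 784)

# (batch, 784) -> (batch, time_step=28, input_feature=28)
seq = flat.reshape(-1, 28, 28)

t = 5
# Time step t of the sequence is image row t: pixels 28*t .. 28*t + 27
assert np.array_equal(seq[0, t], flat[0, 28 * t:28 * t + 28])
print(seq.shape)  # (2, 28, 28)
```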

My workspace is organized as follows:

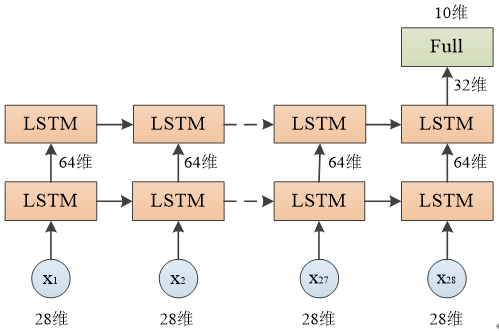

2 Two-layer LSTM network

DoubleLSTM.py

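```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Dense, LSTM
from tensorflow.keras.utils import to_categorical

# Load the data.
# tensorflow.examples.tutorials.mnist is not available in TensorFlow 2.x, so this uses
# keras.datasets.mnist and holds out the first 5000 training images for validation,
# reproducing the 55000/5000/10000 split of the old loader.
def read_data():
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    # Scale pixels to [0, 1]; each image is (28, 28): 28 time steps x 28 features
    x_train = x_train.astype('float32') / 255.0
    x_test = x_test.astype('float32') / 255.0
    # One-hot encode the labels for categorical_crossentropy
    y_train = to_categorical(y_train, 10)
    y_test = to_categorical(y_test, 10)
    valid_x, valid_y = x_train[:5000], y_train[:5000]
    train_x, train_y = x_train[5000:], y_train[5000:]
    return train_x, train_y, valid_x, valid_y, x_test, y_test

# Two-layer (stacked) LSTM model
def DoubleLSTM(train_x, train_y, valid_x, valid_y, test_x, test_y):
    # Build the model
    model = Sequential()
    model.add(Input(shape=(28, 28)))              # (time_step, input_feature)
    model.add(LSTM(64, return_sequences=True))    # return the output at every time step
    model.add(LSTM(32, return_sequences=False))   # return only the last time step's output
    model.add(Dense(10, activation='softmax'))
    # Inspect the network structure
    model.summary()
    # Compile the model
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
    # Train the model (epochs replaces the old nb_epoch argument)
    model.fit(train_x, train_y, batch_size=500, epochs=15, verbose=1,
              validation_data=(valid_x, valid_y))
    # Evaluate the model on the test set
    pre = model.evaluate(test_x, test_y, batch_size=500, verbose=1)
    print('test_loss:', pre[0], '- test_acc:', pre[1])

train_x, train_y, valid_x, valid_y, test_x, test_y = read_data()
DoubleLSTM(train_x, train_y, valid_x, valid_y, test_x, test_y)
```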

Output shape of each layer:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
lstm_5 (LSTM)                (None, 28, 64)            23808
_________________________________________________________________
lstm_6 (LSTM)                (None, 32)                12416
_________________________________________________________________
dense_5 (Dense)              (None, 10)                330
=================================================================
Total params: 36,554
Trainable params: 36,554
Non-trainable params: 0
```

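The first LSTM layer sets return_sequences=True, so it returns its output at every time step and its output shape is (None, 28, 64).

The second LSTM layer sets return_sequences=False, so it returns only the output at the last time step and its output shape is (None, 32).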
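The `Param #` column can be checked by hand. An LSTM layer has four gates. Each gate has an input kernel (`input_dim × units`), a recurrent kernel (`units × units`) and a bias (`units`), so the layer has 4 × (units × (input_dim + units) + units) parameters. A `Dense` layer has `input_dim × units + units`. The helper names below are illustrative only.

```python
def lstm_params(input_dim, units):
    # 4 gates, each with an input kernel, a recurrent kernel and a bias vector
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    # weight matrix plus bias vector
    return input_dim * units + units

layer1 = lstm_params(28, 64)   # input_feature = 28 pixels per row
layer2 = lstm_params(64, 32)   # input is layer 1's 64-dim output at each step
dense = dense_params(32, 10)   # 10 digit classes
print(layer1, layer2, dense, layer1 + layer2 + dense)  # 23808 12416 330 36554
```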

Training results (last three epochs and the test-set evaluation):

```
Epoch 13/15
55000/55000 [==============================] - 17s 308us/step - loss: 0.0651 - acc: 0.9810 - val_loss: 0.0658 - val_acc: 0.9780
Epoch 14/15
55000/55000 [==============================] - 17s 314us/step - loss: 0.0599 - acc: 0.9825 - val_loss: 0.0655 - val_acc: 0.9818
Epoch 15/15
55000/55000 [==============================] - 19s 339us/step - loss: 0.0585 - acc: 0.9824 - val_loss: 0.0607 - val_acc: 0.9802
10000/10000 [==============================] - 1s 69us/step
test_loss: 0.07325475453399122 - test_acc: 0.97950000166893
```

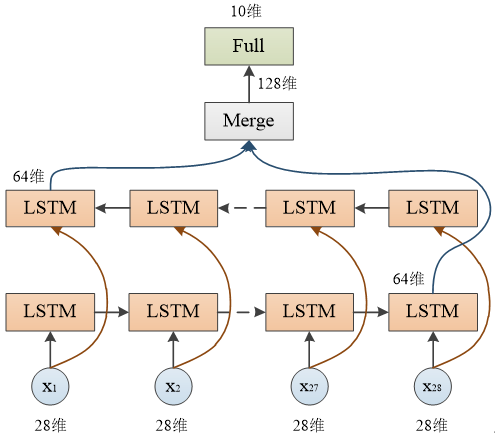

3 Bidirectional LSTM network

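```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Dense, LSTM, Bidirectional
from tensorflow.keras.utils import to_categorical

# Load the data.
# tensorflow.examples.tutorials.mnist is not available in TensorFlow 2.x, so this uses
# keras.datasets.mnist and holds out the first 5000 training images for validation,
# reproducing the 55000/5000/10000 split of the old loader.
def read_data():
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    # Scale pixels to [0, 1]; each image is (28, 28): 28 time steps x 28 features
    x_train = x_train.astype('float32') / 255.0
    x_test = x_test.astype('float32') / 255.0
    # One-hot encode the labels for categorical_crossentropy
    y_train = to_categorical(y_train, 10)
    y_test = to_categorical(y_test, 10)
    valid_x, valid_y = x_train[:5000], y_train[:5000]
    train_x, train_y = x_train[5000:], y_train[5000:]
    return train_x, train_y, valid_x, valid_y, x_test, y_test

# Bidirectional LSTM model
def BiLSTM(train_x, train_y, valid_x, valid_y, test_x, test_y):
    # Build the model
    model = Sequential()
    model.add(Input(shape=(28, 28)))          # (time_step, input_feature)
    lstm = LSTM(64, return_sequences=False)   # return only the last time step's output
    model.add(Bidirectional(lstm))            # bidirectional LSTM
    model.add(Dense(10, activation='softmax'))
    # Inspect the network structure (the input shape is declared, so this works before training)
    model.summary()
    # Compile the model
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
    # Train the model (epochs replaces the old nb_epoch argument)
    model.fit(train_x, train_y, batch_size=500, epochs=15, verbose=1,
              validation_data=(valid_x, valid_y))
    # Evaluate the model on the test set
    pre = model.evaluate(test_x, test_y, batch_size=500, verbose=1)
    print('test_loss:', pre[0], '- test_acc:', pre[1])

train_x, train_y, valid_x, valid_y, test_x, test_y = read_data()
BiLSTM(train_x, train_y, valid_x, valid_y, test_x, test_y)
```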

Output shape of each layer:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
bidirectional_5 (Bidirection (None, 128)               47616
_________________________________________________________________
dense_6 (Dense)              (None, 10)                1290
=================================================================
Total params: 48,906
Trainable params: 48,906
Non-trainable params: 0
```

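The LSTM layer sets return_sequences=False, so only its output at the last time step is returned. Bidirectional runs the LSTM twice over the sequence, once forward and once backward. Each direction returns a 64-dimensional vector, and the two are concatenated (the default merge_mode='concat') into 128 dimensions. The output shape is therefore (None, 128).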
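The NumPy sketch below shows the idea behind `Bidirectional`, not Keras's code. It runs two independently weighted LSTMs, one over the rows in order and one over the time-reversed rows, and concatenates their final outputs. The weights are random, the helper names `init_lstm` and `lstm_last` are hypothetical, and the gate order i, f, c, o is an assumption. The sketch reproduces the 128-dimensional output and the 47,616 parameter count.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_lstm(rng, input_dim, units):
    # kernel, recurrent kernel and bias, each holding the 4 gate blocks side by side
    return (rng.standard_normal((input_dim, 4 * units)) * 0.1,
            rng.standard_normal((units, 4 * units)) * 0.1,
            np.zeros(4 * units))

def lstm_last(x, W, U, b):
    # Returns only the final hidden state, as with return_sequences=False
    units = U.shape[0]
    h = np.zeros((x.shape[0], units))
    c = np.zeros_like(h)
    for t in range(x.shape[1]):
        z = x[:, t, :] @ W + h @ U + b
        i, f = sigmoid(z[:, :units]), sigmoid(z[:, units:2 * units])
        g, o = np.tanh(z[:, 2 * units:3 * units]), sigmoid(z[:, 3 * units:])
        c = f * c + i * g
        h = o * np.tanh(c)
    return h

rng = np.random.default_rng(0)
forward = init_lstm(rng, 28, 64)    # separate weights for each direction
backward = init_lstm(rng, 28, 64)

x = rng.standard_normal((5, 28, 28))           # (batch, time_step, input_feature)
h_fwd = lstm_last(x, *forward)                  # reads rows 0 -> 27
h_bwd = lstm_last(x[:, ::-1, :], *backward)     # reads rows 27 -> 0
out = np.concatenate([h_fwd, h_bwd], axis=-1)   # merge_mode='concat'

n_params = sum(w.size for w in forward + backward)
dense = 128 * 10 + 10                           # Dense(10) on the 128-dim vector
print(out.shape, n_params, n_params + dense)    # (5, 128) 47616 48906
```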

Training results (last three epochs):

```
Epoch 13/15
55000/55000 [==============================] - 19s 337us/step - loss: 0.0525 - acc: 0.9837 - val_loss: 0.0616 - val_acc: 0.9800
Epoch 14/15
55000/55000 [==============================] - 16s 299us/step - loss: 0.0454 - acc: 0.9854 - val_loss: 0.0593 - val_acc: 0.9818
Epoch 15/15
55000/55000 [==============================] - 19s 336us/step - loss: 0.0422 - acc: 0.9874 - val_loss: 0.0592 - val_acc: 0.9820
```

Source: CSDN

Author: little_fat_sheep

Link: https://blog.csdn.net/m0_37602827/article/details/104790741