I. Hadoop standalone (single-node) mode

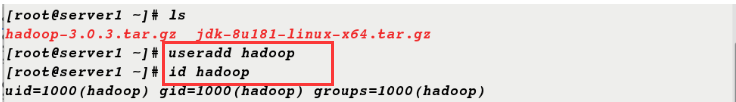

1. Create the hadoop user and set its password

[root@server1 ~]# ls

hadoop-3.0.3.tar.gz jdk-8u181-linux-x64.tar.gz

[root@server1 ~]# useradd hadoop

[root@server1 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) groups=1000(hadoop)

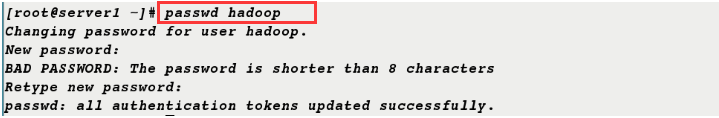

[root@server1 ~]# passwd hadoop

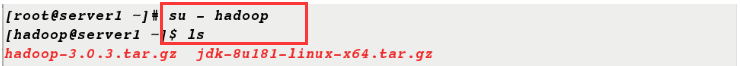

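2. Installing and configuring Hadoop as the superuser (root) is not recommended, so move the archives to the hadoop user's home directory and switch to that user before configuring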

[root@server1 ~]# mv * /home/hadoop/

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ ls

hadoop-3.0.3.tar.gz jdk-8u181-linux-x64.tar.gz

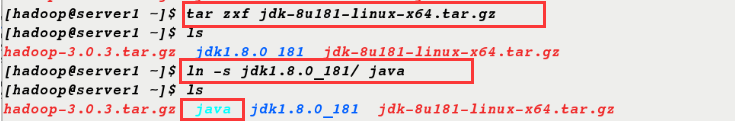

3. As the hadoop user, extract the JDK archive and create a symbolic link to it

[hadoop@server1 ~]$ tar zxf jdk-8u181-linux-x64.tar.gz

[hadoop@server1 ~]$ ls

hadoop-3.0.3.tar.gz jdk1.8.0_181 jdk-8u181-linux-x64.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.8.0_181/ java

[hadoop@server1 ~]$ ls

hadoop-3.0.3.tar.gz java jdk1.8.0_181 jdk-8u181-linux-x64.tar.gz

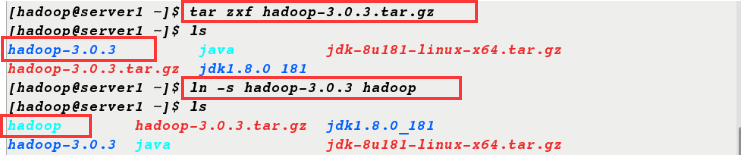

4. As the hadoop user, extract the Hadoop archive and create a symbolic link to it

[hadoop@server1 ~]$ tar zxf hadoop-3.0.3.tar.gz

[hadoop@server1 ~]$ ls

hadoop-3.0.3 java jdk-8u181-linux-x64.tar.gz

hadoop-3.0.3.tar.gz jdk1.8.0_181

[hadoop@server1 ~]$ ln -s hadoop-3.0.3 hadoop

[hadoop@server1 ~]$ ls

hadoop hadoop-3.0.3.tar.gz jdk1.8.0_181

hadoop-3.0.3 java jdk-8u181-linux-x64.tar.gz

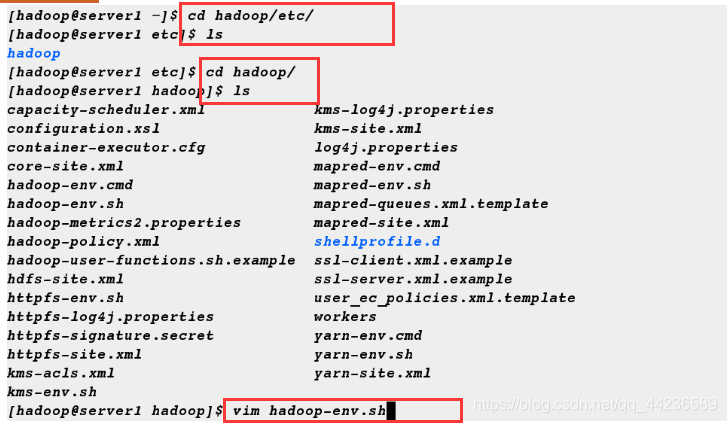

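5. Configure environment variables: set JAVA_HOME in hadoop-env.sh and add the JDK's bin directory to PATH in .bash_profile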

[hadoop@server1 ~]$ cd hadoop/etc/

[hadoop@server1 etc]$ ls

hadoop

[hadoop@server1 etc]$ cd hadoop/

[hadoop@server1 hadoop]$ ls

capacity-scheduler.xml kms-log4j.properties

configuration.xsl kms-site.xml

container-executor.cfg log4j.properties

core-site.xml mapred-env.cmd

hadoop-env.cmd mapred-env.sh

hadoop-env.sh mapred-queues.xml.template

hadoop-metrics2.properties mapred-site.xml

hadoop-policy.xml shellprofile.d

hadoop-user-functions.sh.example ssl-client.xml.example

hdfs-site.xml ssl-server.xml.example

httpfs-env.sh user_ec_policies.xml.template

httpfs-log4j.properties workers

httpfs-signature.secret yarn-env.cmd

httpfs-site.xml yarn-env.sh

kms-acls.xml yarn-site.xml

kms-env.sh

[hadoop@server1 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/home/hadoop/java

[hadoop@server1 ~]$ vim .bash_profile

PATH=$PATH:$HOME/.local/bin:$HOME/bin:$HOME/java/bin

[hadoop@server1 ~]$ source .bash_profile

[hadoop@server1 ~]$ jps ## if the configuration succeeded, jps can now be run

1125 Jps

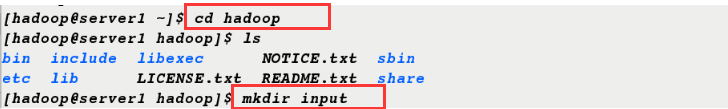

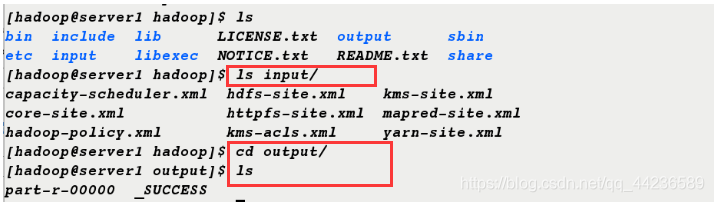
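Before going further, it can help to confirm that the directory assigned to JAVA_HOME really contains a usable JDK. The following is a minimal sketch; `check_java_home` is a hypothetical helper name, not part of Hadoop or the JDK.

```shell
#!/bin/sh
# check_java_home is a hypothetical helper, not a Hadoop command.
# It succeeds when DIR/bin/java exists and is executable.
check_java_home() {
    dir=$1
    if [ -x "$dir/bin/java" ]; then
        echo "OK: $dir/bin/java is executable"
        return 0
    fi
    echo "ERROR: no executable java under $dir/bin" >&2
    return 1
}

# Example call, assuming the symlink created in step 3:
# check_java_home /home/hadoop/java
```

Because the check follows symbolic links, it works with the `java` link as well as with the real `jdk1.8.0_181` directory.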

6. In the hadoop directory, create a directory named input and copy every file ending in .xml from etc/hadoop/ into it

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ ls

bin include libexec NOTICE.txt sbin

etc lib LICENSE.txt README.txt share

[hadoop@server1 hadoop]$ mkdir input

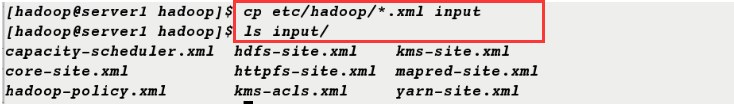

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input

[hadoop@server1 hadoop]$ ls input/

capacity-scheduler.xml hdfs-site.xml kms-site.xml

core-site.xml httpfs-site.xml mapred-site.xml

hadoop-policy.xml kms-acls.xml yarn-site.xml

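7. Run the bundled grep example job, which searches the files in input for strings matching the regular expression dfs[a-z.]+ and writes the results to a new output directory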

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.3.jar grep input output 'dfs[a-z.]+'
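To get a feel for what this job computes, the same counting can be approximated locally with standard tools. This is only a sketch of the idea, not how the MapReduce example is implemented; `count_matches` is a hypothetical helper name.

```shell
#!/bin/sh
# count_matches is a hypothetical helper that approximates the grep example:
# it extracts every match of an extended regex and counts identical matches.
count_matches() {
    # $1: extended regular expression, remaining arguments: files to scan
    pattern=$1
    shift
    grep -ohE "$pattern" "$@" \
        | sort \
        | uniq -c \
        | sort -rn \
        | awk '{print $1 "\t" $2}'   # one "count<TAB>match" line per match
}

# Example call from the hadoop directory, assuming step 6 has been done:
# count_matches 'dfs[a-z.]+' input/*.xml
```

Comparing this with the contents of output/part-r-00000 is a cheap way to check that the job ran over the files you expected.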

8. View the contents of the output directory

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt output sbin

etc input libexec NOTICE.txt README.txt share

[hadoop@server1 hadoop]$ ls input/

capacity-scheduler.xml hdfs-site.xml kms-site.xml

core-site.xml httpfs-site.xml mapred-site.xml

hadoop-policy.xml kms-acls.xml yarn-site.xml

[hadoop@server1 hadoop]$ cd output/

[hadoop@server1 output]$ ls

part-r-00000 _SUCCESS

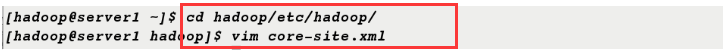

II. Pseudo-distributed mode

1. Edit the configuration files

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

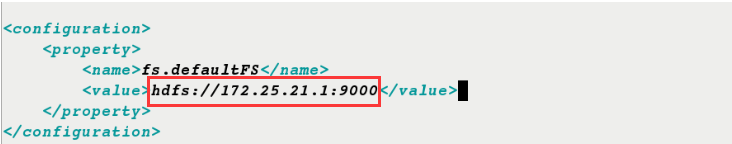

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.25.21.1:9000</value>

</property>

</configuration>

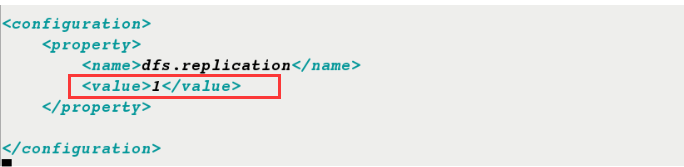

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

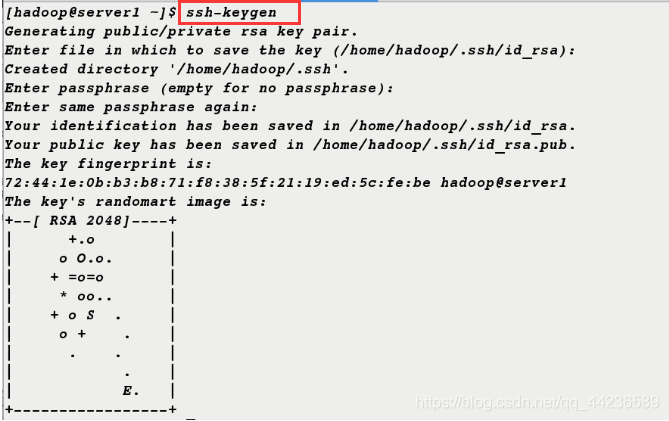
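If the same setting has to be reproduced on another machine, the file can also be generated instead of edited by hand. The sketch below writes a minimal core-site.xml; `write_core_site` is a hypothetical helper name, and the host and port are passed in as arguments rather than assumed.

```shell
#!/bin/sh
# write_core_site is a hypothetical helper, not a Hadoop command.
# It writes a minimal core-site.xml that sets only fs.defaultFS.
write_core_site() {
    # $1: NameNode host or IP, $2: NameNode RPC port, $3: output file
    cat > "$3" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://$1:$2</value>
  </property>
</configuration>
EOF
}

# Example call with the values used in this post:
# write_core_site 172.25.21.1 9000 etc/hadoop/core-site.xml
# The effective value can then be checked with:
# bin/hdfs getconf -confKey fs.defaultFS
```

Note that this overwrites the target file, so it is only suitable when the file holds no other properties you want to keep.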

2. Generate an SSH key pair and set up passwordless login

[hadoop@server1 ~]$ ssh-keygen

[hadoop@server1 ~]$ ssh-copy-id localhost

[hadoop@server1 ~]$ ssh-copy-id 172.25.21.1

[hadoop@server1 ~]$ ssh-copy-id server1

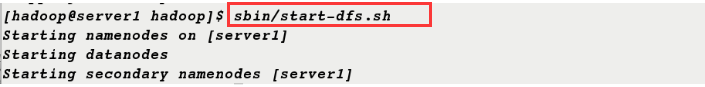

3. Format the NameNode and start the HDFS services

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

Starting datanodes

Starting secondary namenodes [server1]

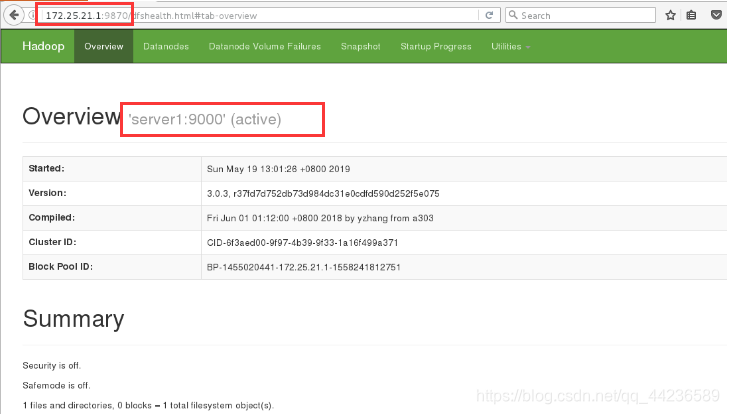

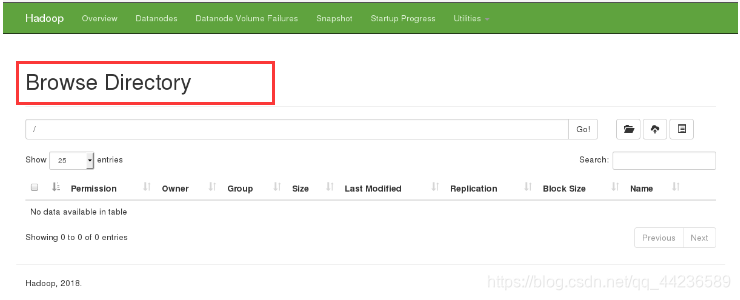

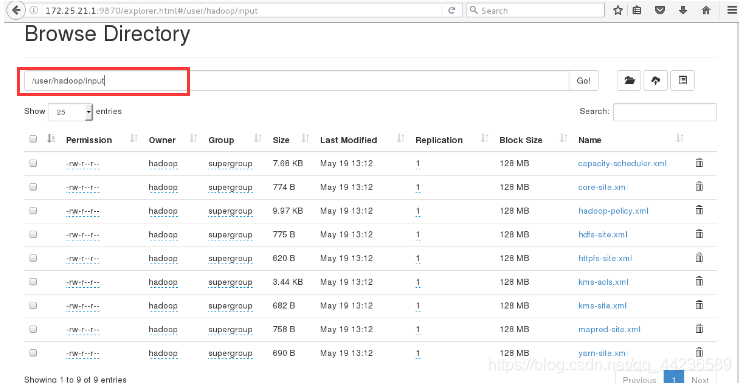

4. Open 172.25.21.1:9870 in a browser

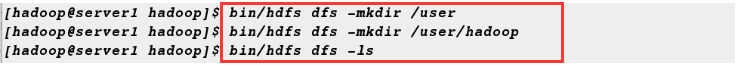

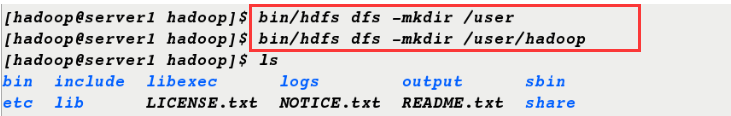

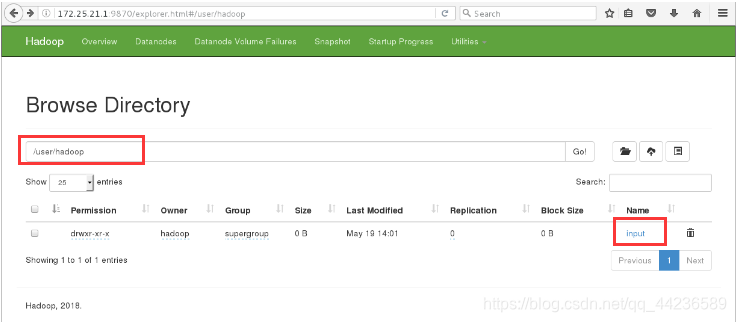

5. Create the hadoop user's home directory (/user/hadoop) in HDFS; the new directory is visible in the browser

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls

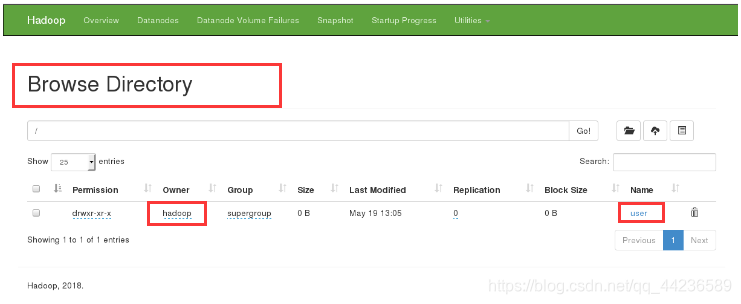

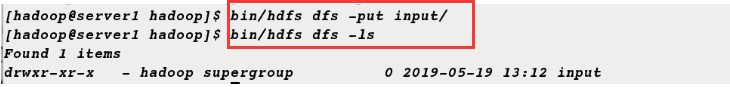

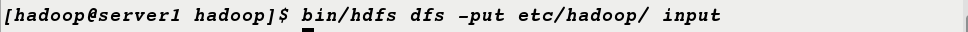

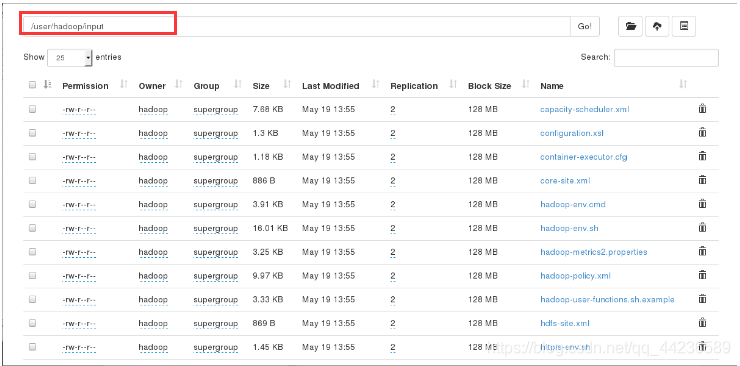

6. Upload the input/ directory; its contents are visible in the browser

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input/

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2019-05-19 13:12 input

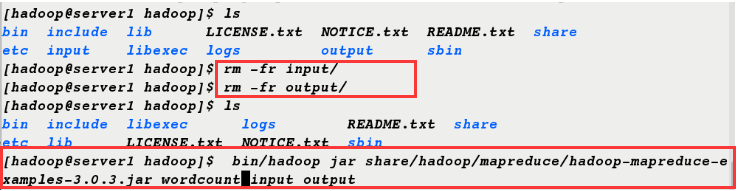

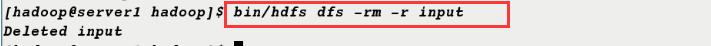

7. Delete the local input and output directories, then run an example job again

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs output sbin

[hadoop@server1 hadoop]$ rm -fr input/

[hadoop@server1 hadoop]$ rm -fr output/

[hadoop@server1 hadoop]$ ls

bin include libexec logs README.txt share

etc lib LICENSE.txt NOTICE.txt sbin

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.3.jar wordcount input output ## wordcount counts how often each word occurs

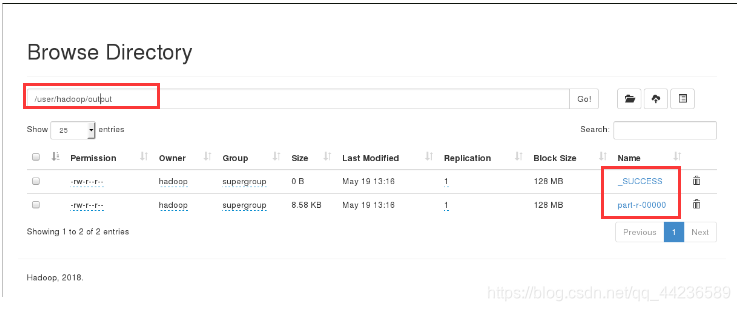
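What wordcount produces can be approximated on a small local file with a short pipeline. This is a sketch of the idea only, assuming words are separated by whitespace; `word_count` is a hypothetical helper name, not part of Hadoop.

```shell
#!/bin/sh
# word_count is a hypothetical helper that approximates the wordcount example:
# it splits input on whitespace and prints "word<TAB>count", sorted by word.
word_count() {
    cat "$@" \
        | tr -s '[:space:]' '\n' \
        | grep -v '^$' \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{print $2 "\t" $1}'
}

# Example call on one of the configuration files:
# word_count etc/hadoop/core-site.xml
```

The `LC_ALL=C` setting makes the sort order byte-wise, which keeps the result stable across locales.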

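8. This time input and output do not appear in the current directory: the job read input from the distributed file system and wrote output back to it, which can be confirmed in the browser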

[hadoop@server1 hadoop]$ ls

bin include libexec logs README.txt share

etc lib LICENSE.txt NOTICE.txt sbin

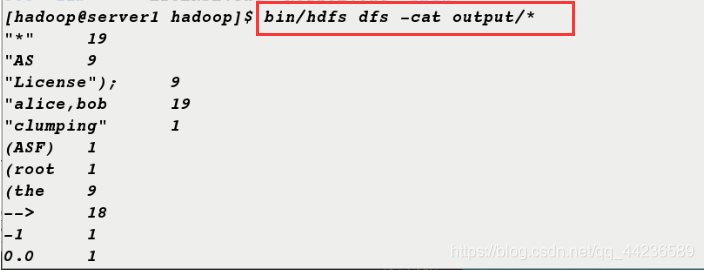

9. The contents of the output directory can be viewed directly in HDFS

[hadoop@server1 hadoop]$ bin/hdfs dfs -cat output/*

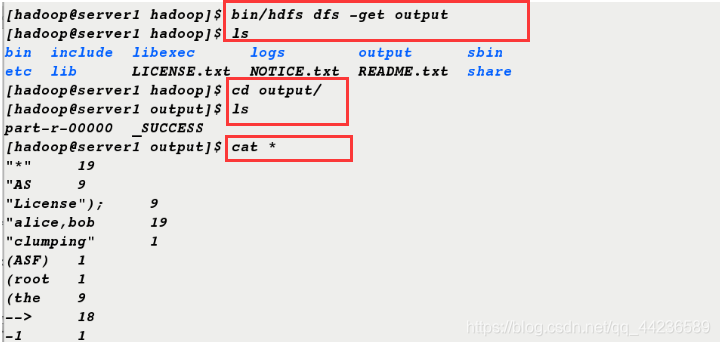

10. Alternatively, download the output directory from the distributed file system first, then view its contents locally

[hadoop@server1 hadoop]$ bin/hdfs dfs -get output

[hadoop@server1 hadoop]$ ls

bin include libexec logs output sbin

etc lib LICENSE.txt NOTICE.txt README.txt share

[hadoop@server1 hadoop]$ cd output/

[hadoop@server1 output]$ ls

part-r-00000 _SUCCESS

[hadoop@server1 output]$ cat *

III. Fully distributed mode

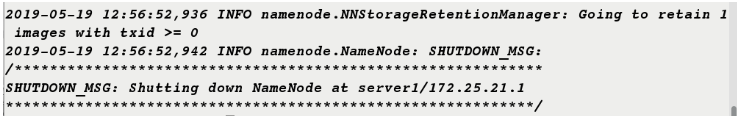

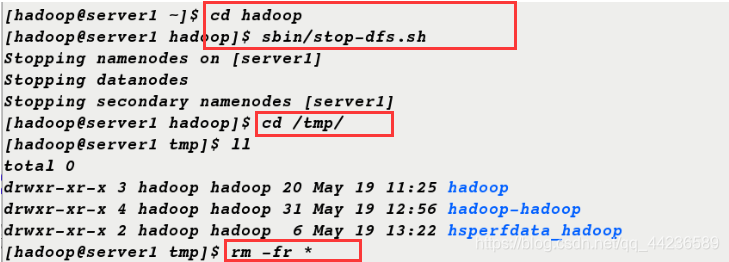

1. Stop the services first and clear the old data

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

Stopping namenodes on [server1]

Stopping datanodes

Stopping secondary namenodes [server1]

[hadoop@server1 hadoop]$ cd /tmp/

[hadoop@server1 tmp]$ ll

total 0

drwxr-xr-x 3 hadoop hadoop 20 May 19 11:25 hadoop

drwxr-xr-x 4 hadoop hadoop 31 May 19 12:56 hadoop-hadoop

drwxr-xr-x 2 hadoop hadoop 6 May 19 13:22 hsperfdata_hadoop

[hadoop@server1 tmp]$ rm -fr *

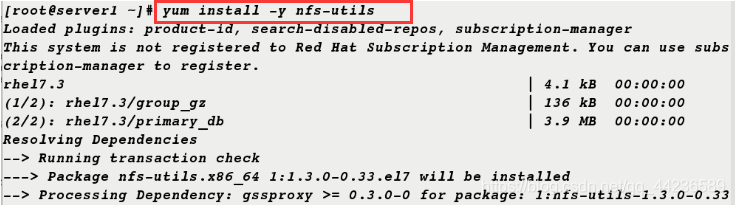

2. On server1, install nfs-utils and start the rpcbind service

[root@server1 ~]# yum install -y nfs-utils

[root@server1 ~]# systemctl start rpcbind

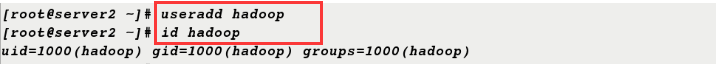

3. server2 will act as a worker node; create the hadoop user on it

[root@server2 ~]# useradd hadoop

[root@server2 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) groups=1000(hadoop)

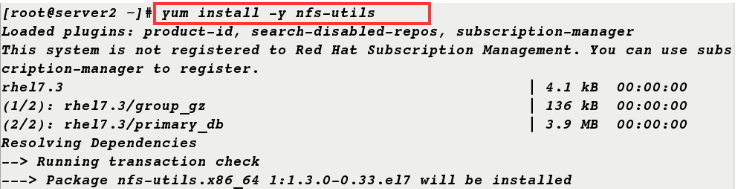

4. Install nfs-utils on server2 as well and start the rpcbind service

[root@server2 ~]# yum install -y nfs-utils

[root@server2 ~]# systemctl start rpcbind

5. server3 will act as a worker node; create the hadoop user on it

[root@server3 ~]# useradd hadoop

[root@server3 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) groups=1000(hadoop)

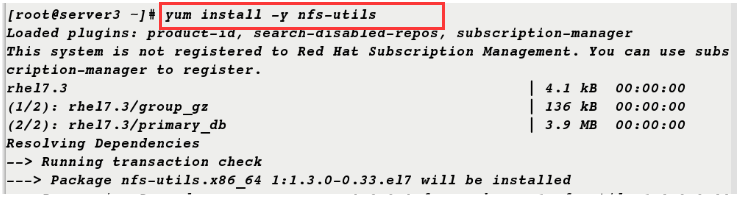

6. Install nfs-utils on server3 as well and start the rpcbind service

[root@server3 ~]# yum install -y nfs-utils

[root@server3 ~]# systemctl start rpcbind

7. On server1, check the hadoop user's UID and GID, edit /etc/exports, and start the NFS service

[root@server1 ~]# id hadoop

uid=1000(hadoop) gid=1000(hadoop) groups=1000(hadoop)

[root@server1 ~]# vim /etc/exports

/home/hadoop *(rw,anonuid=1000,anongid=1000)

[root@server1 ~]# systemctl start nfs

[root@server1 ~]# exportfs -v

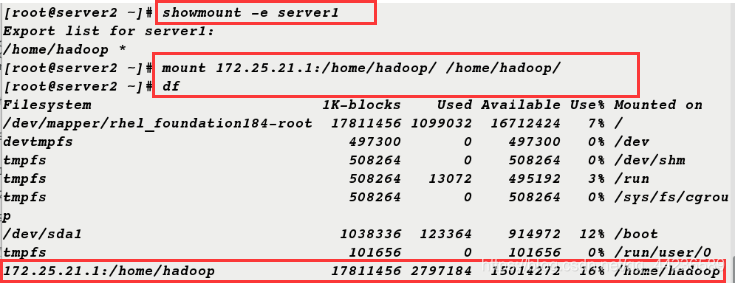
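The export above uses `*`, which allows any host that can reach server1 to mount the home directory. A more restrictive line can be generated as sketched below. `exports_line` is a hypothetical helper name, and the subnet 172.25.21.0/24 in the example call is an assumption inferred from the addresses used in this post.

```shell
#!/bin/sh
# exports_line is a hypothetical helper, not part of nfs-utils.
# It prints one /etc/exports entry for the given directory and client spec.
exports_line() {
    # $1: directory, $2: client (hostname, wildcard or CIDR), $3: uid, $4: gid
    printf '%s %s(rw,sync,anonuid=%s,anongid=%s)\n' "$1" "$2" "$3" "$4"
}

# Example call as root on server1 (the subnet is an assumption):
# exports_line /home/hadoop 172.25.21.0/24 "$(id -u hadoop)" "$(id -g hadoop)" >> /etc/exports
# exportfs -rv   # re-export everything listed in /etc/exports
```

The anonuid and anongid values only apply to squashed requests, so the hadoop user still needs the same UID and GID on every host, as confirmed with `id hadoop` in the steps above.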

8. server2 can see the export list published by server1, and the mount succeeds

[root@server2 ~]# showmount -e server1

Export list for server1:

/home/hadoop *

[root@server2 ~]# mount 172.25.21.1:/home/hadoop/ /home/hadoop/

[root@server2 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel_foundation184-root 17811456 1099032 16712424 7% /

devtmpfs 497300 0 497300 0% /dev

tmpfs 508264 0 508264 0% /dev/shm

tmpfs 508264 13072 495192 3% /run

tmpfs 508264 0 508264 0% /sys/fs/cgroup

/dev/sda1 1038336 123364 914972 12% /boot

tmpfs 101656 0 101656 0% /run/user/0

172.25.21.1:/home/hadoop 17811456 2797184 15014272 16% /home/hadoop

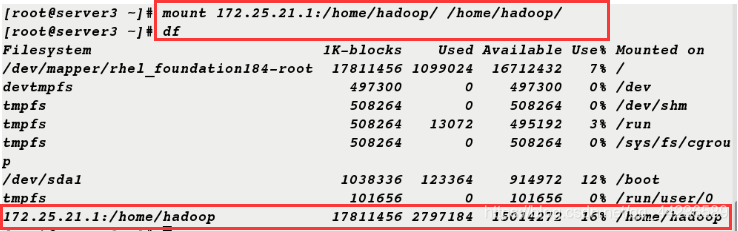

9. The mount also succeeds on server3

[root@server3 ~]# mount 172.25.21.1:/home/hadoop/ /home/hadoop/

[root@server3 ~]# df

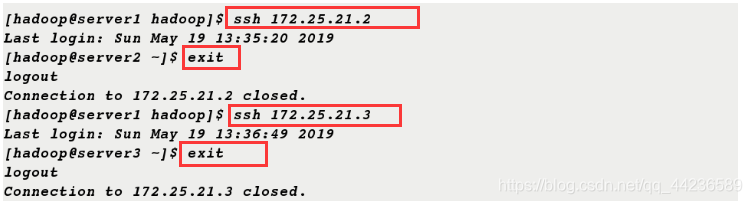

10. Passwordless login now works, because the NFS-mounted home directory shares the SSH keys in ~/.ssh across all three hosts

[hadoop@server1 hadoop]$ ssh 172.25.21.2

Last login: Sun May 19 13:35:20 2019

[hadoop@server2 ~]$ exit

logout

Connection to 172.25.21.2 closed.

[hadoop@server1 hadoop]$ ssh 172.25.21.3

Last login: Sun May 19 13:36:49 2019

[hadoop@server3 ~]$ exit

logout

Connection to 172.25.21.3 closed.

10. Edit the configuration files again

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value> <!-- two DataNodes -->

</property>

</configuration>

[hadoop@server1 hadoop]$ vim workers

172.25.21.2

172.25.21.3

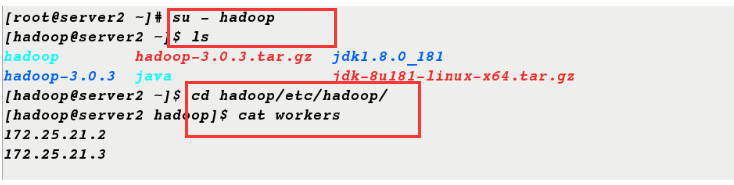
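The replication factor and the workers file should agree: with more replicas requested than there are DataNodes, blocks would stay under-replicated. The sketch below compares the two; `count_workers` and `check_replication` are hypothetical helper names, and the replication value is passed in by hand rather than parsed from hdfs-site.xml.

```shell
#!/bin/sh
# count_workers and check_replication are hypothetical helpers, not Hadoop commands.

# Print the number of hosts in a workers file, ignoring comments and blank lines.
count_workers() {
    sed 's/#.*$//;/^[[:space:]]*$/d' "$1" | wc -l | tr -d ' '
}

# Fail when the requested replication factor exceeds the number of workers.
check_replication() {
    # $1: path to the workers file, $2: value of dfs.replication
    workers=$(count_workers "$1")
    if [ "$2" -gt "$workers" ]; then
        echo "dfs.replication=$2 exceeds $workers DataNode(s)" >&2
        return 1
    fi
    echo "dfs.replication=$2 fits $workers DataNode(s)"
}

# Example call from the hadoop directory:
# check_replication etc/hadoop/workers 2
```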

11. Edits made on server1 are also visible on the server2 and server3 nodes

[root@server2 ~]# su - hadoop

[hadoop@server2 ~]$ ls

hadoop hadoop-3.0.3.tar.gz jdk1.8.0_181

hadoop-3.0.3 java jdk-8u181-linux-x64.tar.gz

[hadoop@server2 ~]$ cd hadoop/etc/hadoop/

[hadoop@server2 hadoop]$ cat workers

172.25.21.2

172.25.21.3

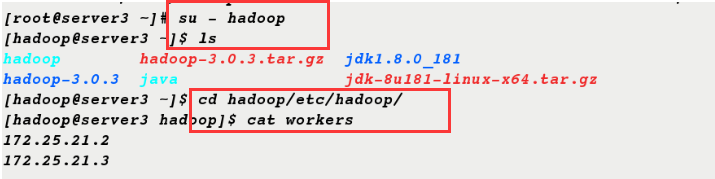

[root@server3 ~]# su - hadoop

[hadoop@server3 ~]$ ls

hadoop hadoop-3.0.3.tar.gz jdk1.8.0_181

hadoop-3.0.3 java jdk-8u181-linux-x64.tar.gz

[hadoop@server3 ~]$ cd hadoop/etc/hadoop/

[hadoop@server3 hadoop]$ cat workers

172.25.21.2

172.25.21.3

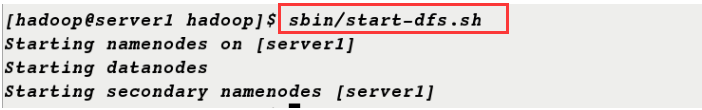

12. Format the NameNode and start the services

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

Starting datanodes

Starting secondary namenodes [server1]

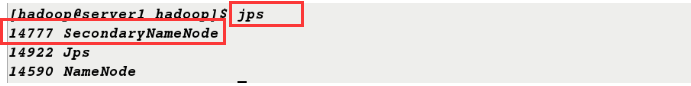

13. On server1, jps shows NameNode and SecondaryNameNode

[hadoop@server1 hadoop]$ jps

14777 SecondaryNameNode

14922 Jps

14590 NameNode

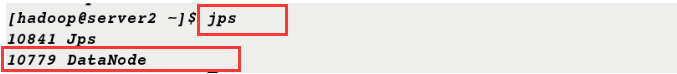

On server2 and server3, jps shows DataNode

[hadoop@server2 ~]$ jps

10841 Jps

10779 DataNode

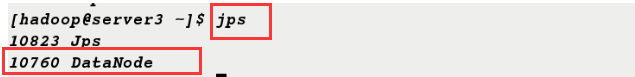

[hadoop@server3 ~]$ jps

10823 Jps

10760 DataNode

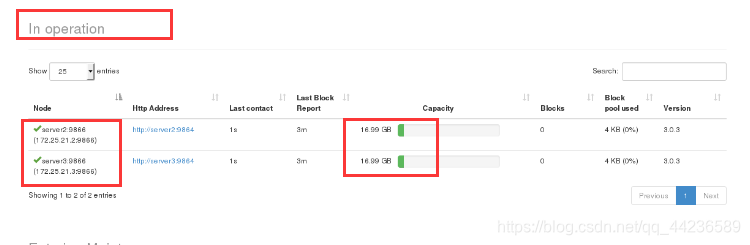
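Reading the jps output by eye on three hosts gets tedious, so the check can be scripted. The sketch below tests whether a given daemon name appears in text produced by jps; `has_daemon` is a hypothetical helper name.

```shell
#!/bin/sh
# has_daemon is a hypothetical helper, not part of the JDK or Hadoop.
# It succeeds when the jps output contains a process with exactly this name.
has_daemon() {
    # $1: text printed by jps, $2: daemon name such as NameNode or DataNode
    printf '%s\n' "$1" \
        | awk -v name="$2" '$2 == name { found = 1 } END { exit !found }'
}

# Example calls, assuming jps is on PATH as configured earlier:
# has_daemon "$(jps)" NameNode && echo "NameNode is running"
# has_daemon "$(ssh 172.25.21.2 jps)" DataNode && echo "DataNode is running on server2"
```

The comparison is exact, so a search for NameNode does not match the SecondaryNameNode line.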

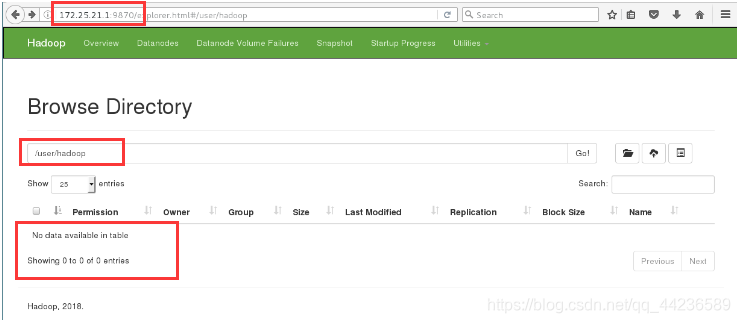

14. In the browser, the cluster now shows two DataNodes, and uploaded data can be browsed from there

15. Create the directories; they are visible in the browser

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ ls

bin include libexec logs output sbin

etc lib LICENSE.txt NOTICE.txt README.txt share

16. Upload the etc/hadoop/ directory to the distributed file system under the name input

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/ input

17. The contents of /user/hadoop/input are visible in the browser

18. Delete input; it no longer appears in the browser

[hadoop@server1 hadoop]$ bin/hdfs dfs -rm -r input

Deleted input

Source: https://blog.csdn.net/qq_44236589/article/details/90380641