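1. Create a Scrapy project (for example `scrapy startproject youyuan`, then generate a CrawlSpider with `scrapy genspider -t crawl youyuancom youyuan.com`)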

2. Install scrapy-redis

```bash
pip install scrapy-redis
```

3. Configure settings.py

3.1 Add ITEM_PIPELINES

```python
ITEM_PIPELINES = {
    # scrapy-redis: store every scraped item in Redis
    'scrapy_redis.pipelines.RedisPipeline': 400,
}
```
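RedisPipeline stores every scraped item in Redis, by default in a list named `<spider name>:items`; that is the `youyuancom:items` key the MySQL script at the end of this post pops from. The following is a simplified, hypothetical stand-in (the class name `SimpleRedisItemPipeline` is made up; the real pipeline also serializes with Scrapy's JSON encoder and performs the push in a worker thread). It only illustrates roughly what the entry above does:

```python
import json


class SimpleRedisItemPipeline:
    """Hypothetical, simplified stand-in for scrapy_redis.pipelines.RedisPipeline.

    Serializes each item to JSON and appends it to a Redis list whose name
    is built from the spider name (default template: "%(spider)s:items").
    """

    def __init__(self, server, key_template="%(spider)s:items"):
        # server: any object with an rpush(key, value) method, e.g. redis.Redis(...)
        self.server = server
        self.key_template = key_template

    def process_item(self, item, spider):
        key = self.key_template % {"spider": spider.name}
        # ensure_ascii=False keeps Chinese text readable inside Redis
        self.server.rpush(key, json.dumps(dict(item), ensure_ascii=False))
        # Return the item so later pipelines still receive it
        return item
```

You do not need to add this class to the project; the `ITEM_PIPELINES` entry above already enables the real pipeline.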

3.2 Add the scrapy-redis settings

```python
# scrapy-redis settings

# Use the scrapy-redis scheduler, which stores the request queue in Redis
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Ensure all spider instances share the same duplicate filter through Redis
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Optional: keep the request queue in Redis after the spider closes,
# so a crawl can be paused and resumed
#SCHEDULER_PERSIST = True

# Optional: request scheduling strategy; the default is a priority queue
#SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.PriorityQueue'
```
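With `SCHEDULER_PERSIST = True`, the request queue and the duplicate-filter fingerprints stay in Redis between runs, so a restarted spider continues where it stopped. To start a fresh crawl instead, those keys have to be deleted. The sketch below assumes scrapy-redis's default key names (check `scrapy_redis/defaults.py` in your installed version) and a redis-py client. The helpers `crawl_keys` and `reset_crawl_state` are hypothetical:

```python
# Default Redis key templates used by scrapy-redis (assumed from scrapy_redis/defaults.py;
# verify against your installed version).
DEFAULT_KEY_TEMPLATES = {
    "requests": "%(spider)s:requests",      # scheduler request queue
    "dupefilter": "%(spider)s:dupefilter",  # fingerprints of seen requests
    "items": "%(spider)s:items",            # RedisPipeline output
}


def crawl_keys(spider_name):
    """Return the Redis keys scrapy-redis uses for this spider by default."""
    return {role: tpl % {"spider": spider_name} for role, tpl in DEFAULT_KEY_TEMPLATES.items()}


def reset_crawl_state(server, spider_name):
    """Delete the request queue and fingerprints so a persisted crawl starts over.

    server: any object with a delete(*names) method, e.g. redis.Redis(...).
    The items list is left alone so already scraped data is not lost.
    Returns the number of keys that were deleted.
    """
    keys = crawl_keys(spider_name)
    return server.delete(keys["requests"], keys["dupefilter"])
```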

3.3 Add the Redis connection settings

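```python
# Host name and port of the Redis server (replace 'ip' with your server's address)
REDIS_HOST = 'ip'
REDIS_PORT = 6379  # Redis's default port; change it if your server listens elsewhere
```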
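If your Redis server needs a password, or you prefer a single connection string, scrapy-redis also reads `REDIS_URL` (used in place of `REDIS_HOST`/`REDIS_PORT` when it is set) and `REDIS_PARAMS` (extra keyword arguments for the Redis client). The following is a settings.py sketch in which the address, port, database number and password are all placeholders:

```python
# Alternative to REDIS_HOST/REDIS_PORT: one connection URL (placeholder values)
REDIS_URL = 'redis://ip:6379/0'

# Extra keyword arguments for the redis client, e.g. a password (placeholder value)
REDIS_PARAMS = {
    'password': 'your-redis-password',
}
```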

4. Modify the spider file

4.1 Change the spider's parent class from CrawlSpider to RedisCrawlSpider

4.2 Set redis_key, the Redis list the spider reads its crawl tasks (start URLs) from

4.3 Set the allowed domains dynamically, or use allowed_domains instead; choose one of the two

```python
import scrapy

from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule
from scrapy_redis.spiders import RedisCrawlSpider

from youyuan.items import YouyuanItem


# class YouyuancomSpider(CrawlSpider):
class YouyuancomSpider(RedisCrawlSpider):
    name = 'youyuancom'
    allowed_domains = ['youyuan.com']

    # With scrapy-redis, redis_key replaces start_urls: start URLs are read from this Redis list
    # start_urls = ['http://www.youyuan.com/find/zhejiang/mm18-0/advance-0-0-0-0-0-0-0/p1/']
    redis_key = "YouyuancomSpider:start_urls"

    rules = (
        # List pages: no callback, so links on them are followed
        Rule(LinkExtractor(allow=r'youyuan.com/find/zhejiang/mm18-0/p\d+/')),
        # Profile pages: parse them and keep following their links
        Rule(LinkExtractor(allow=r'/\d+-profile/'), callback='parse_personitem', follow=True),
    )

    # scrapy-redis dynamic domains: this overrides the allowed_domains attribute above
    def __init__(self, *args, **kwargs):
        # Dynamically define the allowed domains list from "-a domain=a.com,b.com".
        domain = kwargs.pop('domain', '')
        # list() matters on Python 3: filter() returns an iterator, which is always truthy.
        # Without -a domain=..., this is an empty list, i.e. no offsite filtering.
        self.allowed_domains = list(filter(None, domain.split(',')))
        super().__init__(*args, **kwargs)

    def parse_personitem(self, response):
        item = YouyuanItem()
        item["username"] = response.xpath("//div[@class='con']/dl[@class='personal_cen']/dd/div/strong/text()").extract()
        item["introduce"] = response.xpath("//div[@class='con']/dl[@class='personal_cen']/dd/p/text()").extract()
        item["imgsrc"] = response.xpath("//div[@class='con']/dl[@class='personal_cen']/dt/img/@src").extract()
        item["persontag"] = response.xpath("//div[@class='pre_data']/ul/li/p/text()").extract()
        item["sourceUrl"] = response.url
        yield item

    def parse_item(self, response):
        # Unused stub left over from the genspider crawl template
        item = {}
        #item['domain_id'] = response.xpath('//input[@id="sid"]/@value').get()
        #item['name'] = response.xpath('//div[@id="name"]').get()
        #item['description'] = response.xpath('//div[@id="description"]').get()
        return item
```
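The dynamic-domain `__init__` reads a comma-separated `domain` argument, which Scrapy passes in from `-a domain=...` on the command line (e.g. `scrapy runspider youyuancom.py -a domain=youyuan.com,www.youyuan.com`). Its parsing step, pulled out here into a hypothetical helper `parse_allowed_domains`, shows why the result should be turned into a list on Python 3:

```python
def parse_allowed_domains(domain_arg):
    """Turn a comma-separated "-a domain=..." value into a list of domains."""
    # filter(None, ...) drops the empty strings produced by "" or by stray commas
    return list(filter(None, domain_arg.split(',')))


empty_iter = filter(None, "".split(","))
empty_list = parse_allowed_domains("")
# The filter iterator is truthy even though it yields nothing; the empty list is falsy.
print(bool(empty_iter), bool(empty_list))  # True False
print(parse_allowed_domains("youyuan.com,,www.youyuan.com"))  # ['youyuan.com', 'www.youyuan.com']
```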

5. Go into the spider project and run `scrapy runspider xx.py` directly.

6. Push crawl tasks from the Redis side

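```
lpush redis_key <URL>
```

Here redis_key is the value set in the spider file. For this spider, the redis-cli command is `lpush YouyuancomSpider:start_urls http://www.youyuan.com/find/zhejiang/mm18-0/advance-0-0-0-0-0-0-0/p1/`.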
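The same push can be done from Python with the redis-py client, which is convenient when many start URLs have to be queued. The following is a minimal sketch. `push_start_urls` is a hypothetical helper, and the host in the usage comment is a placeholder:

```python
def push_start_urls(server, redis_key, urls):
    """LPUSH start URLs onto the spider's redis_key and return the new list length.

    server: a redis-py client (redis.Redis) or anything with lpush(name, *values).
    """
    if not urls:
        raise ValueError("at least one URL is required")
    return server.lpush(redis_key, *urls)


# Usage (needs a running Redis server and `pip install redis`):
#   import redis
#   r = redis.Redis(host='ip', port=6379)
#   push_start_urls(r, "YouyuancomSpider:start_urls",
#                   ["http://www.youyuan.com/find/zhejiang/mm18-0/advance-0-0-0-0-0-0-0/p1/"])
```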

Notes:

1. In `scrapy runspider xx.py`, xx.py is your spider file, i.e. the xx from `scrapy genspider xx`.

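2. If you created the project directly in PyCharm and import items.py with `from ..items import xxItems`, you get the error `attempted relative import with no known parent package`. It means Python cannot find the parent package of the spider module.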

Solution:

1. Select your spider project folder, right-click it and choose Mark Directory as → Sources Root.

2. In your spider file, change `from ..items import xxItems` to `from <project name>.items import xxItems`. PyCharm may not offer autocompletion for this import, so type it in manually.

Then run the runspider command again.

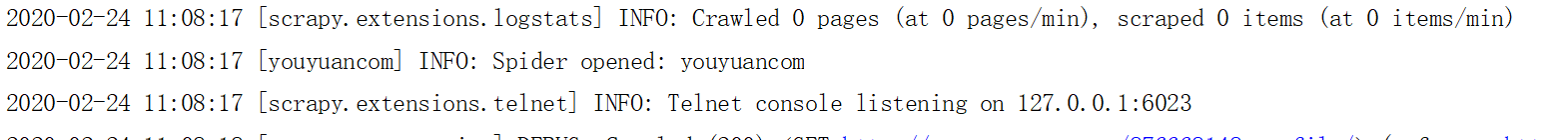

Output similar to the screenshot means the spider is running and waiting for tasks.

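3. If scrapy-redis logs

`DEBUG: Filtered offsite request to xxx`

disable the offsite middleware in settings.py by setting it to `None`:

```python
SPIDER_MIDDLEWARES = {
    'youyuan.middlewares.YouyuanSpiderMiddleware': 543,
    # Setting a middleware to None disables it
    'scrapy.spidermiddlewares.offsite.OffsiteMiddleware': None,
}
```

Alternatively, pass the domains when starting the spider (for example `scrapy runspider xx.py -a domain=youyuan.com`) so that the dynamically set `allowed_domains` actually contains the target site.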
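When the spider opens, OffsiteMiddleware compiles `allowed_domains` into a host regex. The following simplified re-creation of that check is not Scrapy's actual source; the real middleware also skips `None` entries and warns about URLs or ports. It shows one plausible cause of the message when no `-a domain=` argument is given: an empty but truthy Python 3 `filter()` iterator produces a pattern that matches no real host, while an empty list disables filtering:

```python
import re


def host_regex(allowed_domains):
    """Simplified re-creation of how OffsiteMiddleware builds its host filter."""
    if not allowed_domains:
        # Falsy (None or empty list): the empty pattern allows every host
        return re.compile("")
    domains = [re.escape(d) for d in allowed_domains]
    # A host passes if it equals a listed domain or is a subdomain of one
    return re.compile(rf"^(.*\.)?({'|'.join(domains)})$")


def is_allowed(allowed_domains, host):
    return bool(host_regex(allowed_domains).search(host))


host = "www.youyuan.com"
print(is_allowed(filter(None, "".split(",")), host))  # False: empty but truthy iterator
print(is_allowed([], host))                           # True: empty list means no filtering
print(is_allowed(["youyuan.com"], host))              # True: subdomain of an allowed domain
```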

============================================================

Saving the Redis data into MySQL

Install the MySQL driver. For Python 3 (3.7 here), that is pymysql: `pip install pymysql`

This part is fairly simple, so here is the code:

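```python
import json

import pymysql
import redis


def to_text(value):
    # Fields filled with .extract() are lists; join them so MySQL receives a plain string
    if isinstance(value, list):
        return ",".join(value)
    return value


def process_item():
    # Connect to Redis (fill in the host, i.e. the REDIS_HOST the spider uses)
    rediscli = redis.Redis(host="", port=6379, db=0)
    # Connect to MySQL
    mysqlcli = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='root',
                               database='test', charset='utf8')
    offset = 0
    while True:
        # Pop the next item from Redis; blpop blocks until one is available
        source, data = rediscli.blpop("youyuancom:items")
        jsonitem = json.loads(data)
        sql = "insert into scrapyredis_youyuan(username,persontag,imgsrc,url) values(%s,%s,%s,%s)"
        # Parameterized query: pymysql escapes the values itself
        params = [to_text(jsonitem["username"]), to_text(jsonitem["persontag"]),
                  to_text(jsonitem["imgsrc"]), jsonitem["sourceUrl"]]
        # The cursor executes SQL statements; "with" closes it afterwards
        with mysqlcli.cursor() as cursor:
            cursor.execute(sql, params)
        mysqlcli.commit()
        offset += 1
        print("Saved to database: " + str(offset))


if __name__ == "__main__":
    process_item()
```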
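The script assumes that the table `scrapyredis_youyuan` already exists in the `test` database. The following is a hypothetical schema: its column names come from the INSERT statement, while the column types are assumptions. It can be created through any DB-API connection, such as the pymysql connection above:

```python
# Hypothetical DDL: column names match the INSERT statement, the types are assumptions.
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS scrapyredis_youyuan (
    username  VARCHAR(255),
    persontag TEXT,
    imgsrc    TEXT,
    url       VARCHAR(512)
)
"""


def create_table(conn):
    """Create the target table through a DB-API 2.0 connection, e.g. pymysql.connect(...)."""
    cursor = conn.cursor()
    try:
        cursor.execute(CREATE_TABLE_SQL)
        conn.commit()
    finally:
        cursor.close()
```

On MySQL you may also want to append `DEFAULT CHARSET=utf8mb4` to the statement, because MySQL's `utf8` cannot store 4-byte characters such as emoji.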

Source: oschina

Link: https://my.oschina.net/myithome/blog/3171540