requests

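- response = requests.get(url)

- print(response) // prints the response object with its status code, e.g. <Response [200]>

- response.text // the response body decoded to str using response.encoding

- response.encoding // the page encoding that requests guessed

- response.content // the raw page as bytes

- response.content.decode() // decode the bytes to str (UTF-8 by default)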

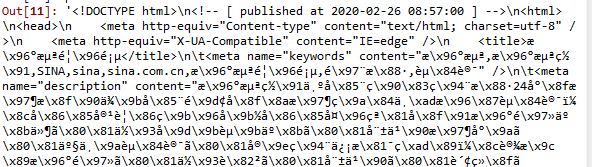
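The attributes listed above can be gathered in one place. The following is a minimal sketch, not part of the original notes: `summarize_response` and `fetch_summary` are hypothetical helper names, and the 10-second timeout is an assumed value.

```python
def summarize_response(response, preview_chars=80):
    """Collect the commonly inspected response attributes into a plain dict."""
    return {
        "status_code": response.status_code,        # e.g. 200
        "encoding": response.encoding,              # encoding requests guessed
        "num_bytes": len(response.content),         # raw body, as bytes
        "preview": response.text[:preview_chars],   # body decoded with that encoding
    }


def fetch_summary(url):
    """Hypothetical wrapper: request `url` and summarize the response."""
    import requests  # imported here so summarize_response can be tried offline

    response = requests.get(url, timeout=10)  # timeout value is an assumption
    return summarize_response(response)
```

Calling `fetch_summary("https://www.sina.com.cn/")` would issue a real request; `summarize_response` itself only reads attributes, so it can be tried on any object that exposes them.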

p = requests.get("https://www.sina.com.cn/")

print(p)

p.text

p.content.decode()

p.encoding

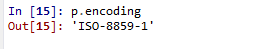

p.encoding

p.content.decode(encoding='ISO-8859-1')

p.content.decode(encoding='utf-8')
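The two `decode` calls above differ only in the codec, and that choice decides whether the text is readable. Here is a small offline sketch of the effect; the sample string is chosen for illustration and stands in for the bytes of a UTF-8 page.

```python
# Bytes as a server would send them for a UTF-8 page (illustrative sample text)
raw = "新浪首页".encode("utf-8")

# ISO-8859-1 maps every byte value to a character, so decoding never raises;
# the result is simply unreadable, with one character per byte.
wrong = raw.decode("ISO-8859-1")

# Decoding with the codec the page was actually written in restores the text.
right = raw.decode("utf-8")

# The damage is reversible: re-encode with the wrong codec, then decode correctly.
repaired = wrong.encode("ISO-8859-1").decode("utf-8")

print(len(raw), len(wrong), len(right))  # 12 12 4
print(repaired == right)                 # True
```

This is why text decoded with a guessed encoding of ISO-8859-1 can look garbled while `p.content.decode(encoding='utf-8')` reads correctly.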

7. Saving an image

# img1 is assumed to be the response from an earlier requests.get() call for the image
with open("a.png","wb") as f:
    f.write(img1.content)
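The snippet above writes `img1.content` in binary mode. A slightly fuller sketch follows, under stated assumptions: `save_binary` and `download_image` are hypothetical names, the timeout is an assumed value, and the offline demonstration writes the 8-byte PNG signature rather than a real image.

```python
import os
import tempfile


def save_binary(content, path):
    """Write raw bytes to `path` and return the number of bytes written."""
    with open(path, "wb") as f:  # "wb": binary mode, no text encoding is applied
        return f.write(content)


def download_image(url, path):
    """Hypothetical wrapper: fetch `url` and store the body unchanged."""
    import requests  # imported here so save_binary can be tried without requests

    response = requests.get(url, timeout=10)  # timeout value is an assumption
    response.raise_for_status()  # raise on 4xx/5xx instead of saving an error page
    return save_binary(response.content, path)


# Offline demonstration: the PNG file signature stands in for downloaded bytes
png_signature = b"\x89PNG\r\n\x1a\n"
demo_path = os.path.join(tempfile.mkdtemp(), "a.png")
written = save_binary(png_signature, demo_path)
```

Images must be written from `response.content` rather than `response.text`, since decoding binary data to a string would corrupt it.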

8. Tieba scraping example

# -*- coding: utf-8 -*-
"""
Created on Wed Feb 26 09:16:08 2020

@author: Administrator
"""
import requests
import os

# Switch to the author's local working directory; change this path for your own machine
os.chdir(r"H:\实操\学习\01\spyder")

class TieSpyder:
    def __init__(self,name):
        self.name = name
        self.url_temp = "https://tieba.baidu.com/f?kw="+self.name+"&ie=utf-8&pn={}"
        self.headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36'}

    # Build the pool of page URLs (pn advances by 50 per page)
    def get_url_list(self):
        url_list = []
        for i in range(0,1000):
            url_list.append(self.url_temp.format(i*50))
        return url_list

    def html_parse(self,url):
        print(url)
        # Pass headers by keyword; the second positional argument of requests.get is params
        response = requests.get(url,headers=self.headers)
        return response.content.decode()

    def save_html(self,html_str,pageNum):
        filepath = "{}-page-{}".format(self.name,pageNum)
        with open(filepath,"w",encoding = "utf-8") as f:
            f.write(html_str)

    def run(self):
        pageNum = 1
        # 1. Build the URL list
        url_list = self.get_url_list()
        # 2. Iterate over the list, send each request, and get the response
        for url in url_list:
            html_str = self.html_parse(url)
            # 3. Save the data
            self.save_html(html_str,pageNum)
            pageNum += 1

if __name__ =="__main__":
    # "摄影" ("photography") is the name of the Tieba forum to scrape
    liyi = TieSpyder("摄影")
    liyi.run()
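The script above requests 1000 pages back to back, with no timeout and no status check. One possible variation is sketched below; it is not the author's code. `build_page_urls`, `crawl`, and `fetch_with_requests` are hypothetical names, the shortened User-Agent, the timeout, and the delay are assumed values, and the fetch function is passed in so the loop can be tried without network access.

```python
import time
from urllib.parse import urlencode

BASE_URL = "https://tieba.baidu.com/f"
PAGE_SIZE = 50  # pn advances by 50 per page, as in the script above


def build_page_urls(name, pages):
    """Return the list-page URLs for the first `pages` pages of a forum."""
    return [
        # urlencode percent-encodes the forum name, so non-ASCII names are safe
        BASE_URL + "?" + urlencode({"kw": name, "ie": "utf-8", "pn": i * PAGE_SIZE})
        for i in range(pages)
    ]


def crawl(name, pages, fetch, delay=0.0):
    """Call `fetch(url) -> str` for each page; return {page_number: html}."""
    results = {}
    for page_num, url in enumerate(build_page_urls(name, pages), start=1):
        results[page_num] = fetch(url)
        if delay:
            time.sleep(delay)  # pause between requests to go easy on the server
    return results


def fetch_with_requests(url):
    """Hypothetical fetcher built on requests, with a timeout and a status check."""
    import requests  # imported here so the helpers above can be tried offline

    response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"}, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx instead of saving an error page
    return response.content.decode()
```

A real run might look like `crawl("摄影", 3, fetch_with_requests, delay=1.0)`. Using `enumerate(..., start=1)` replaces the manual `pageNum` counter.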

-----------Video study notes, 小酥仙儿, 2020.2.26------------------------------------------------------------------

Source: CSDN

Author: 小酥仙儿

Link: https://blog.csdn.net/qq_40278637/article/details/104510452