I. Preparation

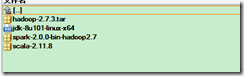

Download the installation packages:

VMware Workstation Pro 12

Three CentOS 7.1 minimal virtual machines

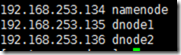

The virtual machines use a NAT network, configured as follows:

II. Create the hadoop user and the hadoop group

1. groupadd hadoop

2. useradd -g hadoop hadoop

3. Set a password for the hadoop user

As root, run passwd hadoop and enter the new password

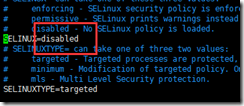
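Because the same steps are repeated on every virtual machine, it can help to make them safe to re-run. The following is a minimal sketch, not part of the original procedure: the function name `ensure_hadoop_user` is hypothetical, and it assumes it is run as root on a system that provides `getent`, `groupadd`, and `useradd`.

```shell
#!/bin/sh
# Create the hadoop group and user only if they are missing (run as root).
# ensure_hadoop_user is a hypothetical helper name used for illustration.
ensure_hadoop_user() {
    # getent exits non-zero when the entry does not exist
    getent group hadoop >/dev/null 2>&1 || groupadd hadoop
    # -g places the new user in the existing hadoop group
    getent passwd hadoop >/dev/null 2>&1 || useradd -g hadoop hadoop
}
```

Calling `ensure_hadoop_user` a second time does nothing, since both entries already exist. The password still has to be set separately with passwd hadoop.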

III. Disable the firewall and SELinux

1. yum install -y firewalld

2. systemctl stop firewalld

3. systemctl disable firewalld

4. vi /etc/selinux/config and set SELINUX=disabled

5. Once everything is configured, reboot the virtual machine
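The SELinux edit in step 4 can also be scripted instead of done by hand in vi. This is a sketch under assumptions: the helper name `disable_selinux` is hypothetical, and it assumes the config file contains a single active line starting with `SELINUX=`.

```shell
#!/bin/sh
# Set SELINUX=disabled in an SELinux config file, keeping a backup copy.
# Usage: disable_selinux [config-file]   (defaults to /etc/selinux/config)
# disable_selinux is a hypothetical helper name used for illustration.
disable_selinux() {
    cfg="${1:-/etc/selinux/config}"
    # Save the original, then rewrite only the line that starts with SELINUX=
    # (SELINUXTYPE= does not match because of the "=" in the pattern).
    cp "$cfg" "$cfg.bak" &&
        sed 's/^SELINUX=.*/SELINUX=disabled/' "$cfg.bak" > "$cfg"
}
```

Run as root, `disable_selinux` with no argument edits /etc/selinux/config. The change takes effect after the reboot in step 5.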

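IV. Set up SSH access among the three virtual machines

1. As the hadoop user:

1. ssh-keygen -t rsa

2. ssh-copy-id -i /home/hadoop/.ssh/id_rsa.pub hadoop@<VM IP> (repeat for each virtual machine)

3. ssh namenode / dnode1 / dnode2 (log in to each host to confirm that no password is requested)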
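The manual login test in the last step can be automated. The sketch below assumes the host names namenode, dnode1, and dnode2 already resolve on each machine (for example through /etc/hosts, which the steps above do not show); the function name `check_passwordless_ssh` is hypothetical.

```shell
#!/bin/sh
# Report which hosts accept key-based SSH logins without a password prompt.
# Usage: check_passwordless_ssh host [host...]
# check_passwordless_ssh is a hypothetical helper name used for illustration.
check_passwordless_ssh() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}
```

Running `check_passwordless_ssh namenode dnode1 dnode2` as the hadoop user on each machine prints one line per host and returns non-zero if any login failed.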

V. Install Java

1. Download the JDK 1.8 RPM package from the official site

2. rpm -ivh jdk1.8.rpm

VI. Install Hadoop

1. Download hadoop-2.7.3.tar.gz from the official site

2. tar xzvf hadoop-2.7.3.tar.gz

3. mv hadoop-2.7.3 /usr/local/

4. chown -R hadoop:hadoop /usr/local/hadoop-2.7.3

VII. Configure environment variables

1. vim /home/hadoop/.bash_profile and add the following lines:

export JAVA_HOME=/usr/java/jdk1.8.0_101
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export HADOOP_HOME=/usr/local/hadoop-2.7.3
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
export SCALA_HOME=/usr/local/scala-2.11.8
export SPARK_HOME=/usr/local/spark-2.0.0
PATH=$PATH:$HOME/bin:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SCALA_HOME/bin:$SPARK_HOME/bin
export PATH

2. source /home/hadoop/.bash_profile
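A wrong path in one of these variables usually only shows up later as a confusing startup error, so it may be worth checking them right after sourcing the profile. This is a small sketch; the function name `check_home_dirs` is hypothetical, and SCALA_HOME and SPARK_HOME are expected to be reported as missing until Scala and Spark are installed in section XIV.

```shell
#!/bin/sh
# Check that each named variable is set and points to an existing directory.
# Usage: check_home_dirs VAR [VAR...]
# check_home_dirs is a hypothetical helper name used for illustration.
check_home_dirs() {
    missing=0
    for name in "$@"; do
        # Indirect lookup of the variable whose name is stored in $name
        eval "value=\${$name:-}"
        if [ -n "$value" ] && [ -d "$value" ]; then
            echo "OK      $name=$value"
        else
            echo "MISSING $name=$value"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_home_dirs JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR` prints one line per variable and returns non-zero if any directory is absent.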

VIII. Create the Hadoop directories

mkdir -p /home/hadoop/hd_space/tmp
mkdir -p /home/hadoop/hd_space/hdfs/name
mkdir -p /home/hadoop/hd_space/hdfs/data
mkdir -p /home/hadoop/hd_space/mapred/local
mkdir -p /home/hadoop/hd_space/mapred/system
chown -R hadoop:hadoop /home/hadoop

Note: at this point you can clone the virtual machine instead of installing CentOS on every machine; set up SSH access between the machines after cloning.
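The same directory tree can be created with a loop, which keeps the list of subdirectories in one place. This is a sketch only; the function name `make_hadoop_dirs` is hypothetical, and the default base path is the one used in this section.

```shell
#!/bin/sh
# Create the Hadoop working directories under a base directory.
# Usage: make_hadoop_dirs [base]   (defaults to /home/hadoop/hd_space)
# make_hadoop_dirs is a hypothetical helper name used for illustration.
make_hadoop_dirs() {
    base="${1:-/home/hadoop/hd_space}"
    for sub in tmp hdfs/name hdfs/data mapred/local mapred/system; do
        # -p creates parent directories and ignores ones that already exist
        mkdir -p "$base/$sub" || return 1
    done
}
```

If the function is run as root, the chown on /home/hadoop is still needed afterwards so that the hadoop user owns the directories.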

IX. Configure Hadoop

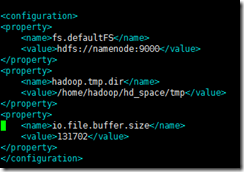

1. Configure core-site.xml

vim /usr/local/hadoop-2.7.3/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/hd_space/tmp</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>

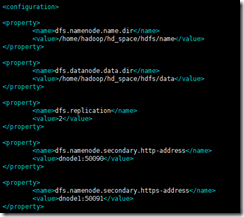

2. Configure hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/home/hadoop/hd_space/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hadoop/hd_space/hdfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>dnode1:50090</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.https-address</name>
    <value>dnode1:50091</value>
  </property>
</configuration>

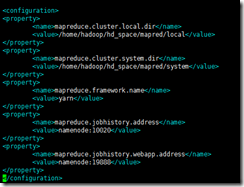
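After editing core-site.xml and hdfs-site.xml, the values Hadoop actually resolves can be read back with hdfs getconf -confKey, which catches typos in property names or misplaced files. The wrapper below is a sketch; the function name `check_conf_key` is hypothetical, and it assumes the hdfs command is on the PATH with HADOOP_CONF_DIR pointing at the edited files.

```shell
#!/bin/sh
# Compare a configuration value reported by Hadoop with an expected value.
# Usage: check_conf_key KEY EXPECTED
# check_conf_key is a hypothetical helper name used for illustration.
check_conf_key() {
    # "hdfs getconf -confKey KEY" prints the value Hadoop resolves for KEY
    actual=$(hdfs getconf -confKey "$1" 2>/dev/null)
    if [ "$actual" = "$2" ]; then
        echo "OK   $1=$actual"
    else
        echo "DIFF $1: expected '$2', got '$actual'"
        return 1
    fi
}
```

For this cluster, `check_conf_key fs.defaultFS hdfs://namenode:9000` and `check_conf_key dfs.replication 2` should both report OK if the files were picked up.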

3. Configure mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.cluster.local.dir</name>
    <value>/home/hadoop/hd_space/mapred/local</value>
  </property>
  <property>
    <name>mapreduce.cluster.system.dir</name>
    <value>/home/hadoop/hd_space/mapred/system</value>
  </property>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>namenode:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>namenode:19888</value>
  </property>
</configuration>

4. Configure yarn-site.xml

<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <description>The hostname of the RM.</description>
    <name>yarn.resourcemanager.hostname</name>
    <value>namenode</value>
  </property>
  <property>
    <description>the valid service name should only contain a-zA-Z0-9_ and can not start with numbers</description>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

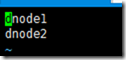

5. Configure the slaves file with the two data nodes, dnode1 and dnode2
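The slaves file is plain text with one host name per line. Here is a minimal sketch for writing it from the command line; the function name `write_slaves` is hypothetical, and the file path in the usage note assumes the install location used in this guide.

```shell
#!/bin/sh
# Write one worker host name per line to a slaves file, replacing its contents.
# Usage: write_slaves FILE host [host...]
# write_slaves is a hypothetical helper name used for illustration.
write_slaves() {
    file="$1"
    shift
    # printf repeats the format for every remaining argument
    printf '%s\n' "$@" > "$file"
}
```

For this cluster the call would be `write_slaves /usr/local/hadoop-2.7.3/etc/hadoop/slaves dnode1 dnode2`.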

6. Copy the configuration directory to the other two data nodes

for target in dnode1 dnode2; do
    scp -r /usr/local/hadoop-2.7.3/etc/hadoop $target:/usr/local/hadoop-2.7.3/etc
done

X. Start Hadoop

1. Log in as the hadoop user

2. Format HDFS

hdfs namenode -format

3. Start HDFS

start-dfs.sh

4. Start YARN

start-yarn.sh

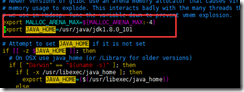

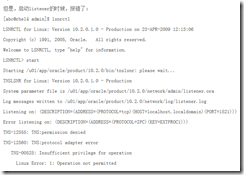

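XI. Troubleshooting

1. The following error appears: the JAVA_HOME environment variable cannot be found.

Fix: reconfigure JAVA_HOME in /usr/local/hadoop-2.7.3/libexec/hadoop-config.sh

2. Fixing the following Oracle database error

TNS-12555: TNS:permission denied; the listener cannot start. Listener commands: lsnrctl start (start) / status (show status) / stop (stop)

Fix: chown -R hadoop:hadoop /var/tmp/.oracle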

chmod 777 /var/tmp/.oracle

Problem:

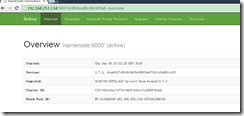

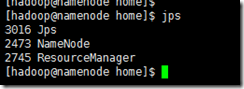

XII. Client-side verification

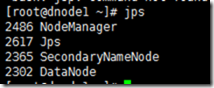

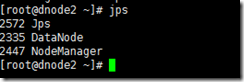

1. Check the processes with jps

At this point, Hadoop is installed.
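Reading the jps output by eye on three machines is easy to get wrong, so the check can be scripted. This is a sketch; the function name `check_daemons` is hypothetical, and the daemon lists in the usage note are what the configuration in section IX would be expected to produce, not something the original states.

```shell
#!/bin/sh
# Verify that each named Java daemon appears in the jps output of this host.
# Usage: check_daemons NAME [NAME...]
# check_daemons is a hypothetical helper name used for illustration.
check_daemons() {
    running=$(jps)
    missing=0
    for daemon in "$@"; do
        # jps prints "<pid> <main class>"; compare the class name exactly so
        # that NameNode does not match SecondaryNameNode
        if echo "$running" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "OK      $daemon"
        else
            echo "MISSING $daemon"
            missing=1
        fi
    done
    return "$missing"
}
```

With the settings above, one would expect `check_daemons NameNode ResourceManager` to pass on namenode, `check_daemons DataNode NodeManager SecondaryNameNode` on dnode1, and `check_daemons DataNode NodeManager` on dnode2.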

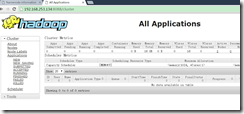

XIII. Test Hadoop (logged in as the hadoop user)

Run the wordcount example that ships with Hadoop

1. Create the input file

mkdir -p -m 755 /home/hadoop/test

cd /home/hadoop/test

echo "My first hadoop example. Hello Hadoop in input. " > testfile.txt

2. Create the directory in HDFS

hadoop fs -mkdir /test

# To delete the directory: hadoop fs -rm -r /test

3. Upload the file

hadoop fs -put testfile.txt /test

4. Run the wordcount program

hadoop jar /usr/local/hadoop-2.7.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount /test/testfile.txt /test/output

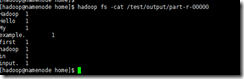

5. View the results

hadoop fs -cat /test/output/part-r-00000
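To judge whether the job output is right, the same count can be computed locally on the input file and compared by eye. The sketch below assumes the example job splits on whitespace only and is case-sensitive, so punctuation stays attached to words; the function name `local_wordcount` is hypothetical.

```shell
#!/bin/sh
# Count whitespace-separated words locally, printing "word<TAB>count"
# sorted in byte order, for comparison with the wordcount job's output.
# Usage: local_wordcount FILE
# local_wordcount is a hypothetical helper name used for illustration.
local_wordcount() {
    # One word per line, drop empty lines, then count identical lines
    tr -s '[:space:]' '\n' < "$1" |
        sed '/^$/d' |
        LC_ALL=C sort |
        uniq -c |
        awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

For testfile.txt this gives eight words with a count of 1 each, with "Hadoop" and "hadoop" listed separately. Note that the job in step 4 refuses to start if /test/output already exists, so delete that directory before running it again.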

XIV. Install Spark 2.0.0

1. Extract scala-2.11.8.tar.gz into /usr/local

tar xzvf scala-2.11.8.tar.gz

mv scala-2.11.8 /usr/local

2. Extract spark-2.0.0.tgz into /usr/local

tar xzvf spark-2.0.0.tgz

mv spark-2.0.0 /usr/local

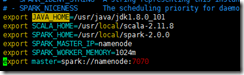

3. Configure Spark

cd /usr/local/spark-2.0.0/conf

vim spark-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_101
export SCALA_HOME=/usr/local/scala-2.11.8
export SPARK_HOME=/usr/local/spark-2.0.0
export SPARK_MASTER_IP=namenode
export SPARK_WORKER_MEMORY=1024m
export master=spark://namenode:7070

vim slaves

Sync the files and configuration to the other two nodes (as the hadoop user)

for target in dnode1 dnode2; do
    scp -r /usr/local/scala-2.11.8 $target:/usr/local
    scp -r /usr/local/spark-2.0.0 $target:/usr/local
done

4. Start the Spark cluster

cd $SPARK_HOME

# Start Master

./sbin/start-master.sh

# Start Workers

./sbin/start-slaves.sh

5. Client-side verification

Source: https://www.cnblogs.com/panliu/p/6093195.html