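The basic principle behind CFS fairness is this: pick a period and, according to the number of processes, let everyone "split" the right to use the CPU within that scheduling period. The scheduler guarantees that every process gets to run within one period. Unlike earlier schedulers such as the O(n) scheduler, a higher-priority process in CFS gets more run time, but that does not mean it necessarily runs first: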

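The scheduler uses vruntime to track each process's accumulated run time. Ideally, when all processes have equal vruntime, CPU time has been distributed completely fairly. In practice, even multi-core systems usually have more processes than cores, so as soon as a process occupies a CPU, unfairness arises. The Completely Fair Scheduler mitigates this by letting the process that has suffered the most unfairness (the one with the smallest vruntime) run first. Note that vruntime is not simply incremented by 1 every tick (or every few ticks) as in older schedulers; it is converted with a priority weight. A high-priority process might run 10 ticks before its vruntime grows by 1, while a low-priority process might see its vruntime grow by 10 after running just 1 tick.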

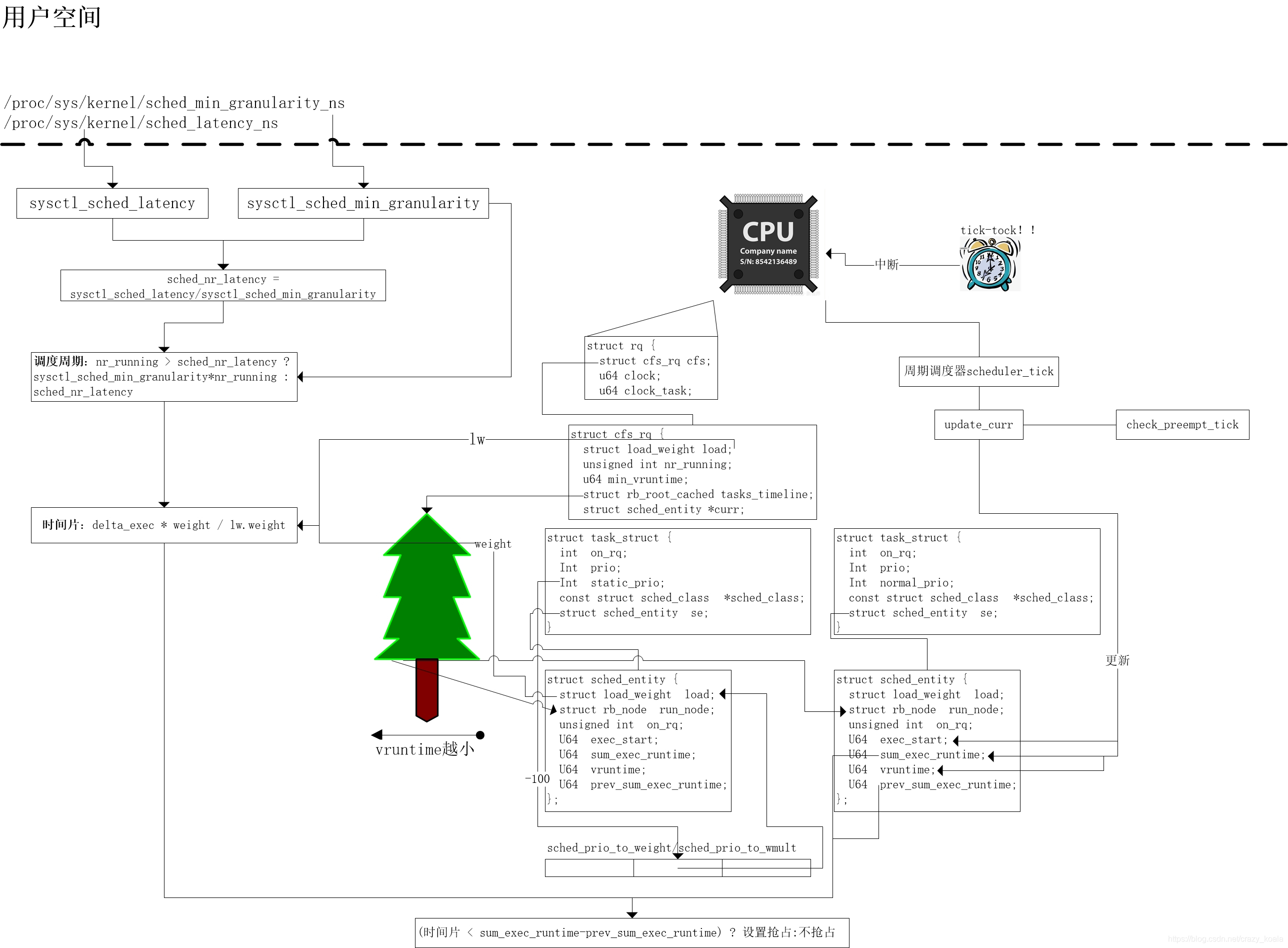

附一张图:
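To make the "smallest vruntime runs first" rule concrete, here is a minimal user-space sketch, not kernel code. It assumes a hypothetical 1 ms tick, two hypothetical tasks with weights 2048 and 1024, and NICE_0_LOAD taken as 1024. On every tick it runs the task with the smallest vruntime and advances that task's vruntime by tick × NICE_0_LOAD / weight. The names `toy_task`, `pick_min_vruntime` and `simulate` are made up for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define NICE_0_LOAD 1024ULL    /* assumed nice-0 weight, as in the weight table */
#define TICK_NS     1000000ULL /* hypothetical 1 ms tick */

struct toy_task {
	uint64_t weight;   /* hypothetical weight */
	uint64_t vruntime; /* weighted virtual run time in ns */
	int ticks_run;     /* how many ticks this task was picked */
};

/* Index of the task with the smallest vruntime; ties go to the lower index. */
static int pick_min_vruntime(const struct toy_task *t, int n)
{
	int best = 0;

	for (int i = 1; i < n; i++)
		if (t[i].vruntime < t[best].vruntime)
			best = i;
	return best;
}

/* Run `ticks` ticks, charging each tick to the picked task's vruntime. */
static void simulate(struct toy_task *t, int n, int ticks)
{
	assert(n > 0);
	for (int k = 0; k < ticks; k++) {
		int i = pick_min_vruntime(t, n);

		t[i].ticks_run++;
		/* heavier tasks accumulate vruntime more slowly */
		t[i].vruntime += TICK_NS * NICE_0_LOAD / t[i].weight;
	}
}
```

With weights 2048 and 1024 over 300 ticks, the heavier task is picked 200 times and the lighter one 100 times, and both end with the same vruntime. The CPU time splits 2:1 while the virtual time stays equal.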

1. How is the scheduling period determined?

CFS introduces a dynamically changing scheduling period, `period`. Consider two parameters that CFS exposes to users:

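```c
{ // literally "minimum scheduling granularity": the least CPU time a process gets each time it is scheduled
	.procname = "sched_min_granularity_ns",
	.data = &sysctl_sched_min_granularity,
},
{ // literally "scheduling latency": every runnable process should be scheduled again within this latency
	.procname = "sched_latency_ns",
	.data = &sysctl_sched_latency,
},
```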

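Consider the worst case: there is only one CPU, and all runnable processes have the same priority and therefore equal time slices. Once a process has run, it must wait for every other process to run before it can be scheduled again, so one full round takes nr_running × sysctl_sched_min_granularity. Both user-set parameters can therefore be honored at the same time only when the number of runnable processes on the rq, nr_running, does not exceed sysctl_sched_latency / sysctl_sched_min_granularity.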

```c
// Whenever the minimum granularity or the scheduling latency is updated, sched_nr_latency is recomputed
sched_nr_latency = DIV_ROUND_UP(sysctl_sched_latency, sysctl_sched_min_granularity);

// The dynamic scheduling period is computed from sched_nr_latency
static u64 __sched_period(unsigned long nr_running)
{
	if (unlikely(nr_running > sched_nr_latency))
		return nr_running * sysctl_sched_min_granularity;
	else
		return sysctl_sched_latency;
}
```

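The code shows that when there are too many processes, CFS gives up on the scheduling latency and only guarantees the minimum granularity. Why honor the minimum granularity instead of the user-set latency? Without a minimum granularity, frequent preemption would waste even more time on context switches and further worsen an already overloaded CPU.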
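The period rule can be checked in isolation with a small sketch. It assumes the kernel's unscaled base defaults of 6 ms for `sched_latency_ns` and 0.75 ms for `sched_min_granularity_ns`. Real systems usually scale both by (1 + ilog2(ncpus)), so actual values may differ. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed values: unscaled defaults of 6 ms and 0.75 ms. */
static const uint64_t sched_latency_ns = 6000000ULL;
static const uint64_t sched_min_granularity_ns = 750000ULL;

/* Same rounding as the kernel's DIV_ROUND_UP macro. */
static uint64_t div_round_up(uint64_t n, uint64_t d)
{
	assert(d > 0);
	return (n + d - 1) / d;
}

/* Mirrors __sched_period(): fixed latency until there are more than
 * sched_nr_latency tasks, then nr_running * min_granularity. */
static uint64_t sched_period(uint64_t nr_running)
{
	uint64_t nr_latency = div_round_up(sched_latency_ns, sched_min_granularity_ns);

	if (nr_running > nr_latency)
		return nr_running * sched_min_granularity_ns;
	return sched_latency_ns;
}
```

With these values, sched_nr_latency is 8. Up to 8 runnable tasks share a 6 ms period. A 9th task stretches the period to 6.75 ms, so each equal-weight task still gets 0.75 ms.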

2. How does CFS allocate time slices?

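CFS introduces the concept of vruntime (virtual run time). To keep all processes' vruntime roughly equal, pick_next_task always chooses the process with the smallest vruntime as the next one to run.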

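However, vruntime is not the same as wall-clock time; it is weighted by priority. After running the same 10 ms, a high-priority process's vruntime might grow by 1 while a low-priority process's grows by 10.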

Here are the relevant members of se and cfs_rq:

task_struct.se:

```c
struct sched_entity {
	struct load_weight load;
	unsigned int on_rq;
};
```

cfs_rq:

```c
struct cfs_rq {
	struct load_weight load;
	unsigned int nr_running, h_nr_running;
	u64 min_vruntime;
};
```

load_weight:

```c
struct load_weight {
	unsigned long weight;
	u32 inv_weight;
};
```

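The key structure is load_weight, which literally means "weight". The load_weight in a scheduling entity is that process's weight, and the load_weight in the run queue is the total weight of the whole queue.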

Now let's see how CFS allocates time slices:

```c
static u64 sched_slice(struct cfs_rq *cfs_rq, struct sched_entity *se)
{
	// Get the current scheduling period
	u64 slice = __sched_period(cfs_rq->nr_running + !se->on_rq);

	for_each_sched_entity(se) {
		struct load_weight *load;
		struct load_weight lw;

		// Get the cfs_rq that se belongs to
		cfs_rq = cfs_rq_of(se);
		// Point load at the cfs_rq's load
		load = &cfs_rq->load;

		// If se is not on the rq's ready queue, add its weight to a copy of the
		// rq's load_weight in the temporary lw, and point load at lw instead
		if (unlikely(!se->on_rq)) {
			lw = cfs_rq->load;
			lw.weight += se->load.weight;
			lw.inv_weight = 0;	// forces __calc_delta to recompute the inverse
			load = &lw;
		}
		// Call __calc_delta to compute this entity's share of the slice
		slice = __calc_delta(slice, se->load.weight, load);
	}
	return slice;
}
```
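The following flattened sketch of sched_slice() covers the no-group-scheduling case, where the for_each_sched_entity() loop runs once. It assumes the same unscaled defaults (6 ms latency, 0.75 ms minimum granularity) and uses plain division in place of __calc_delta(). The names `period_for` and `toy_sched_slice` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

static const uint64_t latency_ns = 6000000ULL; /* assumed default */
static const uint64_t min_gran_ns = 750000ULL; /* assumed default */

/* Same rule as __sched_period(); sched_nr_latency works out to 8 here. */
static uint64_t period_for(uint64_t nr_running)
{
	uint64_t nr_latency = (latency_ns + min_gran_ns - 1) / min_gran_ns;

	return nr_running > nr_latency ? nr_running * min_gran_ns : latency_ns;
}

/* period * se_weight / total_weight. An entity that is not queued yet is
 * counted in both nr_running and the total weight, like sched_slice(). */
static uint64_t toy_sched_slice(uint64_t rq_nr_running, uint64_t rq_weight,
				uint64_t se_weight, int se_on_rq)
{
	uint64_t nr = rq_nr_running + !se_on_rq;
	uint64_t total = rq_weight + (se_on_rq ? 0 : se_weight);

	assert(total > 0);
	return period_for(nr) * se_weight / total;
}
```

Two nice-0 tasks each get 3 ms of the 6 ms period. A nice-0 and a nice-1 task (total weight 1844) get about 3.33 ms and 2.67 ms. Nine nice-0 tasks each get exactly the 0.75 ms minimum granularity.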

```c
/*
 * delta_exec * weight / lw.weight
 *   OR
 * (delta_exec * (weight * lw->inv_weight)) >> WMULT_SHIFT
 */
static u64 __calc_delta(u64 delta_exec, unsigned long weight, struct load_weight *lw)
{
	......
}
```

From the code, sched_slice computes how much time a given se should occupy within one scheduling period. It prepares three arguments for __calc_delta:

1. The time to be divided, delta_exec

2. The scheduling entity's weight, weight

3. The total weight of all scheduling entities, lw

Finally, __calc_delta computes the share for se. Its implementation is fairly involved, but its comment is clear: the return value takes one of two forms:

delta_exec * weight / lw.weight

or

(delta_exec * (weight * lw->inv_weight)) >> WMULT_SHIFT

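The first form is easy to understand. Suppose two processes share 10 s of CPU time and processes A and B have weights 2 and 3. Then A gets 10*2/(2+3) = 4 s and B gets 10*3/(2+3) = 6 s. With weights 1 and 3, however, the shares are 2.5 s and 7.5 s, which are not integers and would call for floating-point arithmetic.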

The second form solves the floating-point problem:

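The result is the same. inv_weight is precomputed as 2^WMULT_SHIFT / lw.weight (WMULT_SHIFT is 32), so (weight * inv_weight) >> WMULT_SHIFT is a fixed-point version of weight / lw.weight. This avoids floating point and the precision lost when two integers are divided directly. As the comment on sched_prio_to_wmult further down notes, it also turns a division into cheaper multiplications and a shift.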
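The two forms can be compared side by side in a simplified sketch. It truncates when precomputing the inverse, whereas the kernel table rounds. It is only valid while the 64-bit product cannot overflow; the real __calc_delta() shifts its operands to handle larger values. The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define WMULT_SHIFT 32

/* Form 1: delta_exec * weight / total, one integer division per call. */
static uint64_t calc_delta_div(uint64_t delta_exec, uint64_t weight, uint64_t total)
{
	return delta_exec * weight / total;
}

/* Precompute inv = 2^32 / total once (truncating; total must exceed 1
 * so the result fits in 32 bits). */
static uint32_t inv_of(uint64_t total)
{
	assert(total > 1);
	return (uint32_t)((1ULL << WMULT_SHIFT) / total);
}

/* Form 2: (delta_exec * (weight * inv)) >> WMULT_SHIFT. No division.
 * With weight <= total, weight * inv <= 2^32, so the product fits in
 * 64 bits as long as delta_exec < 2^32. */
static uint64_t calc_delta_inv(uint64_t delta_exec, uint64_t weight, uint32_t inv)
{
	assert(delta_exec < (1ULL << 32));
	return (delta_exec * (weight * inv)) >> WMULT_SHIFT;
}
```

For a 6 ms period split between weights 1024 and 820, both forms agree to within a couple of nanoseconds. The fixed-point form never exceeds the exact quotient because the inverse is truncated.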

3. Where does the load_weight in a scheduling entity se come from, and what is it based on?

In do_fork->sched_fork->set_load_weight, the se's load_weight is initialized as follows:

```c
load->weight = sched_prio_to_weight[prio];
load->inv_weight = sched_prio_to_wmult[prio];
```

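As the array names suggest, weight is derived from priority, and from the analysis above inv_weight can in turn be derived from weight. The weight and inv_weight for every normal-process priority are precomputed, stored, and looked up by priority index, which avoids repeated computation: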

```c
/*
 * Nice levels are multiplicative, with a gentle 10% change for every
 * nice level changed. I.e. when a CPU-bound task goes from nice 0 to
 * nice 1, it will get ~10% less CPU time than another CPU-bound task
 * that remained on nice 0.
 *
 * The "10% effect" is relative and cumulative: from _any_ nice level,
 * if you go up 1 level, it's -10% CPU usage, if you go down 1 level
 * it's +10% CPU usage. (to achieve that we use a multiplier of 1.25.
 * If a task goes up by ~10% and another task goes down by ~10% then
 * the relative distance between them is ~25%.)
 */
const int sched_prio_to_weight[40] = {
 /* -20 */     88761,     71755,     56483,     46273,     36291,
 /* -15 */     29154,     23254,     18705,     14949,     11916,
 /* -10 */      9548,      7620,      6100,      4904,      3906,
 /*  -5 */      3121,      2501,      1991,      1586,      1277,
 /*   0 */      1024,       820,       655,       526,       423,
 /*   5 */       335,       272,       215,       172,       137,
 /*  10 */       110,        87,        70,        56,        45,
 /*  15 */        36,        29,        23,        18,        15,
};

/*
 * Inverse (2^32/x) values of the sched_prio_to_weight[] array, precalculated.
 *
 * In cases where the weight does not change often, we can use the
 * precalculated inverse to speed up arithmetics by turning divisions
 * into multiplications:
 */
const u32 sched_prio_to_wmult[40] = {
 /* -20 */     48388,     59856,     76040,     92818,    118348,
 /* -15 */    147320,    184698,    229616,    287308,    360437,
 /* -10 */    449829,    563644,    704093,    875809,   1099582,
 /*  -5 */   1376151,   1717300,   2157191,   2708050,   3363326,
 /*   0 */   4194304,   5237765,   6557202,   8165337,  10153587,
 /*   5 */  12820798,  15790321,  19976592,  24970740,  31350126,
 /*  10 */  39045157,  49367440,  61356676,  76695844,  95443717,
 /*  15 */ 119304647, 148102320, 186737708, 238609294, 286331153,
};
```
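The relation between the two tables can be spot-checked with a tiny sketch. The assumption here is that each sched_prio_to_wmult[] entry equals 2^32 / weight rounded to the nearest integer. That holds for the sample entries used below, but it was not derived from the kernel's own generation script. `wmult_of` is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/* 2^32 / weight, rounded to nearest (weight must exceed 1 so the
 * result fits in 32 bits). */
static uint32_t wmult_of(uint32_t weight)
{
	assert(weight > 1);
	return (uint32_t)(((1ULL << 32) + weight / 2) / weight);
}
```

For example, nice 0 (weight 1024) maps exactly to 4194304 = 2^22. Nice 1 (weight 820) gives 5237764.99..., which rounds to the table's 5237765.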

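The comment is very clear. Lowering the nice value by one level (higher priority) yields roughly 10% more CPU time, and raising it by one level yields roughly 10% less, because adjacent weights differ by a factor of about 1.25. Let's plug in the numbers: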

Suppose two processes have nice values 0 and 1. Their shares of the scheduling period are:

1024/(1024+820) ≈ 55.5%

820/(1024+820) ≈ 44.5%

55.5% - 44.5% ≈ 11%, which is the "~10%" gap the comment describes
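The same arithmetic in integer form is shown below, computing each share in per-mille (0.1% units, truncated), which is how one would do it without floating point. `share_permille` is a hypothetical helper, not a kernel function.

```c
#include <assert.h>
#include <stdint.h>

/* Share of the period in per-mille, truncated: weight * 1000 / total. */
static uint32_t share_permille(uint32_t weight, uint32_t total)
{
	assert(total > 0 && weight <= total);
	return (uint32_t)((uint64_t)weight * 1000 / total);
}
```

Two nice-0 tasks get 500‰ each. After one moves to nice 1, the shares become 555‰ and 444‰, a gap of 111‰ (about 11 percentage points).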

4. Under what conditions does CFS preempt the current process?

Among the methods a scheduler class implements, two are mainly related to preemption:

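task_tick: after the periodic scheduler updates its statistics, it calls the task_tick of the current process's scheduler class to check for preemption.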

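check_preempt_curr: given a process descriptor p, checks whether p may preempt the current process. It is typically called after forking a new process, waking up a process, or changing a process's priority, to decide whether that process should preempt the one currently running.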

Let's look at task_tick_fair:

```c
static void task_tick_fair(struct rq *rq, struct task_struct *curr, int queued)
{
	struct cfs_rq *cfs_rq;
	struct sched_entity *se = &curr->se;

	// Without group scheduling, this loop runs only once
	for_each_sched_entity(se) {
		cfs_rq = cfs_rq_of(se);
		entity_tick(cfs_rq, se, queued);
	}
}

static void entity_tick(struct cfs_rq *cfs_rq, struct sched_entity *curr, int queued)
{
	/*
	 * Update run-time statistics of the 'current'.
	 */
	// First account the current process's run time
	update_curr(cfs_rq);

	if (cfs_rq->nr_running > 1)
		// Check whether a reschedule is needed
		check_preempt_tick(cfs_rq, curr);
}
```

update_curr updates the run-time statistics of the current cfs_rq:

```c
static void update_curr(struct cfs_rq *cfs_rq)
{
	struct sched_entity *curr = cfs_rq->curr;
	// This rq's task clock in ns, which can exclude time spent handling interrupts
	u64 now = rq_clock_task(rq_of(cfs_rq));
	u64 delta_exec;

	if (unlikely(!curr))
		return;

	/* begin: time elapsed since the last update_curr() call */
	delta_exec = now - curr->exec_start;
	if (unlikely((s64)delta_exec <= 0))
		return;

	curr->exec_start = now;
	/* end: time elapsed since the last update_curr() call */

	// Add the elapsed time to sum_exec_runtime
	curr->sum_exec_runtime += delta_exec;
	// Convert the elapsed time by weight and add it to vruntime
	curr->vruntime += calc_delta_fair(delta_exec, curr);
	// Update the queue's min_vruntime
	update_min_vruntime(cfs_rq);

	if (entity_is_task(curr)) {
		struct task_struct *curtask = task_of(curr);

		trace_sched_stat_runtime(curtask, delta_exec, curr->vruntime);
		cpuacct_charge(curtask, delta_exec);
		account_group_exec_runtime(curtask, delta_exec);
	}

	account_cfs_rq_runtime(cfs_rq, delta_exec);
}
```

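calc_delta_fair converts a time delta into vruntime. For a nice-0 entity (weight == NICE_0_LOAD) the delta is used unchanged; otherwise it is scaled to delta * NICE_0_LOAD / weight via __calc_delta:

```c
static inline u64 calc_delta_fair(u64 delta, struct sched_entity *se)
{
	if (unlikely(se->load.weight != NICE_0_LOAD))
		delta = __calc_delta(delta, NICE_0_LOAD, &se->load);

	return delta;
}
```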

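From this it is easy to see how CFS divides CPU time between high- and low-priority processes. The main logic is:

1. A high-priority process is allocated a larger time slice

2. A high-priority process's vruntime advances more slowly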
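The second point can be seen numerically in a simplified sketch of calc_delta_fair(). It assumes NICE_0_LOAD is 1024 (ignoring the kernel's extra load-resolution scaling on 64-bit builds, which cancels out in the ratio) and uses plain division instead of __calc_delta(). `toy_calc_delta_fair` is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

#define NICE_0_LOAD 1024ULL /* assumed nice-0 weight without load-resolution scaling */

/* Convert wall-clock run time into vruntime: delta * NICE_0_LOAD / weight. */
static uint64_t toy_calc_delta_fair(uint64_t delta_ns, uint64_t weight)
{
	assert(weight > 0);
	if (weight == NICE_0_LOAD)
		return delta_ns; /* nice 0: vruntime advances at wall-clock speed */
	return delta_ns * NICE_0_LOAD / weight;
}
```

For 1 ms of real run time, a nice-0 task's vruntime grows by 1 ms. A nice -5 task (weight 3121) grows by about 0.33 ms, and a nice 5 task (weight 335) grows by about 3.06 ms.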

Now back to check_preempt_tick:

```c
static void check_preempt_tick(struct cfs_rq *cfs_rq, struct sched_entity *curr)
{
	unsigned long ideal_runtime, delta_exec;
	struct sched_entity *se;
	s64 delta;

	// Time slice computed by sched_slice above; the period it splits already accounts for the minimum granularity
	ideal_runtime = sched_slice(cfs_rq, curr);
	// How long the current process has run since it was last picked
	delta_exec = curr->sum_exec_runtime - curr->prev_sum_exec_runtime;
	// It has used up its slice: request a reschedule
	if (delta_exec > ideal_runtime) {
		resched_curr(rq_of(cfs_rq));
		return;
	}

	/* The code below looks a bit odd; it appears to be related to wakeup preemption.
	 * Ensure that a task that missed wakeup preemption by a
	 * narrow margin doesn't have to wait for a full slice.
	 * This also mitigates buddy induced latencies under load.
	 */
	if (delta_exec < sysctl_sched_min_granularity)
		return;

	se = __pick_first_entity(cfs_rq);
	delta = curr->vruntime - se->vruntime;

	if (delta < 0)
		return;

	if (delta > ideal_runtime)
		resched_curr(rq_of(cfs_rq));
}
```

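As check_preempt_tick shows, ideal_runtime (the time slice allocated to the process) is the yardstick the periodic scheduler ultimately uses to decide on preemption. A reschedule happens either when the process has run longer than ideal_runtime, or when its vruntime is ahead of the leftmost entity's by more than ideal_runtime.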
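The decision logic can be restated as a hypothetical pure function so that each branch is easy to test. All times are in nanoseconds. `min_gran_ns` stands in for sysctl_sched_min_granularity, and `leftmost_vruntime` is the vruntime of the entity returned by __pick_first_entity(). The name `tick_should_resched` is made up.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Returns true when check_preempt_tick() would call resched_curr(). */
static bool tick_should_resched(uint64_t ideal_runtime, uint64_t delta_exec,
				uint64_t min_gran_ns,
				uint64_t curr_vruntime, uint64_t leftmost_vruntime)
{
	/* 1. curr has used up its slice */
	if (delta_exec > ideal_runtime)
		return true;
	/* 2. never preempt before the minimum granularity has elapsed */
	if (delta_exec < min_gran_ns)
		return false;
	/* 3. the leftmost task trails curr by more than a whole slice */
	int64_t delta = (int64_t)(curr_vruntime - leftmost_vruntime);
	if (delta < 0)
		return false;
	return delta > (int64_t)ideal_runtime;
}
```

With a 3 ms slice and a 0.75 ms minimum granularity, a task that has run 1 ms is preempted only if its vruntime leads the leftmost task's by more than 3 ms.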

Next, check_preempt_wakeup:

```c
static void check_preempt_wakeup(struct rq *rq, struct task_struct *p, int wake_flags)
{
	...
	update_curr(cfs_rq_of(se));
	// se is the current process's scheduling entity; pse is the entity being checked
	if (wakeup_preempt_entity(se, pse) == 1) {
		...
		goto preempt;
	}
	return;

preempt:
	resched_curr(rq);
	...
}
```

```c
static int wakeup_preempt_entity(struct sched_entity *curr, struct sched_entity *se)
{
	s64 gran, vdiff = curr->vruntime - se->vruntime;

	if (vdiff <= 0)
		return -1;

	gran = wakeup_gran(curr, se);
	if (vdiff > gran)
		return 1;

	// Here 0 < vdiff <= gran
	return 0;
}

static unsigned long wakeup_gran(struct sched_entity *curr, struct sched_entity *se)
{
	unsigned long gran = sysctl_sched_wakeup_granularity;

	return calc_delta_fair(gran, se);
}
```

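sysctl_sched_wakeup_granularity is another user-tunable parameter, literally the "wakeup granularity". It prevents processes that sleep and wake up frequently for short periods from preempting so often that performance suffers. Its default is 1 ms. The woken process can preempt only when the current process's vruntime exceeds the woken process's vruntime by more than gran * (NICE_0_LOAD / se->load.weight), where se is the woken entity.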
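The wakeup rule can be sketched as follows, assuming the 1 ms base default for the wakeup granularity and a nice-0 weight of 1024. The function names are hypothetical, and plain division replaces calc_delta_fair(). The return convention follows wakeup_preempt_entity().

```c
#include <assert.h>
#include <stdint.h>

#define NICE_0_LOAD    1024LL    /* assumed nice-0 weight */
#define WAKEUP_GRAN_NS 1000000LL /* assumed: the 1 ms base default */

/* gran scaled by the woken entity's weight, as wakeup_gran() does. */
static int64_t toy_wakeup_gran(int64_t woken_weight)
{
	assert(woken_weight > 0);
	return WAKEUP_GRAN_NS * NICE_0_LOAD / woken_weight;
}

/* -1: the woken task is not behind curr; 1: preempt;
 *  0: behind, but within the granularity. */
static int toy_wakeup_preempt(int64_t curr_vruntime, int64_t woken_vruntime,
			      int64_t woken_weight)
{
	int64_t vdiff = curr_vruntime - woken_vruntime;

	if (vdiff <= 0)
		return -1;
	if (vdiff > toy_wakeup_gran(woken_weight))
		return 1;
	return 0;
}
```

A nice-0 task that trails curr by 0.5 ms does not preempt, because its granularity is 1 ms. A nice -5 task (weight 3121) with the same lead does preempt, because its scaled granularity is only about 0.33 ms.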

5. What is the queue's min_vruntime used for?

The queue's min_vruntime could be computed on demand, yet CFS deliberately reserves a field for it. That space-for-time trade-off suggests min_vruntime must be useful.

First, here is how min_vruntime is computed:

```c
static void update_min_vruntime(struct cfs_rq *cfs_rq)
{
	struct sched_entity *curr = cfs_rq->curr;
	struct rb_node *leftmost = rb_first_cached(&cfs_rq->tasks_timeline);
	u64 vruntime = cfs_rq->min_vruntime;

	if (curr) {
		if (curr->on_rq)
			vruntime = curr->vruntime;
		else
			curr = NULL;
	}

	if (leftmost) { /* non-empty tree */
		struct sched_entity *se;
		se = rb_entry(leftmost, struct sched_entity, run_node);

		/* curr is no longer on the rq, so its vruntime is irrelevant */
		if (!curr)
			vruntime = se->vruntime;
		else /* curr->on_rq is true: take the smaller of the running entity's and the leftmost entity's vruntime */
			vruntime = min_vruntime(vruntime, se->vruntime);
	}

	/* ensure we never gain time by being placed backwards. Keeps min_vruntime monotonically non-decreasing */
	cfs_rq->min_vruntime = max_vruntime(cfs_rq->min_vruntime, vruntime);
}
```
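The same rule can be expressed with flattened, hypothetical inputs. `has_curr` means "curr exists and is on the rq", and `has_leftmost` means the tree is non-empty. One simplification: the kernel's min_vruntime()/max_vruntime() compare via a signed difference to survive u64 wraparound, while this sketch uses plain comparisons.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

static uint64_t toy_update_min_vruntime(uint64_t min_vruntime,
					bool has_curr, uint64_t curr_vruntime,
					bool has_leftmost, uint64_t leftmost_vruntime)
{
	uint64_t v = min_vruntime;

	if (has_curr)
		v = curr_vruntime;
	if (has_leftmost)
		v = (has_curr && curr_vruntime < leftmost_vruntime) ? curr_vruntime
								    : leftmost_vruntime;
	/* never move min_vruntime backwards */
	return v > min_vruntime ? v : min_vruntime;
}
```

If the smallest candidate (for instance a leftmost entity at 90 while min_vruntime is 100) lies behind the current value, min_vruntime simply stays put.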

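The processes in the CFS red-black tree are all runnable or running, and their vruntime keeps increasing over time. Three situations, however, bring new members into the tree and need special handling to avoid unfairness (a vruntime that is too large or too small would starve the process itself or starve others):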

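1. A new process joins: the parent's current vruntime is assigned to the child, plus a small penalty. If the child is configured to run first, the parent's and child's vruntime are swapped when needed so that the child's is smaller. The child inherits the parent's vruntime but may actually run on another CPU's rq. So the current rq's min_vruntime is subtracted first, and the min_vruntime of the destination rq is added once it is decided which CPU the child goes to.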

2. A process switches from another scheduling policy to the normal policy: see 1.

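3. A process wakes up and rejoins the queue: while it was off the queue, a sleeping process's vruntime did not increase at all, so enqueuing it as-is could starve other processes. When a woken process is enqueued, its vruntime is therefore raised to at least the current rq's min_vruntime minus a small amount of compensation.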

Code related to the three cases above:

```c
static void task_fork_fair(struct task_struct *p)
{
	struct cfs_rq *cfs_rq;
	struct sched_entity *se = &p->se, *curr;
	struct rq *rq = this_rq();
	struct rq_flags rf;

	rq_lock(rq, &rf);
	update_rq_clock(rq);

	cfs_rq = task_cfs_rq(current);
	curr = cfs_rq->curr;
	if (curr) {
		update_curr(cfs_rq);
		// Copy the parent's vruntime to the new process
		se->vruntime = curr->vruntime;
	}
	place_entity(cfs_rq, se, 1);

	// If child-runs-first is set, make sure the child's vruntime is smaller than the parent's
	if (sysctl_sched_child_runs_first && curr && entity_before(curr, se)) {
		swap(curr->vruntime, se->vruntime);
		resched_curr(rq);
	}

	// A process created on this CPU will not necessarily run on this CPU; when it is
	// enqueued on the rq of the CPU that actually runs it, that rq's min_vruntime is added back
	se->vruntime -= cfs_rq->min_vruntime;
	rq_unlock(rq, &rf);
}
```
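To see why the fork path subtracts min_vruntime, consider a toy sketch with hypothetical helper names and numbers. A child whose vruntime is 3 ms ahead of the source rq's min_vruntime (1000 ms) should keep that 3 ms lead when enqueued on a destination rq whose min_vruntime is only 50 ms. Carrying the absolute 1003 ms over would instead put it far behind every task there.

```c
#include <assert.h>
#include <stdint.h>

/* On the source CPU: store vruntime relative to that rq's min_vruntime,
 * like se->vruntime -= cfs_rq->min_vruntime in task_fork_fair(). */
static uint64_t toy_make_relative(uint64_t vruntime, uint64_t src_min_vruntime)
{
	assert(vruntime >= src_min_vruntime);
	return vruntime - src_min_vruntime;
}

/* On the destination CPU: add that rq's min_vruntime back at enqueue time. */
static uint64_t toy_make_absolute(uint64_t rel_vruntime, uint64_t dst_min_vruntime)
{
	return rel_vruntime + dst_min_vruntime;
}
```

Only the relative offset travels between CPUs, so the child's standing relative to its new neighbours matches its standing on the CPU where it was forked.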

```c
static void place_entity(struct cfs_rq *cfs_rq, struct sched_entity *se, int initial)
{
	u64 vruntime = cfs_rq->min_vruntime;

	// A new process's vruntime is pushed forward by its own slice * (NICE_0_LOAD / weight)
	if (initial && sched_feat(START_DEBIT))
		vruntime += sched_vslice(cfs_rq, se);

	// A just-woken process's vruntime is reduced by the scheduling latency, or by half of it
	if (!initial) {
		unsigned long thresh = sysctl_sched_latency;

		/*
		 * Halve their sleep time's effect, to allow
		 * for a gentler effect of sleepers:
		 */
		if (sched_feat(GENTLE_FAIR_SLEEPERS))
			thresh >>= 1;

		vruntime -= thresh;
	}

	/* ensure we never gain time by being placed backwards. */
	se->vruntime = max_vruntime(se->vruntime, vruntime);
}
```
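Finally, here is a hypothetical pure-function version of place_entity() that makes the two placement rules testable. `vslice_ns` stands in for sched_vslice(cfs_rq, se), `latency_ns` for sysctl_sched_latency, and the two booleans for the START_DEBIT and GENTLE_FAIR_SLEEPERS features. Like the previous sketch, it uses plain comparisons rather than the kernel's wraparound-safe max_vruntime().

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

static uint64_t toy_place_entity(uint64_t min_vruntime, uint64_t se_vruntime,
				 bool initial, bool start_debit, bool gentle_sleepers,
				 uint64_t vslice_ns, uint64_t latency_ns)
{
	uint64_t v = min_vruntime;

	if (initial && start_debit)
		v += vslice_ns; /* a new task starts one virtual slice late */
	if (!initial) {
		uint64_t thresh = latency_ns;

		if (gentle_sleepers)
			thresh >>= 1; /* only half the latency as sleeper credit */
		assert(v >= thresh);
		v -= thresh;
	}
	/* placement never moves the entity's vruntime backwards */
	return se_vruntime > v ? se_vruntime : v;
}
```

With min_vruntime at 100 ms, a 6 ms latency and a 3 ms virtual slice, a long sleeper is placed at 97 ms (94 ms without GENTLE_FAIR_SLEEPERS). A task that slept only briefly keeps its own larger vruntime, and a newly forked task starts at 103 ms.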

Source: CSDN

Author: crazy_koala

Link: https://blog.csdn.net/crazy_koala/article/details/104196387