Transform Operators

1. map

```scala
val streamMap = stream.map { x => x * 2 }
```

2. flatMap

```scala
val streamFlatMap = stream.flatMap { x => x.split(" ") }
```

3. Filter

```scala
val streamFilter = stream.filter { x => x != null }
```
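Per element, Flink's map, flatMap and filter behave like the methods of the same name on Scala collections. The sketch below uses plain Scala lists (no Flink dependency) to show the difference: map emits exactly one output per input, flatMap emits zero or more, and filter either keeps or drops each element. The object and method names are illustrative only.

```scala
// Plain Scala collections, no Flink dependency: models the per-element
// behaviour of Flink's map, flatMap and filter on a finite input.
object BasicTransformsModel {
  // map: exactly one output per input element
  def doubled(xs: List[Int]): List[Int] = xs.map(x => x * 2)

  // flatMap: zero or more outputs per input element (here, one per word)
  def words(lines: List[String]): List[String] = lines.flatMap(line => line.split(" "))

  // filter: keeps an element only when the predicate returns true
  def nonNull(xs: List[String]): List[String] = xs.filter(x => x != null)
}
```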

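4. KeyBy

DataStream --> KeyedStream: logically splits a stream into disjoint partitions so that all elements with the same key end up in the same partition (one partition can hold many keys). Internally this is implemented by hashing the key. Keying by field position, as keyBy(0) does in the example below, requires a tuple type; a key selector function such as keyBy(_.channel) works with any element type.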
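The partitioning idea can be sketched in plain Scala. This is a deliberately simplified model: it assigns a key to `hashCode mod parallelism`, whereas Flink actually hashes the key into a key group first and then maps key groups to parallel subtasks. The point it illustrates is the guarantee, not the exact formula: every record with a given key lands in exactly one partition. `KeyByModel` and its methods are hypothetical names.

```scala
// Simplified model of keyBy partitioning, NOT Flink's exact algorithm:
// Flink hashes the key into a key group and then maps key groups to
// parallel subtasks. Here a plain hashCode modulo the parallelism is used.
object KeyByModel {
  def partitionOf(key: String, parallelism: Int): Int =
    Math.floorMod(key.hashCode, parallelism)

  // Groups (channel, count) records by the partition their key is assigned to.
  def partition(records: Seq[(String, Int)], parallelism: Int): Map[Int, Seq[(String, Int)]] =
    records.groupBy { case (channel, _) => partitionOf(channel, parallelism) }
}
```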

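5. Reduce

KeyedStream --> DataStream: a rolling aggregation on a keyed stream. It combines the current element with the previous aggregation result for the same key and produces a new value. The resulting stream contains the result of every aggregation step, not just the final result.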
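To make "one result per step" concrete, here is a plain-Scala model of a rolling reduce (no Flink dependency). It keeps the last result per key, playing the role of Flink's keyed state, and emits an updated value for every input element. `RollingReduceModel` is a hypothetical name; the combine function mirrors the `(ch1._1, ch1._2 + ch2._2)` logic in the job below.

```scala
// Plain-Scala model of a rolling reduce on a keyed stream (no Flink dependency).
// For every incoming element it combines the element with the last result for
// the same key and emits the new result, so the output has one record per input.
object RollingReduceModel {
  def rollingReduce[K, V](input: Seq[(K, V)])(combine: (V, V) => V): Seq[(K, V)] = {
    // Per-key "last result": plays the role of Flink's keyed state
    var last = Map.empty[K, V]
    input.map { case (key, value) =>
      val updated = last.get(key).map(prev => combine(prev, value)).getOrElse(value)
      last = last.updated(key, updated)
      (key, updated)
    }
  }
}
```

For the input `("appstore",1), ("huawei",1), ("appstore",1), ("appstore",1)` with `_ + _`, the model emits `("appstore",1), ("huawei",1), ("appstore",2), ("appstore",3)`: every intermediate total appears, just as in the printed output of the Flink job.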

```scala
package com.lxk.service

import com.alibaba.fastjson.JSON
import com.lxk.bean.UserLog
import com.lxk.util.FlinkKafkaUtil
import org.apache.flink.api.java.tuple.Tuple
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.scala.{DataStream, KeyedStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010

object StartupApp02 {
  def main(args: Array[String]): Unit = {
    val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val kafkaConsumer: FlinkKafkaConsumer010[String] = FlinkKafkaUtil.getConsumer("GMALL_STARTUP")
    val dstream: DataStream[String] = environment.addSource(kafkaConsumer)

    // Running count per channel
    val userLogDstream: DataStream[UserLog] = dstream.map { JSON.parseObject(_, classOf[UserLog]) }
    // Turn each log into (channel, 1) and key the stream by the channel (field 0)
    val keyedStream: KeyedStream[(String, Int), Tuple] =
      userLogDstream.map(userLog => (userLog.channel, 1)).keyBy(0)

    // reduce: add up the counts per channel (keyedStream.sum(1) gives the same result)
    keyedStream.reduce { (ch1, ch2) =>
      (ch1._1, ch1._2 + ch2._2)
    }.print().setParallelism(1)

    dstream.print()
    environment.execute()
  }
}
```

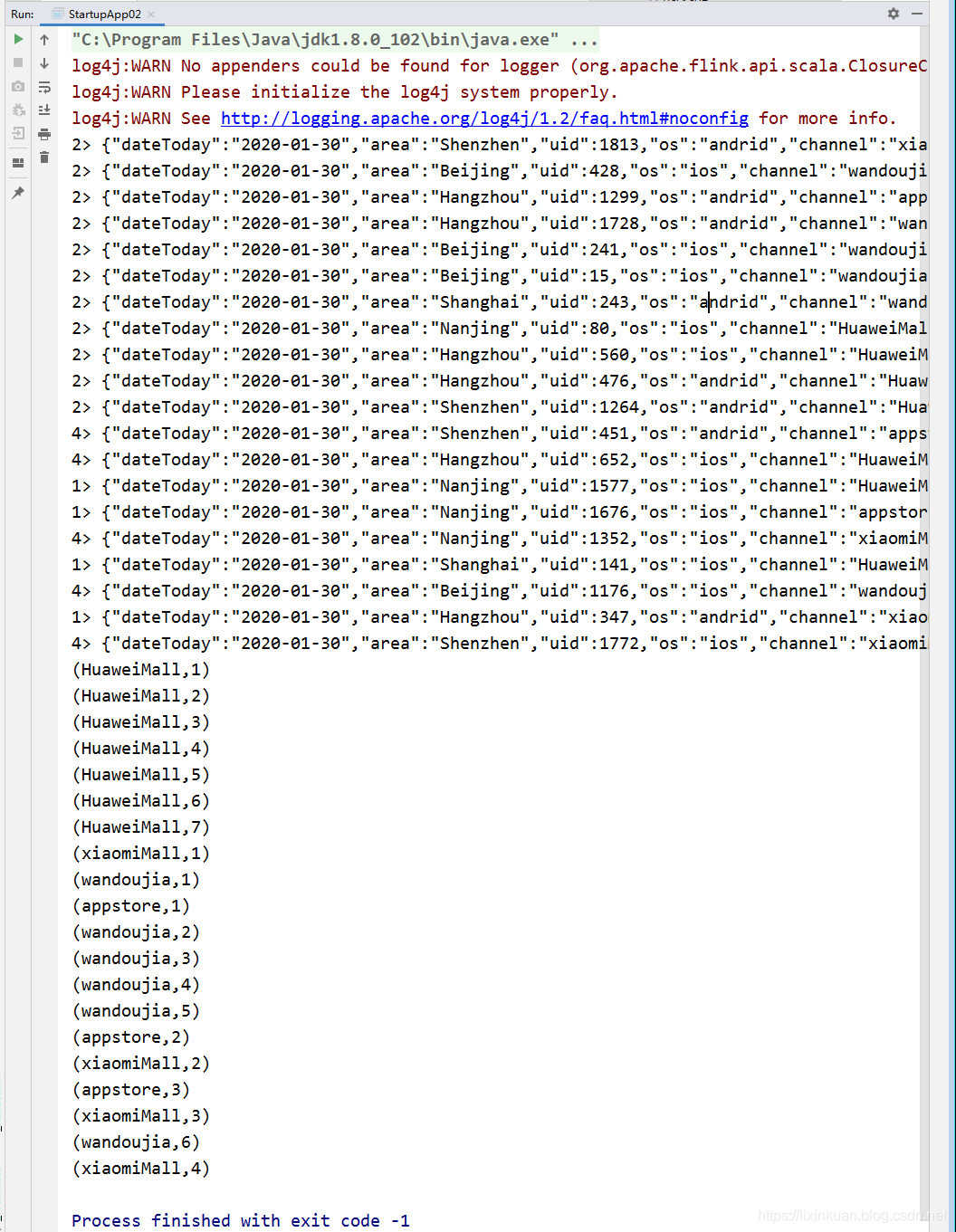

output:

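How does Flink keep the running totals?

Flink is a stateful stream-processing framework, and its state comes at two levels:

- 1) Operator state is bound to a parallel operator instance rather than to a key. It records how far processing has progressed in the pipeline; the Kafka source, for example, keeps its consumed offsets there, which is used to guarantee exactly-once semantics.

- 2) Keyed state is scoped to a single key and holds values accumulated during computation, such as the per-channel running total kept by the reduce above.

Both kinds of state are held in a StateBackend and persisted through the checkpoint mechanism. The StateBackend can keep state in memory (the default) or write it to files on disk. Checkpointing itself is disabled by default and has to be turned on, for example with environment.enableCheckpointing(5000) for a 5-second interval.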
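Why do both kinds of state belong in the same checkpoint? The following is a conceptual model only, not Flink's implementation: a hypothetical `Checkpoint` stores the source position (standing in for operator state such as Kafka offsets) together with the per-key counts (keyed state). After a simulated crash, both are restored and the input is replayed from the saved position, so the final counts match a failure-free run. If only the position were rewound and the counts kept, replayed records would be counted twice.

```scala
// Conceptual model only, not Flink's implementation: a checkpoint captures the
// source position (operator state, like Kafka offsets) together with the
// per-key counts (keyed state), and recovery restores both at once.
object CheckpointModel {
  final case class Checkpoint(offset: Int, counts: Map[String, Int])

  // Counts channels in `input`, checkpointing every `interval` records.
  // If `failAt` is set, the run "crashes" once when it reaches that offset,
  // restores the last checkpoint and replays the input from its offset.
  def run(input: Vector[String], interval: Int, failAt: Option[Int]): Map[String, Int] = {
    var checkpoint = Checkpoint(0, Map.empty)
    var offset = 0
    var counts = Map.empty[String, Int]
    var pendingFailure = failAt
    while (offset < input.length) {
      if (pendingFailure.contains(offset)) {
        pendingFailure = None
        offset = checkpoint.offset // rewind the source (operator state)
        counts = checkpoint.counts // roll back the running totals (keyed state)
      } else {
        val channel = input(offset)
        counts = counts.updated(channel, counts.getOrElse(channel, 0) + 1)
        offset += 1
        if (offset % interval == 0) checkpoint = Checkpoint(offset, counts)
      }
    }
    counts
  }
}
```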

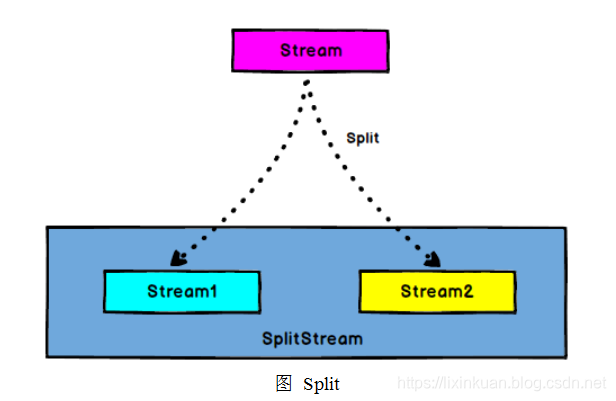

6. Split and Select

- Split

DataStream --> SplitStream: splits one DataStream into two or more DataStreams based on some characteristic of the elements.

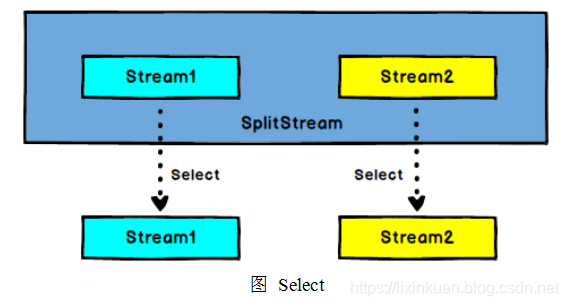

- Select

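SplitStream --> DataStream: selects one or more DataStreams from a SplitStream. Note that split and select were deprecated and then removed in Flink 1.12; newer versions use side outputs for this kind of routing.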

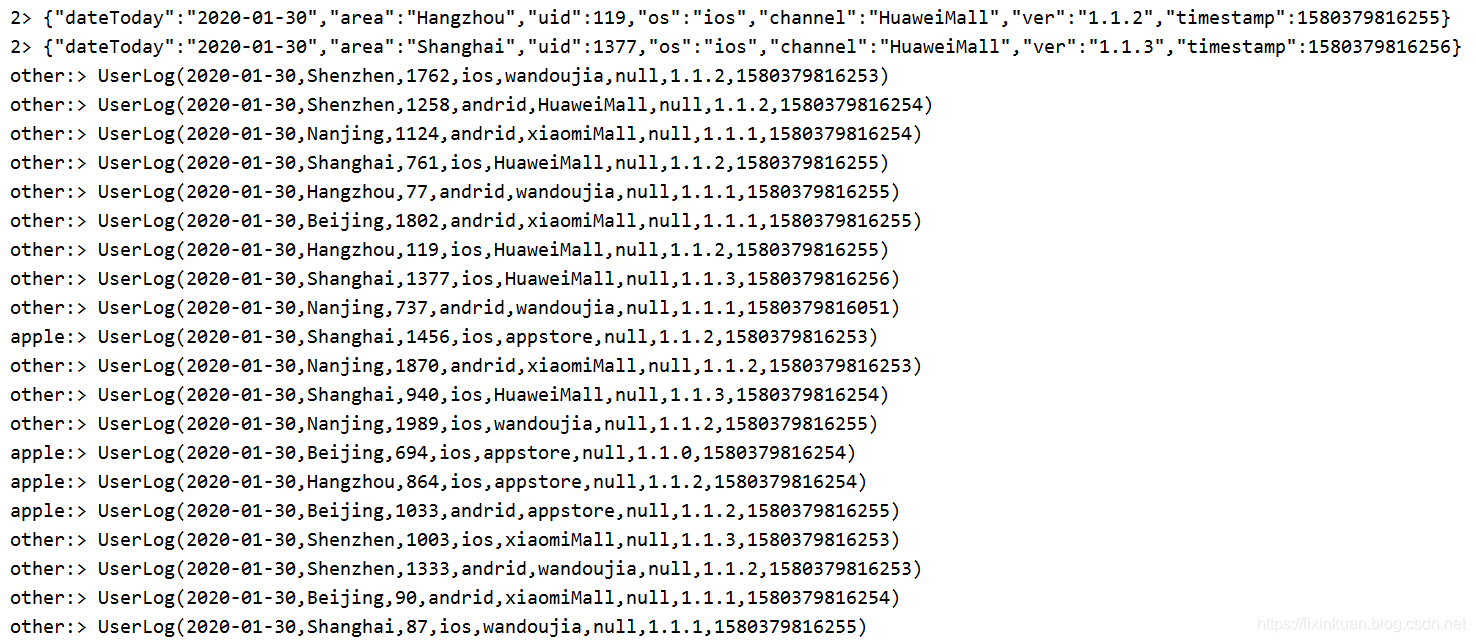
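Conceptually, split attaches one or more output names to every element, and select keeps the elements tagged with a given name. A plain-Scala sketch of that idea (no Flink dependency), using the same appstore/other routing rule as the example that follows; the local `UserLog` case class is a hypothetical stand-in for `com.lxk.bean.UserLog`.

```scala
// Plain-Scala model of split/select (no Flink dependency): split attaches
// output names to every element, select keeps the elements tagged with a name.
object SplitSelectModel {
  // Hypothetical stand-in for com.lxk.bean.UserLog, reduced to one field
  final case class UserLog(channel: String)

  // Routing rule: "appstore" records go to "appstore", everything else to "other"
  def tags(log: UserLog): List[String] =
    if ("appstore" == log.channel) List("appstore") else List("other")

  // select(name): the elements whose tags include that name
  def select(logs: Seq[UserLog], name: String): Seq[UserLog] =
    logs.filter(log => tags(log).contains(name))
}
```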

Requirement: separate the appstore data from the data of all other channels, producing two streams.

```scala
package com.lxk.service

import com.alibaba.fastjson.JSON
import com.lxk.bean.UserLog
import com.lxk.util.FlinkKafkaUtil
import org.apache.flink.api.scala._
import org.apache.flink.streaming.api.scala.{DataStream, SplitStream, StreamExecutionEnvironment}
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010

object StartupApp03 {
  def main(args: Array[String]): Unit = {
    val environment: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val kafkaConsumer: FlinkKafkaConsumer010[String] = FlinkKafkaUtil.getConsumer("GMALL_STARTUP")
    val dstream: DataStream[String] = environment.addSource(kafkaConsumer)

    // Split appstore and all other channels into two independent streams
    val userLogDstream: DataStream[UserLog] = dstream.map { JSON.parseObject(_, classOf[UserLog]) }
    val splitStream: SplitStream[UserLog] = userLogDstream.split { log =>
      // Tag each record with the name of the output it should go to
      if ("appstore" == log.channel) List("appstore") else List("other")
    }

    val appStoreStream: DataStream[UserLog] = splitStream.select("appstore")
    appStoreStream.print("apple:").setParallelism(1)

    val otherStream: DataStream[UserLog] = splitStream.select("other")
    otherStream.print("other:").setParallelism(1)

    dstream.print()
    environment.execute()
  }
}
```

output:

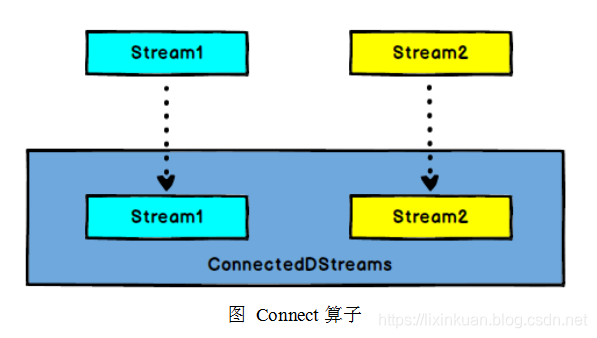

7、Connect和 CoMap

DataStream,DataStream --> ConnectedStreams:连接两个保持他们类型的数据流,两个数据流被Connect之后,只是被放在了一个同一个流中,内部依然保持各自的数据和形式不发生任何变化,两个流相互独立。

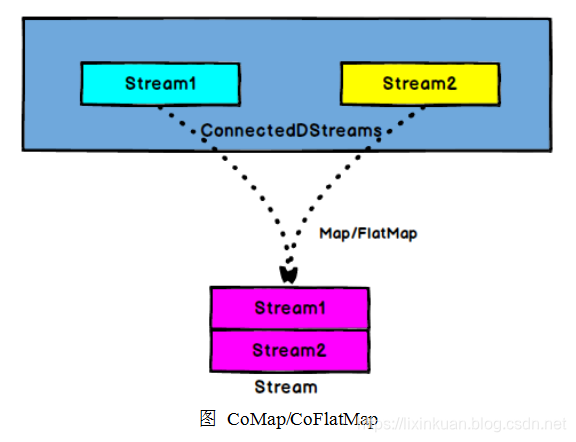

CoMap,CoFlatMap

ConnectedStreams --> DataStream:作用于ConnectedStreams上,功能与map和flatMap一样,对ConnectedStreams中的每一个Stream分别进行map和flatMap处理。
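One way to picture a connected stream is as a sequence of `Either` values: each element remembers which input it came from, and the two inputs may have different types. CoMap then applies one function per side, and both functions must return the same type. This is a plain-Scala model (no Flink dependency); `ConnectCoMapModel` is a hypothetical name, and the `Int`/`String` inputs are chosen to show that connect does not require matching types.

```scala
// Plain-Scala model of connect + CoMap (no Flink dependency). A connected
// stream carries elements of two possibly different types side by side;
// Either marks which input each element came from. CoMap applies one
// function per input, and both must produce the same result type.
object ConnectCoMapModel {
  def coMap[A, B, R](connected: Seq[Either[A, B]])(f1: A => R, f2: B => R): Seq[R] =
    connected.map {
      case Left(a)  => f1(a)  // element from the first stream
      case Right(b) => f2(b)  // element from the second stream
    }
}
```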

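```scala
// Continues StartupApp03.main after splitStream is created;
// also add ConnectedStreams to the org.apache.flink.streaming.api.scala import
val appStoreStream: DataStream[UserLog] = splitStream.select("appstore")
// appStoreStream.print("apple:").setParallelism(1)
val otherStream: DataStream[UserLog] = splitStream.select("other")
// otherStream.print("other:").setParallelism(1)

// Connect the two streams, then print
val connStream: ConnectedStreams[UserLog, UserLog] = appStoreStream.connect(otherStream)
// CoMap: the first function handles the first stream, the second handles the second
val allStream: DataStream[String] = connStream.map(
  (log1: UserLog) => log1.channel,
  (log2: UserLog) => log2.channel
)
allStream.print("copMap::")
```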

output:

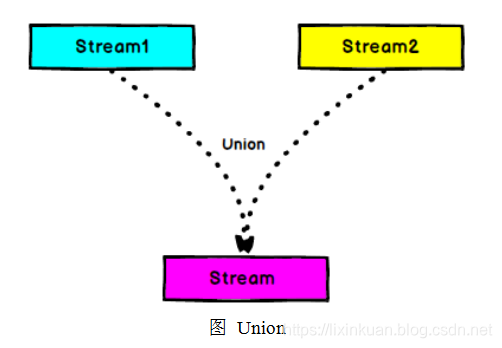

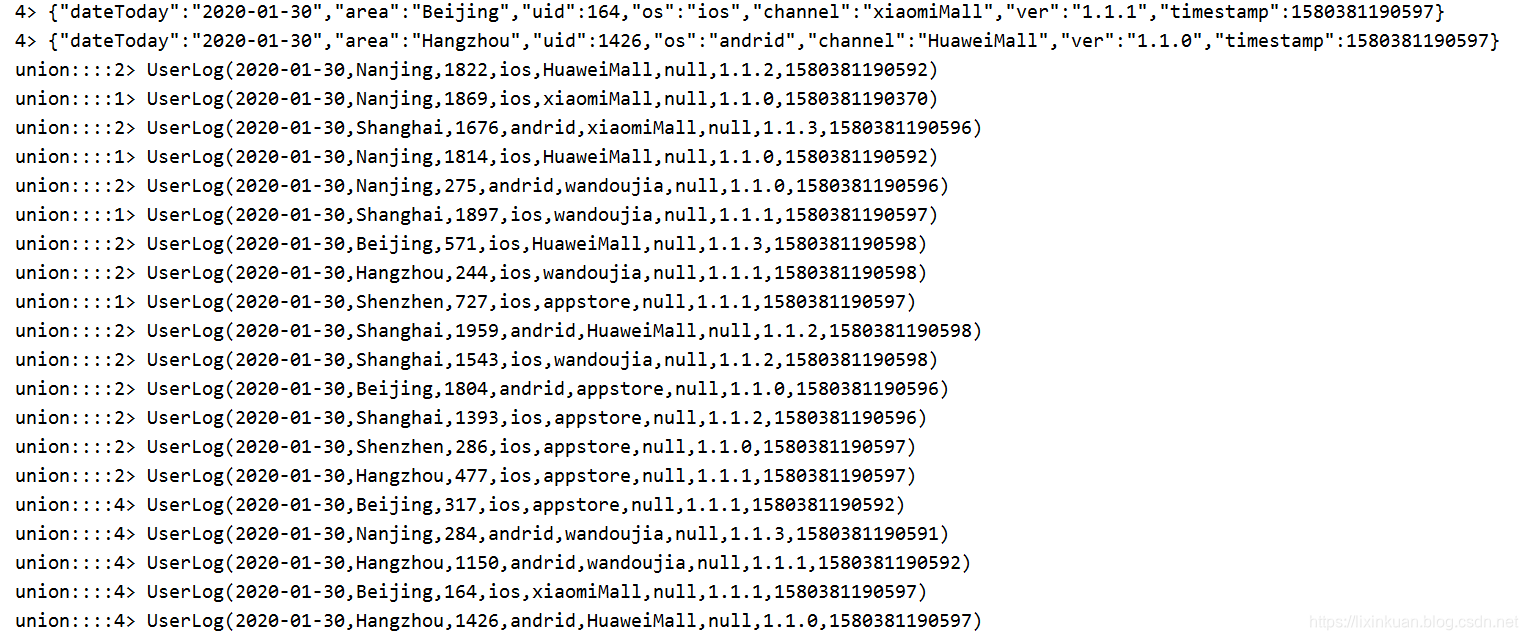

8. Union

DataStream → DataStream: unions two or more DataStreams, producing a new DataStream that contains all elements of all of them. Note: if you union a DataStream with itself, every element appears twice in the resulting DataStream.
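A plain-Scala model of union (no Flink dependency) shows both points from the description: any number of inputs of one shared type, and a self-union that yields every element twice. Flink does not define an ordering across the union's inputs, so results here are compared as sorted collections rather than by position. `UnionModel` is a hypothetical name.

```scala
// Plain-Scala model of union (no Flink dependency): the result contains every
// element of every input, and all inputs must share one element type.
// Flink does not order elements across inputs, so compare results as multisets.
object UnionModel {
  def union[T](first: Seq[T], others: Seq[T]*): Seq[T] =
    first ++ others.flatten
}
```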

```scala
// Continues StartupApp03.main after splitStream is created
val appStoreStream: DataStream[UserLog] = splitStream.select("appstore")
// appStoreStream.print("apple:").setParallelism(1)
val otherStream: DataStream[UserLog] = splitStream.select("other")
// otherStream.print("other:").setParallelism(1)

// (The connect/CoMap code from the previous example is commented out here.)

// Union the two streams, then print
val unionStream: DataStream[UserLog] = appStoreStream.union(otherStream)
unionStream.print("union:::")
```

output:

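Differences between Connect and Union

- Before a union, the streams must all have the same type; connect allows two different types, which can then be mapped to a common type in the subsequent CoMap.

- Connect works on exactly two streams; union can combine more than two.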

Source: CSDN

Author: Jeremy_Lee123

Link: https://blog.csdn.net/lixinkuan328/article/details/104116888