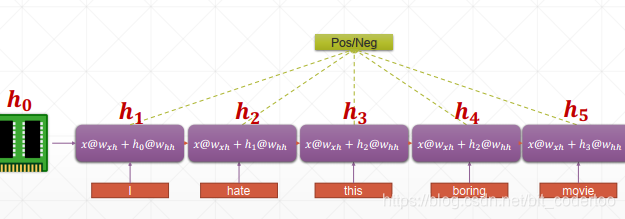

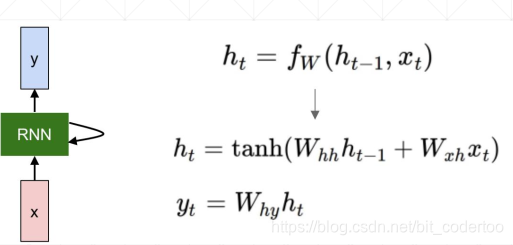

Recurrent Neural Networks

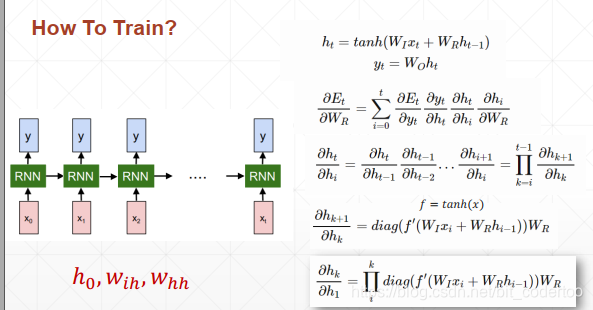

Computing the derivatives

RNN Layer

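Corresponding input shapes:

[10,3,100]: the whole input, [seq, batch, wordvec]

[3,100]: the input at a single time step, a batch of 3 with one word each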

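rnn = nn.RNN(100, 10)  # input_size (word-vector dimension) = 100, hidden_size = 10

rnn.weight_hh_l0.shape  # a single-layer RNN has four parameter tensors: weight_ih_l0, weight_hh_l0, bias_ih_l0, bias_hh_l0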

rnn.bias_ih_l0.shape
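To make the four parameters concrete, here is a minimal sketch that lists every parameter of a single-layer `nn.RNN(100, 10)` together with its shape. The dictionary name `param_shapes` is only an illustrative choice.

```python
import torch
from torch import nn

# Single-layer RNN: 100-dim word vectors in, 10-dim hidden state out.
rnn = nn.RNN(input_size=100, hidden_size=10)

# Collect every registered parameter together with its shape.
param_shapes = {name: tuple(p.shape) for name, p in rnn.named_parameters()}
for name, shape in param_shapes.items():
    print(name, shape)
# weight_ih_l0 (10, 100)  input-to-hidden weight
# weight_hh_l0 (10, 10)   hidden-to-hidden weight
# bias_ih_l0 (10,)
# bias_hh_l0 (10,)
```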

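The constructor takes three main arguments: input_size, hidden_size, and num_layers.

The batch_first argument controls the dimension order: with batch_first=True the input and output are laid out as [batch, seq, feature] instead of [seq, batch, feature].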
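A short sketch of what `batch_first=True` changes, using the same sizes as the rest of these notes. The variable names `rnn_bf`, `x_bf`, `out_bf`, and `h_bf` are illustrative. Note that only the input and `out` switch layout; the hidden state keeps its `[num_layers, batch, h_dim]` shape.

```python
import torch
from torch import nn

rnn_bf = nn.RNN(input_size=100, hidden_size=10, batch_first=True)

x_bf = torch.randn(3, 10, 100)   # [batch, seq, wordvec]
out_bf, h_bf = rnn_bf(x_bf)      # h0 is omitted, so it defaults to zeros

print(out_bf.shape)  # torch.Size([3, 10, 10]) -> [batch, seq, h_dim]
print(h_bf.shape)    # torch.Size([1, 3, 10])  -> [num_layers, batch, h_dim]
```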

out,ht = forward(x,h0)

# Note that x here is [seq, batch, wordvec]: the whole sentence is fed in at once

h0/ht -> [num_layers,batch,h_dim]

out -> [seq,batch,h_dim]; it holds the last layer's output at every time step

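import torch

from torch import nn

rnn = nn.RNN(100, 10)  # input_size (word-vector dimension) = 100, hidden_size = 10

x = torch.randn(10, 3, 100)  # [seq, batch, wordvec]

out, h = rnn(x, torch.zeros(1, 3, 10))  # h0: [num_layers, batch, h_dim]; h_dim must equal hidden_size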
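As a quick check of the shapes described above, the following sketch runs a single-layer RNN with a hidden size of 10 and an `h0` of matching size. It also confirms that, for a single-layer unidirectional RNN, the last time step of `out` is the same tensor as the final hidden state. The names `out_shape`, `ht_shape`, and `last_matches` are illustrative.

```python
import torch
from torch import nn

rnn = nn.RNN(100, 10)
x = torch.randn(10, 3, 100)    # [seq, batch, wordvec]
h0 = torch.zeros(1, 3, 10)     # [num_layers, batch, h_dim]

out, ht = rnn(x, h0)
out_shape = tuple(out.shape)   # (10, 3, 10): one output per time step
ht_shape = tuple(ht.shape)     # (1, 3, 10): final hidden state only

# With one layer and one direction, out[-1] equals the final hidden state.
last_matches = torch.allclose(out[-1], ht[0])
print(out_shape, ht_shape, last_matches)
```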

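Multi-layer RNN

Note that in the upper layers the input-to-hidden weight has shape [hidden, hidden], because each upper layer takes the hidden state of the layer below as its input.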
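The shape change can be seen directly by inspecting a two-layer RNN. This is a minimal sketch; the hidden size of 20 and the names `rnn2` and `shapes` are assumptions chosen for illustration.

```python
import torch
from torch import nn

rnn2 = nn.RNN(input_size=100, hidden_size=20, num_layers=2)
shapes = {name: tuple(p.shape) for name, p in rnn2.named_parameters()}

print(shapes["weight_ih_l0"])  # (20, 100): layer 0 reads the word vectors
print(shapes["weight_ih_l1"])  # (20, 20):  layer 1 reads layer 0's hidden state

x = torch.randn(10, 3, 100)
out, h = rnn2(x)               # h0 defaults to zeros
print(out.shape)               # torch.Size([10, 3, 20]): outputs of the top layer
print(h.shape)                 # torch.Size([2, 3, 20]):  final state of each layer
```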

nn.RNNCell

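More flexible: the time steps are fed in manually, one call per step.

Initialization is similar to nn.RNN: it takes input_size and hidden_size. Unlike nn.RNN, nn.RNNCell has no num_layers argument; multiple layers are stacked by hand.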

ht = rnncell(xt,ht_1)

# xt:[b,wordvec]

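# ht_1/ht: [b, h_dim] (a cell is a single layer, so there is no num_layers dimension)

# out = torch.stack([h1, h2, ..., ht]), the collection of hidden states from every step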

cell1 = nn.RNNCell(100,20)

h1 = torch.zeros(3,20)

for xt in x:

    h1 = cell1(xt, h1)
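The loop above keeps only the latest hidden state. The sketch below also collects every step into `out` with `torch.stack`, then checks the result against `nn.RNN` after copying the cell's weights into it. The weight copying is only a verification device assumed for this example, not part of normal usage.

```python
import torch
from torch import nn

torch.manual_seed(0)
x = torch.randn(10, 3, 100)          # [seq, batch, wordvec]

cell = nn.RNNCell(100, 20)
h = torch.zeros(3, 20)               # [batch, h_dim]
steps = []
for xt in x:                         # xt: [batch, wordvec]
    h = cell(xt, h)
    steps.append(h)
out = torch.stack(steps)             # [seq, batch, h_dim]

# Reference: a one-layer nn.RNN that shares the cell's parameters.
rnn = nn.RNN(100, 20)
with torch.no_grad():
    rnn.weight_ih_l0.copy_(cell.weight_ih)
    rnn.weight_hh_l0.copy_(cell.weight_hh)
    rnn.bias_ih_l0.copy_(cell.bias_ih)
    rnn.bias_hh_l0.copy_(cell.bias_hh)
out_ref, h_ref = rnn(x, torch.zeros(1, 3, 20))

same = torch.allclose(out, out_ref, atol=1e-5)
print(out.shape, same)
```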

Two layers with different hidden sizes

cell1 = nn.RNNCell(100,30)

cell2 = nn.RNNCell(30,20)

h1 = torch.zeros(3,30)

h2 = torch.zeros(3,20)

for xt in x:

    h1 = cell1(xt, h1)

    h2 = cell2(h1, h2)

Exploding gradients

Apply clipping to w.grad

loss = criterion(output,y)

model.zero_grad()

loss.backward()

for p in model.parameters():

    print(p.grad.norm())  # check whether the gradient is exploding

torch.nn.utils.clip_grad_norm_(model.parameters(), 10)  # clip the total gradient norm to at most 10

optimizer.step()
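The fragment above assumes that `model`, `criterion`, `optimizer`, `output`, and `y` already exist. The following self-contained sketch fills them in with a hypothetical `TinyRNN` model, random data, an MSE loss, and SGD, all of which are assumptions made only so the clipping step can be run end to end.

```python
import torch
from torch import nn

torch.manual_seed(0)

class TinyRNN(nn.Module):
    """Hypothetical model: one RNN layer followed by a linear head."""
    def __init__(self):
        super().__init__()
        self.rnn = nn.RNN(100, 20)
        self.fc = nn.Linear(20, 1)

    def forward(self, x):
        out, ht = self.rnn(x)      # ht: [1, batch, 20]
        return self.fc(ht[-1])     # predict from the final hidden state

model = TinyRNN()
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

x = torch.randn(10, 3, 100)        # [seq, batch, wordvec]
y = torch.randn(3, 1)

output = model(x)
loss = criterion(output, y)
model.zero_grad()
loss.backward()

# clip_grad_norm_ rescales all gradients in place and returns the
# total norm measured before clipping.
total_before = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=10)
total_after = torch.sqrt(sum(p.grad.norm() ** 2 for p in model.parameters()))
print(total_before.item(), total_after.item())

optimizer.step()
```

Passing `model.parameters()` clips the norm of all gradients taken together, so their relative directions are preserved; clipping each parameter separately would rescale them independently.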

Vanishing gradients

Source: CSDN

Author: bit_codertoo

Link: https://blog.csdn.net/bit_codertoo/article/details/103653652