Getting started

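First, use Selenium to log in to Zhihu and capture the session cookies. Then send a request to the Zhihu homepage with those cookies attached, crawl every Q&A page linked from the homepage's hot list (change start_urls to target a different page), and insert the extracted fields into MySQL asynchronously.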

Crawling the Zhihu hot list

Use Selenium to obtain the cookies after logging in, and save them as JSON in zhihuCookies.json:

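def loginZhihu(self):
    loginurl = 'https://www.zhihu.com/signin'
    # Start the Chrome webdriver, used to open the login page
    driver = webdriver.Chrome()
    driver.get(loginurl)
    # Pause for 10 s before the QR code is scanned
    time.sleep(10)
    input("Click and scan the QR code on the page, confirm the login on your phone, then return to the editor and press Enter: ")
    # Grab the cookies of the logged-in session
    cookies = driver.get_cookies()
    # quit() ends the whole browser session; close() would only close the current window
    driver.quit()
    # Save the cookies so later runs can read them from the file instead of logging in every time.
    # Alternatively, return them and run the login method first on every run.
    # Save them as a local JSON file
    jsonCookies = json.dumps(cookies)
    with open('zhihuCookies.json', 'w') as f:
        f.write(jsonCookies)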
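Saving the cookies is a plain JSON round-trip of the list that `driver.get_cookies()` returns. The sketch below replays that round-trip with the standard library only. The two cookie dicts are made-up sample values merely shaped like Selenium's output (keys `name`, `value`, `domain`, `path`), not real Zhihu cookies, and the file goes into a temporary directory rather than the working directory.

```python
import json
import os
import tempfile

# Hypothetical sample shaped like Selenium's driver.get_cookies() output
sample_cookies = [
    {"name": "example_session", "value": "abc123", "domain": ".zhihu.com", "path": "/"},
    {"name": "example_token", "value": "xyz789", "domain": ".zhihu.com", "path": "/"},
]


def save_cookies(cookies, path):
    """Write the cookie list to a JSON file, as loginZhihu() does."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(cookies, f)


def load_cookies(path):
    """Read the cookie list back, as start_requests() does."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "zhihuCookies.json")
    save_cookies(sample_cookies, path)
    restored = load_cookies(path)

print(restored == sample_cookies)  # True
```

Because JSON preserves lists, dicts and strings exactly, the reloaded list is identical to what Selenium handed over.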

The spider's imports, class attributes and request-header fields:

import scrapy
import datetime
from urllib import parse
import re
import time
from scrapy.loader import ItemLoader
from PictureSpider.items import ZhihuAnswerItem, ZhihuQuestionItem
import json
from selenium import webdriver


class ZhihuSpider(scrapy.Spider):
    name = 'zhihu'
    allowed_domains = ['www.zhihu.com']
    start_urls = ['https://www.zhihu.com/hot']

    # URL template for a question's answers API: {0} = question id, {1} = limit, {2} = offset
    start_answer_url = "https://www.zhihu.com/api/v4/questions/{0}/answers?include=data%5B%2A%5D.is_normal%2Cadmin_closed_comment%2Creward_info%2Cis_collapsed%2Cannotation_action%2Cannotation_detail%2Ccollapse_reason%2Cis_sticky%2Ccollapsed_by%2Csuggest_edit%2Ccomment_count%2Ccan_comment%2Ccontent%2Ceditable_content%2Cvoteup_count%2Creshipment_settings%2Ccomment_permission%2Ccreated_time%2Cupdated_time%2Creview_info%2Crelevant_info%2Cquestion%2Cexcerpt%2Crelationship.is_authorized%2Cis_author%2Cvoting%2Cis_thanked%2Cis_nothelp%2Cis_labeled%2Cis_recognized%2Cpaid_info%2Cpaid_info_content%3Bdata%5B%2A%5D.mark_infos%5B%2A%5D.url%3Bdata%5B%2A%5D.author.follower_count%2Cbadge%5B%2A%5D.topics&limit={1}&offset={2}"

    headers = {
        'Host': 'www.zhihu.com',
        'Referer': 'https://www.zhihu.com/',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36'
    }
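`start_answer_url` is an ordinary `str.format` template: `{0}` is the question id, `{1}` the page size (`limit`) and `{2}` the offset. The sketch below uses a shortened template that keeps only two `include` fields (an abbreviation for readability, not the full field list above) and decodes the filled-in URL with `urllib.parse` to show what the server receives. The question id 123456 is a placeholder.

```python
from urllib.parse import parse_qs, urlparse

# Shortened, hypothetical version of start_answer_url: same placeholders,
# far fewer include fields
ANSWER_URL = ("https://www.zhihu.com/api/v4/questions/{0}/answers"
              "?include=data%5B%2A%5D.content%2Cvoteup_count&limit={1}&offset={2}")

url = ANSWER_URL.format(123456, 20, 0)  # question id, limit, offset
query = parse_qs(urlparse(url).query)   # percent-escapes are decoded here

print(urlparse(url).path)               # /api/v4/questions/123456/answers
print(query["include"])                 # ['data[*].content,voteup_count']
print(query["limit"], query["offset"])  # ['20'] ['0']
```

The `%5B%2A%5D` and `%2C` sequences in the original template are simply the escaped forms of `[*]` and `,`.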

Read the name and value fields of the locally saved cookies and attach them to the request for the Zhihu homepage:

def start_requests(self):
    # The first run must log in to Zhihu so the cookies get saved;
    # after that you can comment this call out (logging in on every run gets tedious)
    self.loginZhihu()
    # Read the cookies saved by loginZhihu()
    with open('zhihuCookies.json', 'r', encoding='utf-8') as f:
        listcookies = json.loads(f.read())
    # Build the cookies as a dict
    cookies_dict = dict()
    for cookie in listcookies:
        cookies_dict[cookie['name']] = cookie['value']
    # Tip: only name and value are needed; other keys such as domain can be ignored
    # Request the Zhihu homepage with the cookies attached (cookies=cookies_dict)
    yield scrapy.Request(url=self.start_urls[0], cookies=cookies_dict, headers=self.headers, dont_filter=True)  # no callback, so parse() is called by default
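Building `cookies_dict` only needs each cookie's `name` and `value`. Saved cookies do eventually expire, however, and then the homepage request arrives logged out. The sketch below adds one optional check, assuming the WebDriver convention that a cookie dict may carry an `expiry` key in seconds since the epoch. The helper name `cookies_to_dict` and the sample data are hypothetical.

```python
import time


def cookies_to_dict(cookie_list, now=None):
    """Map name -> value, skipping cookies whose 'expiry' (epoch seconds) has passed.

    Hypothetical helper: cookies without an 'expiry' key (session cookies) are kept.
    """
    now = time.time() if now is None else now
    return {
        c["name"]: c["value"]
        for c in cookie_list
        if c.get("expiry") is None or c["expiry"] > now
    }


sample = [
    {"name": "fresh", "value": "1", "expiry": 2_000_000_000},
    {"name": "stale", "value": "2", "expiry": 1_000_000_000},
    {"name": "session", "value": "3"},
]
print(cookies_to_dict(sample, now=1_600_000_000))  # {'fresh': '1', 'session': '3'}
```

If the resulting dict comes back noticeably smaller than the saved list, re-running `loginZhihu()` is the likely fix, which is cheaper than logging in on every run.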

Crawl the Zhihu homepage and use a regex to filter out the question URLs:

# Crawl the Zhihu homepage: the goal is to pick out every URL that points to a Zhihu question
def parse(self, response):
    # Collect every link on the page (here, the hot list)
    all_urls = response.css("a::attr(href)").extract()
    # Most hrefs lack the domain, so join each one with the page URL to get a full URL
    # (inside this method, "parse" still refers to the module-level urllib.parse)
    all_urls = [parse.urljoin(response.url, url) for url in all_urls]
    # Keep only URLs that start with https
    all_urls = [url for url in all_urls if url.startswith("https")]
    # Question URLs have the form /question/xxxxxxxxx; pick those out
    for url in all_urls:
        match_obj = re.match(r"(.*zhihu.com/question/(\d+))(/|$).*", url)
        if match_obj:
            requests_url = match_obj.group(1)
            # A question URL: request it and let parse_question() parse it further
            yield scrapy.Request(requests_url, headers=self.headers, callback=self.parse_question)
        else:
            # Not a question page: follow it with parse() as well, so the crawl keeps
            # discovering question links (allowed_domains keeps it on www.zhihu.com)
            yield scrapy.Request(url, headers=self.headers, callback=self.parse)
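The filtering in `parse()` (join relative links, keep https, match `/question/<id>`) can be tried without Scrapy. The sketch below runs the same steps on a hypothetical list of hrefs; `question_urls` is an illustrative helper name, and the dot in `zhihu\.com` is escaped here so it only matches a literal dot.

```python
import re
from urllib.parse import urljoin

QUESTION_RE = re.compile(r"(.*zhihu\.com/question/(\d+))(/|$).*")


def question_urls(page_url, hrefs):
    """Return (question_url, question_id) pairs found among a page's hrefs."""
    found = []
    for href in hrefs:
        url = urljoin(page_url, href)  # make relative links absolute
        if not url.startswith("https"):
            continue
        match = QUESTION_RE.match(url)
        if match:
            # group(1) drops any /answer/... suffix; group(2) is the numeric id
            found.append((match.group(1), int(match.group(2))))
    return found


# Hypothetical hrefs as they might appear on the hot-list page
hrefs = [
    "/question/123456",
    "https://www.zhihu.com/question/654321/answer/111",
    "/hot",
    "javascript:void(0)",
]
print(question_urls("https://www.zhihu.com/hot", hrefs))
```

Both question links collapse to their bare `/question/<id>` form, so an answer link and the question it belongs to lead to the same request and Scrapy's duplicate filter drops the repeat.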

Crawl each question page found on the homepage:

def parse_question(self, response):
    # Parse a question page and build its question item
    match_obj = re.match(r"(.*zhihu.com/question/(\d+))(/|$).*", response.url)
    if match_obj:
        question_id = int(match_obj.group(2))
        # Sleep 1 s to lower the risk of an IP ban (note: this blocks Scrapy's whole
        # event loop; the DOWNLOAD_DELAY setting is the non-blocking way to throttle)
        time.sleep(1)
        if "QuestionHeader-title" in response.text:
            item_loader = ItemLoader(item=ZhihuQuestionItem(), response=response)
            item_loader.add_css("title", "h1.QuestionHeader-title::text")
            item_loader.add_css("content", ".QuestionHeader-detail")
            item_loader.add_value("url", response.url)
            item_loader.add_value("zhihu_id", question_id)
            # .NumberBoard-itemValue matches two numbers (followers, then views),
            # so click_num and watch_user_num both receive a two-element list
            item_loader.add_css("click_num", ".NumberBoard-itemValue::text")
            item_loader.add_css("answer_num", ".List-headerText span::text")
            item_loader.add_css("comments_num", ".QuestionHeader-Comment button::text")
            item_loader.add_css("watch_user_num", ".NumberBoard-itemValue::text")
            item_loader.add_css("topics", ".QuestionHeader-topics .Popover div::text")
            question_item = item_loader.load_item()
            # Request the first page of answers: limit=20, offset=0
            yield scrapy.Request(self.start_answer_url.format(question_id, 20, 0), headers=self.headers, callback=self.parse_answer)
            yield question_item

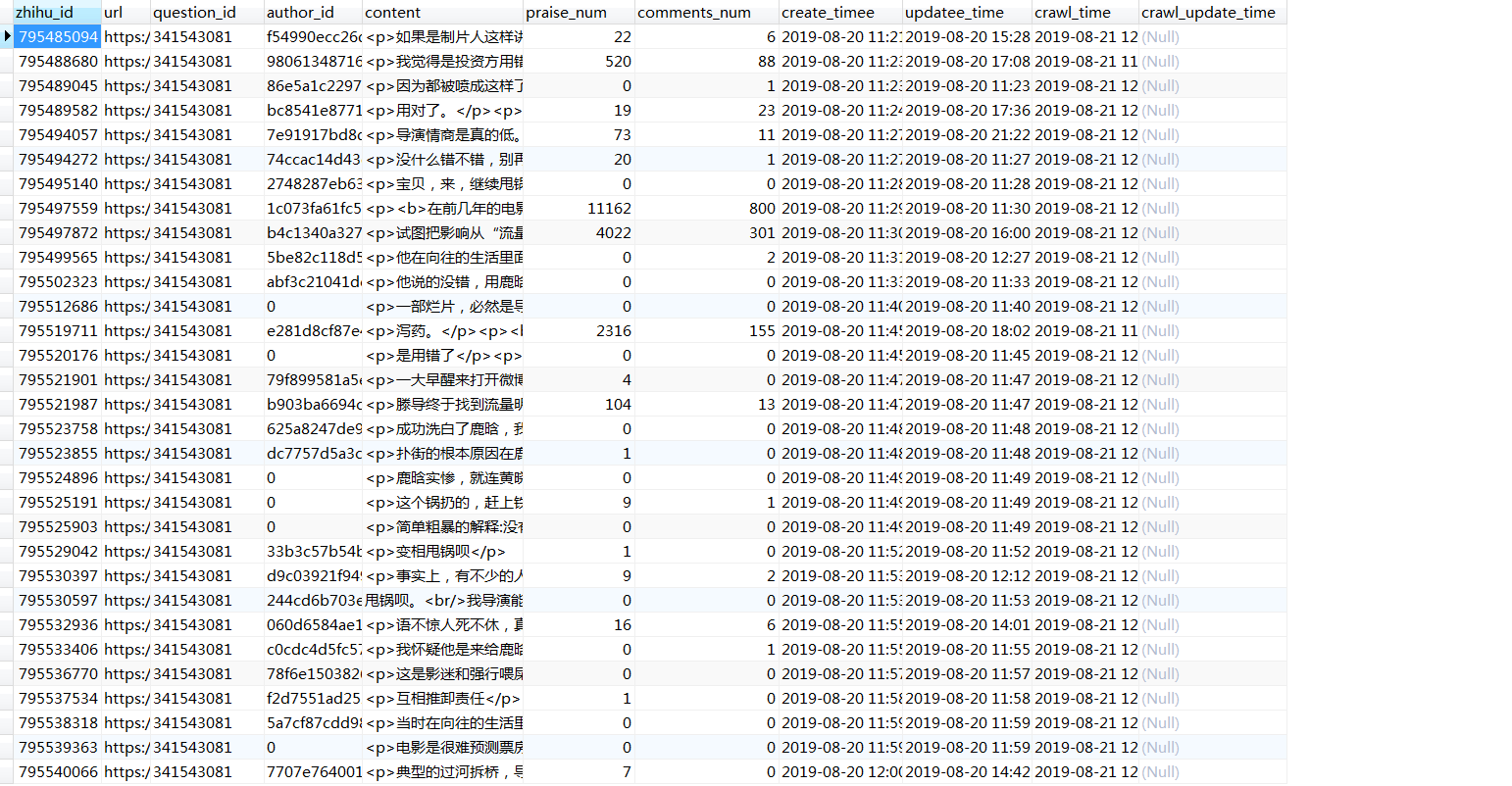
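The question page shows two numbers under the same `.NumberBoard-itemValue` selector, so `watch_user_num` and `click_num` both receive a two-element list, and `get_insert_mysql()` reads `click_num` at index `[1]`. The sketch below shows that split on a hypothetical list, assuming (as the `[1]` index implies) that the first value is the follower count and the second the view count; `to_int` and `split_number_board` are illustrative names.

```python
def to_int(text):
    """Turn a Zhihu-style number such as '1,234' into an int."""
    return int(text.replace(",", ""))


def split_number_board(values):
    """Return (watch_user_num, click_num) from the two NumberBoard values."""
    followers, views = values[0], values[1]
    return to_int(followers), to_int(views)


board = ["1,234", "5,678,901"]  # hypothetical [followers, views]
print(split_number_board(board))  # (1234, 5678901)
print("".join(board))             # 1,2345,678,901  (both numbers glued together)
```

The last line shows why each field should take its own element: joining the whole list merges the two counts into one meaningless string.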

Crawl the answers under this question:

# Fetch the users' answers to a question through the API; the response is JSON
def parse_answer(self, response):
    ans_json = json.loads(response.text)
    is_end = ans_json["paging"]["is_end"]
    next_url = ans_json["paging"]["next"]
    # Extract the fields of each answer
    for answer in ans_json["data"]:
        answer_item = ZhihuAnswerItem()
        answer_item["zhihu_id"] = answer["id"]
        answer_item["url"] = answer["url"]
        answer_item["question_id"] = answer["question"]["id"]
        answer_item["author_id"] = answer["author"]["id"] if "id" in answer["author"] else None
        answer_item["content"] = answer["content"] if "content" in answer else None
        answer_item["praise_num"] = answer["voteup_count"]
        answer_item["comments_num"] = answer["comment_count"]
        answer_item["create_timee"] = answer["created_time"]
        answer_item["updatee_time"] = answer["updated_time"]
        answer_item["crawl_time"] = datetime.datetime.now()
        yield answer_item
    if not is_end:  # not the last page yet: request next_url with this same callback
        yield scrapy.Request(next_url, headers=self.headers, callback=self.parse_answer)
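The `is_end`/`next` handling is a pagination loop spread across callbacks: each page yields its answers and, unless it is the last one, schedules the next page with the same callback. The sketch below flattens that into a synchronous loop as a stand-in for Scrapy's request/callback chain. The two pages are hypothetical payloads shaped only like the `paging`/`data` fields `parse_answer()` reads, and `fetch` is a dict lookup in place of the network.

```python
import json

# Two hypothetical API pages, shaped like the fields parse_answer() reads
FAKE_PAGES = {
    "page1": json.dumps({
        "paging": {"is_end": False, "next": "page2"},
        "data": [{"id": 1, "voteup_count": 10, "comment_count": 2}],
    }),
    "page2": json.dumps({
        "paging": {"is_end": True, "next": "page3"},
        "data": [{"id": 2, "voteup_count": 5, "comment_count": 0}],
    }),
}


def crawl_answers(fetch, first_url):
    """Follow paging.next until paging.is_end, collecting (id, votes, comments)."""
    results = []
    url = first_url
    while url is not None:
        page = json.loads(fetch(url))
        for answer in page["data"]:
            results.append((answer["id"], answer["voteup_count"], answer["comment_count"]))
        # Trust is_end rather than the presence of a next link
        url = None if page["paging"]["is_end"] else page["paging"]["next"]
    return results


print(crawl_answers(FAKE_PAGES.__getitem__, "page1"))  # [(1, 10, 2), (2, 5, 0)]
```

In the sample, the last page still carries a `next` value ("page3"), which is never fetched because the loop stops on `is_end`.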

Define the item fields and their SQL statements (items.py):

import scrapy
from PictureSpider.utils.common import extract_num
import datetime
from PictureSpider.settings import MYSQL_DATETIME_FORMAT


class ZhihuQuestionItem(scrapy.Item):
    zhihu_id = scrapy.Field()
    topics = scrapy.Field()
    url = scrapy.Field()
    title = scrapy.Field()
    content = scrapy.Field()
    create_time = scrapy.Field()
    update_time = scrapy.Field()
    answer_num = scrapy.Field()
    comments_num = scrapy.Field()
    watch_user_num = scrapy.Field()
    click_num = scrapy.Field()
    crawl_time = scrapy.Field()
    crawl_update_time = scrapy.Field()

    def get_insert_mysql(self):
        # SQL that inserts a Zhihu question (or updates it if zhihu_id already exists)
        insert_sql = """
            insert into zhihu_question(zhihu_id, topics, url, title, content, answer_num, comments_num, watch_user_num, click_num, crawl_time)
            VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s)
            ON DUPLICATE KEY UPDATE content=VALUES(content), answer_num=VALUES(answer_num), comments_num=VALUES(comments_num),
            watch_user_num=VALUES(watch_user_num), click_num=VALUES(click_num)
        """
        # ItemLoader stores every field as a list
        zhihu_id = self["zhihu_id"][0]
        topics = ",".join(self["topics"])
        url = self["url"][0]
        title = "".join(self["title"])
        content = "".join(self["content"])
        answer_num = extract_num("".join(self["answer_num"]))
        comments_num = extract_num("".join(self["comments_num"]))
        # .NumberBoard-itemValue yields [followers, views]: take one element each
        # instead of joining both numbers into a single string
        watch_user_num = extract_num(self["watch_user_num"][0])
        click_num = extract_num(self["click_num"][1])
        crawl_time = datetime.datetime.now().strftime(MYSQL_DATETIME_FORMAT)
        params = (zhihu_id, topics, url, title, content, answer_num, comments_num, watch_user_num, click_num, crawl_time)
        return insert_sql, params


class ZhihuAnswerItem(scrapy.Item):
    zhihu_id = scrapy.Field()
    url = scrapy.Field()
    question_id = scrapy.Field()
    author_id = scrapy.Field()
    content = scrapy.Field()
    praise_num = scrapy.Field()
    comments_num = scrapy.Field()
    create_timee = scrapy.Field()
    updatee_time = scrapy.Field()
    crawl_time = scrapy.Field()
    crawl_update_time = scrapy.Field()

    def get_insert_mysql(self):
        # SQL that inserts a Zhihu answer (or updates it if zhihu_id already exists)
        insert_sql = """
            insert into zhihu_answer(zhihu_id, url, question_id, author_id, content, praise_num, comments_num,
                                     create_timee, updatee_time, crawl_time)
            VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s)
            ON DUPLICATE KEY UPDATE content=VALUES(content), comments_num=VALUES(comments_num), praise_num=VALUES(praise_num),
            updatee_time=VALUES(updatee_time)
        """
        # The API's created_time/updated_time are epoch timestamps; convert them to MySQL DATETIME strings
        create_timee = datetime.datetime.fromtimestamp(self["create_timee"]).strftime(MYSQL_DATETIME_FORMAT)
        updatee_time = datetime.datetime.fromtimestamp(self["updatee_time"]).strftime(MYSQL_DATETIME_FORMAT)
        params = (
            self["zhihu_id"], self["url"], self["question_id"],
            self["author_id"], self["content"], self["praise_num"],
            self["comments_num"], create_timee, updatee_time,
            self["crawl_time"].strftime(MYSQL_DATETIME_FORMAT),
        )
        return insert_sql, params
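Both `get_insert_mysql()` methods rely on MySQL's `INSERT ... ON DUPLICATE KEY UPDATE`, which only turns a repeat crawl into an update when `zhihu_id` is the table's primary key or carries a UNIQUE index; without one, every crawl adds a new row. The sketch below demonstrates the same upsert idea with Python's built-in `sqlite3` as a stand-in, so it runs without a MySQL server. SQLite spells it `ON CONFLICT(...) DO UPDATE` with `excluded.col` where MySQL writes `VALUES(col)`, and it needs SQLite 3.24 or newer. The three-column table is a simplified, hypothetical version of `zhihu_answer`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# zhihu_id must be a PRIMARY KEY (or UNIQUE) for the upsert to detect duplicates
conn.execute(
    "CREATE TABLE zhihu_answer ("
    "zhihu_id INTEGER PRIMARY KEY, praise_num INTEGER, comments_num INTEGER)"
)

UPSERT = """
INSERT INTO zhihu_answer (zhihu_id, praise_num, comments_num) VALUES (?, ?, ?)
ON CONFLICT(zhihu_id) DO UPDATE SET
    praise_num = excluded.praise_num,
    comments_num = excluded.comments_num
"""

conn.execute(UPSERT, (1, 10, 2))  # first crawl: inserts the row
conn.execute(UPSERT, (1, 15, 3))  # re-crawl of the same answer: updates it in place
rows = conn.execute("SELECT * FROM zhihu_answer").fetchall()
print(rows)  # [(1, 15, 3)]
```

Only the columns listed after `UPDATE` change on a repeat; this mirrors how the original SQL refreshes counts and content while leaving fields such as `question_id` alone.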

A regex helper that extracts numbers from item fields (PictureSpider/utils/common.py, matching the import above):

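import re


def extract_num(text):
    # Return the first number in text as an int, ignoring thousands separators
    # (e.g. "1,234 个回答" -> 1234); return 0 if there is no number
    match_pg = re.search(r"\d[\d,]*", text)
    if match_pg:
        nums = int(match_pg.group(0).replace(',', ''))
    else:
        nums = 0
    return nums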
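The pattern choice matters on short and long numbers. The comparison below runs the post's original pattern `.*?(\d+[,]*\d+).*` next to a `\d[\d,]*` search on a few illustrative strings: the original needs at least two digits, so "5" yields 0, and it stops after the first comma group, so "12,345,678" yields 12345.

```python
import re

OLD = re.compile(r".*?(\d+[,]*\d+).*")  # pattern from the original post
NEW = re.compile(r"\d[\d,]*")           # first run of digits and commas


def old_extract(text):
    m = OLD.match(text)
    return int(m.group(1).replace(",", "")) if m else 0


def new_extract(text):
    m = NEW.search(text)
    return int(m.group(0).replace(",", "")) if m else 0


for sample in ["1,234 个回答", "5 条评论", "12,345,678", "添加评论"]:
    print(sample, old_extract(sample), new_extract(sample))
```

Both agree on the common "1,234" case and on strings with no digits; they differ only on single digits and on numbers with more than one thousands separator.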

Insert the extracted field values into MySQL asynchronously (pipelines.py):

import pymysql
from twisted.enterprise import adbapi

# Register PyMySQL under the module name "MySQLdb" so adbapi can load it
pymysql.install_as_MySQLdb()


class MysqlatwistedPipline(object):
    def __init__(self, dbpool):
        self.dbpool = dbpool

    @classmethod
    def from_settings(cls, settings):
        dbparms = dict(
            host=settings["MYSQL_HOST"],
            db=settings["MYSQL_DBNAME"],
            user=settings["MYSQL_USER"],
            passwd=settings["MYSQL_PASSWORD"],
            charset='utf8mb4',
            cursorclass=pymysql.cursors.DictCursor,
            use_unicode=True,
        )
        dbpool = adbapi.ConnectionPool("MySQLdb", **dbparms)
        return cls(dbpool)

    def process_item(self, item, spider):
        # Use Twisted to run the MySQL insert asynchronously
        query = self.dbpool.runInteraction(self.do_insert, item)
        query.addErrback(self.handle_error, item, spider)
        # Return the item so later pipelines (and Scrapy's log) receive it
        return item

    def handle_error(self, failure, item, spider):
        # Report failed inserts through the spider's logger
        spider.logger.error(failure)

    def do_insert(self, cursor, item):
        # Perform the actual insert: each item type builds its own SQL
        # via get_insert_mysql(), which is then executed against MySQL
        insert_sql, params = item.get_insert_mysql()
        cursor.execute(insert_sql, params)
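`adbapi.ConnectionPool.runInteraction()` runs `do_insert` on a pooled connection in a worker thread and returns a Deferred, so `process_item` can hand the item on before the INSERT has finished; Twisted commits the transaction if `do_insert` returns normally and rolls it back if it raises. The sketch below imitates that shape with `concurrent.futures.ThreadPoolExecutor`, a swapped-in stand-in rather than Twisted, and a list in place of the MySQL table, so it runs without a database. The class name and the missing-key error are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ThreadedInsertPipeline:
    """Hypothetical stand-in for the adbapi pipeline: inserts run on worker threads."""

    def __init__(self, max_workers=3):
        self.pool = ThreadPoolExecutor(max_workers=max_workers)
        self.lock = threading.Lock()
        self.rows = []    # pretend database table
        self.errors = []  # what handle_error would log

    def process_item(self, item, spider=None):
        future = self.pool.submit(self.do_insert, item)
        # Like addErrback: inspect the outcome once the insert has finished
        future.add_done_callback(lambda f: self.handle_error(f, item))
        return item  # return immediately; the insert completes later

    def do_insert(self, item):
        if "zhihu_id" not in item:
            raise ValueError("missing zhihu_id")
        with self.lock:
            self.rows.append(item["zhihu_id"])

    def handle_error(self, future, item):
        exc = future.exception()
        if exc is not None:
            with self.lock:
                self.errors.append((item, str(exc)))

    def close(self):
        self.pool.shutdown(wait=True)  # wait for pending inserts


pipeline = ThreadedInsertPipeline()
returned = [pipeline.process_item({"zhihu_id": i}) for i in range(5)]
pipeline.process_item({"title": "no id"})
pipeline.close()
print(sorted(pipeline.rows), len(pipeline.errors))  # [0, 1, 2, 3, 4] 1
```

The point of the pattern is the immediate `return item`: the crawl keeps going while database work happens elsewhere, and failures surface through the callback instead of stopping the spider.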

Pipeline and database configuration in settings.py:

ITEM_PIPELINES = {
    'PictureSpider.pipelines.MysqlatwistedPipline': 1,
}
COOKIE = {'key1': 'value1', 'key2': 'value2'}
MYSQL_HOST = 'xxxxxx'
MYSQL_DBNAME = 'xxxxxx'
MYSQL_USER = 'xxxxxx'
MYSQL_PASSWORD = 'xxxxxx'
FEED_EXPORT_ENCODING = 'utf-8'
MYSQL_DATETIME_FORMAT = "%Y-%m-%d %H:%M:%S"
MYSQL_DATE_FORMAT = "%Y-%m-%d"
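`MYSQL_DATETIME_FORMAT` is the `strftime` pattern the items use to turn the API's `created_time`/`updated_time` (assumed here to be Unix epoch seconds, which is what `datetime.fromtimestamp()` expects) into strings MySQL accepts for a DATETIME column. A quick check with a hypothetical timestamp:

```python
from datetime import datetime, timezone

MYSQL_DATETIME_FORMAT = "%Y-%m-%d %H:%M:%S"
MYSQL_DATE_FORMAT = "%Y-%m-%d"

created_time = 1565481600  # hypothetical epoch-seconds value

# The item code calls datetime.fromtimestamp(ts), which uses the machine's local
# timezone; passing tz=timezone.utc makes the result independent of that setting.
as_utc = datetime.fromtimestamp(created_time, tz=timezone.utc)
print(as_utc.strftime(MYSQL_DATETIME_FORMAT))  # 2019-08-11 00:00:00
print(as_utc.strftime(MYSQL_DATE_FORMAT))      # 2019-08-11
```

Whether to store local time or UTC is a design choice; what matters is that every crawl uses the same one.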

Run main.py and give it a test:

#__author__ = 'Winston'
#date: 2019/8/11
# main.py
import sys
import os
from scrapy.cmdline import execute

# Put the project directory on sys.path, then run "scrapy crawl zhihu"
sys.path.append(os.path.dirname(os.path.abspath(__file__)))
execute(["scrapy", "crawl", "zhihu"])

Source: CSDN

Author: Winston_R

Link: https://blog.csdn.net/qq_35289736/article/details/103964863