Apache Spark

What is Spark?

Spark is a "lightning-fast unified analytics engine". It is purely a compute engine and does not take part in data persistence.

Fast

(1) Spark is an in-memory compute engine, so it is naturally faster than MapReduce, which computes on disk. This is the common understanding.

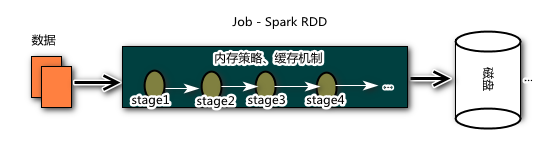

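(2) Spark uses an advanced DAG (directed acyclic graph) execution model that splits a complex task into several stages, so Spark needs only one job to complete a complex task. (With the MapReduce model, several jobs might have to be chained together.)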

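(3) Besides dividing a task into stages through the DAG model, Spark can also cache the data computed by a stage, which greatly improves both computational efficiency and fault tolerance.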
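The caching ability in point (3) can be made concrete with a small sketch. It assumes spark-core 2.4.x is on the classpath and uses a local master; the object name CacheSketch, the method run, and the sample data are hypothetical and not part of the original article. The RDD is cached once and then reused by several actions, so the upstream transformation does not have to be recomputed for each of them.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical example: one cached RDD feeding several actions.
object CacheSketch {
  // Runs three actions over one cached RDD and returns (count, sum, max).
  def run(sc: SparkContext): (Long, Long, Long) = {
    val squares = sc.parallelize(1L to 1000L, 4)
      .map(n => n * n) // transformation: evaluated lazily
      .cache()         // ask Spark to keep the computed partitions in memory

    val count = squares.count()       // first action: computes and caches
    val sum   = squares.reduce(_ + _) // can reuse the cached partitions
    val max   = squares.max()         // can reuse the cached partitions
    (count, sum, max)
  }

  def main(args: Array[String]): Unit = {
    // Assumption: local mode with 2 threads, so no cluster is required.
    val conf = new SparkConf().setMaster("local[2]").setAppName("cache-sketch")
    val sc = new SparkContext(conf)
    try println(run(sc)) finally sc.stop()
  }
}
```

Without the cache() call the three actions would each re-run the map step; with it, Spark may serve later actions from memory and recompute a lost partition from its lineage if needed.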

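Unified: Spark unifies the common kinds of big-data computation: batch processing (replacing MapReduce), stream processing (replacing Storm), unified SQL (replacing Hive), machine learning (replacing Mahout, which was built on MapReduce), and graph computation with GraphX (taking over graph-analysis work earlier done with tools such as Neo4j; note that GraphX is a computation library, not a graph store).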

Spark VS Hadoop

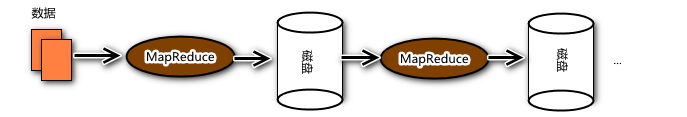

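Spark was born simply to replace MapReduce, the compute engine of early Hadoop. Spark has no storage solution of its own; in a Spark architecture the underlying storage is still Hadoop's HDFS/HBase. Hadoop MapReduce was the first generation of big-data analysis tools. Because it appeared so early, it only aimed to satisfy the basic requirement of big-data computing: being able to process large data sets within a reasonable amount of time. As technology advanced and the internet grew rapidly, people began to demand more of big-data computing, for example using MapReduce to implement recommendation, aggregation, classification, regression and similar algorithms. These algorithms are complex and their computation is involved, so implementing them on the MapReduce model greatly amplifies latency. There are three reasons: (1) the MapReduce computation has only two, overly simple phases, Map -> Reduce; (2) MapReduce cannot cache computed results and must persist them to disk; (3) for complex (iterative) computation, MapReduce therefore becomes, quite literally, disk-based iteration.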

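Spark's design drew on the lessons of MapReduce. Spark was created in 2009 at the AMPLab at UC Berkeley and first open-sourced in 2010 (around the same period MapReduce itself was being reworked, with classic MapReduce evolving into YARN). It entered the Apache Incubator in June 2013 and became an Apache top-level project in February 2014. (Apache Flink, a stream-processing engine that was not well known at the time, also became an Apache top-level project in December 2014.) Because Spark's underlying engine is built on a batch-processing model, it was readily accepted by the many programmers who had suffered under MapReduce, so attention to Spark rose sharply (to the point that people overlooked Flink).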

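As the figure above shows, Spark iterates in memory and uses a DAG (directed acyclic graph) to split a task into several stages, so a program can make much fuller use of the system's computing resources. It also caches data in memory, which supports fault recovery for tasks.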

Why is Spark so popular?

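Beyond that, Spark was promoted with the slogan "One stack to rule them all" (MapReduce, Storm, Hive, Mahout, Neo4j), and the prospect of a one-stop solution excited people. At the same time, Spark's architecture bridges the old and the new: an enterprise can keep its first-generation big-data architecture and simply let Spark take over the compute layer, while storage and resource management are reused. The cost of switching or migrating platforms is therefore low.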

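Spark Architecture

MapReduce computation flow

clientNode: the program, that is, the entry class of the job.

ResourceManager: the resource manager. It manages all NodeManagers and is responsible for launching the MRAppMaster.

NodeManager: reports its own computing resources to the ResourceManager. Each physical host runs one NodeManager, which by default abstracts the host's computing resources into 8 equal shares; one share is called a Container. The NodeManager is also responsible for launching Container processes (MRAppMaster | YarnChild).

MRAppMaster: when a job starts, one Container is allocated first to run the MRAppMaster, which monitors and manages the job's compute processes (YarnChild).

YarnChild: the JVM process that performs the actual computation, mainly running a Map Task or a Reduce Task.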

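Drawbacks:

1) Task division is coarse: Map -> Reduce.

2) Map tasks and reduce tasks run as separate processes, so their startup cost is high.

3) MapReduce spills the intermediate results of the map phase to local disk, so disk I/O on map nodes is heavy.

In MapReduce, compute resources are requested dynamically by the MRAppMaster while the job is running.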

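Spark computation flow

Driver: the user program. It contains the DAGScheduler and the TaskScheduler, which are responsible for splitting a job into stages and for submitting tasks, respectively.

ClusterManager: mainly responsible for managing the compute nodes (Master | ResourceManager) and for allocating resources.

Executor (process): before it starts running, every Spark job requests its compute resources (on YARN it requests Containers, which are JVM processes; in Standalone mode it requests cores, which are threads).

Task: the thread that performs the computation (Spark computes in units of threads).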

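Advantages of Spark's computation

1) Spark uses a DAG to split a task into several stages, which makes complex iterative computation possible.

2) Spark can cache intermediate results in the memory of the compute nodes, which speeds up computation.

3) Spark runs tasks as threads, which allows finer-grained management of compute resources.

Spark obtains its compute resources at the very start of a job, which handles resource contention well (Executor processes isolate the computation of different jobs).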
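The thread-versus-process point can be illustrated without Spark at all. The following is a toy model, not Spark's actual executor code: the running JVM stands in for one Executor process, a fixed thread pool stands in for its cores, and each task runs as a thread inside that process. All names here (ExecutorModel, runTasks) are hypothetical.

```scala
import java.util.concurrent.{Callable, Executors, TimeUnit}

// Toy model (assumption): one JVM = one executor, pool threads = its cores.
object ExecutorModel {
  // Runs one task per partition on a pool of `cores` threads and returns,
  // for each partition, its sum and the name of the thread that computed it.
  def runTasks(partitions: Seq[Seq[Int]], cores: Int): Seq[(Int, String)] = {
    val pool = Executors.newFixedThreadPool(cores)
    try {
      // toVector forces eager submission even if a lazy Seq is passed in.
      val futures = partitions.toVector.map { part =>
        pool.submit(new Callable[(Int, String)] {
          override def call(): (Int, String) =
            (part.sum, Thread.currentThread().getName)
        })
      }
      futures.map(_.get()) // block until every task has finished
    } finally {
      pool.shutdown()
      pool.awaitTermination(10, TimeUnit.SECONDS)
    }
  }

  def main(args: Array[String]): Unit = {
    val partitions = Seq(Seq(1, 2, 3), Seq(4, 5), Seq(6), Seq(7, 8, 9, 10))
    runTasks(partitions, cores = 2).foreach { case (sum, thread) =>
      println(s"partial sum $sum computed on $thread")
    }
  }
}
```

Starting a thread in an existing pool is much cheaper than launching a new JVM per task, which is the contrast with the YarnChild processes described for MapReduce.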

Installing Spark

Standalone

- Map the hostname to the IP address

[root@CentOS ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.221.133 CentOS

- Disable the firewall

[root@CentOS ~]# service iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@CentOS ~]# chkconfig iptables off

- Set up passwordless SSH authentication

[root@CentOS ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

d5:fc:7d:65:12:82:c1:02:d3:c2:46:1c:62:78:02:01 root@CentOS

The key's randomart image is:

+--[ RSA 2048]----+

|Eo .o+=+ ..o. . |

| o...=.o oo . . |

| o . . .. o . o|

| . . +.|

| S . o|

| .|

| |

| |

| |

+-----------------+

[root@CentOS ~]# ssh-copy-id CentOS

The authenticity of host 'centos (192.168.221.133)' can't be established.

RSA key fingerprint is 15:20:f6:5f:b5:0b:53:b1:cf:9b:09:45:53:ab:f1:a2.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'centos,192.168.221.133' (RSA) to the list of known hosts.

root@centos's password:

Now try logging into the machine, with "ssh 'CentOS'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

- Install the JDK and configure environment variables

[root@CentOS ~]# rpm -ivh jdk-8u191-linux-x64.rpm

warning: jdk-8u191-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:jdk1.8 ########################################### [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@CentOS ~]# vi .bashrc

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# jps

4803 Jps

- Configure the Hadoop environment (HDFS alone is sufficient)

[root@CentOS ~]# tar -zxf hadoop-2.9.2.tar.gz -C /usr/

[root@CentOS ~]# vi .bashrc

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

CLASSPATH=.

export HADOOP_HOME

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/core-site.xml

<!-- NameNode access endpoint -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://CentOS:9000</value>

</property>

<!-- base working directory for HDFS -->

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/hadoop-2.9.2/hadoop-${user.name}</value>

</property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/hdfs-site.xml

<!-- block replication factor -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- host that runs the Secondary NameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>CentOS:50090</value>

</property>

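<!-- maximum number of concurrent file operations on the DataNode (legacy property name; Hadoop 2.x calls it dfs.datanode.max.transfer.threads) -->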

<property>

<name>dfs.datanode.max.xcievers</name>

<value>4096</value>

</property>

<!-- number of server handler threads on the DataNode -->

<property>

<name>dfs.datanode.handler.count</name>

<value>6</value>

</property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/slaves

CentOS

[root@CentOS ~]# hdfs namenode -format

[root@CentOS ~]# start-dfs.sh

- Configure Spark

[root@CentOS ~]# tar -zxf spark-2.4.3-bin-without-hadoop.tgz -C /usr/

[root@CentOS ~]# mv /usr/spark-2.4.3-bin-without-hadoop/ /usr/spark-2.4.3

[root@CentOS ~]# cd /usr/spark-2.4.3/

[root@CentOS spark-2.4.3]# mv conf/spark-env.sh.template conf/spark-env.sh

[root@CentOS spark-2.4.3]# mv conf/slaves.template conf/slaves

[root@CentOS spark-2.4.3]# vi conf/spark-env.sh

SPARK_WORKER_INSTANCES=2

SPARK_MASTER_HOST=CentOS

SPARK_MASTER_PORT=7077

SPARK_WORKER_CORES=4

SPARK_WORKER_MEMORY=2g

LD_LIBRARY_PATH=/usr/hadoop-2.9.2/lib/native

SPARK_DIST_CLASSPATH=$(hadoop classpath)

export SPARK_MASTER_HOST

export SPARK_MASTER_PORT

export SPARK_WORKER_CORES

export SPARK_WORKER_MEMORY

export LD_LIBRARY_PATH

export SPARK_DIST_CLASSPATH

export SPARK_WORKER_INSTANCES

[root@CentOS spark-2.4.3]# vi conf/slaves

CentOS

[root@CentOS spark-2.4.3]# ./sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/spark-2.4.3/logs/spark-root-org.apache.spark.deploy.master.Master-1-CentOS.out

CentOS: starting org.apache.spark.deploy.worker.Worker, logging to /usr/spark-2.4.3/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-CentOS.out

[root@CentOS spark-2.4.3]# jps

10752 Jps

7331 DataNode

7572 SecondaryNameNode

7223 NameNode

10680 Worker

10574 Master

Once the installation succeeds, the Spark Master web UI is available at http://ip:8080.

- Test the compute environment

[root@CentOS spark-2.4.3]# ./bin/spark-shell --master spark://CentOS:7077 --deploy-mode client --total-executor-cores 2

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://CentOS:4040

Spark context available as 'sc' (master = spark://CentOS:7077, app id = app-20190812200525-0000).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.3

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_191)

Type in expressions to have them evaluated.

Type :help for more information.

scala> sc.textFile("hdfs:///demo/words/t_words.txt").flatMap(_.split(" ")).map((_,1)).groupBy(_._1).map(t=>(t._1,t._2.size)).sortBy(_._2,true,3).saveAsTextFile("hdfs:///demo/results")
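To see what each step of this one-liner does, the same chain can be mirrored on ordinary Scala collections. This sketch does not use Spark and is only an analogy; the object name, function name, and sample lines are hypothetical. The third argument of the RDD sortBy (the number of partitions) has no counterpart here, and the tie-break by word is added only to make the output order deterministic.

```scala
// Hypothetical, Spark-free mirror of the shell command above.
object WordCountSteps {
  // Mirrors flatMap -> map -> groupBy -> map -> sortBy on in-memory collections.
  def wordCount(lines: Seq[String]): Seq[(String, Int)] =
    lines
      .flatMap(_.split(" "))                            // split each line into words
      .map((_, 1))                                      // pair every word with 1
      .groupBy(_._1)                                    // group the pairs by word
      .map { case (word, pairs) => (word, pairs.size) } // group size = occurrences
      .toSeq
      .sortBy { case (word, count) => (count, word) }   // ascending by count

  def main(args: Array[String]): Unit = {
    val lines = Seq("this is a demo", "good good study", "day day up")
    wordCount(lines).foreach(println)
  }
}
```

On an RDD the groupBy step triggers a shuffle across the cluster, which is why the YARN test later in this article uses reduceByKey for the same result.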

Spark On Yarn

- Map the hostname to the IP address

[root@CentOS ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.221.134 CentOS

- Disable the firewall

[root@CentOS ~]# service iptables stop

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Flushing firewall rules: [ OK ]

iptables: Unloading modules: [ OK ]

[root@CentOS ~]# chkconfig iptables off

- Set up passwordless SSH authentication

[root@CentOS ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

d5:fc:7d:65:12:82:c1:02:d3:c2:46:1c:62:78:02:01 root@CentOS

The key's randomart image is:

+--[ RSA 2048]----+

|Eo .o+=+ ..o. . |

| o...=.o oo . . |

| o . . .. o . o|

| . . +.|

| S . o|

| .|

| |

| |

| |

+-----------------+

[root@CentOS ~]# ssh-copy-id CentOS

The authenticity of host 'centos (192.168.221.134)' can't be established.

RSA key fingerprint is 15:20:f6:5f:b5:0b:53:b1:cf:9b:09:45:53:ab:f1:a2.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'centos,192.168.221.134' (RSA) to the list of known hosts.

root@centos's password:

Now try logging into the machine, with "ssh 'CentOS'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

- Install the JDK and configure environment variables

[root@CentOS ~]# rpm -ivh jdk-8u191-linux-x64.rpm

warning: jdk-8u191-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:jdk1.8 ########################################### [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@CentOS ~]# vi .bashrc

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin

CLASSPATH=.

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# jps

4803 Jps

- Configure the Hadoop environment (HDFS and YARN are sufficient)

[root@CentOS ~]# tar -zxf hadoop-2.9.2.tar.gz -C /usr/

[root@CentOS ~]# vi .bashrc

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

CLASSPATH=.

export HADOOP_HOME

export JAVA_HOME

export PATH

export CLASSPATH

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/core-site.xml

<!-- NameNode access endpoint -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://CentOS:9000</value>

</property>

<!-- base working directory for HDFS -->

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/hadoop-2.9.2/hadoop-${user.name}</value>

</property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/hdfs-site.xml

<!-- block replication factor -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- host that runs the Secondary NameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>CentOS:50090</value>

</property>

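<!-- maximum number of concurrent file operations on the DataNode (legacy property name; Hadoop 2.x calls it dfs.datanode.max.transfer.threads) -->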

<property>

<name>dfs.datanode.max.xcievers</name>

<value>4096</value>

</property>

<!-- number of server handler threads on the DataNode -->

<property>

<name>dfs.datanode.handler.count</name>

<value>6</value>

</property>

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/slaves

CentOS

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/yarn-site.xml

<!-- shuffle auxiliary service, the core of the MapReduce framework -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- host that runs the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>CentOS</value>

</property>

<!-- disable the physical memory check -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<!-- disable the virtual memory check -->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

[root@CentOS ~]# mv /usr/hadoop-2.9.2/etc/hadoop/mapred-site.xml.template /usr/hadoop-2.9.2/etc/hadoop/mapred-site.xml

[root@CentOS ~]# vi /usr/hadoop-2.9.2/etc/hadoop/mapred-site.xml

[root@CentOS ~]# vi .bashrc

HADOOP_HOME=/usr/hadoop-2.9.2

JAVA_HOME=/usr/java/latest

CLASSPATH=.

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export JAVA_HOME

export CLASSPATH

export PATH

export HADOOP_HOME

[root@CentOS ~]# source .bashrc

[root@CentOS ~]# hdfs namenode -format

[root@CentOS ~]# start-dfs.sh

[root@CentOS ~]# start-yarn.sh

[root@CentOS ~]# jps

5477 NameNode

5798 SecondaryNameNode

5591 DataNode

6201 NodeManager

6700 Jps

6094 ResourceManager

- Install and configure Spark

[root@CentOS ~]# tar -zxf spark-2.4.3-bin-without-hadoop.tgz -C /usr/

[root@CentOS ~]# mv /usr/spark-2.4.3-bin-without-hadoop/ /usr/spark-2.4.3

[root@CentOS ~]# cd /usr/spark-2.4.3/

[root@CentOS spark-2.4.3]# mv conf/spark-env.sh.template conf/spark-env.sh

[root@CentOS spark-2.4.3]# mv conf/spark-defaults.conf.template conf/spark-defaults.conf

[root@CentOS spark-2.4.3]# vi conf/spark-env.sh

HADOOP_CONF_DIR=/usr/hadoop-2.9.2/etc/hadoop

YARN_CONF_DIR=/usr/hadoop-2.9.2/etc/hadoop

SPARK_EXECUTOR_CORES=4

SPARK_EXECUTOR_MEMORY=1g

SPARK_DRIVER_MEMORY=1g

LD_LIBRARY_PATH=/usr/hadoop-2.9.2/lib/native

SPARK_DIST_CLASSPATH=$(hadoop classpath)

SPARK_HISTORY_OPTS="-Dspark.history.fs.logDirectory=hdfs:///spark-logs"

export HADOOP_CONF_DIR

export YARN_CONF_DIR

export SPARK_EXECUTOR_CORES

export SPARK_DRIVER_MEMORY

export SPARK_EXECUTOR_MEMORY

export LD_LIBRARY_PATH

export SPARK_DIST_CLASSPATH

# enable the history server (optional)

export SPARK_HISTORY_OPTS

[root@CentOS spark-2.4.3]# vi /usr/spark-2.4.3/conf/spark-defaults.conf

# enable event logging for the Spark history server

spark.eventLog.enabled=true

spark.eventLog.dir=hdfs:///spark-logs

[root@CentOS ~]# hdfs dfs -mkdir /spark-logs

[root@CentOS spark-2.4.3]# ./sbin/start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /usr/spark-2.4.3/logs/spark-root-org.apache.spark.deploy.history.HistoryServer-1-CentOS.out

[root@CentOS spark-2.4.3]# jps

10099 HistoryServer

5477 NameNode

5798 SecondaryNameNode

5591 DataNode

10151 Jps

6201 NodeManager

6094 ResourceManager

- Test program

[root@CentOS spark-2.4.3]# ./bin/spark-shell --master yarn --deploy-mode client --num-executors 2 --executor-cores 3

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

19/08/13 00:20:09 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

Spark context Web UI available at http://CentOS:4040

Spark context available as 'sc' (master = yarn, app id = application_1565625901385_0001).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.3

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_191)

Type in expressions to have them evaluated.

Type :help for more information.

scala> sc.textFile("hdfs:///demo/words/t_words.txt").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).sortBy(_._2,false,3).saveAsTextFile("hdfs:///demo/results")

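--master specifies the resource manager to use.

--deploy-mode determines whether resources must additionally be requested for the Driver (cluster: the Driver runs inside the cluster; client: it runs in the submitting process).

--num-executors is the number of executor processes to request from YARN.

--executor-cores is the maximum parallelism of each executor process.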
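These command-line flags correspond to Spark configuration properties, so the same settings could, as one option, be expressed in code. The sketch below assumes spark-core 2.4.x on the classpath; the object name YarnConfSketch and the application name are hypothetical. It only builds and prints a configuration and does not contact a cluster. The deploy mode is left out because it is normally chosen at submit time.

```scala
import org.apache.spark.SparkConf

// Hypothetical example: flag-to-property mapping for the shell command above.
object YarnConfSketch {
  // Builds a configuration equivalent to:
  //   --master yarn --num-executors 2 --executor-cores 3
  def build(): SparkConf =
    new SparkConf(false)                    // false: do not load spark.* system properties
      .setMaster("yarn")                    // --master
      .setAppName("yarn-conf-sketch")       // assumed name, for illustration only
      .set("spark.executor.instances", "2") // --num-executors
      .set("spark.executor.cores", "3")     // --executor-cores

  def main(args: Array[String]): Unit =
    println(build().toDebugString)          // prints one key=value pair per line
}
```

Values set explicitly on a SparkConf take precedence over flags passed to spark-submit, so hard-coding them trades flexibility for reproducibility.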

Developing and deploying a Spark program

- Add the Maven dependency

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>2.4.3</version>

</dependency>

- Add the Maven build plugin

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>4.0.1</version>

<executions>

<execution>

<id>scala-compile-first</id>

<phase>process-resources</phase>

<goals>

<goal>add-source</goal>

<goal>compile</goal>

</goals>

</execution>

</executions>

</plugin>

- Add Scala support

- Write the Spark Driver code

package com.baizhi.demo01 // matches the --class value passed to spark-submit

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object SparkRDDWordCount {
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext
    val sparkConf = new SparkConf()
      .setMaster("spark://CentOS:7077")
      .setAppName("wordcount")
    val sc = new SparkContext(sparkConf)

    // 2. Create the parallel collection: the data set is abstracted as a Spark
    //    RDD (think of it as an upgraded Scala collection)
    val linesRDD: RDD[String] = sc.textFile("hdfs:///demo/words/t_words.txt")

    // 3. Count the words, sort by count (descending) and save the result
    linesRDD.flatMap(line => line.split(" "))
      .map((_, 1))
      .groupBy(item => item._1)
      .map(item => (item._1, item._2.map(pair => pair._2).reduce(_ + _)))
      .sortBy(t => t._2, false, 1)
      .saveAsTextFile("hdfs:///demo/results01")

    // 4. Stop the SparkContext
    sc.stop()
  }
}

- Run

Package the program with mvn package, upload the resulting jar to the remote cluster, and run the following command:

[root@CentOS spark-2.4.3]# ./bin/spark-submit --master spark://CentOS:7077 --deploy-mode cluster --class com.baizhi.demo01.SparkRDDWordCount --driver-cores 2 --total-executor-cores 6 /root/spark-rdd-1.0-SNAPSHOT.jar

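The above is how it is done in a production environment: through spark-submit. Spark also supports local testing, so operators can be tested without setting up a Spark environment.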

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object SparkRDDWordCount02 {
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext (local mode with 6 threads)
    val sparkConf = new SparkConf()
      .setMaster("local[6]")
      .setAppName("wordcount")
    val sc = new SparkContext(sparkConf)

    // 2. Create the parallel collection: the data set is abstracted as a Spark
    //    RDD (think of it as an upgraded Scala collection)
    val linesRDD: RDD[String] = sc.textFile("file:///D:/demo/words/t_words.txt")

    // 3. Count the words, sort by count (descending) and save the result
    linesRDD.flatMap(line => line.split(" "))
      .map((_, 1))
      .groupBy(item => item._1)
      .map(item => (item._1, item._2.map(pair => pair._2).reduce(_ + _)))
      .sortBy(t => t._2, false, 1)
      .saveAsTextFile("file:///D:/demo/results01")

    // 4. Stop the SparkContext
    sc.stop()
  }
}
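Local mode also makes it possible to check an operator chain against in-memory data, with no input or output files at all. The following is a minimal sketch, assuming spark-core 2.4.x on the classpath; the object name LocalOperatorCheck, the helper wordCount, and the sample lines are hypothetical. It uses reduceByKey, as in the YARN shell test earlier, rather than groupBy.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical example: testing operators locally on in-memory data.
object LocalOperatorCheck {
  // Word count expressed as a reusable function over an RDD of lines.
  def wordCount(lines: RDD[String]): RDD[(String, Int)] =
    lines.flatMap(_.split(" "))
      .map((_, 1))
      .reduceByKey(_ + _) // sums the counts for each word
      .sortBy(_._2, ascending = false, numPartitions = 1)

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local[2]").setAppName("local-check")
    val sc = new SparkContext(conf)
    try {
      // parallelize turns a local collection into an RDD; collect brings it back.
      val lines = sc.parallelize(Seq("a b a", "b a c"))
      val result = wordCount(lines).collect().toList
      assert(result == List(("a", 3), ("b", 2), ("c", 1)))
      println(result)
    } finally sc.stop()
  }
}
```

Keeping the operator chain in a function that takes and returns an RDD lets the same code run unchanged under spark-submit, where only the input and output paths differ.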

Source: CSDN

Author: 明月清风,良宵美酒

Link: https://blog.csdn.net/qq_40822132/article/details/103747416