Changing a topic's default replica count and migrating its partitions (using __consumer_offsets as the example):

About the __consumer_offsets topic

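Compared with disk and memory, Kafka places relatively light demands on compute, although CPU still affects overall performance to some degree. The most CPU-intensive work comes from compression: clients compress messages to save network bandwidth and disk space, and the broker has to decompress each batch, assign offsets, recompress the batch, and then write it to disk.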

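Because ZooKeeper is not suited to frequent, high-volume writes, Kafka versions after 0.10 store the offsets committed by every consumer in every consumer group in an internal Kafka topic, __consumer_offsets. Kafka creates this topic automatically with 50 partitions by default, but in this case all of them are stored on a single Kafka server (PS: the author runs several Kafka clusters and has observed that on some of them the __consumer_offsets partitions are evenly distributed, while on others they all sit on one broker).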

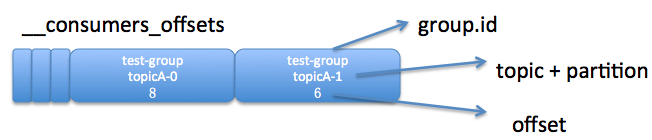

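The messages in __consumer_offsets record the offset that each consumer group committed at a given moment. The format is roughly as follows:

In addition, describing the topic shows its configuration: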

[root@test-kafka-01 kafka]# source /etc/profile && ${PWD}/bin/kafka-topics.sh --describe --zookeeper 192.168.1.1:2181 --topic __consumer_offsets |awk 'NR==1{print $0}'

Topic:__consumer_offsets PartitionCount:50 ReplicationFactor:1 Configs:segment.bytes=104857600,cleanup.policy=compact,compression.type=producer

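The __consumer_offsets topic is configured with the compact cleanup policy, so it always retains the latest offset for each key. This both bounds the total log size of the topic and keeps the most recent committed offsets available.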
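To make the effect of the compact policy concrete, here is a minimal Python sketch. It assumes each offset commit is keyed by (group, topic, partition); the function name `compact` and the sample records are hypothetical, and the code only models the idea of compaction, not Kafka's actual log cleaner.

```python
from typing import Dict, List, Tuple

# Hypothetical model of an offset commit:
# key = (group, topic, partition), value = committed offset.
Key = Tuple[str, str, int]
Record = Tuple[Key, int]


def compact(log: List[Record]) -> List[Record]:
    """Keep only the most recent record per key, preserving log order."""
    latest_index: Dict[Key, int] = {}
    for i, (key, _offset) in enumerate(log):
        latest_index[key] = i  # a later record for the same key wins
    return [rec for i, rec in enumerate(log) if latest_index[rec[0]] == i]


log = [
    (("group-a", "orders", 0), 10),
    (("group-a", "orders", 1), 7),
    (("group-a", "orders", 0), 25),  # supersedes the first record
    (("group-b", "orders", 0), 3),
]
compacted = compact(log)
```

After compaction only one record per key remains, which is why the topic's size tracks the number of (group, topic, partition) combinations rather than the number of commits.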

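Common problems with __consumer_offsets:

1. When __consumer_offsets has a replication factor of 1, as in the output above, the Kafka cluster has a single point of failure.

2. If all 50 partitions of __consumer_offsets sit on a single broker, every consumer commits and fetches its offsets through that broker, which in turn overloads it.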
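Why the placement of these partitions matters can be sketched as follows. The sketch assumes the broker picks the __consumer_offsets partition for a group as the absolute value of the group ID's Java `hashCode()` modulo the partition count, with the leader of that partition handling the group's offset commits; treat that formula as an assumption to check against your Kafka version. The helper names and group IDs are hypothetical.

```python
def java_string_hashcode(s: str) -> int:
    """Reproduce Java's String.hashCode() as a signed 32-bit integer."""
    h = 0
    # Java hashes UTF-16 code units, so encode and walk two bytes at a time.
    data = s.encode("utf-16-be")
    for i in range(0, len(data), 2):
        unit = (data[i] << 8) | data[i + 1]
        h = (31 * h + unit) & 0xFFFFFFFF
    return h - 0x100000000 if h >= 0x80000000 else h


def offsets_partition_for(group_id: str, num_partitions: int = 50) -> int:
    """Assumed mapping: abs(hashCode) % partition count (MIN_VALUE maps to 0)."""
    h = java_string_hashcode(group_id)
    h = 0 if h == -0x80000000 else abs(h)
    return h % num_partitions


if __name__ == "__main__":
    # Hypothetical group IDs; each maps to one of the 50 partitions.
    for group in ("billing", "search", "audit"):
        print(group, offsets_partition_for(group))
```

Under this assumption, different groups spread over different partitions, but if every partition leader is the same broker, all of that traffic still lands on one machine.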

Solutions:

- Before the cluster is initialized:

Set the relevant configuration parameter:

[root@test-kafka-01 kafka]# cat ${PWD}/config/server.properties

...

# A replication factor of 2 or 3 is reasonable for __consumer_offsets

offsets.topic.replication.factor=2

...

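- While the cluster is serving traffic:

You can use the ${KAFKA_HOME}/bin/kafka-reassign-partitions.sh script to reassign the replicas of the __consumer_offsets partitions online:

The author's problem arose while upgrading an older Kafka cluster: the JDK environment had not been loaded beforehand, so some Kafka nodes failed to start properly and every __consumer_offsets partition was assigned to a single broker. That broker became overloaded, so the topic's partitions had to be spread evenly across all brokers to share the load.

# Write a JSON file, then use it to obtain the current replica assignment of the __consumer_offsets topic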

[root@test-kafka-01 kafka]# cat ${PWD}/kafka_add_replicas.json

{"topics":

[{"topic":"__consumer_offsets"}],

"version": 1

}

[root@test-kafka-01 kafka]# ${PWD}/bin/kafka-reassign-partitions.sh --zookeeper 192.168.1.1:2181,192.168.1.2:2181,192.168.1.3:2181,192.168.1.4:2181,192.168.1.5:2181 --topics-to-move-json-file ${PWD}/kafka_add_replicas.json --broker-list "1,2,3,4,5" --generate

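# The output shows that the __consumer_offsets topic has a single replica per partition and that the partitions are assigned to the fourth Kafka node, the one with broker ID 4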

Current partition replica assignment

{"version":1,"partitions":[{"topic":"__consumer_offsets","partition":22,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":30,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":8,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":21,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":4,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":27,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":7,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":9,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":46,"replicas":[4],"log_dirs":["any"]},

...}

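# The partition-to-broker plan proposed by the tool. The assignment is balanced, so if you do not need to account for the actual load on each node you can use it as-is; the author does so here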

Proposed partition reassignment configuration

{"version":1,"partitions":[{"topic":"__consumer_offsets","partition":49,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":38,"replicas":[3],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":16,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":27,"replicas":[2],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":8,"replicas":[3],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":19,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":13,"replicas":[3],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":2,"replicas":[2],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":46,"replicas":[1],"log_dirs":["any"]},

...}
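Before adopting a proposal, it can help to count how many replicas and preferred leaders each broker would receive. Here is a minimal Python sketch, assuming the proposed JSON is available in full; the inline string below holds only the nine entries visible in the truncated output above (with the `log_dirs` field omitted), and `load_per_broker` is a hypothetical helper name.

```python
import json
from collections import Counter
from typing import Dict, Tuple


def load_per_broker(assignment: Dict) -> Tuple[Counter, Counter]:
    """Return (replica count, preferred-leader count) keyed by broker ID."""
    replicas: Counter = Counter()
    leaders: Counter = Counter()
    for p in assignment["partitions"]:
        replicas.update(p["replicas"])
        leaders[p["replicas"][0]] += 1  # first replica is the preferred leader
    return replicas, leaders


# Only the entries shown in the truncated output; a real file lists all 50.
proposed = json.loads("""
{"version":1,"partitions":[
 {"topic":"__consumer_offsets","partition":49,"replicas":[4]},
 {"topic":"__consumer_offsets","partition":38,"replicas":[3]},
 {"topic":"__consumer_offsets","partition":16,"replicas":[1]},
 {"topic":"__consumer_offsets","partition":27,"replicas":[2]},
 {"topic":"__consumer_offsets","partition":8,"replicas":[3]},
 {"topic":"__consumer_offsets","partition":19,"replicas":[4]},
 {"topic":"__consumer_offsets","partition":13,"replicas":[3]},
 {"topic":"__consumer_offsets","partition":2,"replicas":[2]},
 {"topic":"__consumer_offsets","partition":46,"replicas":[1]}
]}
""")
replicas, leaders = load_per_broker(proposed)
```

Running the same function over the full "Current" and "Proposed" documents gives a quick before-and-after view of how evenly the partitions are spread.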

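# You can also set a partition's replica count through the "replicas" field, e.g. "replicas":[3,2,1]; the first broker ID in the list is the partition's preferred leader replica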

[root@test-kafka-01 kafka]# cat ${PWD}/topic-reassignment.json

{"version":1,"partitions":[{"topic":"__consumer_offsets","partition":49,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":38,"replicas":[3],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":16,"replicas":[1],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":27,"replicas":[2],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":8,"replicas":[3],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":19,"replicas":[4],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":13,"replicas":[3],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":2,"replicas":[2],"log_dirs":["any"]},{"topic":"__consumer_offsets","partition":46,"replicas":[1],"log_dirs":["any"]},

...}

# Strip the unneeded log_dirs entries so that log directories are not reassigned

[root@test-kafka-01 kafka]# sed -i 's/,"log_dirs":\["any"\]//g' ${PWD}/topic-reassignment.json
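If the goal is also to raise the replica count, the reassignment file can be generated rather than edited by hand. Here is a minimal Python sketch, assuming simple round-robin placement over broker IDs 1 to 5 and the 50 partitions seen in this cluster; `build_assignment` is a hypothetical helper, and the placement ignores racks and the current load on each broker.

```python
import json
from typing import Dict, List


def build_assignment(topic: str, num_partitions: int,
                     brokers: List[int], replication_factor: int) -> Dict:
    """Build a reassignment document with round-robin replica placement."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor exceeds the number of brokers")
    partitions = []
    for p in range(num_partitions):
        # Shift the starting broker per partition so leaders rotate evenly.
        replicas = [brokers[(p + r) % len(brokers)]
                    for r in range(replication_factor)]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


assignment = build_assignment("__consumer_offsets", 50, [1, 2, 3, 4, 5], 3)
# Write this text to the file passed to --reassignment-json-file.
text = json.dumps(assignment)
```

With 50 partitions and 5 brokers, this placement gives each broker 10 preferred leaders and 30 replicas in total.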

Start the migration:

[root@test-kafka-01 kafka]# ${PWD}/bin/kafka-reassign-partitions.sh --zookeeper 192.168.1.1:2181,192.168.1.2:2181,192.168.1.3:2181,192.168.1.4:2181,192.168.1.5:2181 --reassignment-json-file ${PWD}/topic-reassignment.json --execute

Check the reassignment progress:

[root@test-kafka-01 kafka]# ${PWD}/bin/kafka-reassign-partitions.sh --zookeeper 192.168.1.1:2181,192.168.1.2:2181,192.168.1.3:2181,192.168.1.4:2181,192.168.1.5:2181 --reassignment-json-file ${PWD}/topic-reassignment.json --verify

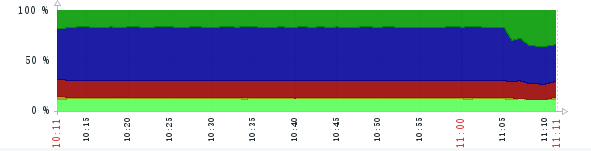

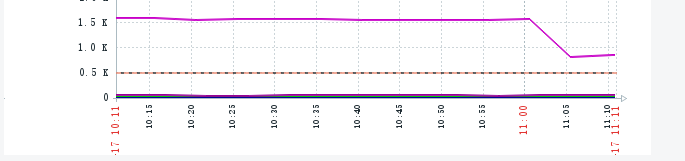

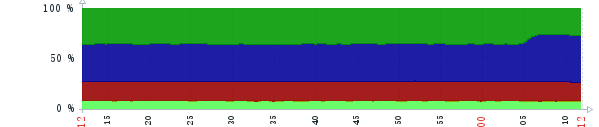

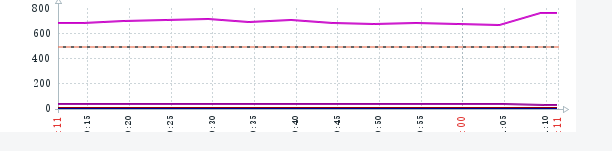

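Comparison of CPU usage and connection counts on the affected brokers before and after reassigning the __consumer_offsets partitions:

The node that previously held all of the __consumer_offsets partitions:

A node that newly received __consumer_offsets partitions: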

Source: CSDN

Author: 扛枪不遇鸟

Link: https://blog.csdn.net/weixin_43855694/article/details/103490849