Saving to a JSON file

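    import codecs
    import json


    class JsonEncodingPipeline(object):
        def __init__(self):
            self.file = codecs.open("job_info.json", 'w', encoding='utf-8')
            self.file.write('[')
            # No separator is needed before the first item
            self.first_item = True

        def process_item(self, item, spider):
            """
            ensure_ascii=False writes Chinese and other non-ASCII text as-is
            instead of as ASCII escape sequences
            """
            line = json.dumps(dict(item), ensure_ascii=False)
            # Put the comma before every item except the first,
            # so the closing ']' never follows a trailing comma
            self.file.write(line if self.first_item else ',\n' + line)
            self.first_item = False
            return item

        def close_spider(self, spider):
            """
            Called by Scrapy when the spider closes: end the array and close the file
            """
            self.file.write(']')
            self.file.close()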
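
When items are streamed into a JSON array by hand, where the separator goes decides whether the file parses: a comma after the last item makes the array invalid JSON. The following is a minimal stdlib-only sketch of that idea, independent of Scrapy. The helper name `write_json_array` and the sample items are hypothetical and used only for illustration.

```python
import io
import json


def write_json_array(items, fp):
    """Stream dict-like items into fp as one valid JSON array."""
    fp.write('[')
    for index, item in enumerate(items):
        if index:  # the separator goes before every item except the first
            fp.write(',\n')
        fp.write(json.dumps(dict(item), ensure_ascii=False))
    fp.write(']')


# Hypothetical sample items, written to an in-memory buffer instead of a file
buffer = io.StringIO()
write_json_array([{"job_name": "爬虫工程师"}, {"job_name": "Backend"}], buffer)

# json.loads would raise an error if a trailing comma were present
parsed = json.loads(buffer.getvalue())
```

Reading the output back with `json.loads` is a cheap way to confirm that a hand-written exporter produces a file other tools can load.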

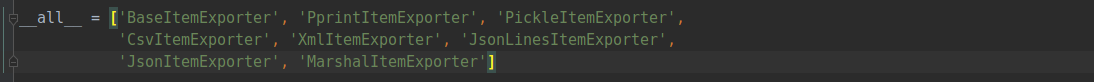

Using Scrapy's built-in exporter

    from scrapy.exporters import JsonItemExporter


    class JsonExporterPipeline(object):
        def __init__(self):
            # The exporter writes bytes, so the file is opened in binary mode
            self.file = open('job_info1.json', 'wb')
            self.exporter = JsonItemExporter(self.file, encoding='utf-8', ensure_ascii=False)
            self.exporter.start_exporting()

        def process_item(self, item, spider):
            self.exporter.export_item(item)
            return item

        def close_spider(self, spider):
            # Finish the JSON array before closing the file
            self.exporter.finish_exporting()
            self.file.close()

Storing to MySQL

Asynchronous inserts built on the Twisted framework

    import MySQLdb
    import MySQLdb.cursors
    from twisted.enterprise import adbapi


    class MysqlTwistedPipeline(object):
        def __init__(self, dbpool):
            self.dbpool = dbpool

        @classmethod
        def from_settings(cls, settings):
            dbparms = dict(
                host=settings["MYSQL_HOST"],
                db=settings["MYSQL_DBNAME"],
                user=settings["MYSQL_USER"],
                passwd=settings["MYSQL_PASSWORD"],
                charset='utf8',
                cursorclass=MySQLdb.cursors.DictCursor,
                use_unicode=True,
            )
            # Twisted's asynchronous connection pool, connecting through the MySQLdb module
            dbpool = adbapi.ConnectionPool("MySQLdb", **dbparms)
            return cls(dbpool)

        def process_item(self, item, spider):
            # Let Twisted run the MySQL insert asynchronously
            query = self.dbpool.runInteraction(self.do_insert, item)
            # Handle exceptions
            query.addErrback(self.handle_error, item, spider)
            # Pass the item on to any later pipeline
            return item

        def handle_error(self, failure, item, spider):
            # Print the failure raised by the asynchronous insert
            print(failure)

        def do_insert(self, cursor, item):
            insert_sql = """
                insert into job_info(
                    company_name,
                    company_addr,
                    company_info,
                    job_name,
                    job_addr,
                    job_info,
                    salary_min,
                    salary_max,
                    url,
                    time) VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)
            """
            # Values are passed separately so the driver escapes them
            cursor.execute(insert_sql, (
                item['company_name'],
                item['company_addr'],
                item['company_info'],
                item['job_name'],
                item['job_addr'],
                item['job_info'],
                item['salary_min'],
                item['salary_max'],
                item['url'],
                item['time'],
            ))
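
The insert statement lists ten columns and ten `%s` placeholders by hand, and the two must stay in step with the parameter tuple. One possible way to keep them aligned is to derive all three from a single list of field names. The sketch below assumes the same `job_info` table and columns as in the pipeline. The names `JOB_FIELDS`, `build_insert` and `item_params` are hypothetical helpers, not part of Scrapy or MySQLdb, and the table and column names are assumed to come from trusted code because they are interpolated directly into the SQL text.

```python
# Column names taken from the insert statement in the pipeline
JOB_FIELDS = (
    "company_name", "company_addr", "company_info",
    "job_name", "job_addr", "job_info",
    "salary_min", "salary_max", "url", "time",
)


def build_insert(table, fields):
    """Return an INSERT statement with one %s placeholder per column."""
    columns = ", ".join(fields)
    placeholders = ", ".join(["%s"] * len(fields))
    return "INSERT INTO {} ({}) VALUES ({})".format(table, columns, placeholders)


def item_params(item, fields):
    """Collect the item's values in the same order as the columns."""
    return tuple(item[name] for name in fields)


insert_sql = build_insert("job_info", JOB_FIELDS)
```

Inside `do_insert`, the call would then read `cursor.execute(insert_sql, item_params(item, JOB_FIELDS))`, and the values themselves are still passed as parameters rather than formatted into the string.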

Configure in settings.py

    MYSQL_HOST = 'localhost'
    MYSQL_DBNAME = 'crawler'
    MYSQL_USER = 'root'
    MYSQL_PASSWORD = 'xxxxx'
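
Scrapy only runs a pipeline that is registered in `ITEM_PIPELINES`, so the MySQL settings alone are not enough. A minimal sketch of that registration follows. The package name `myproject` and the order values are assumptions, since the original post does not show them.

```python
# settings.py (sketch): "myproject" is a placeholder for the real project package
ITEM_PIPELINES = {
    "myproject.pipelines.JsonEncodingPipeline": 300,
    "myproject.pipelines.JsonExporterPipeline": 301,
    "myproject.pipelines.MysqlTwistedPipeline": 302,
}
```

Pipelines with lower numbers run first, and the values are conventionally kept in the 0 to 1000 range. Any pipeline that is not needed can simply be left out of the dictionary.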

Source: CSDN

Author: 、moddemod

Link: https://blog.csdn.net/weixin_43833642/article/details/103538447