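- The article "Why MobileNet and Its Variants (e.g. ShuffleNet) Are Fast" gives an intuitive analysis of how the computational cost changes across different convolution types, viewed in terms of the connectivity between inputs and outputs.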

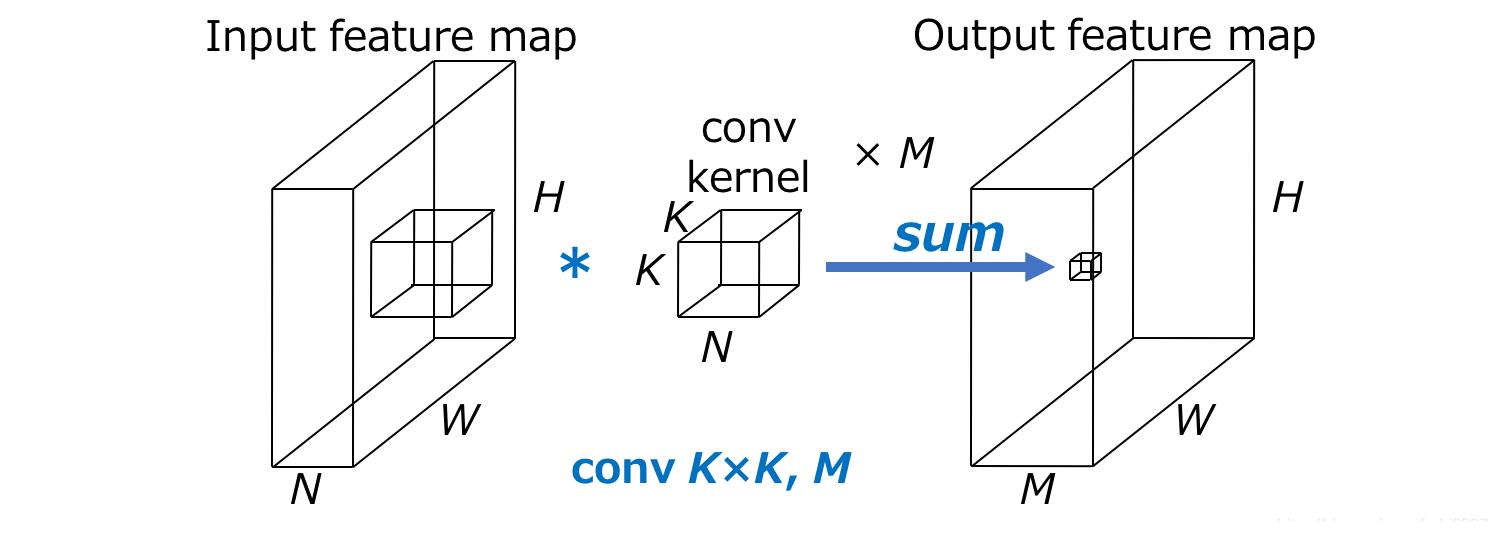

Basic convolution modules

- Standard convolution

The computational cost of a standard convolution is HWNK²M, which can be decomposed into three factors:

(1) the spatial size of the input feature map, H×W;

(2) the size of the convolution kernel, K²;

(3) the numbers of input and output channels, N×M.
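To make the three factors concrete, here is a minimal Python sketch that counts multiply-accumulate operations (MACs) for a standard convolution. It assumes stride 1 and "same" padding, so the output map is also H×W, and it ignores biases; the function name `standard_conv_macs` is our own, not from the article.

```python
def standard_conv_macs(h, w, k, n, m):
    """Multiply-accumulate count of a K x K standard convolution.

    Assumes stride 1 and "same" padding, so the output map is also H x W.
    h, w: spatial size of the input feature map
    k:    kernel size (k * k taps per channel)
    n, m: numbers of input and output channels
    """
    spatial = h * w    # (1) every output position ...
    kernel = k * k     # (2) ... looks at K^2 taps ...
    channels = n * m   # (3) ... for every (input, output) channel pair
    return spatial * kernel * channels


# A 3x3 convolution on a 56x56 map with 64 input and 128 output channels.
print(standard_conv_macs(56, 56, 3, 64, 128))  # 231211008
```

Because the three factors multiply, shrinking any one of them shrinks the total cost proportionally, which is the lever the convolution variants in this post pull on.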

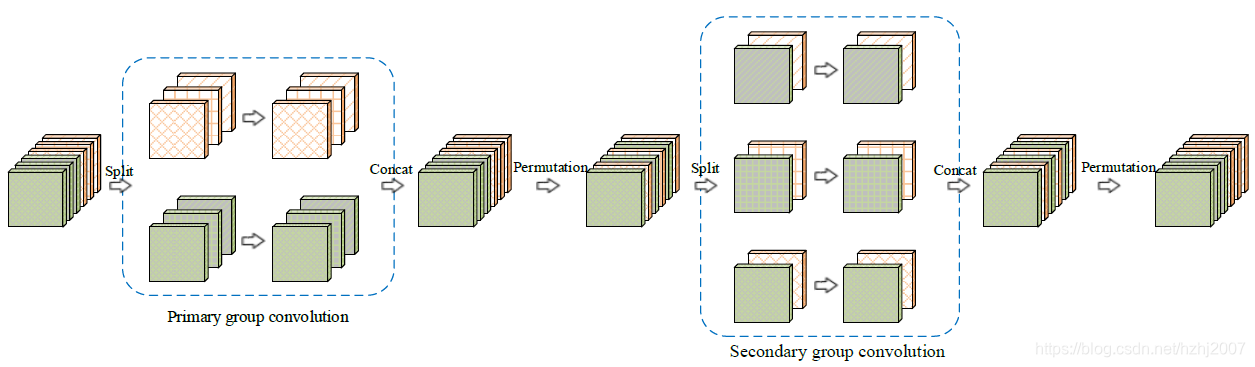

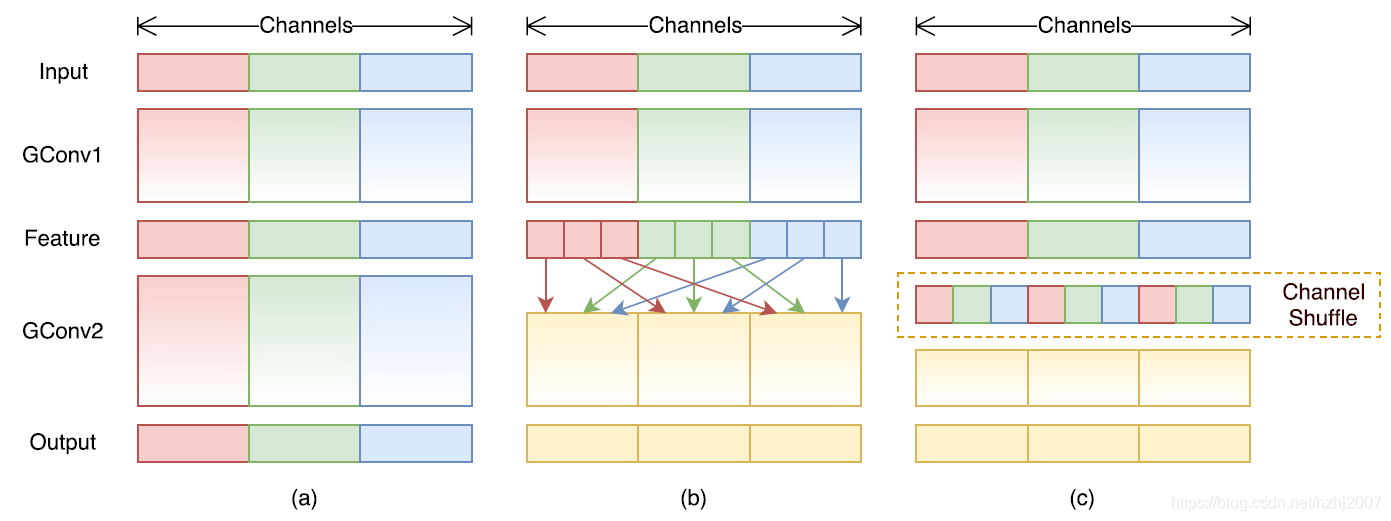

- Group convolution


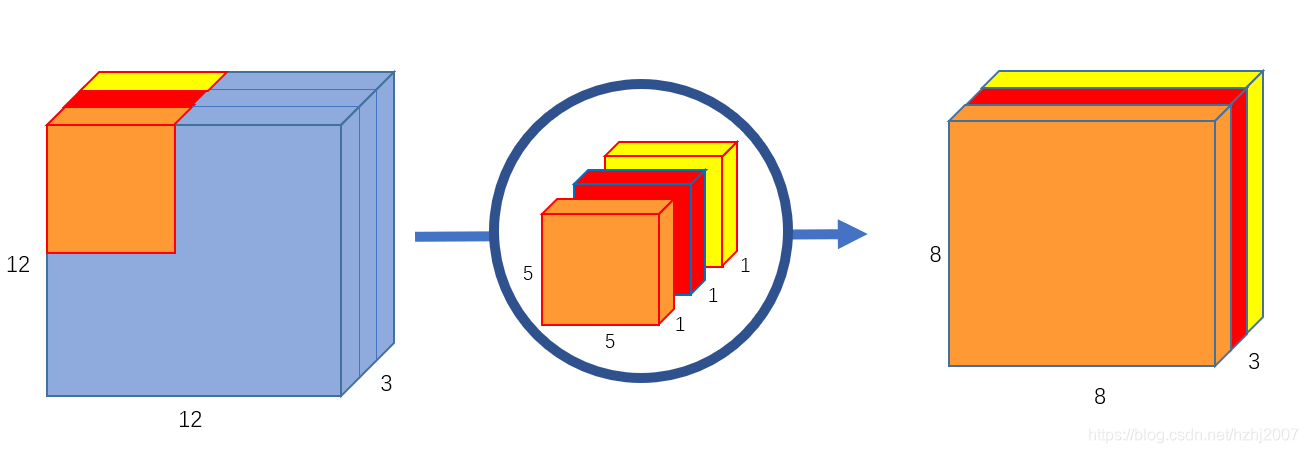

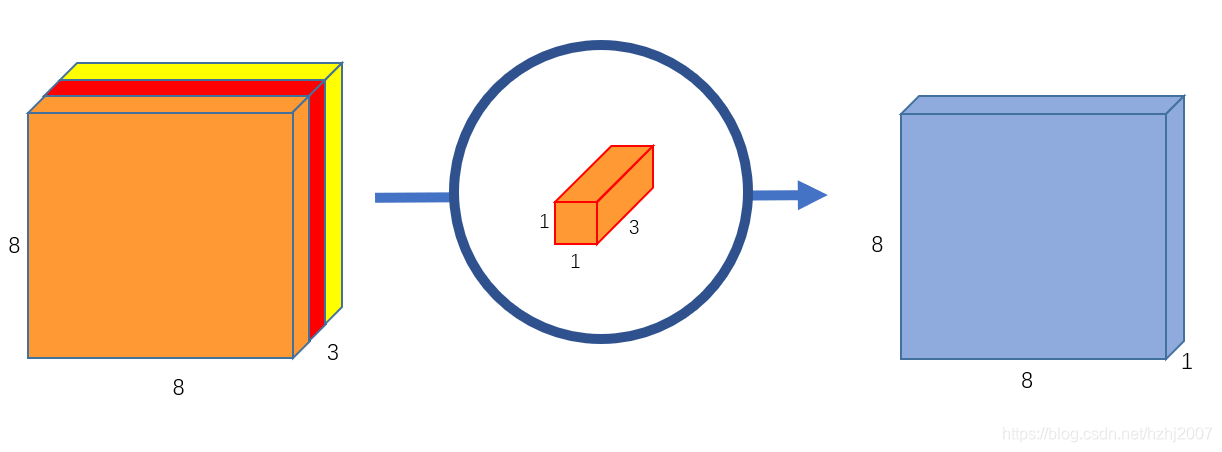

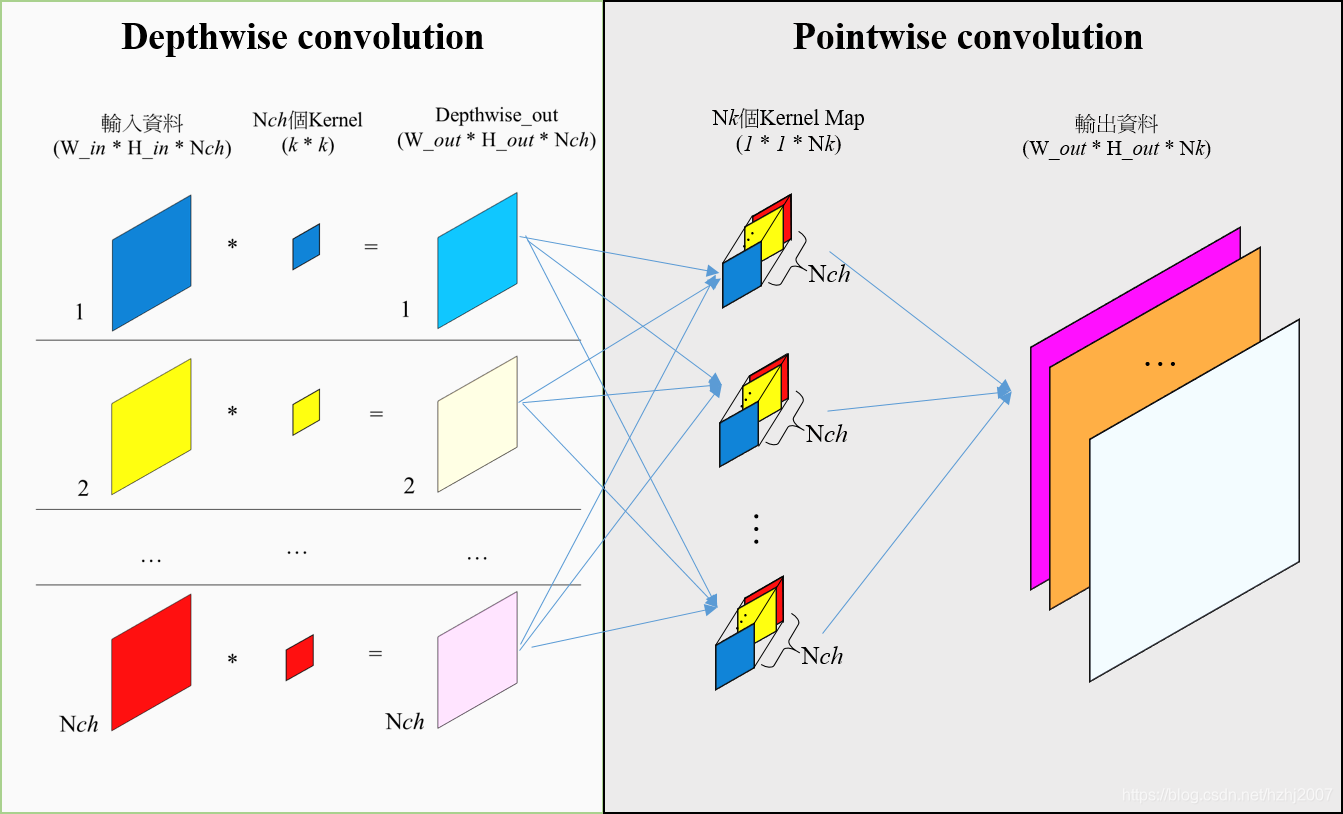
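Group convolution splits the N input and M output channels into G groups, and each output channel connects only to the input channels of its own group. This divides the channel factor N×M, and hence the whole cost, by G. A minimal sketch, assuming stride 1, "same" padding and no bias; `group_conv_macs` is a hypothetical helper name:

```python
def group_conv_macs(h, w, k, n, m, g):
    """MACs of a K x K group convolution with g groups.

    Assumes stride 1 and "same" padding. Each of the g groups is a small
    standard convolution from n/g input channels to m/g output channels.
    """
    if n % g or m % g:
        raise ValueError("n and m must both be divisible by g")
    per_group = h * w * k * k * (n // g) * (m // g)
    return g * per_group  # equals H*W*N*K^2*M / g


standard = group_conv_macs(56, 56, 3, 64, 128, g=1)  # g = 1 is a standard convolution
grouped = group_conv_macs(56, 56, 3, 64, 128, g=4)
print(standard // grouped)  # 4
```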

- Depthwise convolution

- Pointwise convolution

- Depthwise separable convolution

- Channel shuffle
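The cost formulas behind these modules follow from the group-convolution view. A depthwise convolution is a group convolution with G = N = M (cost HWNK²), a pointwise convolution is a 1×1 convolution (cost HWNM), and a depthwise separable convolution chains the two (cost HWN(K² + M)), so relative to a standard convolution it costs 1/M + 1/K². Channel shuffle involves no arithmetic; it only permutes channels so that the next group convolution receives inputs from every group. The Python sketch below illustrates both, assuming stride 1, "same" padding and no bias; all function names are hypothetical, and the shuffle operates on a plain list of channel indices rather than a real tensor.

```python
def depthwise_macs(h, w, k, n):
    # One K x K filter per input channel: a group convolution with G = N = M.
    return h * w * n * k * k


def pointwise_macs(h, w, n, m):
    # A 1 x 1 convolution: it mixes channels but has no spatial extent.
    return h * w * n * m


def separable_macs(h, w, k, n, m):
    # Depthwise K x K followed by pointwise 1 x 1: H*W*N*(K^2 + M) in total.
    return depthwise_macs(h, w, k, n) + pointwise_macs(h, w, n, m)


def channel_shuffle(channels, g):
    """Reorder channels as in ShuffleNet's channel shuffle.

    View the C channels as a (g, C/g) grid (one row per group), transpose it
    to (C/g, g) and flatten it, so neighbouring channels come from different groups.
    """
    c = len(channels)
    if c % g:
        raise ValueError("number of channels must be divisible by g")
    per_group = c // g
    # Read the grid column by column: element j of group i, for every group i.
    return [channels[i * per_group + j] for j in range(per_group) for i in range(g)]


# Standard 3x3 convolution on a 56x56 map, 64 -> 128 channels: HWNK^2M.
standard = 56 * 56 * 64 * 3 * 3 * 128
print(separable_macs(56, 56, 3, 64, 128) / standard)  # about 0.119, i.e. 1/M + 1/K^2
print(channel_shuffle(list(range(6)), g=2))           # [0, 3, 1, 4, 2, 5]
```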

Variants of the convolution operation

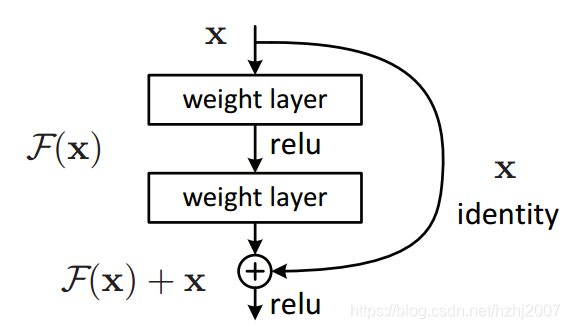

- ResNet

The article "Residual blocks — Building blocks of ResNet" describes skip connections in some detail and explains where the name "residual network" comes from.

Consider a neural network block whose input is x and whose target is the true underlying mapping H(x). Denote the difference (the residual) between the output and the input as

R(x) = Output - Input = H(x) - x

Rearranging it, we get,

H(x) = R(x) + x

Overall, the residual block is trying to learn the true output H(x). If you look closely at the image above, however, you will see that because the identity connection carries x directly to the output, the stacked layers actually only need to learn the residual R(x). To summarize: the layers in a traditional network learn the true output H(x), whereas the layers in a residual network learn the residual R(x). Hence the name: Residual Block.
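The relation H(x) = R(x) + x can be sketched in a few lines of Python. This is a minimal illustration, not a trainable layer: the input is a plain list of floats, and `residual_fn` is a hypothetical stand-in for the stacked layers that learn R(x).

```python
def residual_block(x, residual_fn):
    """Sketch of a residual block: output H(x) = R(x) + x.

    x:           input as a list of numbers (a flattened feature vector)
    residual_fn: stand-in for the stacked layers; it models R(x) = H(x) - x
                 and must return a list with the same length as x
    """
    r = residual_fn(x)
    if len(r) != len(x):
        raise ValueError("the identity shortcut needs R(x) and x to have the same shape")
    return [ri + xi for ri, xi in zip(r, x)]  # the shortcut adds x back


def zero_layers(v):
    # Layers whose output is all zeros, i.e. R(x) = 0.
    return [0.0] * len(v)


def small_correction(v):
    # Hypothetical layers that learn a small correction on top of x.
    return [0.1 * vi for vi in v]


print(residual_block([1.0, 2.0, 3.0], zero_layers))  # [1.0, 2.0, 3.0]
print(residual_block([1.0, 2.0, 3.0], small_correction))
```

The first call shows one commonly cited advantage of this design: if the best mapping is close to the identity, the layers only need to drive R(x) toward zero, which is easier than learning H(x) = x from scratch.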


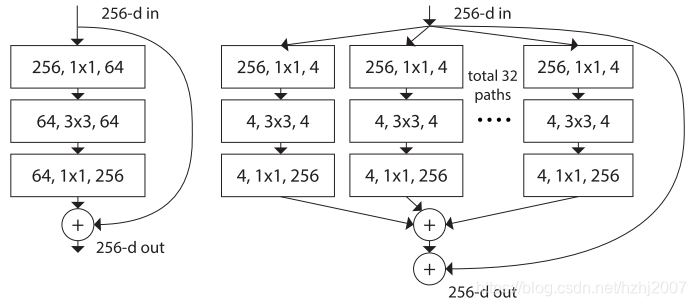

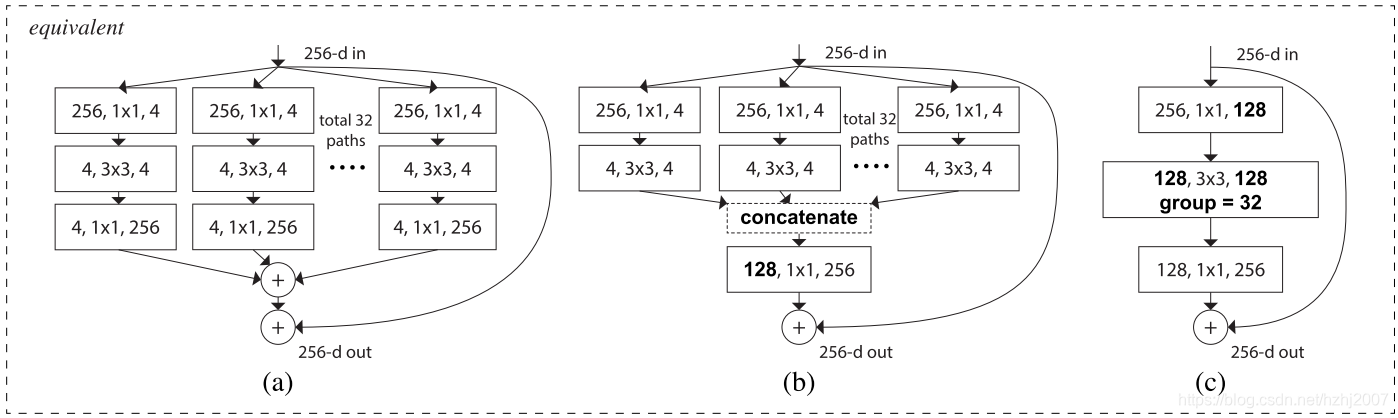

ResNeXt

The figure below shows this architecture alongside its equivalent Inception-style and group-convolution views.


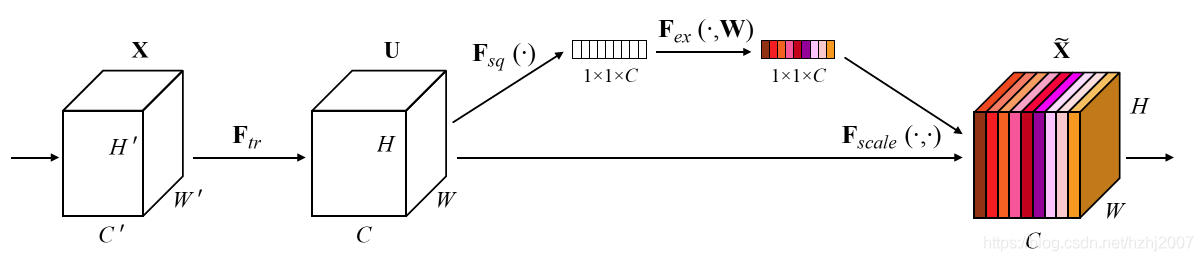
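The equivalence between the multi-path view and the group-convolution view can be checked by counting weights. The sketch below assumes a bottleneck on 256 channels with cardinality 32 and path width 4 (the "32×4d" setting from the ResNeXt paper) and ignores biases and batch normalization; the helper names are hypothetical.

```python
def resnext_paths_params(c, d, width=256):
    # Split-transform-merge view: c parallel paths, each
    # 1x1 (width -> d), 3x3 (d -> d), 1x1 (d -> width), outputs summed.
    per_path = width * d + 3 * 3 * d * d + d * width
    return c * per_path


def resnext_grouped_params(c, d, width=256):
    # Group-convolution view: 1x1 (width -> c*d), 3x3 group conv with c groups,
    # 1x1 (c*d -> width). A group conv holds K^2 * (N / groups) * M weights.
    inner = c * d
    return width * inner + 3 * 3 * (inner // c) * inner + inner * width


def resnet_bottleneck_params(d=64, width=256):
    # Plain ResNet bottleneck: 1x1 (width -> d), 3x3 (d -> d), 1x1 (d -> width).
    return width * d + 3 * 3 * d * d + d * width


print(resnext_paths_params(32, 4))    # 70144
print(resnext_grouped_params(32, 4))  # 70144
print(resnet_bottleneck_params())     # 69632
```

Both ResNeXt views give the same count, which is close to that of a plain ResNet bottleneck with a 64-channel middle layer; this is the sense in which ResNeXt increases cardinality at roughly constant complexity.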

Squeeze-and-Excitation (SE) Block

References:

Source: CSDN

Author: hzhj

Link: https://blog.csdn.net/hzhj2007/article/details/103469305