Training an Agent to Walk in a Straight Line with PyTorch Deep Reinforcement Learning

Problem statement

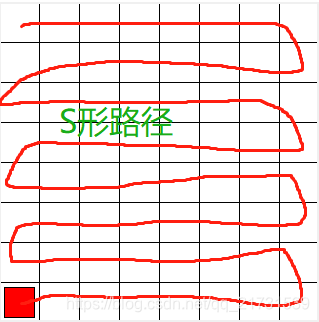

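I have recently been learning reinforcement learning and wanted to apply it to robot motion path planning, since I happen to have a project that needs it. The problem is as follows: the robot moves in a two-dimensional environment, which I simplified into an 8x8 grid (Figure 1), and the robot is a "piece" that occupies one cell. The robot starts at (0,0), and at each step it can take one of four actions: up, down, left, or right (it may not leave the grid, of course). My original goal was to have the robot traverse every cell of the grid without revisiting cells it had already passed through, much like a floor-sweeping robot. Ideally, training would produce an "S"-shaped sweeping path, which covers every cell exactly once. In the samples generated during actual training, however, the robot kept stumbling around with no pattern and could not even walk in a straight line, showing no sign of converging to the standard "S" path. Since the "S" path is made up of several straight segments, teaching the robot to walk straight first seemed likely to be an effective, and perhaps key, step toward faster convergence to the path I wanted. So I decided to start by using reinforcement learning to train the robot to walk in a straight line.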
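The "S" path described above is easy to write down explicitly. The sketch below is plain Python; the function names `s_path_actions` and `follow` are illustrative and not from the original project. It generates the action sequence for an S-shaped sweep of a width x height grid starting at (0, 0), using the same axis convention as the environment code in this post (x to the right, y downward, so "down" increases y), and replays it to check that every cell is visited exactly once. It also makes the observation concrete: the path is nothing but straight horizontal runs joined by single downward steps.

```python
# Minimal sketch of an S-shaped (boustrophedon) sweep, assuming (x, y) cell
# coordinates with y growing downward and the robot starting at (0, 0).
# Function names and action letters are illustrative only.

MOVES = {"u": (0, -1), "d": (0, 1), "l": (-1, 0), "r": (1, 0)}


def s_path_actions(width, height):
    """Actions for an S sweep: right along row 0, one step down,
    left along row 1, one step down, and so on."""
    actions = []
    for row in range(height):
        horizontal = "r" if row % 2 == 0 else "l"
        actions.extend([horizontal] * (width - 1))  # one straight run
        if row < height - 1:
            actions.append("d")  # single step to the next row
    return actions


def follow(actions, width, height, start=(0, 0)):
    """Replay actions from start; raise if a move would leave the grid."""
    x, y = start
    visited = [(x, y)]
    for a in actions:
        dx, dy = MOVES[a]
        x, y = x + dx, y + dy
        if not (0 <= x < width and 0 <= y < height):
            raise ValueError(f"move {a!r} leaves the grid at {(x, y)}")
        visited.append((x, y))
    return visited


path = follow(s_path_actions(8, 8), 8, 8)
print(len(path), len(set(path)))  # 64 64: every cell visited exactly once
```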

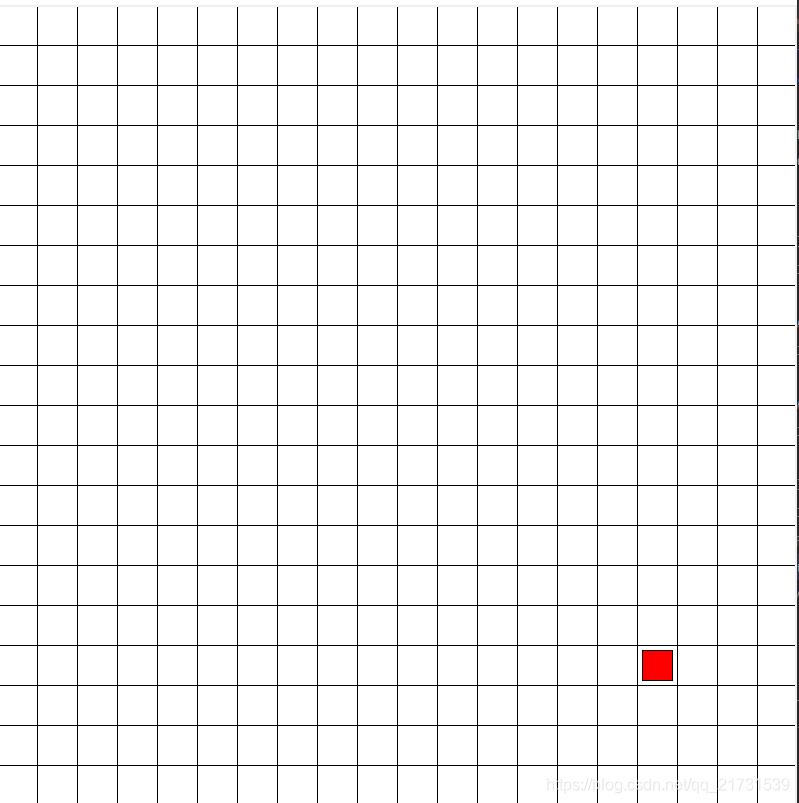

Figure 1: the 8x8 grid and the desired S-shaped robot path. Figure 2: the 20x20 grid.

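When training on the 8x8 grid, the robot quickly runs into a wall and stops, producing a large number of useless samples. Just as people learn to walk in an open space rather than a cramped one, there is no need to train straight-line walking on the 8x8 task grid; a larger 20x20 grid (Figure 2) can be used instead. The state is defined as the robot's current velocity direction (up, down, left, or right), encoded in the code as a length-4 one-hot vector. The reward is defined as follows: if the action points in the same direction as the current velocity (for example, velocity up and action up, or velocity down and action down), the reward is 10; if the action is perpendicular to the current velocity (for example, velocity up and action left, or velocity down and action right), the reward is -5; if the action is opposite to the current velocity (for example, velocity up and action down, or velocity left and action right), the reward is -10. An episode ends when the robot runs into a wall or when its accumulated reward drops below -25. The PyTorch code is as follows: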
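The state and reward rule above fit in a few lines. This is a minimal sketch in plain Python, assuming the direction indices used by the branches of `step()` in the environment code (0 = up, 1 = down, 2 = right, 3 = left); the helper names `one_hot`, `reward` and `OPPOSITE` are illustrative, not part of the original code.

```python
# Sketch of the state encoding and reward rule, assuming direction indices
# 0 = up, 1 = down, 2 = right, 3 = left (as in the environment's step()).
# Helper names are illustrative only.

OPPOSITE = {0: 1, 1: 0, 2: 3, 3: 2}


def one_hot(direction):
    """State: the current velocity direction as a length-4 one-hot list."""
    state = [0.0] * 4
    state[direction] = 1.0
    return state


def reward(speed_dir, action):
    """+10 for the same direction, -10 for the opposite, -5 otherwise."""
    if action == speed_dir:
        return 10
    if action == OPPOSITE[speed_dir]:
        return -10
    return -5  # the two perpendicular directions


for s in range(4):
    print(one_hot(s), [reward(s, a) for a in range(4)])
```

Each row of the printout lists the rewards of the four actions for one heading; exactly one action per heading earns +10, which is the behaviour the agent should learn to prefer.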

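'''
Training environment (saved as Learn_straight_env.py; the learning script below imports it under that name)
'''
import time
import tkinter as tk  # tkinter is used for the simulated environment

import numpy as np
import torch  # PyTorch
import torch.nn as nn
import torch.nn.functional as F

UNIT = 40  # size of one grid cell in pixels
WP_H = 20  # grid height (cells)
WP_W = 20  # grid width (cells)
direction = 4  # number of velocity directions (size of the state)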

class Run(tk.Tk, object):
    def __init__(self):
        super(Run, self).__init__()
        # Action indices as handled in step(): 0 = up, 1 = down, 2 = right, 3 = left
        self.action_space = ['u', 'd', 'r', 'l']
        self.n_actions = len(self.action_space)
        self.n_features = direction
        self.title('Run')
        self.geometry('{0}x{1}'.format(WP_W * UNIT, WP_H * UNIT))  # width x height
        self._build_WP()

    def _build_WP(self):
        self.canvas = tk.Canvas(self, bg='white',
                                height=WP_H * UNIT,
                                width=WP_W * UNIT)
        # create grids
        for c in range(0, WP_W * UNIT, UNIT):
            x0, y0, x1, y1 = c, 0, c, WP_H * UNIT
            self.canvas.create_line(x0, y0, x1, y1)
        for r in range(0, WP_H * UNIT, UNIT):
            x0, y0, x1, y1 = 0, r, WP_W * UNIT, r
            self.canvas.create_line(x0, y0, x1, y1)
        # create origin (pixel centre of cell (0, 0))
        origin = np.array([20, 20])
        # create red rect (the robot)
        self.rect = self.canvas.create_rectangle(
            origin[0] - 15, origin[1] - 15,
            origin[0] + 15, origin[1] + 15,
            fill='red')
        # pack all
        self.canvas.pack()
        self.acculR = 0  # accumulated reward
        self.ToolCord = np.array([0, 0])  # robot cell coordinates (x, y)
        self.speed = torch.zeros(4)  # one-hot velocity direction, same shape as in reset()

    def reset(self):
        self.update()
        time.sleep(0.5)
        self.canvas.delete(self.rect)
        origin = np.array([20, 20])
        self.acculR = 0
        self.speed = torch.zeros(4)
        self.speed[int(np.random.randint(0, 4))] = 1  # random initial velocity direction
        # random starting cell: x in [0, WP_W), y in [0, WP_H)
        self.ToolCord = np.array([np.random.randint(0, WP_W), np.random.randint(0, WP_H)])
        self.rect = self.canvas.create_rectangle(
            self.ToolCord[0] * UNIT + origin[0] - 15, self.ToolCord[1] * UNIT + origin[1] - 15,
            self.ToolCord[0] * UNIT + origin[0] + 15, self.ToolCord[1] * UNIT + origin[1] + 15,
            fill='red')
        s = self.speed.flatten()
        return s  # reset returns the initial state

    def step(self, action):
        outside = 0
        base_action = np.array([0, 0])  # pixel displacement of the rectangle
        if action == 0:  # up
            if self.speed[0] == 1:
                reward = 10
            elif self.speed[1] == 1:
                reward = -10
            elif self.speed[2] == 1 or self.speed[3] == 1:
                reward = -5
            self.speed = torch.zeros(4)
            if self.ToolCord[1] > 0:
                base_action[1] -= UNIT
                self.ToolCord = np.add(self.ToolCord, (0, -1))
            else:  # already on the top edge: hitting the wall ends the episode
                outside = 1
            self.speed[0] = 1

        elif action == 1:  # down
            if self.speed[1] == 1:
                reward = 10
            elif self.speed[0] == 1:
                reward = -10
            elif self.speed[2] == 1 or self.speed[3] == 1:
                reward = -5
            self.speed = torch.zeros(4)
            if self.ToolCord[1] < (WP_H - 1):
                base_action[1] += UNIT
                self.ToolCord = np.add(self.ToolCord, (0, 1))
            else:  # already on the bottom edge
                outside = 1
            self.speed[1] = 1

        elif action == 2:  # right
            if self.speed[2] == 1:
                reward = 10
            elif self.speed[3] == 1:
                reward = -10
            elif self.speed[0] == 1 or self.speed[1] == 1:
                reward = -5
            self.speed = torch.zeros(4)
            if self.ToolCord[0] < (WP_W - 1):
                base_action[0] += UNIT
                self.ToolCord = np.add(self.ToolCord, (1, 0))
            else:  # already on the right edge
                outside = 1
            self.speed[2] = 1

        elif action == 3:  # left
            if self.speed[3] == 1:
                reward = 10
            elif self.speed[2] == 1:
                reward = -10
            elif self.speed[0] == 1 or self.speed[1] == 1:
                reward = -5
            self.speed = torch.zeros(4)
            if self.ToolCord[0] > 0:
                base_action[0] -= UNIT
                self.ToolCord = np.add(self.ToolCord, (-1, 0))
            else:  # already on the left edge
                outside = 1
            self.speed[3] = 1

        self.canvas.move(self.rect, base_action[0], base_action[1])  # move agent
        s_ = self.speed.flatten()
        # end flag: stop when the accumulated reward drops below -25 or the robot hits a wall
        self.acculR += reward
        done = self.acculR < -25 or outside == 1
        return s_, reward, done, outside

    def render(self):
        time.sleep(0.1)
        self.update()

def update():
    # Quick manual test: always take action 1 (down) for two episodes.
    for t in range(2):
        s = env.reset()
        while True:
            env.render()
            a = 1
            s_, r, done, ifoutside = env.step(a)
            print(s_)
            if done:
                break

if __name__ == '__main__':
    env = Run()
    env.after(100, update)
    env.mainloop()

"""
Learning process (a separate script; it imports the environment above as Learn_straight_env)
"""

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F

import Learn_straight_env  # the environment script above

# Hyper Parameters
env = Learn_straight_env.Run()
N_ACTIONS = env.n_actions
N_STATES = env.n_features
MEMORY_CAPACITY = 100  # replay memory capacity
BATCH_SIZE = 20

# Deep Q Network (off-policy)
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(N_STATES, 4)  # a single hidden layer with 4 neurons
        self.fc1.weight.data.normal_(0, 0.1)  # initialization
        self.out = nn.Linear(4, N_ACTIONS)
        self.out.weight.data.normal_(0, 0.1)  # initialization

    def forward(self, x):
        x = self.fc1(x)  # forward pass
        x = F.relu(x)
        actions_value = self.out(x)
        return actions_value

class DQN(object):
    def __init__(self):
        self.eval_net, self.target_net = Net(), Net()
        self.learn_step_counter = 0  # for target updating
        self.LR = 0.1  # learning rate
        self.memory_counter = 0  # for storing memory
        # each row: state (N_STATES), action, reward, next state (N_STATES)
        self.memory = np.zeros((MEMORY_CAPACITY, N_STATES * 2 + 2))  # initialize memory
        self.optimizer = torch.optim.Adam(self.eval_net.parameters(), lr=self.LR)
        self.loss_func = nn.MSELoss()
        self.EPSILON = 0.5  # probability of acting greedily (not of exploring)
        self.GAMMA = 0.9  # reward discount
        self.TARGET_REPLACE_ITER = 1  # target update frequency

    def choose_action(self, x):
        # input only one sample: add a batch dimension
        x = torch.unsqueeze(torch.as_tensor(x, dtype=torch.float32), 0)
        if np.random.uniform() < self.EPSILON:  # greedy
            with torch.no_grad():
                actions_value = self.eval_net(x)
            # return a plain int so env.step() and store_transition() receive a scalar
            action = int(torch.argmax(actions_value, dim=1).item())
        else:  # random
            action = int(np.random.randint(0, N_ACTIONS))
        return action

    def store_transition(self, s, a, r, s_):
        transition = np.hstack((np.asarray(s), [a, r], np.asarray(s_)))
        # replace the old memory with new memory
        index = self.memory_counter % MEMORY_CAPACITY
        self.memory[index, :] = transition
        self.memory_counter += 1

    def learn(self):
        # target parameter update
        if self.learn_step_counter % self.TARGET_REPLACE_ITER == 0:
            self.target_net.load_state_dict(self.eval_net.state_dict())
        self.learn_step_counter += 1

        # sample batch transitions
        sample_index = np.random.choice(MEMORY_CAPACITY, BATCH_SIZE)
        b_memory = self.memory[sample_index, :]
        b_s = torch.FloatTensor(b_memory[:, :N_STATES])
        b_a = torch.LongTensor(b_memory[:, N_STATES:N_STATES + 1].astype(int))
        b_r = torch.FloatTensor(b_memory[:, N_STATES + 1:N_STATES + 2])
        b_s_ = torch.FloatTensor(b_memory[:, -N_STATES:])

        # q_eval w.r.t the action in experience
        q_eval = self.eval_net(b_s).gather(1, b_a)  # shape (batch, 1)
        q_next = self.target_net(b_s_).detach()  # detach from graph, don't backpropagate
        q_target = b_r + self.GAMMA * q_next.max(1)[0].view(BATCH_SIZE, 1)  # shape (batch, 1)
        loss = self.loss_func(q_eval, q_target)

        self.optimizer.zero_grad()
        loss.backward()
        self.optimizer.step()

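print('\nCollecting experience...')
EPI = 200
dqn = DQN()
for i_episode in range(EPI):
    s = env.reset()
    ep_r = 0
    # adjust the learning parameters over the episodes
    dqn.EPSILON = 0.6 + 0.3 * i_episode / EPI  # greedy probability rises from 0.6 toward 0.9
    dqn.LR = 0.1 - 0.09 * i_episode / EPI  # learning rate falls from 0.1 toward 0.01
    dqn.GAMMA = 0.5
    # recreating Adam applies the new LR but also resets its moment estimates
    dqn.optimizer = torch.optim.Adam(dqn.eval_net.parameters(), lr=dqn.LR)
    dqn.TARGET_REPLACE_ITER = 1 + int(10 * i_episode / EPI)  # target sync every 1 to 10 learn steps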

    while True:
        env.render()
        a = dqn.choose_action(s)
        # take action
        s_, r, done, outside = env.step(a)
        dqn.store_transition(s, a, r, s_)
        ep_r += r
        # train the eval network once the replay memory has filled up
        if dqn.memory_counter > MEMORY_CAPACITY:
            dqn.learn()
        if done:
            print('Ep: ', i_episode, 'reward', ep_r)
            print(s_)
            break
        s = s_
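To see what the network is being pushed toward, one can iterate the same target that `learn()` regresses on, `q_target = r + GAMMA * max_a' Q(s', a')`, on a small table instead of a network. This is a sketch under loudly stated simplifying assumptions: walls and the -25 termination rule are ignored, so the state is only the heading and the next state is simply the direction of the chosen action; GAMMA = 0.5 as set in the training loop. The names `Q`, `reward` and `OPPOSITE` are illustrative.

```python
# Tabular Q-iteration on a direction-only MDP, ASSUMING no walls and no
# termination (a simplification of the real environment).
# Directions: 0 = up, 1 = down, 2 = right, 3 = left.
GAMMA = 0.5
OPPOSITE = {0: 1, 1: 0, 2: 3, 3: 2}


def reward(speed_dir, action):
    if action == speed_dir:
        return 10.0
    if action == OPPOSITE[speed_dir]:
        return -10.0
    return -5.0


Q = [[0.0] * 4 for _ in range(4)]
for _ in range(200):  # repeated Bellman backups; each one shrinks the error by GAMMA
    # Taking action a makes a the new heading, i.e. the next state.
    Q = [[reward(s, a) + GAMMA * max(Q[a]) for a in range(4)] for s in range(4)]

for s, row in enumerate(Q):
    print(s, [round(q, 3) for q in row])
```

Under these assumptions the fixed point is 20 for keeping the heading, 5 for turning and 0 for reversing (from V = 10 + 0.5 V), so the greedy policy is "go straight". Because the real state carries only the heading and not the position, the trained network cannot see an approaching wall, so its values can at best approximate this table.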

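In the end, the trained robot can walk in a straight line. Although my network has only one hidden layer with very few neurons, reinforcement learning did succeed in giving the robot the ability to walk straight on its own.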
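A network this small is enough here because the state is one-hot. The `Net` class uses `nn.Linear(N_STATES, 4)`, ReLU, `nn.Linear(4, N_ACTIONS)`, with N_STATES = N_ACTIONS = 4 in this environment, which is (4·4 + 4) + (4·4 + 4) = 40 parameters. With identity weights and zero bias, the hidden layer passes a one-hot state through ReLU unchanged, and each column of the output weights can then hold the four action values for one heading. The sketch below hand-sets such weights in plain Python to show that the architecture can represent the "keep going" policy; the weights are illustrative (they reuse the one-step rewards) and are not what training actually produces.

```python
# Hand-set weights for a Linear(4, 4) -> ReLU -> Linear(4, 4) network,
# evaluated in plain Python. Illustrative values, not trained weights.
# Directions: 0 = up, 1 = down, 2 = right, 3 = left.


def linear(weight, bias, x):
    """y = W x + b, with weight stored as [out][in] like nn.Linear."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(v):
    return [max(0.0, t) for t in v]


OPPOSITE = {0: 1, 1: 0, 2: 3, 3: 2}

W1 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # identity
b1 = [0.0] * 4
# Row a, column s: value assigned to action a when the heading is s.
W2 = [[10.0 if a == s else -10.0 if a == OPPOSITE[s] else -5.0 for s in range(4)]
      for a in range(4)]
b2 = [0.0] * 4

for s in range(4):
    x = [1.0 if i == s else 0.0 for i in range(4)]  # one-hot state
    q = linear(W2, b2, relu(linear(W1, b1, x)))
    print(s, q, "greedy action:", q.index(max(q)))

n_params = (4 * 4 + 4) + (4 * 4 + 4)  # fc1 + out, weights plus biases
print("parameters:", n_params)
```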

Source: CSDN

Author: Shawn_MB

Link: https://blog.csdn.net/qq_21731539/article/details/103466206