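An introduction to data analysis with Python. As the first article in an introductory series, it covers the following:

1. Data sources (the data in this example comes from the Zhilian Zhaopin job postings scraped in the previous article): reading from a database, writing to a CSV file, reading a CSV file, and so on.

2. Data processing: a series of operations on the data loaded into memory (processing as a whole includes cleaning, filtering, grouping, aggregation, sorting, computing averages, and more; this article only touches on a few of them).

3. Visual analysis of the processed data.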
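As a rough illustration of steps 1 and 2 above, here is a minimal sketch on made-up data. The column names (`City`, `SalaryMin`, `SalaryMax`, `SalaryAvg`) and the values are assumptions for the example only and are not the real zhilian schema. An in-memory buffer stands in for the CSV file.

```python
import io

import pandas as pd

# Made-up rows; the column names are illustrative, not the real zhilian schema.
raw = pd.DataFrame({
    "City": ["Beijing", "Shanghai", "Beijing", "Shenzhen", "Shanghai"],
    "SalaryMin": [10, 12, 20, 15, None],
    "SalaryMax": [20, 18, 30, 28, 22],
})

# Step 1: write to CSV and read it back (a StringIO buffer stands in for a file).
buffer = io.StringIO()
raw.to_csv(buffer, index=False)
buffer.seek(0)
jobs = pd.read_csv(buffer)

# Step 2, cleaning: drop rows that have a missing value.
cleaned = jobs.dropna().copy()

# Step 2, computing: add a column with the midpoint of the salary range.
cleaned["SalaryAvg"] = (cleaned["SalaryMin"] + cleaned["SalaryMax"]) / 2

# Step 2, filtering: keep rows whose midpoint is at least 16.
filtered = cleaned[cleaned["SalaryAvg"] >= 16]

# Step 2, grouping and statistics: posting count and mean midpoint per city.
summary = filtered.groupby("City")["SalaryAvg"].agg(["count", "mean"]).reset_index()

# Step 2, sorting: highest mean first.
summary = summary.sort_values("mean", ascending=False).reset_index(drop=True)
print(summary)
```

Passing `index=False` to `to_csv` keeps the row index out of the file, so the data read back has the same columns as the data written.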

#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""
@Author : xiaofeng
@Time : 2018/12/19 15:23
@Desc : Less interests,More interest. (Introduction to data analysis)
@Project : python_appliction
@FileName: analysis1.py
@Software: PyCharm
@Blog :https://blog.csdn.net/zwx19921215
"""

import pymysql as db
import pandas as pd
import numpy as np
import seaborn as sns
import matplotlib as mpl
import matplotlib.pyplot as plt

# mysql config
mysql_config = {
    'host': '110.0.2.130',
    'user': 'test',
    'password': 'test',
    'database': 'xiaofeng',
    'charset': 'utf8'
}


# Read data from MySQL and write it to a local file.
# :param page_no: page number (values below 1 are treated as the first page)
# :param page_size: number of rows per page
# :param path: path of the file to write

def read_to_csv(page_no, page_size, path):
    # LIMIT expects a row offset, so convert the page number into one
    if page_no > 1:
        offset = (page_no - 1) * page_size
    else:
        offset = 0
    sql = 'select * from zhilian limit ' + str(offset) + ',' + str(page_size)
    # read from database; close the connection even if the query fails
    database = db.connect(**mysql_config)
    try:
        df = pd.read_sql(sql, database)
    finally:
        database.close()
    print(df)
    # write to csv
    df.to_csv(path, encoding='gbk')


# Read a CSV file from a remote address or a local path.

def read_from_csv(path):
    # remote address
    # data_url = 'https://xxxx/zhilian.csv'
    # local address
    # data_url = 'G:/zhilian.csv'
    df = pd.read_csv(path, encoding='gbk')
    return df


# Simple processing of the data set (filtering, grouping, sorting).

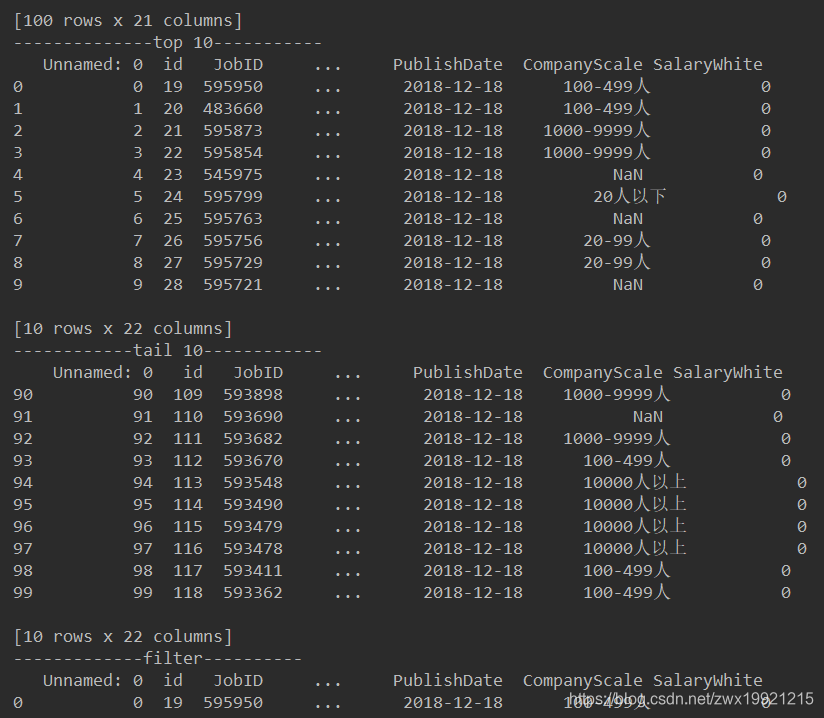

def simple_op(path):

df = read_from_csv(path)

# top 10 :获取前10行

print('--------------top 10-----------')

top = df.head(10)

print(top)

# tail 10:获取后10行

print('------------tail 10------------')

tail = df.tail(10)

print(tail)

# filter:根据指定条件过滤

print('-------------filter----------')

special_jobid = df[df.JobID == 595950]

print(special_jobid)

special = df[(df.id >= 110) & (df.PublishDate == '2018-12-18')]

print(special)

# check :检查各列缺失情况

print('-------------check----------------')

check = df.info()

print(check)

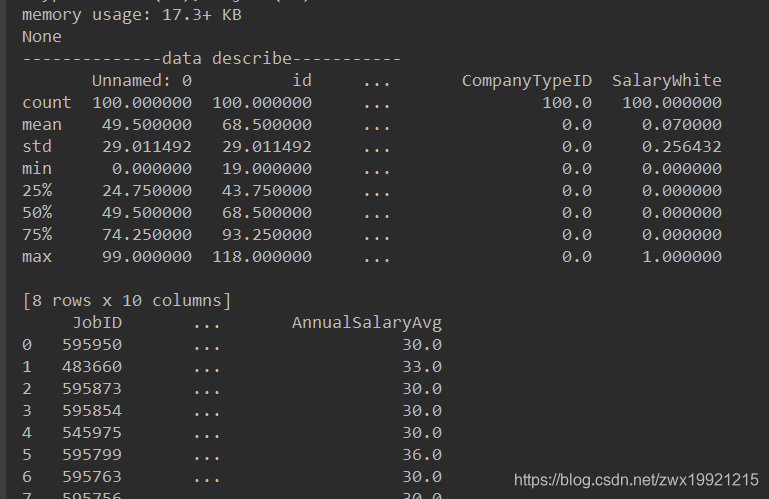

print('--------------data describe-----------')

# count,平均数,标准差,中位数,最小值,最大值,25 % 分位数,75 % 分位数

describe = df.describe()

print(describe)

# 添加新列

df2 = df.copy()

df2['AnnualSalaryAvg'] = (df['AnnualSalaryMax'] + df['AnnualSalaryMin']) / 2

# 重新排列指定列

columns = ['JobID', 'JobTitle', 'CompanyName', 'AnnualSalaryMin', 'AnnualSalaryMax', 'AnnualSalaryAvg']

df = pd.DataFrame(df2, columns=columns)

print(df)

"""

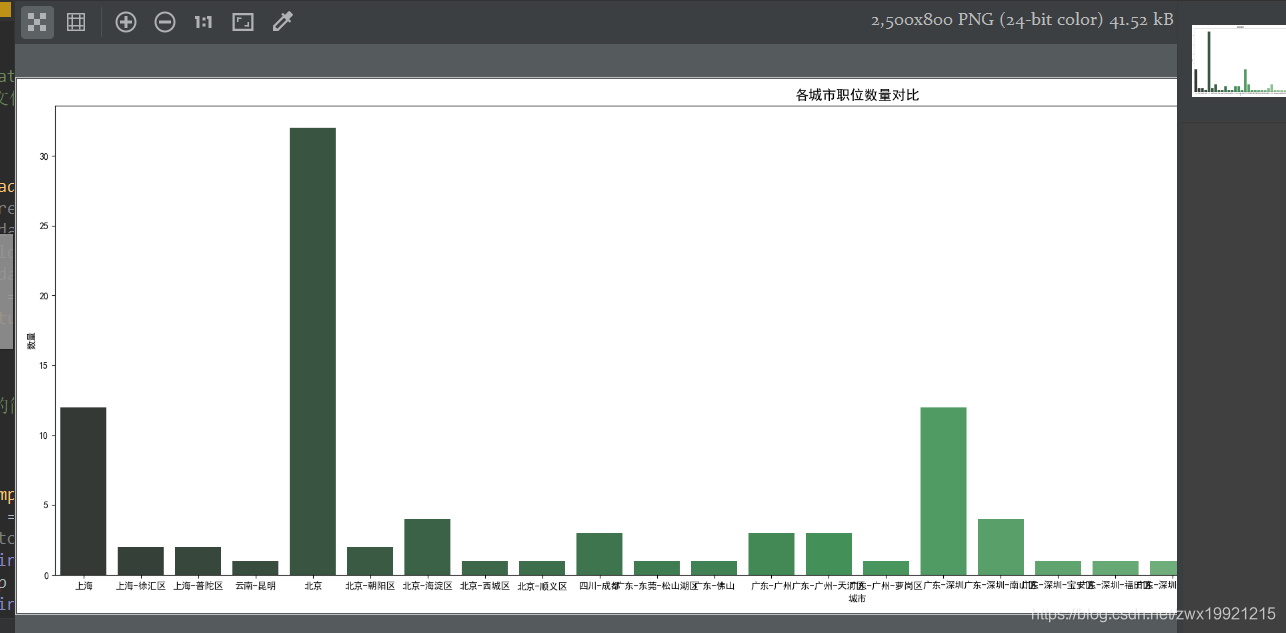

可视化分析

"""

def visualized_analysis(path):
    df = read_from_csv(path)
    # number of postings per city (counts the non-null AnnualSalaryMax values in each group)
    df_post_count = df.groupby('JobLactionStr')['AnnualSalaryMax'].count().to_frame().reset_index()
    # display Chinese labels correctly (set before any text is drawn)
    plt.rcParams['font.sans-serif'] = ['SimHei']
    # display the minus sign correctly
    plt.rcParams['axes.unicode_minus'] = False
    # subplots(1, 1) creates a 1x1 grid of subplots; figsize=(25, 8) is the figure width and height in inches
    # f, [ax1, ax2] = plt.subplots(1, 2, figsize=(25, 8)) would create a 1x2 grid
    f, ax2 = plt.subplots(1, 1, figsize=(25, 8))
    sns.barplot(x='JobLactionStr', y='AnnualSalaryMax', palette='Greens_d', data=df_post_count, ax=ax2)
    ax2.set_title('各城市职位数量对比', fontsize=15)  # "Number of postings by city"
    ax2.set_xlabel('城市')  # "City"
    ax2.set_ylabel('数量')  # "Count"
    plt.show()


if __name__ == '__main__':
    path = 'G:/zhilian.csv'
    read_to_csv(0, 100, path)
    df = read_from_csv(path)
    simple_op(path)
    visualized_analysis(path)
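The page-to-offset arithmetic in `read_to_csv` can be pulled out and checked on its own. The sketch below is one possible way to do that, not the author's code: the helper names `page_to_offset` and `build_page_query` are hypothetical, and the table name is assumed to be a trusted constant because it cannot be passed as a bound parameter.

```python
def page_to_offset(page_no, page_size):
    """Return the zero-based row offset for a one-based page number."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Page numbers below 1 are treated as the first page, as in read_to_csv.
    return (max(page_no, 1) - 1) * page_size


def build_page_query(table, page_no, page_size):
    """Return (sql, params) for a 'limit offset, count' query.

    The offset and count are left as %s placeholders so the database
    driver can bind them; only the trusted table name is formatted in.
    """
    sql = "select * from {} limit %s, %s".format(table)
    return sql, (page_to_offset(page_no, page_size), page_size)


sql, params = build_page_query("zhilian", 3, 100)
print(sql, params)
```

With pymysql, which uses `%s` placeholders, the pair could then be handed to pandas as `pd.read_sql(sql, database, params=params)` instead of concatenating the numbers into the SQL string.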

The console output is as follows:

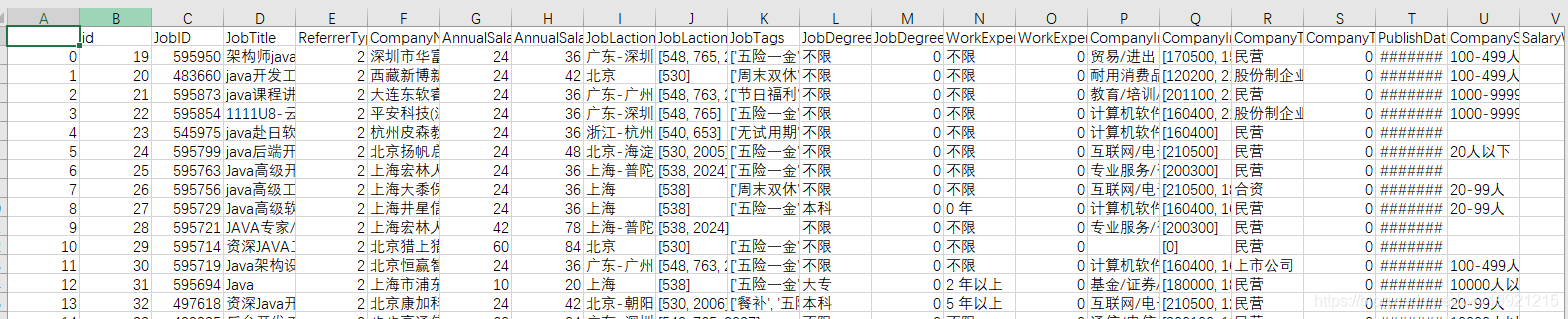

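The CSV file written locally looks like this:

The visualization looks like this:

Note: since this is the first article in the introductory series, it does not explore data analysis in much depth. A deeper look will follow in later articles in the series.

Source: CSDN

Author: Garry1115

Link: https://blog.csdn.net/zwx19921215/article/details/85125002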