Development tool: PyCharm

Code

# -*- coding: UTF-8 -*-
"""
Please note, this code is only for Python 3+. If you are using Python 2, please modify the code accordingly.
"""

import tensorflow as tf

def add_layer(inputs, in_size, out_size, activation_function=None):
    # add one more layer and return the output of this layer
    with tf.name_scope('layer'):
        with tf.name_scope('weights'):
            Weights = tf.Variable(tf.random_normal([in_size, out_size]), name='W')
        with tf.name_scope('biases'):
            biases = tf.Variable(tf.zeros([1, out_size]) + 0.1, name='b')
        with tf.name_scope('Wx_plus_b'):
            Wx_plus_b = tf.add(tf.matmul(inputs, Weights), biases)
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        return outputs

# define placeholder for inputs to network
with tf.name_scope('inputs'):  # the inputs scope contains x_input and y_input
    xs = tf.placeholder(tf.float32, [None, 1], name='x_input')
    ys = tf.placeholder(tf.float32, [None, 1], name='y_input')

# add hidden layer
l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)

# add output layer
prediction = add_layer(l1, 10, 1, activation_function=None)

# the error between prediction and real data
with tf.name_scope('loss'):
    loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction),
                                        reduction_indices=[1]))

with tf.name_scope('train'):
    train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

sess = tf.Session()
writer = tf.summary.FileWriter("logs/", sess.graph)

# important step
sess.run(tf.global_variables_initializer())
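To make the arithmetic inside `add_layer` and the `loss` scope concrete, here is a minimal plain-Python sketch of the same computation without TensorFlow. The helper names `relu`, `dense`, and `mse_loss` and the weight values are hypothetical, chosen only for illustration; the real graph starts from random weights.

```python
# Plain-Python sketch of what add_layer and the loss compute.
# All names and numeric values here are illustrative assumptions.

def relu(row):
    """Element-wise max(0, v), the same activation as tf.nn.relu."""
    return [max(0.0, v) for v in row]


def dense(inputs, weights, biases, activation=None):
    """Compute activation(inputs @ weights + biases) for a batch of rows."""
    outputs = []
    for row in inputs:
        # One output value per column of the weight matrix.
        out = [
            sum(x * w_row[j] for x, w_row in zip(row, weights)) + biases[j]
            for j in range(len(biases))
        ]
        outputs.append(activation(out) if activation else out)
    return outputs


def mse_loss(targets, predictions):
    """Mean over the batch of the per-row sum of squared errors."""
    per_row = [
        sum((t - p) ** 2 for t, p in zip(t_row, p_row))
        for t_row, p_row in zip(targets, predictions)
    ]
    return sum(per_row) / len(per_row)


xs = [[1.0], [-2.0]]        # batch of 2 samples, 1 feature each
hidden_w = [[1.0, -1.0]]    # shape [in_size=1, out_size=2]
hidden_b = [0.1, 0.1]       # biases start at 0.1, as in add_layer
hidden = dense(xs, hidden_w, hidden_b, activation=relu)
print(hidden)
print(mse_loss([[1.0], [0.0]], [[0.0], [2.0]]))
```

The inner sum over each row mirrors `tf.reduce_sum(..., reduction_indices=[1])`, and the outer average mirrors `tf.reduce_mean`.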

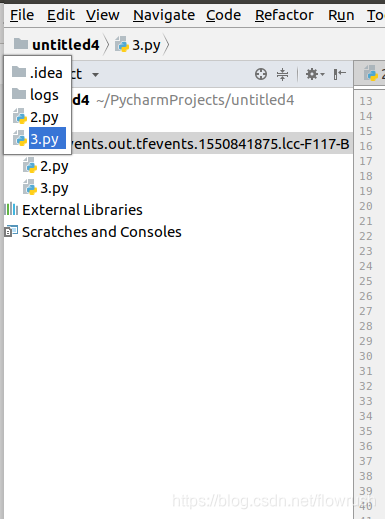

Find the logs directory

Right-click the `logs` folder to look up its path.

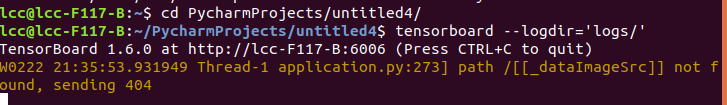

Open the terminal

Enter in the address bar:
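The command and address are not shown in the original. The sketch below assumes the standard `tensorboard --logdir` invocation pointed at the `logs/` directory written by `tf.summary.FileWriter`, and TensorBoard's default port 6006. The helper names `tensorboard_command` and `tensorboard_url` are hypothetical.

```python
import shlex


def tensorboard_command(logdir, port=6006):
    """Build the terminal command that serves the event files in logdir."""
    return ["tensorboard", "--logdir", logdir, "--port", str(port)]


def tensorboard_url(port=6006):
    """Address to type into the browser once TensorBoard is running."""
    return "http://localhost:{}".format(port)


# Assumption: the logs were written to "logs/" relative to the project root.
cmd = tensorboard_command("logs/")
print(" ".join(shlex.quote(part) for part in cmd))  # command for the terminal
print(tensorboard_url())                            # address for the browser
```

Run the printed command from the directory that contains `logs/`, or pass the full path found in the previous step.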

Source: CSDN

Author: flowrush

Link: https://blog.csdn.net/flowrush/article/details/87886056