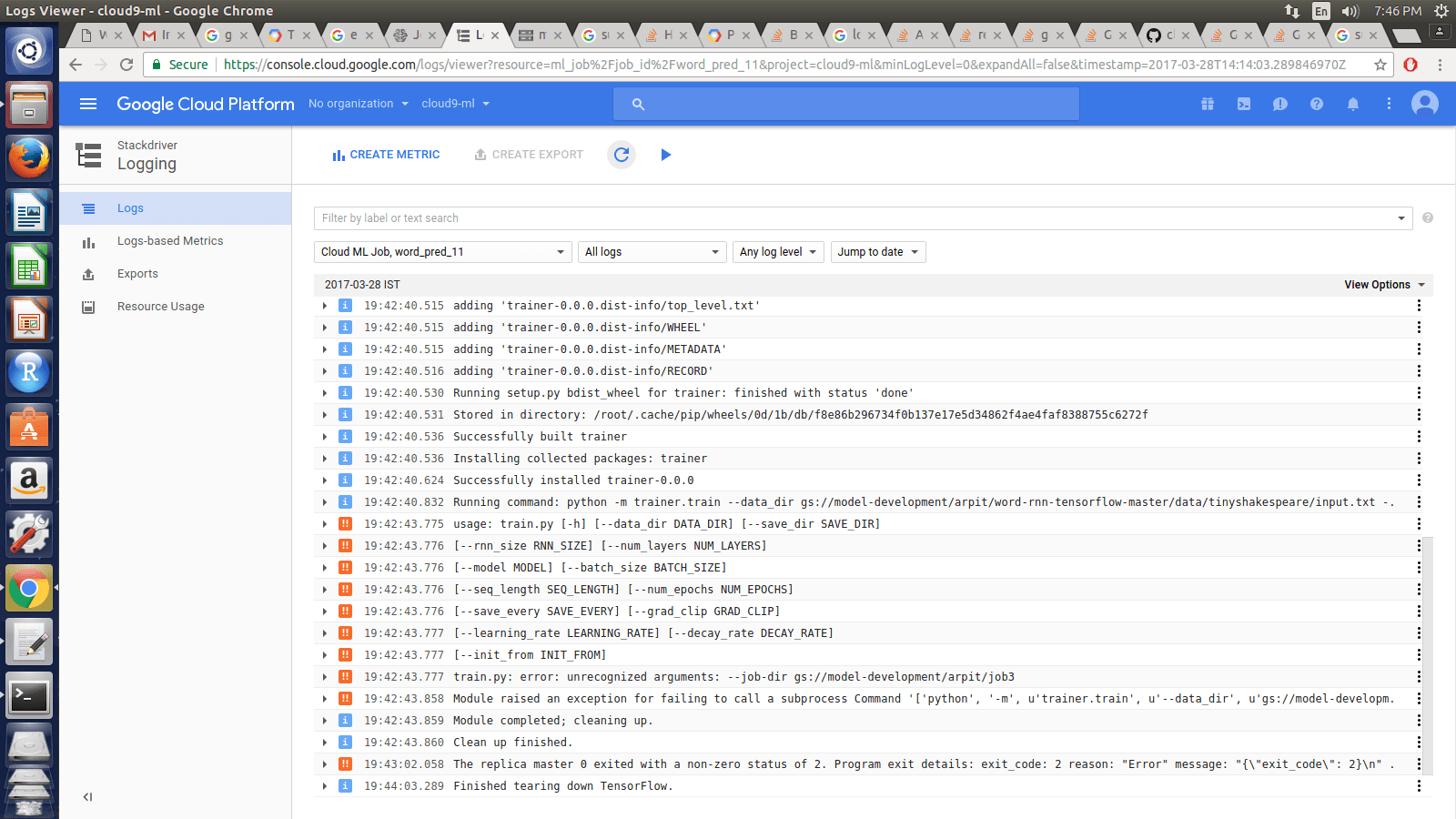

I tried running a word-RNN model from GitHub on Google Cloud ML. After submitting the job, I am getting errors in the log file.

This is what I submitted for training:

```shell
gcloud ml-engine jobs submit training word_pred_7 \
    --package-path trainer \
    --module-name trainer.train \
    --runtime-version 1.0 \
    --job-dir $JOB_DIR \
    --region $REGION \
    -- \
    --data_dir gs://model-development/arpit/word-rnn-tensorflow-master/data/tinyshakespeare/real1.txt \
    --save_dir gs://model-development/arpit/word-rnn-tensorflow-master/save
```

This is what I get in the log file.

Finally, after submitting 77 jobs to Cloud ML, I was able to run the job. The problem was not with the arguments used when submitting the job; it was the IO errors raised by the .npy files, which have to be stored using file_io.FileIO and read back through StringIO.

These IO errors are not mentioned anywhere, so check for them if you see any error saying "No such file or directory".
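A minimal sketch of that workaround, written for Python 3, where `io.BytesIO` plays the role that `StringIO` played for binary data in Python 2. It assumes numpy is installed; the helper names `save_npy`/`load_npy` and the `opener` parameter are hypothetical. The idea is that numpy only understands local paths, so the bytes are produced or parsed in memory and the actual file access goes through an opener. On Cloud ML Engine the assumed opener would be `file_io.FileIO` from `tensorflow.python.lib.io`; locally the built-in `open` is used.

```python
import io

import numpy as np


def save_npy(path, array, opener=open):
    """Serialize `array` to .npy bytes in memory, then write them via `opener`.

    np.save accepts any file-like object, so writing into a BytesIO buffer
    avoids handing a gs:// path to numpy, which only understands local paths.
    """
    buf = io.BytesIO()
    np.save(buf, array)
    with opener(path, 'wb') as f:
        f.write(buf.getvalue())


def load_npy(path, opener=open):
    """Read raw .npy bytes via `opener` and let numpy parse them from memory."""
    with opener(path, 'rb') as f:
        data = f.read()
    return np.load(io.BytesIO(data))


# Assumed usage on Cloud ML Engine (TensorFlow 1.x), not run here:
#   from tensorflow.python.lib.io import file_io
#   save_npy('gs://<bucket>/save/data.npy', tensor, opener=file_io.FileIO)
#   tensor = load_npy('gs://<bucket>/save/data.npy', opener=file_io.FileIO)
```

Keeping the opener as a parameter lets the same code run locally with `open` and on the cloud with `file_io.FileIO`.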

You will need to modify your train.py to accept a "--job-dir" command-line argument.

When you specify --job-dir in gcloud, the service passes it through to your program as an argument, so your argument parser (argparse or tf.flags, depending on which you're using) will need to be modified accordingly.
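To see why this matters: argparse rejects any option it has not been told about, printing "unrecognized arguments" and exiting. The sketch below assumes the trainer uses argparse with the question's `--data_dir` and `--save_dir` flags; the `gs://bucket/...` paths are placeholders, the helper names are hypothetical, and the argument order is illustrative rather than exactly what the service sends.

```python
import argparse


def build_parser(accept_job_dir):
    """Trainer parser with the question's two flags, optionally --job-dir."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--data_dir')
    parser.add_argument('--save_dir')
    if accept_job_dir:
        # argparse stores '--job-dir' under the attribute name 'job_dir'
        parser.add_argument('--job-dir', default=None)
    return parser


def try_parse(parser, argv):
    """Return the parsed Namespace, or None if argparse rejects argv."""
    try:
        return parser.parse_args(argv)
    except SystemExit:
        # On error argparse prints the usage message and exits with status 2
        return None


# Placeholder argv: the user arguments plus the forwarded --job-dir
ARGV = [
    '--data_dir', 'gs://bucket/data/real1.txt',
    '--save_dir', 'gs://bucket/save',
    '--job-dir', 'gs://bucket/job',
]

print(try_parse(build_parser(accept_job_dir=False), ARGV))         # None
print(try_parse(build_parser(accept_job_dir=True), ARGV).job_dir)  # gs://bucket/job
```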

I had the same issue, and it seems that Google Cloud passes --job-dir to your own script regardless (even if you place it before the -- in the gcloud command).

I fixed it the same way as the official gcloud census example, on line 153 and line 183:

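```python
import argparse

parser = argparse.ArgumentParser()
# ... your existing arguments (e.g. --data_dir, --save_dir) go here ...
parser.add_argument(
    '--job-dir',
    help='GCS location to write checkpoints and export models',
    required=True
)
args = parser.parse_args()
arguments = args.__dict__
# argparse stores '--job-dir' as 'job_dir'; pop it so that train_model
# does not receive an unexpected keyword argument
job_dir = arguments.pop('job_dir')
train_model(**arguments)
```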

Basically, your Python main program has to accept this --job-dir parameter, even if it does not use it.

In addition to adding --job-dir as an accepted argument, I think you should also move the flag after the --.

From the Getting Started guide:

Run the local train command using the --distributed option. Be sure to place the flag above the -- that separates the user arguments from the command-line arguments.

where, in that case, --distributed was a command-line argument.

EDIT:

--job-dir IS NOT a user argument, so it is correct to place it before the --.
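The `--` convention can be illustrated with a toy splitter: flags before the first `--` are read by gcloud, and flags after it are handed to the trainer. `split_at_separator` is a hypothetical helper, not gcloud's actual parser. It shows that in the question's command --job-dir already sits on the gcloud side, as the edit says it should; the service then forwards its value to the trainer anyway, which is why the trainer must accept it.

```python
def split_at_separator(argv, sep='--'):
    """Split argv at the first `sep`: (flags before it, flags after it)."""
    if sep in argv:
        i = argv.index(sep)
        return argv[:i], argv[i + 1:]
    # No separator: everything belongs to the tool, nothing to the user program
    return list(argv), []


# The submit command from the question, tokenized (env vars left unexpanded)
CMD = [
    '--package-path', 'trainer',
    '--module-name', 'trainer.train',
    '--runtime-version', '1.0',
    '--job-dir', '$JOB_DIR',
    '--region', '$REGION',
    '--',
    '--data_dir', 'gs://model-development/arpit/word-rnn-tensorflow-master/data/tinyshakespeare/real1.txt',
    '--save_dir', 'gs://model-development/arpit/word-rnn-tensorflow-master/save',
]

gcloud_flags, user_flags = split_at_separator(CMD)
print('--job-dir' in gcloud_flags)  # True
print(user_flags[0])                # --data_dir
```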

Source: https://stackoverflow.com/questions/43072130/error-after-running-a-job-in-google-cloud-ml