1. Planning

| Role | IP | Hostname |
| --- | --- | --- |
| master/etcd | 10.0.0.115 | master |
| node1/master/etcd | 10.0.0.116 | node1 |
| node2/master/etcd | 10.0.0.117 | node2 |
| node3 | 10.0.0.118 | node3 |

2. Base environment setup

2.1 Set the hostname

hostnamectl set-hostname XXXX

2.2 Set up passwordless SSH

Run on master:

[root@master .ssh]# ssh-keygen -t rsa

[root@master .ssh]# cat id_rsa.pub >authorized_keys

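[root@master .ssh]# scp id_rsa* 10.0.0.206:/root/.ssh/ ## the scp targets here differ from the plan in section 1; substitute your own node IPs

[root@master .ssh]# scp id_rsa* 10.0.0.208:/root/.ssh/

[root@node1 .ssh]# cat id_rsa.pub >authorized_keys ## run this on both nodes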

[root@node2 .ssh]# cat id_rsa.pub >authorized_keys

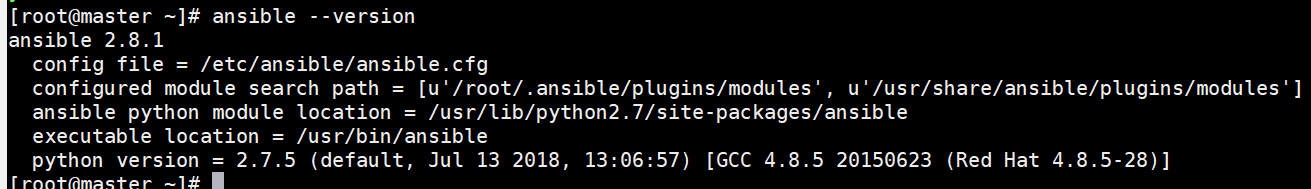

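2.3 Install Ansible (optional, but convenient for distributing files)

Ansible only needs to be installed on the master node. Later steps are run from this machine, and the generated files are then distributed to the relevant nodes.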

yum install -y epel-release

yum install ansible -y

[root@master ~]# ansible --version

Define the host groups:

[root@master ~]# cat /etc/ansible/hosts

[k8s-master]

10.0.0.115

10.0.0.116

10.0.0.117

[k8s-all]

10.0.0.115

10.0.0.116

10.0.0.117

10.0.0.118

[k8s-node]

10.0.0.116

10.0.0.117

10.0.0.118

[root@master ~]# ansible all -m ping

2.4 Disable the firewall/SELinux

[root@master ~]# ansible all -m shell -a 'systemctl stop firewalld'
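
The heading also mentions SELinux, but only the firewalld command is shown. A minimal sketch of the SELinux part, assuming a CentOS-style /etc/selinux/config; the helper name `set_selinux_disabled` is hypothetical, and the demo edits a temporary file rather than the real one:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the SELINUX= line of a config file to "disabled".
# The pattern requires "=" right after SELINUX, so SELINUXTYPE= is left alone.
set_selinux_disabled() {
    local cfg="$1"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Demo on a scratch file so nothing on the host is changed.
demo_cfg="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_cfg"
set_selinux_disabled "$demo_cfg"
selinux_line="$(grep '^SELINUX=' "$demo_cfg")"
selinux_type_line="$(grep '^SELINUXTYPE=' "$demo_cfg")"
echo "$selinux_line"
rm -f "$demo_cfg"
```

On a real node the function would be pointed at /etc/selinux/config; the file change only takes effect after a reboot, and `setenforce 0` switches the running system to permissive mode in the meantime.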

2.5 Kernel parameters

[root@master ~]# ansible all -m shell -a 'cat /proc/sys/net/bridge/bridge-nf-call-iptables'

A value of 1 is fine; if it is 0, change it as follows.

vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#vm.swappiness=0

# apply the settings

sysctl -p
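
Editing the file by hand works, but the change can also be made repeatable. The sketch below is one way to do it, not the author's method: `ensure_sysctl` is a hypothetical helper that replaces a key if it is present and appends it otherwise, and the demo runs against a temporary file instead of /etc/sysctl.conf.

```shell
#!/usr/bin/env bash
# ensure_sysctl FILE KEY VALUE: set "KEY = VALUE" in FILE exactly once.
# Dots in KEY are treated as regex wildcards, which is harmless for these keys.
ensure_sysctl() {
    local file="$1" key="$2" value="$3"
    if grep -q "^${key}[[:space:]]*=" "$file"; then
        sed -i "s|^${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

conf="$(mktemp)"
echo 'net.ipv4.ip_forward = 0' > "$conf"

# Run twice to show that a second pass does not duplicate lines.
for pass in 1 2; do
    ensure_sysctl "$conf" net.ipv4.ip_forward 1
    ensure_sysctl "$conf" net.bridge.bridge-nf-call-ip6tables 1
    ensure_sysctl "$conf" net.bridge.bridge-nf-call-iptables 1
done

sysctl_lines="$(wc -l < "$conf" | tr -d ' ')"
sysctl_first="$(head -n 1 "$conf")"
cat "$conf"
rm -f "$conf"
```

If /proc/sys/net/bridge does not exist at all, the br_netfilter kernel module is not loaded; `modprobe br_netfilter` creates the entries before `sysctl -p` is run.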

2.6 Time synchronization

[root@master ~]# ansible all -m yum -a "name=ntpdate state=latest"

ansible k8s-all -m cron -a "name='k8s cluster crontab' minute=*/30 hour=* day=* month=* weekday=* job='ntpdate ntp1.aliyun.com >/dev/null 2>&1'"

2.7 Create the cluster directories

[root@master ~]# ansible all -m file -a 'path=/etc/kubernetes/ssl state=directory'

[root@master ~]# ansible all -m file -a 'path=/etc/kubernetes/config state=directory'

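## directories needed on the k8s-master01 node

[root@k8s-master01 ~]# mkdir /opt/k8s/{certs,cfg,unit} -p

/etc/kubernetes/ssl #directory for the certificates the cluster uses

/etc/kubernetes/config #where each cluster component loads its configuration files from

/opt/k8s/certs/ #working directory for creating cluster certificates

/opt/k8s/cfg/ #working directory for creating component configuration files

/opt/k8s/unit/ #working directory for creating component unit (startup) files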

3. Create certificates

The following components use certificates:

- etcd: uses ca.pem, etcd-key.pem, and etcd.pem (etcd's client-facing service and its peer-to-peer communication share one set of certificates)

- kube-apiserver: uses ca.pem, ca-key.pem, kube-apiserver-key.pem, and kube-apiserver.pem

- kubelet: uses ca.pem and ca-key.pem

- kube-proxy: uses ca.pem, kube-proxy-key.pem, and kube-proxy.pem

- kubectl: uses ca.pem, admin-key.pem, and admin.pem

- kube-controller-manager: uses ca-key.pem, ca.pem, kube-controller-manager.pem, and kube-controller-manager-key.pem

- kube-scheduler: uses ca-key.pem, ca.pem, kube-scheduler-key.pem, and kube-scheduler.pem

3.1 Install cfssl

The certificates can be generated on any node; here this is done on the master host. They only need to be created once: when a new node is added to the cluster later, just copy the certificates under /etc/kubernetes/ssl to it.

[root@k8s-master01 ~]# mkdir k8s/cfssl -p
[root@k8s-master01 ~]# cd k8s/cfssl/
[root@k8s-master01 cfssl]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
[root@k8s-master01 cfssl]# chmod +x cfssl_linux-amd64
[root@k8s-master01 cfssl]# cp cfssl_linux-amd64 /usr/local/bin/cfssl
[root@k8s-master01 cfssl]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
[root@k8s-master01 cfssl]# chmod +x cfssljson_linux-amd64
[root@k8s-master01 cfssl]# cp cfssljson_linux-amd64 /usr/local/bin/cfssljson
[root@k8s-master01 cfssl]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
[root@k8s-master01 cfssl]# chmod +x cfssl-certinfo_linux-amd64
[root@k8s-master01 cfssl]# cp cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

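3.2 Create the CA root certificate

Maintaining several CAs is too cumbersome, so a single CA certificate is used to sign the certificates of all other cluster components.

This file holds the configuration used later when signing the etcd, kubernetes, and other certificates.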

vim /opt/k8s/certs/ca-config.json

{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}

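Notes:

ca-config.json: several profiles can be defined, each with its own expiry, usages, and other parameters; a specific profile is selected later when signing a certificate.

signing: the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE.

server auth: a client can use this CA to verify the certificate presented by a server.

client auth: a server can use this CA to verify the certificate presented by a client.

expiry: the certificate lifetime. It is set to 10 years here; shorten it if security matters more to you.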
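
The 10-year figure follows directly from the "87600h" value in ca-config.json. A small sketch of the conversion, assuming 365-day years; `hours_to_years` is a name made up for this example:

```shell
#!/usr/bin/env bash
# hours_to_years EXPIRY: convert a cfssl-style "<N>h" expiry to whole years.
hours_to_years() {
    local hours="${1%h}"          # strip the trailing "h"
    echo $(( hours / 24 / 365 ))
}

ca_years="$(hours_to_years 87600h)"
one_year="$(hours_to_years 8760h)"
echo "87600h is ${ca_years} years; 8760h is ${one_year} year"
```

To issue shorter-lived certificates, lower the "expiry" of the profile: 8760h corresponds to one year.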

3.3 Create the CA certificate signing request template

vim /opt/k8s/certs/ca-csr.json

{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

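3.4 Generate the CA certificate, private key, and CSR

This command generates the files needed to run the CA: ca-key.pem (private key) and ca.pem (certificate). It also generates ca.csr (certificate signing request), which is used for cross-signing or re-signing.

[root@master cfssl]# cd /opt/k8s/certs/

Commands to install cfssl: ## already done in 3.1; skip this step and go straight to certificate generation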

[root@master certs]# curl -s -L -o /bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@master certs]# curl -s -L -o /bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@master certs]# curl -s -L -o /bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@master certs]# chmod +x /bin/cfssl*

Generate the certificate:

[root@master certs]# cfssl gencert -initca /opt/k8s/certs/ca-csr.json | cfssljson -bare ca
2019/06/26 17:24:59 [INFO] generating a new CA key and certificate from CSR
2019/06/26 17:24:59 [INFO] generate received request
2019/06/26 17:24:59 [INFO] received CSR
2019/06/26 17:24:59 [INFO] generating key: rsa-2048
2019/06/26 17:25:00 [INFO] encoded CSR
2019/06/26 17:25:00 [INFO] signed certificate with serial number 585032203412954806850533434549466645110614102863

3.5 Distribute the certificates

Distribute them to every node.

[root@master certs]# ansible all -m copy -a 'src=/opt/k8s/certs/ca.csr dest=/etc/kubernetes/ssl/'

[root@master certs]# ansible all -m copy -a 'src=/opt/k8s/certs/ca-key.pem dest=/etc/kubernetes/ssl/'

[root@master certs]# ansible all -m copy -a 'src=/opt/k8s/certs/ca.pem dest=/etc/kubernetes/ssl/'

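4. Deploy etcd

etcd is the most important component of a k8s cluster: it stores all of the cluster's state, so if etcd goes down, the cluster goes down. Here etcd is deployed on the master nodes. An etcd cluster elects its leader with the Raft algorithm, and because Raft decisions need votes from a majority of members, an odd number of members is recommended, typically 3, 5, or 7.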
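
The majority rule behind the odd-number recommendation can be checked with a little arithmetic. This is only an illustrative sketch; the function names are made up for the example:

```shell
#!/usr/bin/env bash
# A Raft cluster of N members needs floor(N/2)+1 votes to make a decision.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while a majority is still reachable.
etcd_fault_tolerance() {
    echo $(( ($1 - 1) / 2 ))
}

for members in 3 4 5 7; do
    printf 'members=%d quorum=%d tolerated_failures=%d\n' \
        "$members" "$(etcd_quorum "$members")" "$(etcd_fault_tolerance "$members")"
done
```

A 4-member cluster tolerates the same single failure as a 3-member one, which is why even sizes add cost without adding resilience.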

4.1 Download the etcd binaries

[root@master ~]# cd k8s/

[root@master k8s]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.12/etcd-v3.3.12-linux-amd64.tar.gz

[root@master k8s]# tar -xf etcd-v3.3.12-linux-amd64.tar.gz

[root@master k8s]# cd etcd-v3.3.12-linux-amd64

## etcdctl is the command-line client for etcd

[root@master etcd-v3.3.12-linux-amd64]# cp etcd /usr/local/bin/

[root@master etcd-v3.3.12-linux-amd64]# cd /usr/local/bin/

[root@master bin]# chmod 0755 etcd

[root@master bin]# ll -d etcd

-rwxr-xr-x 1 root root 19237536 Jun 26 19:34 etcd

## copy the binaries to all three masters

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/root/k8s/etcd-v3.3.12-linux-amd64/etcd dest=/usr/local/bin/ mode=0755'

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/root/k8s/etcd-v3.3.12-linux-amd64/etcdctl dest=/usr/local/bin/ mode=0755'

4.2 Create the etcd certificate request template

vim /opt/k8s/certs/etcd-csr.json

{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.0.0.115",
    "10.0.0.116",
    "10.0.0.117"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

Note: the IPs in hosts are the etcd node IPs plus the local 127 address. In production it is best to reserve a few extra IPs in the hosts list, so that adding members later or migrating after a failure does not require regenerating the certificate.
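
When spare addresses are reserved, the hosts array is easier to generate than to type. A minimal sketch in plain bash; `csr_hosts_json` is a hypothetical helper, and the output still has to be pasted into etcd-csr.json by hand:

```shell
#!/usr/bin/env bash
# csr_hosts_json IP...: print the arguments as a JSON array of strings.
csr_hosts_json() {
    local first=1 ip
    printf '['
    for ip in "$@"; do
        if [ "$first" -eq 1 ]; then
            first=0
        else
            printf ', '
        fi
        printf '"%s"' "$ip"
    done
    printf ']\n'
}

# The four addresses used in this guide.
etcd_hosts="$(csr_hosts_json 127.0.0.1 10.0.0.115 10.0.0.116 10.0.0.117)"
echo "$etcd_hosts"
```

Reserved addresses are simply appended to the argument list before the certificate is generated.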

4.3 Generate the certificate and private key

Pay attention to the certificate paths used in the command.

cd /opt/k8s/certs/

cfssl gencert -ca=/opt/k8s/certs/ca.pem \
  -ca-key=/opt/k8s/certs/ca-key.pem \
  -config=/opt/k8s/certs/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2019/06/26 19:40:18 [INFO] generate received request
2019/06/26 19:40:18 [INFO] received CSR
2019/06/26 19:40:18 [INFO] generating key: rsa-2048
2019/06/26 19:40:19 [INFO] encoded CSR
2019/06/26 19:40:19 [INFO] signed certificate with serial number 247828842085890133751587256942517588598704448904
2019/06/26 19:40:19 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").

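4.4 Inspect the certificate

etcd.csr is an intermediate file used during signing. If you do not plan to sign the certificate yourself and instead have a third-party CA sign it, you only need to submit etcd.csr to that CA.

4.5 Distribute the certificates

Normally only three files need to be copied: ca.pem (already in place), etcd-key.pem, and etcd.pem. The cp below covers only the local master; etcd.conf and, later, kube-apiserver.conf reference these paths on all three masters, so the other two masters need the same two files.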

[root@master certs]# cp etcd-key.pem etcd.pem /etc/kubernetes/ssl/

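4.6 Create the etcd configuration file

etcd.conf references the certificates, so the etcd user must be able to read them; otherwise etcd reports that it cannot load the certificates. Mode 644 is sufficient.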

[root@master certs]# cd /opt/k8s/cfg/

[root@master cfg]# vim etcd.conf

#[member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd"
#ETCD_SNAPSHOT_COUNTER="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="http://10.0.0.115:2380"
ETCD_LISTEN_CLIENT_URLS="http://10.0.0.115:2379,http://127.0.0.1:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
ETCD_AUTO_COMPACTION_RETENTION="1"
ETCD_QUOTA_BACKEND_BYTES="8589934592"
ETCD_MAX_REQUEST_BYTES="5242880"
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.115:2380"
# if you use different ETCD_NAME (e.g. test),
# set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd01=http://10.0.0.115:2380,etcd02=http://10.0.0.116:2380,etcd03=http://10.0.0.117:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.115:2379"
#[security]
CLIENT_CERT_AUTH="true"
ETCD_CA_FILE="/etc/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/etc/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/kubernetes/ssl/etcd-key.pem"
PEER_CLIENT_CERT_AUTH="true"
ETCD_PEER_CA_FILE="/etc/kubernetes/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/etc/kubernetes/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/kubernetes/ssl/etcd-key.pem"

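Parameter notes:

- ETCD_NAME: the member name of this etcd node; it must be unique within the cluster. The hostname or machine-id can be used.

- ETCD_LISTEN_PEER_URLS: the address used for communication with the other members; different on each node. It must be an IP; domain names do not work.

- ETCD_LISTEN_CLIENT_URLS: the address on which the client service is offered, usually this node's own address. Domain names do not work.

- ETCD_INITIAL_ADVERTISE_PEER_URLS: this node's peer address, advertised to the other cluster members

- ETCD_INITIAL_CLUSTER: all members of the cluster, each written as member name plus peer URL, that is ETCD_NAME=ETCD_INITIAL_ADVERTISE_PEER_URLS, for example etcd01=http://10.0.0.115:2380

- ETCD_ADVERTISE_CLIENT_URLS: this member's client URLs, advertised to the rest of the cluster; domain names are allowed

- ETCD_AUTO_COMPACTION_RETENTION: auto-compaction retention for the MVCC key-value store, in hours; 0 disables auto compaction

- ETCD_QUOTA_BACKEND_BYTES: size limit of the etcd database; the default is 2G and 8G is recommended, which is the value set here

- ETCD_MAX_REQUEST_BYTES: maximum size of a client request the server accepts; the default is 1.5M and 10M is the official recommendation. It is set to 5M here; choose a value that fits your workload.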
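
The two byte values in etcd.conf are easier to read once converted. A quick check with shell arithmetic, assuming binary units (1 GiB = 1024^3 bytes, 1 MiB = 1024^2 bytes); the variable names are only for this example:

```shell
#!/usr/bin/env bash
# ETCD_QUOTA_BACKEND_BYTES="8589934592" corresponds to 8 GiB.
quota_bytes=$(( 8 * 1024 * 1024 * 1024 ))

# ETCD_MAX_REQUEST_BYTES="5242880" corresponds to 5 MiB.
max_request_bytes=$(( 5 * 1024 * 1024 ))

echo "quota=${quota_bytes} max_request=${max_request_bytes}"
```

The same expressions can be reused when choosing other sizes, for example `$(( 10 * 1024 * 1024 ))` for a 10 MiB request limit.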

4.6 Distribute etcd.conf to the three masters

[root@k8s-master01 config]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/etcd.conf dest=/etc/kubernetes/config/etcd.conf'

## log in to each host and change the IPs in the file to that host's own IP

For example:

[root@node1 ~]# cat /etc/kubernetes/config/etcd.conf
#[member]
ETCD_NAME="etcd02" ## must be unique; no duplicates
ETCD_DATA_DIR="/var/lib/etcd"
#ETCD_SNAPSHOT_COUNTER="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="http://10.0.0.116:2380" ## change to this host's IP
ETCD_LISTEN_CLIENT_URLS="http://10.0.0.116:2379,http://127.0.0.1:2379" ## change to this host's IP
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
ETCD_AUTO_COMPACTION_RETENTION="1"
ETCD_QUOTA_BACKEND_BYTES="8589934592"
ETCD_MAX_REQUEST_BYTES="5242880"
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.116:2380" ## change to this host's IP
# if you use different ETCD_NAME (e.g. test),
# set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd01=http://10.0.0.115:2380,etcd02=http://10.0.0.116:2380,etcd03=http://10.0.0.117:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.116:2379" ## change to this host's IP
#[security]
CLIENT_CERT_AUTH="true"
ETCD_CA_FILE="/etc/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/etc/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/kubernetes/ssl/etcd-key.pem"
PEER_CLIENT_CERT_AUTH="true"
ETCD_PEER_CA_FILE="/etc/kubernetes/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/etc/kubernetes/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/kubernetes/ssl/etcd-key.pem"
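
The per-host edits can also be scripted instead of made by hand. A minimal sketch using sed, not the author's procedure: `render_etcd_conf` is a hypothetical helper, and the demo works on a four-line excerpt of the file in a temporary location. It swaps the member name and replaces the template IP everywhere except on the ETCD_INITIAL_CLUSTER line, which must stay identical on every member.

```shell
#!/usr/bin/env bash
# render_etcd_conf TEMPLATE NAME IP: print TEMPLATE adapted for one member.
# Assumes the template was written for 10.0.0.115, as in this guide.
render_etcd_conf() {
    local template="$1" name="$2" ip="$3"
    sed -e 's|^ETCD_NAME=.*|ETCD_NAME="'"${name}"'"|' \
        -e '/^ETCD_INITIAL_CLUSTER=/!s|10\.0\.0\.115|'"${ip}"'|g' \
        "$template"
}

tpl="$(mktemp)"
cat > "$tpl" <<'EOF'
ETCD_NAME="etcd01"
ETCD_LISTEN_PEER_URLS="http://10.0.0.115:2380"
ETCD_INITIAL_CLUSTER="etcd01=http://10.0.0.115:2380,etcd02=http://10.0.0.116:2380,etcd03=http://10.0.0.117:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.115:2379"
EOF

rendered="$(render_etcd_conf "$tpl" etcd02 10.0.0.116)"
echo "$rendered"
rm -f "$tpl"
```

Redirecting the output into a per-host file and copying that file to /etc/kubernetes/config/etcd.conf on the matching master would replace the manual login step.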

4.7 Create the etcd.service unit file and start etcd

[root@k8s-master01 ~]# vim /opt/k8s/unit/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/kubernetes/config/etcd.conf
# User=etcd assumes an etcd system user exists and can write to /var/lib/etcd; creating it is not shown above
User=etcd
# set GOMAXPROCS to number of processors
ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/local/bin/etcd --name=\"${ETCD_NAME}\" --data-dir=\"${ETCD_DATA_DIR}\" --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\""
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/etcd.service dest=/usr/lib/systemd/system/etcd.service'
[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'
[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable etcd'
[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start etcd'

[root@sunyun certs]# ansible k8s-master -m shell -a 'ps -efww|grep etcd'

4.8 Verify the cluster

[root@sunyun certs]# etcdctl cluster-health

[root@sunyun certs]# etcdctl member list

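5. Deploy kubectl

Binary installation is used here: after downloading and unpacking, copy each component's binary to the designated nodes.

Master components: kube-apiserver, etcd, kube-controller-manager, kube-scheduler, kubectl

Node components: kubelet, kube-proxy, docker, coredns, calico

5.1 Deploy the master components

5.1.1 Download the Kubernetes binary package

Unpack the downloaded archive and distribute the binaries to the designated locations on the master and node hosts.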

[root@sunyun ~]# cd k8s/

[root@sunyun k8s]# wget https://storage.googleapis.com/kubernetes-release/release/v1.14.1/kubernetes-server-linux-amd64.tar.gz

[root@sunyun k8s]# tar -xf kubernetes-server-linux-amd64.tar.gz

[root@sunyun k8s]# cd kubernetes

[root@sunyun kubernetes]# ll

total 34088

drwxr-xr-x. 2 root root 6 Apr 9 01:51 addons

-rw-r--r--. 1 root root 28004039 Apr 9 01:51 kubernetes-src.tar.gz

-rw-r--r--. 1 root root 6899720 Apr 9 01:51 LICENSES

drwxr-xr-x. 3 root root 17 Apr 9 01:46 server

## copy the master binaries

[root@sunyun bin]# cd /root/k8s/kubernetes/server/bin

[root@sunyun bin]# scp ./{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 10.0.0.115:/usr/local/bin/

[root@sunyun bin]# scp ./{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 10.0.0.116:/usr/local/bin/

[root@sunyun bin]# scp ./{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 10.0.0.117:/usr/local/bin/

## copy the node binaries

[root@sunyun bin]# scp ./{kube-proxy,kubelet} 10.0.0.116:/usr/local/bin/

[root@sunyun bin]# scp ./{kube-proxy,kubelet} 10.0.0.117:/usr/local/bin/

[root@sunyun bin]# scp ./{kube-proxy,kubelet} 10.0.0.118:/usr/local/bin/
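
The six scp commands follow one pattern: each host group gets a fixed set of binaries. A dry-run sketch that only prints the equivalent commands; the variable and function names are invented for the example, and the host lists are taken from the commands above:

```shell
#!/usr/bin/env bash
# Dry run: print the scp commands instead of executing them.
MASTERS="10.0.0.115 10.0.0.116 10.0.0.117"
NODES="10.0.0.116 10.0.0.117 10.0.0.118"
MASTER_BINS="kube-apiserver kube-controller-manager kube-scheduler kubectl kubeadm"
NODE_BINS="kube-proxy kubelet"

# plan_copy "<hosts>" "<binaries>": one scp line per host/binary pair.
plan_copy() {
    local hosts="$1" bins="$2" host bin
    for host in $hosts; do
        for bin in $bins; do
            echo "scp ./${bin} ${host}:/usr/local/bin/"
        done
    done
}

copy_plan="$(plan_copy "$MASTERS" "$MASTER_BINS"; plan_copy "$NODES" "$NODE_BINS")"
echo "$copy_plan"
```

Running the printed lines from /root/k8s/kubernetes/server/bin performs the same copies as the commands above.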

5.1.2 Create the admin certificate

kubectl is used for day-to-day management of the K8S cluster. To do that it has to communicate with the k8s components, which requires a certificate.

kubectl is deployed on the three master nodes.

[root@sunyun ~]# vim /opt/k8s/certs/admin-csr.json

{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}

5.1.3 Generate the admin certificate and private key

[root@sunyun ~]# cd /opt/k8s/certs/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

5.1.4 Distribute the certificates

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/admin-key.pem dest=/etc/kubernetes/ssl/'

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/admin.pem dest=/etc/kubernetes/ssl/'

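5.1.5 Generate the kubeconfig file

The following steps generate a config file under .kube in the home directory. kubectl uses this file to talk to the API server, so to operate the cluster with kubectl from another node, copy the file to that node.

## set cluster parameters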

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443

# set client credentials

[root@k8s-master01 ~]# kubectl config set-credentials admin \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/admin-key.pem

# set context parameters

[root@k8s-master01 ~]# kubectl config set-context admin@kubernetes \

--cluster=kubernetes \

--user=admin

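# set the default context

[root@k8s-master01 ~]# kubectl config use-context admin@kubernetes

The steps above generate ~/.kube/config. In later cluster operations the apiserver authenticates requests made with the credentials in this file; the admin user created here has full permissions on the Kubernetes cluster (cluster administrator).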

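6. Deploy kube-apiserver

Notes:

1. kube-apiserver is the data bus and data hub of the whole k8s cluster; it exposes HTTP REST interfaces for create, delete, update, query, and watch operations on the cluster.

2. kube-apiserver is stateless. A client such as kubelet can list several api-servers with the "--api-servers" startup flag, but only the first one takes effect, so this does not provide high availability.

6.1 Create the user that runs the k8s cluster components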

[root@sunyun ~]# ansible k8s-master -m group -a 'name=kube'

[root@sunyun ~]# ansible k8s-master -m user -a 'name=kube group=kube comment="Kubernetes user" shell=/sbin/nologin createhome=no'

6.2 Create the kube-apiserver certificate request file

This is the certificate required by the apiserver's TLS-authenticated port.

[root@sunyun ~]# vim /opt/k8s/certs/kube-apiserver-csr.json

{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.0.0.115",
    "10.0.0.116",
    "10.0.0.117",
    "10.254.0.1",
    "localhost",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

The hosts list contains the master node IPs and the local addresses. 10.254.0.1 is the cluster IP of the API service, normally the first IP of the configured service network range.
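
The relationship between the service range and 10.254.0.1 can be reproduced with integer arithmetic. A sketch in bash, assuming the service range is 10.254.0.0/16 as configured later in kube-apiserver.conf; `first_service_ip` is a hypothetical helper, not part of any Kubernetes tooling:

```shell
#!/usr/bin/env bash
# first_service_ip CIDR: print the network address plus one.
# Assumes the address part of CIDR is already the network address
# (true for 10.254.0.0/16) and that octets have no leading zeros.
first_service_ip() {
    local base="${1%/*}" a b c d n
    IFS=. read -r a b c d <<< "$base"
    n=$(( (a << 24) + (b << 16) + (c << 8) + d + 1 ))
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

api_service_ip="$(first_service_ip 10.254.0.0/16)"
echo "$api_service_ip"
```

If a different --service-cluster-ip-range is chosen, the address printed for that range is the one to put in the hosts list in place of 10.254.0.1.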

6.3 Generate the kubernetes certificate and private key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

2019/06/28 18:33:47 [INFO] generate received request

2019/06/28 18:33:47 [INFO] received CSR

2019/06/28 18:33:47 [INFO] generating key: rsa-2048

2019/06/28 18:33:47 [INFO] encoded CSR

2019/06/28 18:33:47 [INFO] signed certificate with serial number 283642706418045699183694890122146820794624697078

2019/06/28 18:33:47 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

6.4 Distribute the certificates

[root@sunyun ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-apiserver.pem dest=/etc/kubernetes/ssl'

[root@sunyun ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-apiserver-key.pem dest=/etc/kubernetes/ssl'

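6.5 Create the token file used by kube-apiserver clients

When a kubelet starts, it sends registration information to kube-apiserver, and in a mutual-TLS environment that request has to be authenticated. Generating a certificate and key for each kubelet by hand is workable when the nodes are few and fixed in number; the TLS Bootstrapping mechanism lets a large number of nodes register with kube-apiserver automatically.

How it works: on first start the kubelet sends a TLS Bootstrapping request to kube-apiserver, which checks whether the token in the request matches the one in its configured token.csv. If it matches, a certificate and key are issued to the kubelet automatically.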

[root@sunyun ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

3394045ede72aad6cb39ebe9b1b92b90

[root@sunyun ~]# vim /opt/k8s/cfg/bootstrap-token.csv

3394045ede72aad6cb39ebe9b1b92b90,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
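
The two manual steps (generate a token, then type it into the CSV) can be combined. A sketch that builds the same line format, token, user name, UID, and quoted group; it uses `od -t x1` (one byte per group) instead of `-t x`, which still yields 32 hex characters. The function and variable names are illustrative only:

```shell
#!/usr/bin/env bash
# new_bootstrap_token: print 16 random bytes as 32 lowercase hex characters.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

token="$(new_bootstrap_token)"

# Same field layout as the bootstrap-token.csv line above.
token_line="${token},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
echo "$token_line"
```

Redirecting the echoed line into /opt/k8s/cfg/bootstrap-token.csv produces the file that is distributed in the next step.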

## distribute the token file

[root@sunyun ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/bootstrap-token.csv dest=/etc/kubernetes/config/'

6.6 Create the apiserver audit policy file

[root@sunyun ~]# vi /opt/k8s/cfg/audit-policy.yaml

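# Log all requests at the Metadata level.
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
- level: Metadata

## distribute the audit policy file

[root@sunyun ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/audit-policy.yaml dest=/etc/kubernetes/config/'

6.7 Edit the main kube-apiserver configuration file

This file holds the apiserver startup parameters. Make sure the log directory referenced in the parameters exists and that the kube user has access to it.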

[root@k8s-master01 ~]# vim /opt/k8s/cfg/kube-apiserver.conf

###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#
# The address on the local server to listen to.
KUBE_API_ADDRESS="--advertise-address=10.0.0.115 --bind-address=0.0.0.0"
# The port on the local server to listen on.
KUBE_API_PORT="--secure-port=6443"
# Port minions listen on
# KUBELET_PORT="--kubelet-port=10250"
# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.115:2379,http://10.0.0.116:2379,http://10.0.0.117:2379"
# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
# default admission control policies
KUBE_ADMISSION_CONTROL="--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,Priority,ResourceQuota"
# Add your own!
KUBE_API_ARGS=" --allow-privileged=true \
 --anonymous-auth=false \
 --alsologtostderr \
 --apiserver-count=3 \
 --audit-log-maxage=30 \
 --audit-log-maxbackup=3 \
 --audit-log-maxsize=100 \
 --audit-log-path=/var/log/kube-audit/audit.log \
 --audit-policy-file=/etc/kubernetes/config/audit-policy.yaml \
 --authorization-mode=Node,RBAC \
 --client-ca-file=/etc/kubernetes/ssl/ca.pem \
 --token-auth-file=/etc/kubernetes/config/bootstrap-token.csv \
 --enable-bootstrap-token-auth \
 --enable-garbage-collector \
 --enable-logs-handler \
 --endpoint-reconciler-type=lease \
 --etcd-cafile=/etc/kubernetes/ssl/ca.pem \
 --etcd-certfile=/etc/kubernetes/ssl/etcd.pem \
 --etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem \
 --etcd-compaction-interval=0s \
 --event-ttl=168h0m0s \
 --kubelet-http=true \
 --kubelet-certificate-authority=/etc/kubernetes/ssl/ca.pem \
 --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
 --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
 --kubelet-timeout=3s \
 --runtime-config=api/all=true \
 --service-node-port-range=30000-50000 \
 --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
 --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
 --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
 --v=2"

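Notes on selected parameters:

- KUBE_API_ADDRESS: the IP address on which the apiserver is advertised to cluster members. It must be reachable by the rest of the cluster. If it is empty, --bind-address is used; if --bind-address is not specified either, the host's default interface address is used.

- KUBE_API_PORT: the port serving HTTPS with authentication and authorization. If set to 0, HTTPS is not served. (Default 6443)

- KUBE_ETCD_SERVERS: the list of etcd servers to connect to

- KUBE_ADMISSION_CONTROL: the ordered list of admission control plugins that gate resources entering the cluster

- apiserver-count: the number of apiservers in the cluster

- KUBE_SERVICE_ADDRESSES: the CIDR range from which service cluster IPs are allocated. It must not overlap with any IP range assigned to pods on the nodes.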
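
The no-overlap requirement for the service range can be checked before the cluster is started. A sketch in bash: the pod range 10.244.0.0/16 is a hypothetical value chosen for the example (this guide has not set one yet), and all function names are made up.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# cidr_bounds CIDR: print "<first> <last>" addresses of the range as integers.
cidr_bounds() {
    local ip="${1%/*}" bits="${1#*/}" size first
    size=$(( 1 << (32 - bits) ))
    first=$(( $(ip_to_int "$ip") & ~(size - 1) ))
    echo "$first $(( first + size - 1 ))"
}

# cidrs_overlap CIDR1 CIDR2: succeed if the two ranges share any address.
cidrs_overlap() {
    local s1 e1 s2 e2
    read -r s1 e1 <<< "$(cidr_bounds "$1")"
    read -r s2 e2 <<< "$(cidr_bounds "$2")"
    [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

SERVICE_CIDR="10.254.0.0/16"   # from KUBE_SERVICE_ADDRESSES
POD_CIDR="10.244.0.0/16"       # hypothetical pod range, not from this guide

if cidrs_overlap "$SERVICE_CIDR" "$POD_CIDR"; then
    overlap_result="overlap"
else
    overlap_result="disjoint"
fi
echo "$SERVICE_CIDR vs $POD_CIDR: $overlap_result"
```

Replace POD_CIDR with the range actually handed to the network plugin; an "overlap" result means one of the two ranges has to change.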

## distribute the parameter file, then change the IPs in it to each host's own IP

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-apiserver.conf dest=/etc/kubernetes/config/'

## create the log directory and set its ownership

[root@k8s-master01 ~]# ansible k8s-master -m file -a 'path=/var/log/kube-audit state=directory owner=kube group=kube'

6.8 Create the kube-apiserver.service unit file

vim /opt/k8s/unit/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
After=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/config/kube-apiserver.conf
User=kube
ExecStart=/usr/local/bin/kube-apiserver \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_ETCD_SERVERS \
  $KUBE_API_ADDRESS \
  $KUBE_API_PORT \
  $KUBELET_PORT \
  $KUBE_ALLOW_PRIV \
  $KUBE_SERVICE_ADDRESSES \
  $KUBE_ADMISSION_CONTROL \
  $KUBE_API_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

## distribute the apiserver unit file

[root@k8s-master01 ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-apiserver.service dest=/usr/lib/systemd/system/'

6.9 Start the kube-apiserver service

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl enable kube-apiserver'

[root@k8s-master01 ~]# ansible k8s-master -m shell -a 'systemctl start kube-apiserver'

[root@sunyun ~]# ansible k8s-master -m shell -a 'ps -efww|grep kube-apiserver'

[root@sunyun ~]# ansible k8s-master -m shell -a 'netstat -an|grep 6443'

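7. Deploy kube-controller-manager

1. The Kubernetes controller manager is a daemon that watches the shared state of the cluster through the apiserver and makes changes that move the current state toward the desired state.

2. With multiple masters, the kube-controller-manager instances run in an active/standby relationship; leader election is enabled by setting the startup flag "--leader-elect=true".

7.1 Create the kube-controller-manager certificate signing request

1. This is the certificate kube-controller-manager uses to connect to the apiserver; its own port 10257 uses the same certificate.

2. kube-controller-manager and kube-apiserver communicate using mutual TLS authentication.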

[root@sunyun ~]# vim /opt/k8s/certs/kube-controller-manager-csr.json

{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "127.0.0.1",
    "10.0.0.115",
    "10.0.0.116",
    "10.0.0.117",
    "localhost"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "system:kube-controller-manager",
      "OU": "System"
    }
  ]
}

Notes:

1. The hosts list contains the IPs of all kube-controller-manager nodes.

2. CN is system:kube-controller-manager and O is system:kube-controller-manager. The ClusterRoleBinding system:kube-controller-manager, predefined by kube-apiserver's RBAC, binds the user system:kube-controller-manager to the ClusterRole system:kube-controller-manager.

7.2 Generate the kube-controller-manager certificate and private key

[root@sunyun ~]# cd /opt/k8s/certs/

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2019/06/28 20:22:24 [INFO] generate received request

2019/06/28 20:22:24 [INFO] received CSR

2019/06/28 20:22:24 [INFO] generating key: rsa-2048

2019/06/28 20:22:24 [INFO] encoded CSR

2019/06/28 20:22:24 [INFO] signed certificate with serial number 463898038938255030318365806105397829277650185957

2019/06/28 20:22:24 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

7.3 Distribute the certificates

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-controller-manager-key.pem dest=/etc/kubernetes/ssl/'

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-controller-manager.pem dest=/etc/kubernetes/ssl/'

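7.4 Generate the kube-controller-manager.kubeconfig file

## set cluster parameters

## --kubeconfig: path and file name of the kubeconfig file; if it is not set, the default is ~/.kube/config.

## This file is needed later, so the settings are written to a dedicated file.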

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-controller-manager.kubeconfig

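## set client credentials

## --server: the api-server address; if it is not set here, the master can be specified later in the startup scripts

## the authenticated user is "system:kube-controller-manager" from the certificate signed above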

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--kubeconfig=kube-controller-manager.kubeconfig

## set context parameters

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

## set the default context

kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=kube-controller-manager.kubeconfig

## distribute the generated kubeconfig file

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-controller-manager.kubeconfig dest=/etc/kubernetes/config/'

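7.5 Edit the main kube-controller-manager configuration file

The controller manager binds its insecure port 10252 to 127.0.0.1 so that kubectl get cs returns correct results, and exposes its secure port 10257 on 0.0.0.0 for service calls. Because the controller manager now connects to the apiserver's authenticated port 6443, the --use-service-account-credentials option is needed so that it creates a separate service account for each controller (the default system:kube-controller-manager user does not have such broad permissions).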

[root@sunyun certs]# vim /opt/k8s/cfg/kube-controller-manager.conf

###
# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!
KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 \
 --authentication-kubeconfig=/etc/kubernetes/config/kube-controller-manager.kubeconfig \
 --authorization-kubeconfig=/etc/kubernetes/config/kube-controller-manager.kubeconfig \
 --bind-address=0.0.0.0 \
 --cluster-name=kubernetes \
 --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
 --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
 --client-ca-file=/etc/kubernetes/ssl/ca.pem \
 --controllers=*,bootstrapsigner,tokencleaner \
 --deployment-controller-sync-period=10s \
 --experimental-cluster-signing-duration=87600h0m0s \
 --enable-garbage-collector=true \
 --kubeconfig=/etc/kubernetes/config/kube-controller-manager.kubeconfig \
 --leader-elect=true \
 --node-monitor-grace-period=20s \
 --node-monitor-period=5s \
 --port=10252 \
 --pod-eviction-timeout=2m0s \
 --requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \
 --terminated-pod-gc-threshold=50 \
 --tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \
 --tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
 --root-ca-file=/etc/kubernetes/ssl/ca.pem \
 --secure-port=10257 \
 --service-cluster-ip-range=10.254.0.0/16 \
 --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
 --use-service-account-credentials=true \
 --v=2"

## distribute the kube-controller-manager configuration file

ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-controller-manager.conf dest=/etc/kubernetes/config'

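Parameter notes:

- address/bind-address: --bind-address (default 0.0.0.0) is the IP address on which to listen for --secure-port; the associated interface must be reachable by the rest of the cluster and by CLI/web clients. --address is the listen address for the insecure --port.

- cluster-name: the cluster name

- cluster-signing-cert-file/cluster-signing-key-file: the CA certificate and key used to issue cluster-scoped certificates

- controllers: the list of controllers to start. The default "*" enables all on-by-default controllers, which does not include "bootstrapsigner" and "tokencleaner".

- kubeconfig: path to the kubeconfig file holding authorization and master location information

- leader-elect: run a leader election and acquire leadership before executing the main loop

- service-cluster-ip-range: the IP address range of cluster services

7.6 Create the unit file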

[root@sunyun ~]# vim /opt/k8s/unit/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config/kube-controller-manager.conf
User=kube
ExecStart=/usr/local/bin/kube-controller-manager \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_MASTER \
  $KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

## distribute the unit file

ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-controller-manager.service dest=/usr/lib/systemd/system/'

7.7 Start the kube-controller-manager service

[root@sunyun ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@sunyun ~]# ansible k8s-master -m shell -a 'systemctl enable kube-controller-manager'

[root@sunyun ~]# ansible k8s-master -m shell -a 'systemctl start kube-controller-manager'

7.8 Check which host is the leader

[root@sunyun ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

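8. Deploy kube-scheduler

1. kube-scheduler runs as a component on the master nodes. Its main job is to take the unscheduled pods it obtains from kube-apiserver, run them through a series of scheduling algorithms to find the most suitable node, and complete the scheduling by writing a Binding object (which names the pod and the chosen node) to kube-apiserver.

2. Like kube-controller-manager, kube-scheduler uses leader election for high availability. After startup, the instances compete to elect one leader and the others block. When the leader becomes unavailable, the remaining instances hold a new election, which keeps the service available.

In short:

kube-scheduler assigns Pods to nodes in the cluster

It watches kube-apiserver for Pods that have not yet been assigned to a Node

It assigns nodes to these Pods according to the scheduling policy

8.1 Create the kube-scheduler certificate signing request

This is the certificate kube-scheduler uses to connect to the apiserver; its own port 10259 uses the same certificate.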

[root@sunyun ~]# vim /opt/k8s/certs/kube-scheduler-csr.json

{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "10.0.0.115",
    "10.0.0.116",
    "10.0.0.117"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "system:kube-scheduler",
      "OU": "System"
    }
  ]
}

8.2 Generate the kube-scheduler certificate and private key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2019/06/28 21:29:48 [INFO] generate received request

2019/06/28 21:29:48 [INFO] received CSR

2019/06/28 21:29:48 [INFO] generating key: rsa-2048

2019/06/28 21:29:48 [INFO] encoded CSR

2019/06/28 21:29:48 [INFO] signed certificate with serial number 237230903244151644276886567039323221591882479671

2019/06/28 21:29:48 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

8.3 Distribute the certificates

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-scheduler-key.pem dest=/etc/kubernetes/ssl/'

[root@sunyun certs]# ansible k8s-master -m copy -a 'src=/opt/k8s/certs/kube-scheduler.pem dest=/etc/kubernetes/ssl/'

8.4 Generate the kube-scheduler.kubeconfig configuration file

## Set the cluster parameters

kubectl config set-cluster kubernetes \
--certificate-authority=/etc/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=https://127.0.0.1:6443 \
--kubeconfig=kube-scheduler.kubeconfig

## Set the client authentication parameters

kubectl config set-credentials "system:kube-scheduler" \
--client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem \
--client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem \
--embed-certs=true \
--kubeconfig=kube-scheduler.kubeconfig

## Set the context parameters

kubectl config set-context system:kube-scheduler@kubernetes \
--cluster=kubernetes \
--user=system:kube-scheduler \
--kubeconfig=kube-scheduler.kubeconfig

## Set the default context

kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=kube-scheduler.kubeconfig

## Distribute the configuration file

[root@sunyun ~]# ansible k8s-master -m copy -a 'src=/root/kube-scheduler.kubeconfig dest=/etc/kubernetes/config/'
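The same four `kubectl config` steps are repeated for every component that needs a kubeconfig. A rough sketch of wrapping them in one function, assuming the CA path and the local proxy address used throughout this guide; `make_kubeconfig` and the `KUBECTL` override variable are hypothetical names, not part of kubectl.

```shell
#!/bin/sh
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
KUBECTL=${KUBECTL:-kubectl}

# make_kubeconfig USER CERT KEY OUTFILE
# Runs set-cluster, set-credentials, set-context and use-context in order,
# stopping at the first step that fails.
make_kubeconfig() {
    user=$1; cert=$2; key=$3; out=$4
    $KUBECTL config set-cluster kubernetes \
        --certificate-authority=/etc/kubernetes/ssl/ca.pem \
        --embed-certs=true \
        --server=https://127.0.0.1:6443 \
        --kubeconfig="$out" &&
    $KUBECTL config set-credentials "$user" \
        --client-certificate="$cert" \
        --client-key="$key" \
        --embed-certs=true \
        --kubeconfig="$out" &&
    $KUBECTL config set-context "$user@kubernetes" \
        --cluster=kubernetes \
        --user="$user" \
        --kubeconfig="$out" &&
    $KUBECTL config use-context "$user@kubernetes" --kubeconfig="$out"
}

# Example call (left as a comment because it needs kubectl and the certificates):
#   make_kubeconfig system:kube-scheduler \
#       /etc/kubernetes/ssl/kube-scheduler.pem \
#       /etc/kubernetes/ssl/kube-scheduler-key.pem \
#       kube-scheduler.kubeconfig
```

Setting `KUBECTL=echo` before the call prints the four commands instead of running them, which is a cheap way to review them first.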

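8.5 Edit the kube-scheduler core configuration file

Like kube-controller-manager, kube-scheduler binds its insecure port 10251 to the local address 127.0.0.1 and exposes its secure port 10259 on all interfaces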

[root@sunyun ~]# vim /opt/k8s/cfg/kube-scheduler.conf

###
# kubernetes scheduler config

# default config should be adequate

# Add your own!
KUBE_SCHEDULER_ARGS="--address=127.0.0.1 \
    --authentication-kubeconfig=/etc/kubernetes/config/kube-scheduler.kubeconfig \
    --authorization-kubeconfig=/etc/kubernetes/config/kube-scheduler.kubeconfig \
    --bind-address=0.0.0.0 \
    --client-ca-file=/etc/kubernetes/ssl/ca.pem \
    --kubeconfig=/etc/kubernetes/config/kube-scheduler.kubeconfig \
    --requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \
    --secure-port=10259 \
    --leader-elect=true \
    --port=10251 \
    --tls-cert-file=/etc/kubernetes/ssl/kube-scheduler.pem \
    --tls-private-key-file=/etc/kubernetes/ssl/kube-scheduler-key.pem \
    --v=2"

## Distribute the configuration file

ansible k8s-master -m copy -a 'src=/opt/k8s/cfg/kube-scheduler.conf dest=/etc/kubernetes/config'

8.6 Edit the systemd unit file

The unit file must point at the configuration file to load

[root@sunyun ~]# vim /opt/k8s/unit/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler Plugin
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config/kube-scheduler.conf
User=kube
ExecStart=/usr/local/bin/kube-scheduler \
    $KUBE_LOGTOSTDERR \
    $KUBE_LOG_LEVEL \
    $KUBE_MASTER \
    $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

## Distribute the unit file

[root@sunyun ~]# ansible k8s-master -m copy -a 'src=/opt/k8s/unit/kube-scheduler.service dest=/usr/lib/systemd/system/'

8.7 Start the service

[root@sunyun ~]# ansible k8s-master -m shell -a 'systemctl daemon-reload'

[root@sunyun ~]# ansible k8s-master -m shell -a 'systemctl enable kube-scheduler.service'

[root@sunyun ~]# ansible k8s-master -m shell -a 'systemctl start kube-scheduler.service'

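8.8 Verify which host is the leader

[root@sunyun ~]# ansible k8s-master -m shell -a 'kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml'

In this run node2 is the leader

8.9 Verify the state of the master cluster

Running the following command on any of the three master hosts should return the cluster status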

[root@sunyun ~]# ansible k8s-master -m shell -a 'kubectl get cs'

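9. kube-apiserver high availability (node-side components)

1. The steps below deploy components that run on the nodes. On the master, only the files are prepared and then pushed to each node.

2. If a master should also join the cluster as a node, docker, kubelet, and kube-proxy must be deployed on it as well.

9.1 Install docker-ce

kubelet depends on the docker service to start, and the workloads that Kubernetes deploys all run on docker

If docker was installed previously, remove it first

yum remove docker docker-common docker-selinux docker-engine

## Install dependencies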

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'yum install -y yum-utils device-mapper-persistent-data lvm2'

## Add the yum repository

cd /etc/yum.repos.d/

wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo

sed -i 's#download.docker.com#mirrors.tuna.tsinghua.edu.cn/docker-ce#' /etc/yum.repos.d/docker-ce.repo

## The repository steps above must be run on every node

## Install a pinned docker version

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'yum install docker-ce-18.09.5-3.el7 -y'

## Enable and start docker

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl enable docker'

[root@k8s-master01 ~]# ansible k8s-node -m shell -a 'systemctl start docker'

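9.2 Create the nginx.conf configuration file

How it works: to keep the apiserver highly available, nginx is deployed on every node as a TCP reverse proxy in front of all apiservers. kubelet and kube-proxy then reach the apiserver through the local address 127.0.0.1:6443, so a node is not affected when any single master goes down.

## Create the nginx configuration directory

[root@sunyun ~]# ansible k8s-node -m file -a 'path=/etc/nginx state=directory'

## Edit the nginx configuration file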

[root@sunyun ~]# vim /opt/k8s/cfg/nginx.conf

error_log stderr notice;

worker_processes auto;
events {
    multi_accept on;
    use epoll;
    worker_connections 1024;
}

stream {
    upstream kube_apiserver {
        least_conn;
        server 10.0.0.115:6443;
        server 10.0.0.116:6443;
        server 10.0.0.117:6443;
    }

    server {
        listen 0.0.0.0:6443;
        proxy_pass kube_apiserver;
        proxy_timeout 10m;
        proxy_connect_timeout 1s;
    }
}

## Distribute nginx.conf to the nodes

[root@sunyun ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/cfg/nginx.conf dest=/etc/nginx/'
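The upstream list has to name every host that actually runs kube-apiserver, so it helps to generate it from one list of addresses instead of typing it by hand. A small sketch, assuming the three addresses of the k8s-master inventory group and port 6443; `render_upstream` is a hypothetical helper.

```shell
#!/bin/sh
# render_upstream IP...: print an nginx stream upstream block with one
# `server` line per apiserver address given on the command line.
render_upstream() {
    echo "    upstream kube_apiserver {"
    echo "        least_conn;"
    for ip in "$@"; do
        echo "        server ${ip}:6443;"
    done
    echo "    }"
}

# Example: the three masters from the k8s-master inventory group.
render_upstream 10.0.0.115 10.0.0.116 10.0.0.117
```

The printed block can be pasted into the `stream { ... }` section of nginx.conf before the file is distributed.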

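9.3 Run nginx as a Docker container managed by systemd

Note: because the container is started with --net=host, it shares the host network and Docker ignores the -p port mapping; nginx listens on the address set in nginx.conf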

vim /opt/k8s/unit/nginx-proxy.service

[Unit]
Description=kubernetes apiserver docker wrapper
Wants=docker.socket
After=docker.service

[Service]
User=root
PermissionsStartOnly=true
ExecStart=/usr/bin/docker run -p 127.0.0.1:6443:6443 \
    -v /etc/nginx:/etc/nginx \
    --name nginx-proxy \
    --net=host \
    --restart=on-failure:5 \
    --memory=512M \
    nginx:1.14.2-alpine
ExecStartPre=-/usr/bin/docker rm -f nginx-proxy
ExecStop=/usr/bin/docker stop nginx-proxy
Restart=always
RestartSec=15s
TimeoutStartSec=30s

[Install]
WantedBy=multi-user.target

## Distribute to the nodes

[root@sunyun ~]# ansible k8s-node -m copy -a 'src=/opt/k8s/unit/nginx-proxy.service dest=/usr/lib/systemd/system/'

## Start the service

ansible k8s-node -m shell -a 'systemctl daemon-reload'

ansible k8s-node -m shell -a 'systemctl enable nginx-proxy'

ansible k8s-node -m shell -a 'systemctl start nginx-proxy'

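10. kubelet deployment

The kubelet runs on each node, keeps the pods assigned to it running, and provides the Kubernetes runtime environment. Its main tasks are:

1. Watch the pods assigned to the node

2. Mount the volumes the pods need

3. Download the pods' secrets

4. Run the pods' containers through docker/rkt

5. Periodically run the liveness probes defined for the containers in a pod

6. Report pod status to the other components of the system

7. Report node status

Install it on the nodes only

10.1 Create the role binding

When kubelet starts it sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper cluster role; only then does kubelet have permission to create certificate signing requests: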

[root@sunyun ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

--user=kubelet-bootstrap is the user specified in the bootstrap-token.csv file created when deploying kube-apiserver; the same user must also be written into bootstrap.kubeconfig

10.2 Create the kubelet bootstrap kubeconfig file

## Set the cluster parameters

kubectl config set-cluster kubernetes \
--certificate-authority=/etc/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=https://127.0.0.1:6443 \
--kubeconfig=bootstrap.kubeconfig

## Set the client authentication parameters

## The token is the token value from the bootstrap-token.csv file mentioned earlier

[root@sunyun ~]# cat /etc/kubernetes/config/bootstrap-token.csv

3394045ede72aad6cb39ebe9b1b92b90,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

kubectl config set-credentials kubelet-bootstrap \
--token=3394045ede72aad6cb39ebe9b1b92b90 \
--kubeconfig=bootstrap.kubeconfig

## Set the context parameters

kubectl config set-context default \
--cluster=kubernetes \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig

## Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

## Distribute the generated kubeconfig file to every node

ansible k8s-node -m copy -a 'src=/root/bootstrap.kubeconfig dest=/etc/kubernetes/config/'

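10.3 Create the kubelet core configuration file

Distribute the file to the three nodes with ansible, then change the hostname and IP on each node to match that node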

vim /opt/k8s/cfg/kubelet.conf

###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--node-ip=10.0.0.116"

# The port for the info server to serve on
# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=node1"

# location of the api-server
# KUBELET_API_SERVER=""

# Add your own!
KUBELET_ARGS=" --address=0.0.0.0 \
    --allow-privileged \
    --anonymous-auth=false \
    --authentication-token-webhook=true \
    --authorization-mode=Webhook \
    --bootstrap-kubeconfig=/etc/kubernetes/config/bootstrap.kubeconfig \
    --client-ca-file=/etc/kubernetes/ssl/ca.pem \
    --network-plugin=cni \
    --cgroup-driver=cgroupfs \
    --cert-dir=/etc/kubernetes/ssl \
    --cluster-dns=10.254.0.2 \
    --cluster-domain=cluster.local \
    --cni-conf-dir=/etc/cni/net.d \
    --eviction-max-pod-grace-period=30 \
    --image-gc-high-threshold=80 \
    --image-gc-low-threshold=70 \
    --image-pull-progress-deadline=30s \
    --kubeconfig=/etc/kubernetes/config/kubelet.kubeconfig \
    --max-pods=100 \
    --minimum-image-ttl-duration=720h0m0s \
    --node-labels=node.kubernetes.io/k8s-node=true \
    --pod-infra-container-image=gcr.azk8s.cn/google_containers/pause-amd64:3.1 \
    --rotate-certificates \
    --rotate-server-certificates \
    --fail-swap-on=false \
    --v=2"

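Notes:

- authorization-mode: the kubelet authorization mode

- network-plugin: name of the network plugin

- cert-dir: directory that holds the TLS certificates

- eviction-max-pod-grace-period: maximum grace period when terminating a pod

- pod-infra-container-image: image used for the container that holds each pod's network/ipc namespaces

- rotate-certificates: rotate the kubelet client certificate automatically by requesting a new one from kube-apiserver as the current one approaches expiry

- hostname-override: the node's name in the cluster, which defaults to the host's hostname; if this flag is set, kube-proxy must be given the same flag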
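Editing the file by hand on each node is easy to get wrong, so the per-node values can be rendered from one template instead. A sketch under stated assumptions: the template is the kubelet.conf above, the host and IP pairs come from the planning table, and `render_node_conf`, `distribute_kubelet_conf`, and the /tmp output paths are hypothetical names.

```shell
#!/bin/sh
# render_node_conf TEMPLATE HOSTNAME IP
# Print TEMPLATE with --node-ip and --hostname-override rewritten for one node.
render_node_conf() {
    sed -e "s/--node-ip=[0-9.]*/--node-ip=$3/" \
        -e "s/--hostname-override=[A-Za-z0-9.-]*/--hostname-override=$2/" \
        "$1"
}

# distribute_kubelet_conf: render one file per node and copy it with ansible.
# Defined only; call it on the machine that holds the ansible inventory.
distribute_kubelet_conf() {
    for entry in node1:10.0.0.116 node2:10.0.0.117 node3:10.0.0.118; do
        host=${entry%%:*}
        ip=${entry#*:}
        render_node_conf /opt/k8s/cfg/kubelet.conf "$host" "$ip" > "/tmp/kubelet.conf.$host"
        ansible "$ip" -m copy -a "src=/tmp/kubelet.conf.$host dest=/etc/kubernetes/config/kubelet.conf"
    done
}
```

Because the second sed expression only looks for `--hostname-override=`, `render_node_conf` can also be pointed at kube-proxy.conf, which needs the same per-node hostname.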

10.4 Create the kubelet systemd unit file

[root@sunyun ~]# vim /opt/k8s/unit/kubelet.service

[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/config/kubelet.conf
ExecStart=/usr/local/bin/kubelet $KUBELET_ADDRESS $KUBELET_HOSTNAME $KUBELET_ARGS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

## Distribute the unit file

ansible k8s-node -m copy -a 'src=/opt/k8s/unit/kubelet.service dest=/usr/lib/systemd/system/'

## Create the kubelet data directory

ansible k8s-node -m file -a 'path=/var/lib/kubelet state=directory'

10.5 Start the service

[root@sunyun ~]# ansible k8s-node -m shell -a 'systemctl daemon-reload'

[root@sunyun ~]# ansible k8s-node -m shell -a 'systemctl enable kubelet'

[root@sunyun ~]# ansible k8s-node -m shell -a 'systemctl start kubelet'

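10.6 View the CSR requests

List the CSR requests that have not been approved yet; they are in the "Pending" state

[root@sunyun ~]# kubectl get csr

10.7 Approve the kubelet TLS certificate requests

On first start, kubelet sends a certificate signing request to kube-apiserver. The node joins the cluster only after that request has been approved.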

[root@sunyun ~]# kubectl certificate approve csr-fqln7

certificatesigningrequest.certificates.k8s.io/csr-fqln7 approved

[root@sunyun ~]# kubectl certificate approve csr-lj98c

certificatesigningrequest.certificates.k8s.io/csr-lj98c approved
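With more than a couple of nodes, copying each CSR name by hand gets tedious. A minimal sketch that filters the `kubectl get csr` table for requests still in the Pending state, assuming the condition is the last column of the default table output; `pending_csrs` is a hypothetical helper.

```shell
#!/bin/sh
# pending_csrs: read `kubectl get csr` table output on stdin and print the
# names of the requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage (left as a comment because it needs a working kubectl):
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```

Review the printed names before piping them into `kubectl certificate approve`, since approving a request lets that kubelet join the cluster.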

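## Check whether the nodes are ready

## The network plugin is not installed yet, so the nodes are still NotReady

[root@sunyun ~]# kubectl get nodes

11. kube-proxy deployment

kube-proxy implements services: it handles traffic from pods to services inside the cluster, and traffic from outside that enters through a node port and is sent on to a service

In this guide kube-proxy runs in ipvs mode, so the ipvs kernel modules and related dependencies must be prepared beforehand for kube-proxy to work (on every node)

## Enable ipvs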

ansible k8s-node -m shell -a "yum install -y ipvsadm ipset conntrack"

ansible k8s-node -m shell -a 'modprobe -- ip_vs'

ansible k8s-node -m shell -a 'modprobe -- ip_vs_rr'

ansible k8s-node -m shell -a 'modprobe -- ip_vs_wrr'

ansible k8s-node -m shell -a 'modprobe -- ip_vs_sh'

ansible k8s-node -m shell -a 'modprobe -- nf_conntrack_ipv4'
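`modprobe` only loads a module for the current boot, so it is worth checking afterwards which modules are really present. A small sketch that compares `lsmod` output against the five modules loaded above; `missing_modules` is a hypothetical helper, and the module list is taken from this guide (newer kernels name the last one `nf_conntrack`).

```shell
#!/bin/sh
# missing_modules: read `lsmod` output on stdin and print every required
# ipvs-related module that is not in the list of loaded modules.
missing_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')
    for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
    done
}

# Usage on a node:
#   lsmod | missing_modules
```

An empty result means all five modules are loaded. To keep them across reboots, one common approach on systemd hosts is to list the module names in a file under /etc/modules-load.d/.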

11.1 Create the kube-proxy certificate request

[root@sunyun ~]# vim /opt/k8s/certs/kube-proxy-csr.json

{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShangHai",
      "L": "ShangHai",
      "O": "system:kube-proxy",
      "OU": "System"
    }
  ]
}

11.2 Generate the kube-proxy certificate and private key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
-ca-key=/etc/kubernetes/ssl/ca-key.pem \
-config=/opt/k8s/certs/ca-config.json \
-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

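11.3 Distribute the certificates

ansible k8s-node -m copy -a 'src=/opt/k8s/certs/kube-proxy-key.pem dest=/etc/kubernetes/ssl/'

ansible k8s-node -m copy -a 'src=/opt/k8s/certs/kube-proxy.pem dest=/etc/kubernetes/ssl/'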

11.4 Create the kube-proxy kubeconfig file

The configuration file kube-proxy needs to connect to the apiserver

## Set the cluster parameters

kubectl config set-cluster kubernetes \
--certificate-authority=/etc/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=https://127.0.0.1:6443 \
--kubeconfig=kube-proxy.kubeconfig

## Set the client authentication parameters

kubectl config set-credentials system:kube-proxy \
--client-certificate=/opt/k8s/certs/kube-proxy.pem \
--embed-certs=true \
--client-key=/opt/k8s/certs/kube-proxy-key.pem \
--kubeconfig=kube-proxy.kubeconfig

## Set the cluster context

kubectl config set-context system:kube-proxy@kubernetes \
--cluster=kubernetes \
--user=system:kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

## Set the default context

kubectl config use-context system:kube-proxy@kubernetes --kubeconfig=kube-proxy.kubeconfig

## Distribute the configuration file to the nodes

ansible k8s-node -m copy -a 'src=/root/kube-proxy.kubeconfig dest=/etc/kubernetes/config/'

11.5 Configure the kube-proxy parameters

[root@sunyun ~]# vim /opt/k8s/cfg/kube-proxy.conf

###
# kubernetes proxy config
# default config should be adequate
# Add your own!
# Note: change --hostname-override to the hostname of each node
KUBE_PROXY_ARGS=" --bind-address=0.0.0.0 \
    --cleanup-ipvs=true \
    --cluster-cidr=10.254.0.0/16 \
    --hostname-override=node1 \
    --healthz-bind-address=0.0.0.0 \
    --healthz-port=10256 \
    --masquerade-all=true \
    --proxy-mode=ipvs \
    --ipvs-min-sync-period=5s \
    --ipvs-sync-period=5s \
    --ipvs-scheduler=wrr \
    --kubeconfig=/etc/kubernetes/config/kube-proxy.kubeconfig \
    --logtostderr=true \
    --v=2"

## After distributing, change the hostname-override field on each node to that node's hostname

ansible k8s-node -m copy -a 'src=/opt/k8s/cfg/kube-proxy.conf dest=/etc/kubernetes/config/'

11.6 kube-proxy systemd unit file

[root@sunyun ~]# vim /opt/k8s/unit/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config/kube-proxy.conf
ExecStart=/usr/local/bin/kube-proxy $KUBE_PROXY_ARGS

Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

## Distribute to the nodes

ansible k8s-node -m copy -a 'src=/opt/k8s/unit/kube-proxy.service dest=/usr/lib/systemd/system/'

11.7 Start the service

[root@sunyun ~]# ansible k8s-node -m shell -a 'systemctl daemon-reload'

[root@sunyun ~]# ansible k8s-node -m shell -a 'systemctl enable kube-proxy'

[root@sunyun ~]# ansible k8s-node -m shell -a 'systemctl start kube-proxy'

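11.8 View the ipvs routing rules

Check the LVS state: an LVS virtual service has been created that forwards requests for 10.254.0.1:443 to port 6443 on the three masters, and 6443 is the api-server port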

[root@sunyun ~]# ansible k8s-node -m shell -a 'ipvsadm -L -n'
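The `ipvsadm -L -n` listing grows quickly once services exist, so it can help to print only the real servers behind one virtual service. A rough sketch, assuming the usual layout in which each `TCP`/`UDP` line is followed by its `->` backend lines; `backends_of` is a hypothetical helper.

```shell
#!/bin/sh
# backends_of VIP:PORT: read `ipvsadm -L -n` output on stdin and print the
# real-server addresses listed under the given virtual service.
backends_of() {
    awk -v vip="$1" '
        $1 == "TCP" || $1 == "UDP" { in_svc = ($2 == vip); next }
        in_svc && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
    '
}

# Usage on a node:
#   ipvsadm -L -n | backends_of 10.254.0.1:443
```

For the kubernetes service at 10.254.0.1:443 the output should be the apiserver addresses on port 6443.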

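12. Calico network deployment

Kubernetes does not provide pod networking by itself. After all nodes have started they show as NotReady because no network plugin (CNI) is installed, and pods cannot be created until a third-party network is added. The steps below deploy Calico to give the cluster networking and cross-node communication.

12.1 Download the Calico yaml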

[root@k8s-master01 ~]# mkdir /opt/k8s/calico

[root@k8s-master01 ~]# cd /opt/k8s/calico/

[root@k8s-master01 calico]# wget http://docs.projectcalico.org/v3.6/getting-started/kubernetes/installation/hosted/calico.yaml

12.2 Modify the configuration file

The default Calico manifest does not match this cluster, so the following fields need to be changed

## Base64-encoded etcd certificates (run the command shown in each pair of parentheses and paste its output, the base64 text, in place of the parentheses, wrapped in double quotes)

vim calico.yaml

apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  etcd-key: (cat /etc/kubernetes/ssl/etcd-key.pem | base64 | tr -d '\n')
  etcd-cert: (cat /etc/kubernetes/ssl/etcd.pem | base64 | tr -d '\n')
  etcd-ca: (cat /etc/kubernetes/ssl/ca.pem | base64 | tr -d '\n')

Note that YAML is strict about indentation, so it is best to edit the file with vi
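Pasting three long base64 strings by hand invites indentation and truncation mistakes. A minimal sketch that prints the three `data:` entries ready to paste, assuming the certificate file names used in this guide; `b64_file` and `etcd_secret_data` are hypothetical helpers.

```shell
#!/bin/sh
# b64_file FILE: base64-encode FILE on a single line (wrapping newlines removed).
b64_file() {
    base64 < "$1" | tr -d '\n'
}

# etcd_secret_data DIR: print the data entries for the calico-etcd-secrets
# Secret, reading etcd-key.pem, etcd.pem and ca.pem from DIR.
etcd_secret_data() {
    printf '  etcd-key: "%s"\n'  "$(b64_file "$1/etcd-key.pem")"
    printf '  etcd-cert: "%s"\n' "$(b64_file "$1/etcd.pem")"
    printf '  etcd-ca: "%s"\n'   "$(b64_file "$1/ca.pem")"
}

# Usage on the master:
#   etcd_secret_data /etc/kubernetes/ssl
```

The two leading spaces in each printed line match the indentation under `data:` in the Secret.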

## ConfigMap

### Set the etcd endpoints

etcd_endpoints: "https://10.0.0.117:2379,https://10.0.0.116:2379,https://10.0.0.115:2379"

## Set the etcd certificate paths

etcd_ca: "/calico-secrets/etcd-ca"

etcd_cert: "/calico-secrets/etcd-cert"

etcd_key: "/calico-secrets/etcd-key"

## Set the IP range assigned to pods (it must not share a network segment with the node or service addresses)

- name: CALICO_IPV4POOL_CIDR
  value: "10.254.64.0/18"
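Whether two ranges share a segment can be checked with a little integer arithmetic instead of by eye. A sketch under stated assumptions: the service range 10.254.0.0/18 is the one used in the CoreDNS configuration of this guide, and 10.0.0.0/24 for the node network is a guess, since the guide lists node addresses but no netmask. All function names are hypothetical.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as a single integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_range A.B.C.D/N: print "first last" of the range as integers.
cidr_range() {
    base=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    size=$(( 1 << (32 - $bits) ))
    first=$(( $base / $size * $size ))   # align to the network boundary
    echo "$first $(( $first + $size - 1 ))"
}

# cidrs_overlap CIDR1 CIDR2: succeed (exit 0) when the two ranges intersect.
cidrs_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Compare the pod range with the service range and the assumed node network.
for other in 10.254.0.0/18 10.0.0.0/24; do
    if cidrs_overlap 10.254.64.0/18 "$other"; then
        echo "pod range overlaps $other"
    else
        echo "pod range is separate from $other"
    fi
done
```

Two ranges overlap exactly when each one starts no later than the other ends, which is the comparison `cidrs_overlap` makes.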

12.3 Apply the manifest

kubectl apply -f calico.yaml

12.4 Verify

[root@sunyun calico]# kubectl get pods -n kube-system -o wide

[root@sunyun calico]# kubectl get nodes

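13. CoreDNS deployment

Once all other cluster components are in place, deploy the cluster DNS so that services and other names can be resolved

13.1 Download the yaml manifest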

[root@k8s-master01 ~]# mkdir /opt/k8s/coredns

[root@k8s-master01 ~]# cd /opt/k8s/coredns/

[root@k8s-master01 coredns]# wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

[root@k8s-master01 coredns]# mv coredns.yaml.sed coredns.yaml

13.2 Modify the default manifest

[root@k8s-master01 ~]# vim /opt/k8s/coredns/coredns.yaml

# First change

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local 10.254.0.0/18 {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }

## Second change

.....

Search for /clusterIP to find it

clusterIP: 10.254.0.2

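Parameter notes:

2) health: health check. It exposes an HTTP endpoint on a given port (8080 by default) that returns "OK" when the instance is healthy.

3) cluster.local: the domain CoreDNS serves for Kubernetes. 10.254.0.0/18 tells the kubernetes plugin that it is responsible for answering PTR requests in the reverse zone 0.0.254.10.in-addr.arpa. In other words, it enables reverse DNS resolution for services. (A DNS server commonly has two kinds of zones, forward lookup zones and reverse lookup zones. A forward lookup is ordinary name resolution; a reverse lookup queries the PTR record of an IP address to obtain the domain name that address points to, which only succeeds if a PTR record exists for that address. A and PTR are both DNS resource record types: an A record resolves a name to an address, and a PTR record resolves an address to a name. Here the address is a client's IP address and the name is the client's fully qualified domain name.)

4) forward (named proxy in older CoreDNS releases): can be configured with several upstream name servers, and can also defer lookups to the name servers defined in /etc/resolv.conf

5) cache: caches two kinds of responses, positive results (the query returned an answer) and negative results (the query returned "no such domain"), each with its own cache size and TTL.

# The CIDR after kubernetes cluster.local is the IP range from which services are created

kubernetes cluster.local 10.254.0.0/18

# clusterIP is the IP assigned to the DNS service (the same address as --cluster-dns in kubelet.conf)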
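The reverse-lookup name for an address is simply its octets in reverse order followed by `in-addr.arpa`. A tiny sketch of that mapping, using the DNS service address from this guide as the example input; `ptr_name` is a hypothetical helper.

```shell
#!/bin/sh
# ptr_name A.B.C.D: print the in-addr.arpa name that a PTR query for the
# given IPv4 address asks for.
ptr_name() {
    old_ifs=$IFS; IFS=.
    set -- $1
    IFS=$old_ifs
    echo "$4.$3.$2.$1.in-addr.arpa"
}

# Example: the cluster DNS service address.
ptr_name 10.254.0.2
```

This is the name a resolver sends when a tool such as `nslookup` is given an IP address instead of a hostname.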

13.3 Apply the manifest

[root@sunyun coredns]# kubectl apply -f coredns.yaml

## Check the created resources

[root@sunyun ~]# kubectl get pod,svc -n kube-system -o wide

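13.4 Test DNS resolution

## Create a pod

[root@sunyun ~]# kubectl run nginx --image=nginx:1.14.2-alpine

The pod was scheduled onto node2 with IP address 10.254.75.2

## Create a service

[root@sunyun ~]# kubectl expose deployment nginx --port=80 --target-port=80

[root@sunyun ~]# kubectl get service

The nginx service has been created and has been assigned an address

## Verify DNS resolution

## Create a pod from the alpine image, which includes nslookup

[root@sunyun ~]# kubectl run alpine --image=alpine -- sleep 3600

## Look up the pod name

[root@sunyun ~]# kubectl get pods

## Test (use the full pod name from the previous step if it differs from alpine)

[root@sunyun coredns]# kubectl exec -it alpine -- nslookup nginx

In my own VM environment this step failed, and I have not yet found the cause

The expected test result is shown in the figure below:

CoreDNS reference documentation: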