Overview

https://pytorch.org/docs/stable/torch.html

https://pytorch.apachecn.org/docs/1.2/torch.html

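nn implements most of the loss functions used in neural networks; for example, nn.MSELoss computes the mean squared error and nn.CrossEntropyLoss computes the cross-entropy loss.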
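As a minimal sketch of the two losses named above (the tensor values are made up for illustration), the snippet below computes both and checks the cross-entropy against a manual log-softmax calculation:

```python
import torch
import torch.nn as nn

# Regression: MSELoss averages the squared differences.
pred = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([1.0, 2.0, 5.0])
mse = nn.MSELoss()(pred, target)  # (0 + 0 + 4) / 3

# Classification: CrossEntropyLoss takes raw logits of shape (N, C)
# and integer class indices of shape (N,).
logits = torch.tensor([[2.0, 0.5, 0.1], [0.2, 0.1, 3.0]])
labels = torch.tensor([0, 2])
ce = nn.CrossEntropyLoss()(logits, labels)

# Cross-entropy equals the mean negative log-softmax of the true class.
log_probs = torch.log_softmax(logits, dim=1)
manual_ce = -log_probs[torch.arange(2), labels].mean()
```

Note that nn.CrossEntropyLoss expects unnormalized logits, so no softmax layer should be applied before it.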

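nn.Module is the most important class in nn. It can be viewed as a wrapper around a network that holds the layer definitions and the forward method; calling forward(input) returns the result of the forward pass. To define a network, subclass nn.Module and implement its forward method, placing the layers that have learnable parameters in the constructor __init__. A layer without learnable parameters (such as ReLU) may be placed in the constructor or left out; the recommendation is to leave it out and use nn.functional in forward instead.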

import torch.nn as nn

import torch.nn.functional as F

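torch.optim implements the vast majority of optimization methods used in deep learning, such as RMSProp, Adam, and SGD, and makes them convenient to use, so in most cases there is no need to write the parameter update code by hand.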

import torch.optim as optim
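A minimal sketch of how an optimizer replaces a hand-written update loop. The model, data, and learning rate here are arbitrary choices for illustration, not values from the original notes:

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)
model = nn.Linear(3, 1)
optimizer = optim.SGD(model.parameters(), lr=0.05)
loss_fn = nn.MSELoss()

# Random toy data, only to have something to fit.
x = torch.randn(8, 3)
y = torch.randn(8, 1)

loss_before = loss_fn(model(x), y).item()
for _ in range(20):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(x), y)  # forward pass
    loss.backward()              # compute gradients
    optimizer.step()             # update the parameters
loss_after = loss_fn(model(x), y).item()
```

Swapping in optim.Adam or optim.RMSprop only changes the line that constructs the optimizer; the loop stays the same.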

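torchvision provides loaders for commonly used image datasets, such as ImageNet, CIFAR10, and MNIST, along with common data transformation operations. This greatly simplifies data loading and makes the code reusable.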

torch

torch.set_default_dtype(_d_)

>>> torch.tensor([1.2, 3]).dtype # initial default for floating point is torch.float32

torch.float32

>>> torch.set_default_dtype(torch.float64)

>>> torch.tensor([1.2, 3]).dtype # a new floating point tensor

torch.float64

torch.get_default_dtype() → torch.dtype

>>> torch.get_default_dtype() # initial default for floating point is torch.float32

torch.float32

>>> torch.set_default_dtype(torch.float64)

>>> torch.get_default_dtype() # default is now changed to torch.float64

torch.float64

>>> torch.set_default_tensor_type(torch.FloatTensor) # setting tensor type also affects this

>>> torch.get_default_dtype() # changed to torch.float32, the dtype for torch.FloatTensor

torch.float32

torch.tensor(_data_, _dtype=None_, _device=None_, _requires_grad=False_, _pin_memory=False_) → Tensor

>>> torch.tensor([[0.1, 1.2], [2.2, 3.1], [4.9, 5.2]])

tensor([[ 0.1000, 1.2000],

[ 2.2000, 3.1000],

[ 4.9000, 5.2000]])

>>> torch.tensor([0, 1]) # Type inference on data

tensor([ 0, 1])

>>> torch.tensor([[0.11111, 0.222222, 0.3333333]],

dtype=torch.float64,

device=torch.device('cuda:0')) # creates a torch.cuda.DoubleTensor

tensor([[ 0.1111, 0.2222, 0.3333]], dtype=torch.float64, device='cuda:0')

>>> torch.tensor(3.14159) # Create a scalar (zero-dimensional tensor)

tensor(3.1416)

>>> torch.tensor([]) # Create an empty tensor (of size (0,))

tensor([])

torch.from_numpy(_ndarray_) → Tensor

>>> a = numpy.array([1, 2, 3])

>>> t = torch.from_numpy(a)

>>> t

tensor([ 1, 2, 3])

>>> t[0] = -1

>>> a

array([-1, 2, 3])
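The example above shows that torch.from_numpy shares memory with the array. As a small contrast, the sketch below compares it with torch.tensor, which copies the data:

```python
import numpy as np
import torch

a = np.array([1, 2, 3])
shared = torch.from_numpy(a)  # shares memory with `a`
copied = torch.tensor(a)      # copies the data

a[0] = -1  # modify the NumPy array in place

shared_first = shared[0].item()  # follows the change in `a`
copied_first = copied[0].item()  # keeps the original value
```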

torch.zeros(_*size_, _out=None_, _dtype=None_, _layout=torch.strided_, _device=None_, _requires_grad=False_) → Tensor

>>> torch.zeros(2, 3)

tensor([[ 0., 0., 0.],

[ 0., 0., 0.]])

>>> torch.zeros(5)

tensor([ 0., 0., 0., 0., 0.])

torch.zeros_like(_input_, _dtype=None_, _layout=None_, _device=None_, _requires_grad=False_) → Tensor

>>> input = torch.empty(2, 3)

>>> torch.zeros_like(input)

tensor([[ 0., 0., 0.],

[ 0., 0., 0.]])

torch.ones(_*size_, _out=None_, _dtype=None_, _layout=torch.strided_, _device=None_, _requires_grad=False_) → Tensor

>>> torch.ones(2, 3)

tensor([[ 1., 1., 1.],

[ 1., 1., 1.]])

>>> torch.ones(5)

tensor([ 1., 1., 1., 1., 1.])

torch.ones_like(_input_, _dtype=None_, _layout=None_, _device=None_, _requires_grad=False_) → Tensor

>>> input = torch.empty(2, 3)

>>> torch.ones_like(input)

tensor([[ 1., 1., 1.],

[ 1., 1., 1.]])

torch.arange(_start=0_, _end_, _step=1_, _out=None_, _dtype=None_, _layout=torch.strided_, _device=None_, _requires_grad=False_) → Tensor

>>> torch.arange(5)

tensor([ 0, 1, 2, 3, 4])

>>> torch.arange(1, 4)

tensor([ 1, 2, 3])

>>> torch.arange(1, 2.5, 0.5)

tensor([ 1.0000, 1.5000, 2.0000])

torch.range(_start=0_, _end_, _step=1_, _out=None_, _dtype=None_, _layout=torch.strided_, _device=None_, _requires_grad=False_) → Tensor

>>> torch.range(1, 4)

tensor([ 1., 2., 3., 4.])

>>> torch.range(1, 4, 0.5)

tensor([ 1.0000, 1.5000, 2.0000, 2.5000, 3.0000, 3.5000, 4.0000])

torch.linspace(_start_, _end_, _steps=100_, _out=None_, _dtype=None_, _layout=torch.strided_, _device=None_, _requires_grad=False_) → Tensor

>>> torch.linspace(3, 10, steps=5)

tensor([ 3.0000, 4.7500, 6.5000, 8.2500, 10.0000])

>>> torch.linspace(-10, 10, steps=5)

tensor([-10., -5., 0., 5., 10.])

>>> torch.linspace(start=-10, end=10, steps=5)

tensor([-10., -5., 0., 5., 10.])

>>> torch.linspace(start=-10, end=10, steps=1)

tensor([-10.])
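The three range constructors differ in how they treat the end point. torch.arange excludes end, while torch.linspace includes it and takes the number of points instead of a step. torch.range also includes end, as its examples above show, and the PyTorch documentation marks it as deprecated in favor of torch.arange. A short sketch of the first two:

```python
import torch

half_open = torch.arange(0, 1, 0.25)      # 0.00, 0.25, 0.50, 0.75 (end excluded)
inclusive = torch.linspace(0, 1, steps=5)  # 0.00, 0.25, 0.50, 0.75, 1.00 (end included)

n_half_open = half_open.numel()
n_inclusive = inclusive.numel()
```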

torch.eye(_n_, _m=None_, _out=None_, _dtype=None_, _layout=torch.strided_, _device=None_, _requires_grad=False_) → Tensor

>>> torch.eye(3)

tensor([[ 1., 0., 0.],

[ 0., 1., 0.],

[ 0., 0., 1.]])

torch.index_select(_input_, _dim_, _index_, _out=None_) → Tensor

>>> x = torch.randn(3, 4)

>>> x

tensor([[ 0.1427, 0.0231, -0.5414, -1.0009],

[-0.4664, 0.2647, -0.1228, -1.1068],

[-1.1734, -0.6571, 0.7230, -0.6004]])

>>> indices = torch.tensor([0, 2])

>>> torch.index_select(x, 0, indices)

tensor([[ 0.1427, 0.0231, -0.5414, -1.0009],

[-1.1734, -0.6571, 0.7230, -0.6004]])

>>> torch.index_select(x, 1, indices)

tensor([[ 0.1427, -0.5414],

[-0.4664, -0.1228],

[-1.1734, 0.7230]])
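For an index tensor like the one above, torch.index_select gives the same result as indexing with that tensor along the chosen dimension. A small sketch with a deterministic input, so the result is easy to check by eye:

```python
import torch

x = torch.arange(12.).reshape(3, 4)
indices = torch.tensor([0, 2])

rows = torch.index_select(x, 0, indices)  # rows 0 and 2, shape (2, 4)
cols = torch.index_select(x, 1, indices)  # columns 0 and 2, shape (3, 2)

# Equivalent indexing expressions.
rows_alt = x[indices]
cols_alt = x[:, indices]
```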

torch.reshape(_input_, _shape_) → Tensor

>>> a = torch.arange(4.)

>>> torch.reshape(a, (2, 2))

tensor([[ 0., 1.],

[ 2., 3.]])

>>> b = torch.tensor([[0, 1], [2, 3]])

>>> torch.reshape(b, (-1,))

tensor([ 0, 1, 2, 3])

torch.squeeze(_input_, _dim=None_, _out=None_) → Tensor

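Returns a tensor with all dimensions of input of size 1 removed. For example, if input has shape (A×1×B×C×1×D), the output tensor has shape (A×B×C×D).

If dim is given, the squeeze operation is performed only in that dimension. For example, if input has shape (A×1×B), squeeze(input, 0) leaves the tensor unchanged, while squeeze(input, 1) squeezes it to shape (A×B).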

>>> x = torch.zeros(2, 1, 2, 1, 2)

>>> x.size()

torch.Size([2, 1, 2, 1, 2])

>>> y = torch.squeeze(x)

>>> y.size()

torch.Size([2, 2, 2])

>>> y = torch.squeeze(x, 0)

>>> y.size()

torch.Size([2, 1, 2, 1, 2])

>>> y = torch.squeeze(x, 1)

>>> y.size()

torch.Size([2, 2, 1, 2])

torch.unsqueeze(_input_, _dim_, _out=None_) → Tensor

Returns a new tensor with a dimension of size one inserted at the specified position.

The returned tensor shares the same underlying data with this tensor.

Parameters

- input (Tensor) – the input tensor.

- dim (int) – the index at which to insert the singleton dimension

- out (Tensor_, _optional) – the output tensor.

>>> x = torch.tensor([1, 2, 3, 4])

>>> torch.unsqueeze(x, 0)

tensor([[ 1, 2, 3, 4]])

>>> torch.unsqueeze(x, 1)

tensor([[ 1],

[ 2],

[ 3],

[ 4]])
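A common use of unsqueeze is adding a batch dimension, with squeeze undoing it. The sketch below also illustrates that the result shares data with the input, so an in-place write through the view is visible in the original tensor:

```python
import torch

x = torch.tensor([1, 2, 3, 4])

batched = torch.unsqueeze(x, 0)        # shape (1, 4)
restored = torch.squeeze(batched, 0)   # back to shape (4,)

# Writing through the view changes the original tensor as well.
batched[0, 0] = 100
x_first = x[0].item()
```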

torch.nn

## Parameters

CLASS torch.nn.Parameter

A kind of Tensor that is to be considered a module parameter.

Parameters are Tensor subclasses that have a very special property when used with Modules: when they are assigned as Module attributes, they are automatically added to the list of the module's parameters and will appear, for example, in the parameters() iterator. Assigning a Tensor has no such effect. This is because one might want to cache some temporary state in the model, like the last hidden state of an RNN. If there were no such class as Parameter, these temporaries would get registered too.

Parameters

- data (Tensor) – parameter tensor.

- requires_grad (bool, optional) – if the parameter requires gradient. See Excluding subgraphs from backward for more details. Default: True
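The registration behaviour described above can be checked directly. In this sketch, Scale is a hypothetical module written for illustration; it holds one Parameter and one plain tensor:

```python
import torch
import torch.nn as nn

class Scale(nn.Module):
    """Hypothetical module used only to illustrate parameter registration."""

    def __init__(self):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(3))  # registered as a parameter
        self.cache = torch.zeros(3)                # plain tensor, not registered

    def forward(self, x):
        return x * self.weight

m = Scale()
names = [name for name, _ in m.named_parameters()]
```

Only weight shows up in named_parameters(), so an optimizer built from m.parameters() would never update cache.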

Containers

Module

CLASS torch.nn.Module

Base class for all neural network modules.

Your models should also subclass this class.

Modules can also contain other Modules, allowing to nest them in a tree structure. You can assign the submodules as regular attributes:

import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))
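A short usage sketch for a module like the one above. The class is repeated so the snippet runs on its own, and the 28×28 input size is an arbitrary choice:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))

model = Model()
x = torch.randn(4, 1, 28, 28)  # (N, C, H, W)
out = model(x)                 # calling the module runs forward()

# Submodules assigned as attributes contribute their parameters automatically.
n_params = sum(p.numel() for p in model.parameters())
```

Each 5×5 convolution without padding shrinks the spatial size by 4, so the output here has shape (4, 20, 20, 20).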

Sequential

CLASS torch.nn.Sequential(*args)

A sequential container. Modules will be added to it in the order they are passed in the constructor. Alternatively, an ordered dict of modules can also be passed in.

To make it easier to understand, here is a small example:

# Example of using Sequential
model = nn.Sequential(
    nn.Conv2d(1, 20, 5),
    nn.ReLU(),
    nn.Conv2d(20, 64, 5),
    nn.ReLU()
)

# Example of using Sequential with OrderedDict
from collections import OrderedDict

model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 20, 5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20, 64, 5)),
    ('relu2', nn.ReLU())
]))
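One practical benefit of the OrderedDict form is that the layers can be reached by name as well as by position. A minimal sketch, with an input size chosen only for illustration:

```python
from collections import OrderedDict

import torch
import torch.nn as nn

model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 20, 5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20, 64, 5)),
    ('relu2', nn.ReLU()),
]))

out = model(torch.randn(2, 1, 28, 28))  # layers run in the order given

by_index = model[0]      # positional access
by_name = model.conv1    # access by the name given in the OrderedDict
```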

Convolution layers

Conv1d

CLASS torch.nn.Conv1d(_in_channels_, _out_channels_, _kernel_size_, _stride=1_, _padding=0_, _dilation=1_, _groups=1_, _bias=True_, _padding_mode='zeros'_)

Conv2d

CLASS torch.nn.Conv2d(_in_channels_, _out_channels_, _kernel_size_, _stride=1_, _padding=0_, _dilation=1_, _groups=1_, _bias=True_, _padding_mode='zeros'_)

Input size: (N, C_in, H, W), where N is the batch size, C_in is the number of input channels, H is the image height, and W is the image width (in pixels).

- stride controls the stride for the cross-correlation, a single number or a tuple.

- padding controls the amount of implicit zero-paddings on both sides for padding number of points for each dimension.

- dilation controls the spacing between the kernel points; also known as the à trous algorithm. It is harder to describe, but this link has a nice visualization of what dilation does.

- groups controls the connections between inputs and outputs. in_channels and out_channels must both be divisible by groups. For example,

  - At groups=1, all inputs are convolved to all outputs.

  - At groups=2, the operation becomes equivalent to having two conv layers side by side, each seeing half the input channels, and producing half the output channels, and both subsequently concatenated.

  - At groups=in_channels, each input channel is convolved with its own set of filters, of size out_channels / in_channels.
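The effect of groups is visible in the shape of the weight tensor, which is (out_channels, in_channels / groups, kH, kW). A small sketch with channel counts picked for illustration:

```python
import torch.nn as nn

dense = nn.Conv2d(4, 8, 3, groups=1)       # every output sees all 4 inputs
two_groups = nn.Conv2d(4, 8, 3, groups=2)  # each output sees 2 inputs
depthwise = nn.Conv2d(4, 8, 3, groups=4)   # each output sees 1 input

shapes = [tuple(m.weight.shape) for m in (dense, two_groups, depthwise)]
```

With groups=in_channels=4, each input channel gets 8 / 4 = 2 filters of its own.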

The parameters kernel_size, stride, padding, dilation can either be:

- a single int – in which case the same value is used for the height and width dimension

- a tuple of two ints – in which case, the first int is used for the height dimension, and the second int for the width dimension

NOTE

Depending on the size of your kernel, several (of the last) columns of the input might be lost, because it is a valid cross-correlation, and not a full cross-correlation. It is up to the user to add proper padding.

Parameters

- in_channels (int) – Number of channels in the input image

- out_channels (int) – Number of channels produced by the convolution

- kernel_size (int_ or _tuple) – Size of the convolving kernel

- stride (int_ or tuple, _optional) – Stride of the convolution. Default: 1

- padding (int_ or tuple, _optional) – Zero-padding added to both sides of the input. Default: 0

- padding_mode (string_, _optional) – zeros

- dilation (int_ or tuple, _optional) – Spacing between kernel elements. Default: 1

- groups (int_, _optional) – Number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True

![conv2d](https://img-blog.csdnimg.cn/20191025213407894.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzI1ODAwMzEx,size_16,color_FFFFFF,t_70)
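The output height and width of Conv2d follow the usual formula floor((size + 2·padding − dilation·(kernel − 1) − 1) / stride + 1), applied per dimension. The sketch below checks that formula against an actual layer; conv_out is a hypothetical helper written for this note, not a PyTorch function:

```python
import math

import torch
import torch.nn as nn

def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Hypothetical helper: output length of a convolution along one dimension."""
    return math.floor((size + 2 * padding - dilation * (kernel - 1) - 1) / stride + 1)

m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2), dilation=(3, 1))
x = torch.randn(2, 16, 50, 100)
out = m(x)

expected_hw = (
    conv_out(50, kernel=3, stride=2, padding=4, dilation=3),   # height
    conv_out(100, kernel=5, stride=1, padding=2, dilation=1),  # width
)
```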

>>> # With square kernels and equal stride

>>> m = nn.Conv2d(16, 33, 3, stride=2)

>>> # non-square kernels and unequal stride and with padding

>>> m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2))

>>> # non-square kernels and unequal stride and with padding and dilation

>>> m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2), dilation=(3, 1))

>>> input = torch.randn(20, 16, 50, 100)

>>> output = m(input)

Pooling layers

MaxPool2d

CLASS torch.nn.MaxPool2d(_kernel_size_, _stride=None_, _padding=0_, _dilation=1_, _return_indices=False_, _ceil_mode=False_)

Applies a 2D max pooling over an input signal composed of several input planes.

If padding is non-zero, then the input is implicitly zero-padded on both sides for padding number of points. dilation controls the spacing between the kernel points. It is harder to describe, but this link has a nice visualization of what dilation does.

The parameters kernel_size, stride, padding, dilation can either be:

- a single int – in which case the same value is used for the height and width dimension

- a tuple of two ints – in which case, the first int is used for the height dimension, and the second int for the width dimension

Parameters

- kernel_size – the size of the window to take a max over

- stride – the stride of the window. Default value is kernel_size

- padding – implicit zero padding to be added on both sides

- dilation – a parameter that controls the stride of elements in the window

- return_indices – if True, will return the max indices along with the outputs. Useful for torch.nn.MaxUnpool2d later

- ceil_mode – when True, will use ceil instead of floor to compute the output shape

Shape:

![MaxPool2d](https://img-blog.csdnimg.cn/20191025213327480.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzI1ODAwMzEx,size_16,color_FFFFFF,t_70)
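A small sketch of the return_indices and ceil_mode options listed above, using a 4×4 input with known values so the pooled result can be read off directly. The input sizes are chosen only for illustration:

```python
import torch
import torch.nn as nn

# 4x4 input holding the values 0..15 row by row.
x = torch.arange(16.).reshape(1, 1, 4, 4)

pool = nn.MaxPool2d(2, stride=2, return_indices=True)
out, idx = pool(x)  # max of each 2x2 window and its flat position

# MaxUnpool2d places each max back at its recorded position, zeros elsewhere.
unpool = nn.MaxUnpool2d(2, stride=2)
restored = unpool(out, idx)

# ceil_mode changes the output size when the window does not tile the input.
odd = torch.randn(1, 1, 5, 5)
floor_shape = tuple(nn.MaxPool2d(2)(odd).shape)
ceil_shape = tuple(nn.MaxPool2d(2, ceil_mode=True)(odd).shape)
```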

>>> # pool of square window of size=3, stride=2

>>> m = nn.MaxPool2d(3, stride=2)

>>> # pool of non-square window

>>> m = nn.MaxPool2d((3, 2), stride=(2, 1))

>>> input = torch.randn(20, 16, 50, 32)

>>> output = m(input)

Non-linear activations (weighted sum, nonlinearity)

CLASS torch.nn.ReLU(_inplace=False_)

Applies the rectified linear unit function element-wise:

ReLU(x)=max(0,x)

Parameters

inplace – can optionally do the operation in-place. Default: False

Shape:

- Input: (N, *) where * means any number of additional dimensions

- Output: (N, *), same shape as the input

![ReLU](https://img-blog.csdnimg.cn/20191025213249393.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzI1ODAwMzEx,size_16,color_FFFFFF,t_70)
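Because ReLU has no learnable parameters, the module form and the functional form compute the same thing, which is why the overview suggests calling nn.functional inside forward. A quick check with hand-picked values:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.tensor([-1.0, 0.0, 2.0])

module_out = nn.ReLU()(x)         # module form
functional_out = F.relu(x)        # functional form
manual = torch.clamp(x, min=0)    # max(0, x) written out directly
```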

>>> m = nn.ReLU()

>>> input = torch.randn(2)

>>> output = m(input)

An implementation of CReLU - https://arxiv.org/abs/1603.05201

>>> m = nn.ReLU()

>>> input = torch.randn(2).unsqueeze(0)

>>> output = torch.cat((m(input),m(-input)))

Non-linear activations (other)

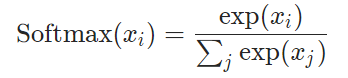

Softmax

CLASS torch.nn.Softmax(dim=None)

Applies the Softmax function to an n-dimensional input Tensor rescaling them so that the elements of the n-dimensional output Tensor lie in the range [0,1] and sum to 1.

Softmax is defined as:

Softmax(x_i) = exp(x_i) / Σ_j exp(x_j)

Shape:

Input: (*) where * means any number of additional dimensions

Output: (*), same shape as the input

Returns

a Tensor of the same dimension and shape as the input with values in the range [0, 1]

Parameters

dim (int) – A dimension along which Softmax will be computed (so every slice along dim will sum to 1).
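A minimal sketch that checks the two properties stated above: every slice along dim sums to 1, and the result matches exp(x_i) divided by the sum of exp(x_j) over that dimension:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 3)

out = nn.Softmax(dim=1)(x)

# The same computation written out by hand along dim=1.
manual = torch.exp(x) / torch.exp(x).sum(dim=1, keepdim=True)

row_sums = out.sum(dim=1)  # one value per row, each close to 1
```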

>>> m = nn.Softmax(dim=1)

>>> input = torch.randn(2, 3)

>>> output = m(input)

Softmax2d

CLASS torch.nn.Softmax2d

>>> m = nn.Softmax2d()

>>> # you softmax over the 2nd dimension

>>> input = torch.randn(2, 3, 12, 13)

>>> output = m(input)

Transformer layers

Transformer

CLASS torch.nn.Transformer(d_model=512, nhead=8, num_encoder_layers=6, num_decoder_layers=6, dim_feedforward=2048, dropout=0.1, activation='relu', custom_encoder=None, custom_decoder=None)

Parameters

- d_model – the number of expected features in the encoder/decoder inputs (default=512).

- nhead – the number of heads in the multiheadattention models (default=8).

- num_encoder_layers – the number of sub-encoder-layers in the encoder (default=6).

- num_decoder_layers – the number of sub-decoder-layers in the decoder (default=6).

- dim_feedforward – the dimension of the feedforward network model (default=2048).

- dropout – the dropout value (default=0.1).

- activation – the activation function of encoder/decoder intermediate layer, relu or gelu (default=relu).

- custom_encoder – custom encoder (default=None).

- custom_decoder – custom decoder (default=None).

>>> transformer_model = nn.Transformer(nhead=16, num_encoder_layers=12)

>>> src = torch.rand((10, 32, 512))

>>> tgt = torch.rand((20, 32, 512))

>>> out = transformer_model(src, tgt)

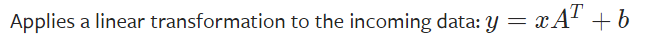

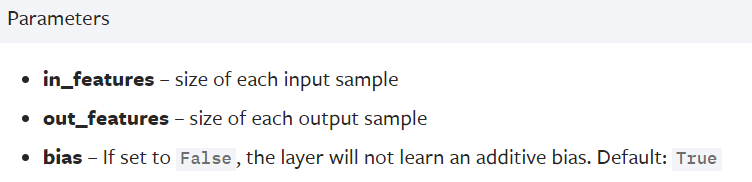

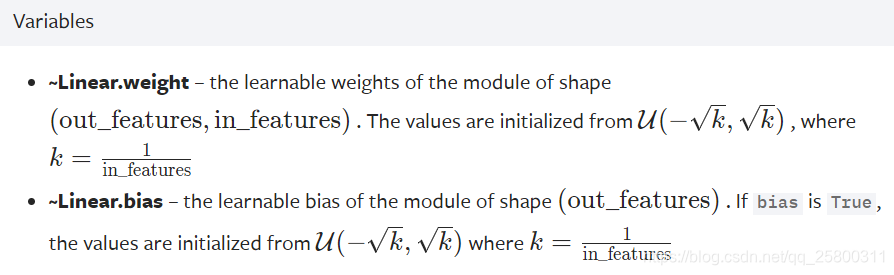
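A scaled-down sketch of a Transformer forward pass with a causal mask on the target, so that each target position attends only to itself and earlier positions. The small layer sizes and dropout=0.0 are choices made here to keep the example quick and deterministic; they are not recommended settings:

```python
import torch
import torch.nn as nn

model = nn.Transformer(d_model=32, nhead=4, num_encoder_layers=2,
                       num_decoder_layers=2, dim_feedforward=64, dropout=0.0)

src = torch.rand(10, 3, 32)  # (source length S, batch N, features E)
tgt = torch.rand(7, 3, 32)   # (target length T, batch N, features E)

# Square mask with -inf above the diagonal and 0 elsewhere.
tgt_mask = model.generate_square_subsequent_mask(tgt.size(0))

with torch.no_grad():
    out = model(src, tgt, tgt_mask=tgt_mask)  # shape (T, N, E)
```

The output has the same shape as tgt; d_model must be divisible by nhead.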

Linear layers

Linear

CLASS torch.nn.Linear(in_features, out_features, bias=True)

>>> m = nn.Linear(20, 30)

>>> input = torch.randn(128, 20)

>>> output = m(input)

>>> print(output.size())

torch.Size([128, 30])
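nn.Linear applies y = x Aᵀ + b, with the weight stored as (out_features, in_features). A short sketch that reproduces the layer output by hand:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
m = nn.Linear(20, 30)
x = torch.randn(128, 20)

out = m(x)
manual = x @ m.weight.t() + m.bias  # same affine map written out

weight_shape = tuple(m.weight.shape)
bias_shape = tuple(m.bias.shape)
```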

Dropout layers

Dropout

CLASS torch.nn.Dropout(p=0.5, inplace=False)

During training, randomly zeroes some of the elements of the input tensor with probability p using samples from a Bernoulli distribution. Each channel will be zeroed out independently on every forward call.

This has proven to be an effective technique for regularization and preventing the co-adaptation of neurons as described in the paper Improving neural networks by preventing co-adaptation of feature detectors.

Parameters

- p – probability of an element to be zeroed. Default: 0.5

- inplace – If set to True, will do this operation in-place. Default: False
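Dropout behaves differently in training and evaluation mode. During training the surviving elements are scaled by 1/(1 − p), and in evaluation mode the layer is the identity. A small sketch on a tensor of ones makes both visible:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
m = nn.Dropout(p=0.2)
x = torch.ones(1000)

m.train()
train_out = m(x)  # each element is either 0 or 1 / (1 - 0.2) = 1.25

m.eval()
eval_out = m(x)   # dropout is disabled, input passes through unchanged

kept = train_out[train_out != 0]
n_dropped = int((train_out == 0).sum())
```

Forgetting to call model.eval() before inference leaves dropout active, which is a common source of noisy predictions.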

>>> m = nn.Dropout(p=0.2)

>>> input = torch.randn(20, 16)

>>> output = m(input)

Source: https://blog.csdn.net/qq_25800311/article/details/102750658