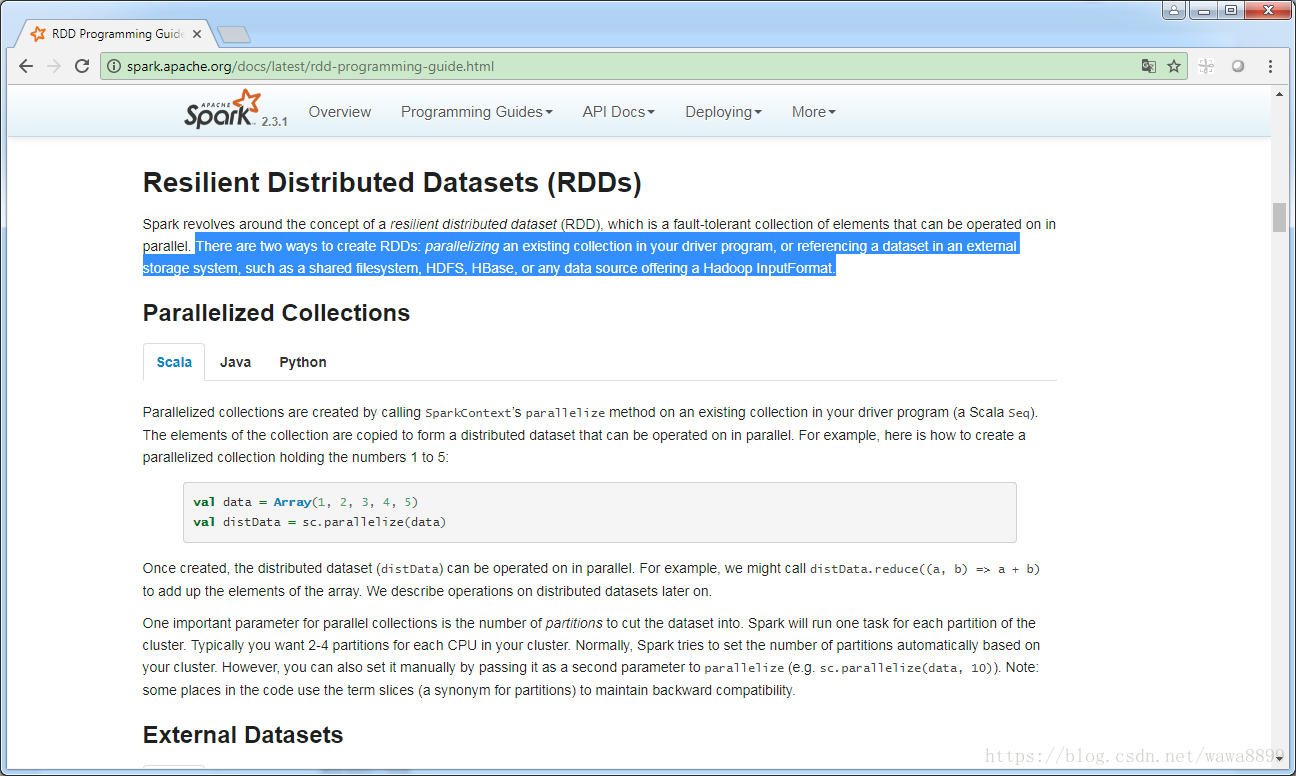

Ways to create an RDD:

1 - Testing: parallelize an existing collection, converting it into an RDD;

2 - Production: reference an external dataset (a shared file system, HDFS, HBase, or any other source that supports a Hadoop InputFormat).
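The two approaches listed above can be sketched as a small standalone program. This is only an illustration under stated assumptions: the object name `RddCreationSketch`, the helper `run`, and the temporary local file (standing in for an HDFS path) are hypothetical and not from the original post. In `spark-shell` the `sc` variable already exists, so there only the `parallelize` and `textFile` calls would be needed.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Hypothetical standalone sketch; requires Spark 2.x on the classpath.
object RddCreationSketch {

  // Returns (sum of the parallelized array, line count of the external file).
  def run(): (Int, Long) = {
    val spark = SparkSession.builder()
      .appName("RddCreationSketch") // hypothetical application name
      .master("local[2]")           // same master as the spark-shell session in this post
      .getOrCreate()
    val sc = spark.sparkContext

    try {
      // Approach 1 (testing): parallelize an existing in-memory collection.
      val data = Array(1, 2, 3, 4, 5)
      val distData = sc.parallelize(data)
      val sum = distData.reduce(_ + _)

      // Approach 2 (production): reference an external dataset.
      // Assumption: a local temp file stands in for something like an HDFS path.
      val path = Files.createTempFile("rdd-sketch", ".txt")
      Files.write(path, "a\nb\nc\n".getBytes("UTF-8"))
      val lines = sc.textFile(path.toUri.toString) // file:// URI, read line by line
      val lineCount = lines.count()

      (sum, lineCount)
    } finally {
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val (sum, lineCount) = run()
    println(s"sum = $sum, lines = $lineCount")
  }
}
```

`parallelize` is convenient for experiments because the data already lives in the driver, while `textFile` only records where the data is and reads it when an action such as `count` runs.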

Creating an RDD the first way

    [hadoop@hadoop01 ~]$ spark-shell --master local[2]
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    18/07/12 22:30:58 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    18/07/12 22:31:05 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException
    Spark context Web UI available at http://10.132.37.38:4040
    Spark context available as 'sc' (master = local[2], app id = local-1531405859803).
    Spark session available as 'spark'.
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 2.2.0
          /_/

    Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_45)
    Type in expressions to have them evaluated.
    Type :help for more information.

    scala> val data = Array(1, 2, 3, 4, 5)   // define an array
    data: Array[Int] = Array(1, 2, 3, 4, 5)

    scala> val distData = sc.parallelize(data)   // convert the array into an RDD
    distData: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:26

    scala>

Source: [Spark] RDD创建